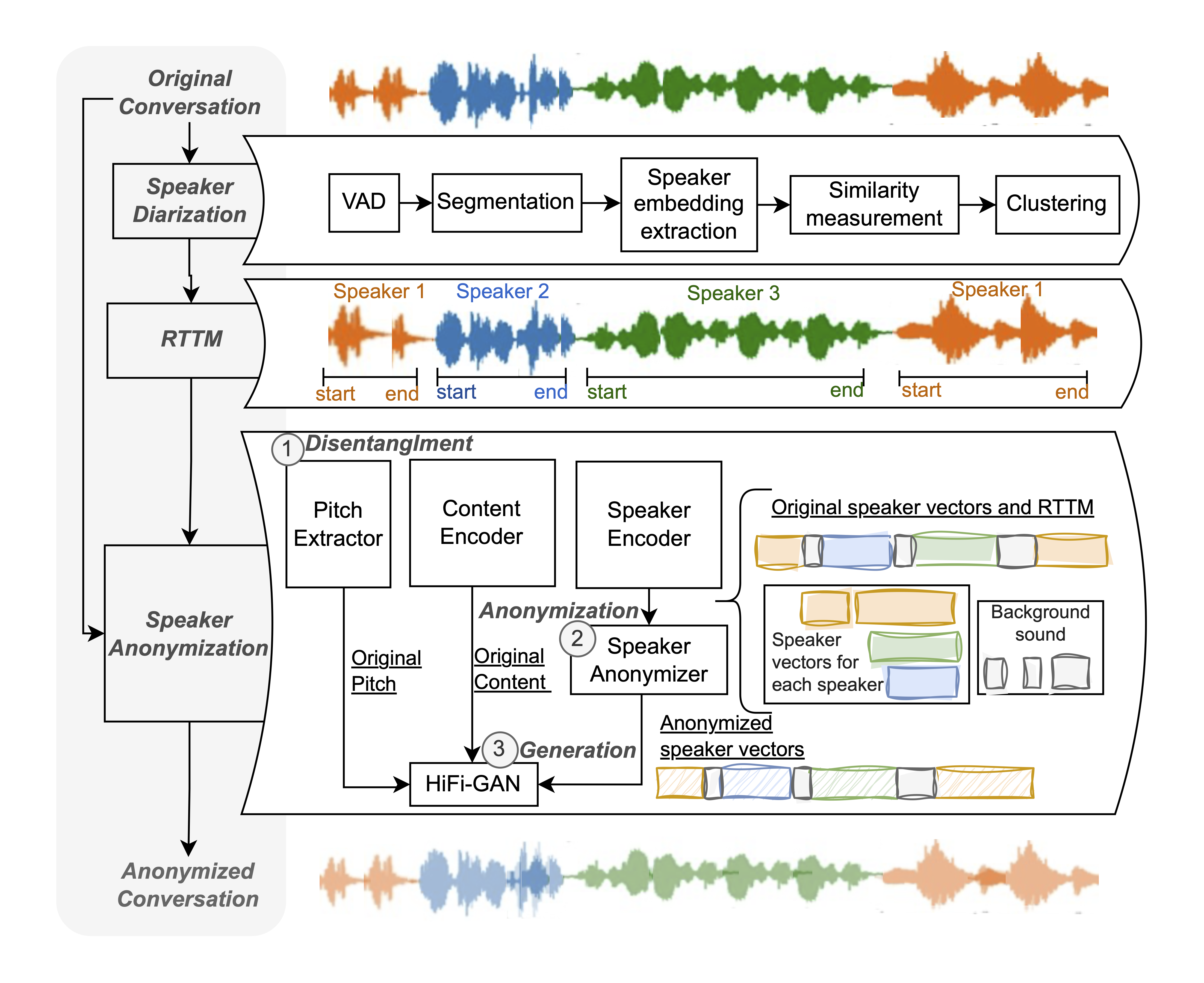

This is an implementation of the paper *A Benchmark for Multi-speaker Anonymization* by Xiaoxiao Miao, Ruijie Tao, Chang Zeng, and Xin Wang.

Audio samples can be found here: https://xiaoxiaomiao323.github.io/msa-audio/

```bash
git clone https://github.com/xiaoxiaomiao323/MSA.git
cd MSA
bash install.sh
bash demo.sh
```

Three randomly selected conversations, with 3, 4, and 5 speakers respectively, are anonymized using different multi-speaker anonymization (MSA) systems.

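| | DER | FA | MS | SC |
|---|---|---|---|---|
| Original | 5.54 | 0.00 | 0.00 | 5.54 |
| Resynthesized | 7.28 | 0.20 | 0.17 | 6.91 |
| | 6.05 | 0.00 | 0.00 | 6.05 |
| | 6.49 | 0.43 | 0.00 | 6.06 |
| | 6.06 | 0.00 | 0.00 | 6.06 |

DER: diarization error rate; FA: false alarm; MS: missed speech; SC: speaker confusion. All values are percentages.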
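In every row of the table, the DER equals the sum of its false-alarm (FA), missed-speech (MS), and speaker-confusion (SC) components. The shell sketch below is only a sanity check of that decomposition: it re-adds the three components for the five rows copied from the table and flags any row that differs from the reported DER by more than 0.01. That tolerance for two-decimal rounding is an assumption chosen here, not something the benchmark specifies.

```bash
# DER, FA, MS, and SC values copied from the results table (one row per line).
rows='5.54 0.00 0.00 5.54
7.28 0.20 0.17 6.91
6.05 0.00 0.00 6.05
6.49 0.43 0.00 6.06
6.06 0.00 0.00 6.06'

# Recompute FA + MS + SC for each row and compare it with the reported DER,
# allowing 0.01 for rounding of two-decimal values.
results=$(printf '%s\n' "$rows" | awk '{
  sum = $2 + $3 + $4
  diff = $1 - sum
  if (diff < 0) diff = -diff
  status = (diff <= 0.01) ? "ok" : "MISMATCH"
  printf "DER=%.2f FA+MS+SC=%.2f %s\n", $1, sum, status
}')
printf '%s\n' "$results"
```

Every row prints `ok`, so each DER in the table is fully accounted for by its three error components.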

The results of

- Put your audio files under `data/wavs`.
- [Optional] If you have ground-truth RTTM files, put them under `data/rttms` (only needed if you want to compute DERs).
- Run `bash demo.sh`.
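The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's own tooling. `SRC_DIR`, `meeting01`, and the speaker labels `spk_A`/`spk_B` are hypothetical placeholders. The sketch also assumes, although this README does not say so, that the audio is stored as `.wav` files and that each reference RTTM shares its basename with the matching WAV. The RTTM lines follow the standard NIST RTTM `SPEAKER` record layout. Replace the placeholder RTTM with your own annotations, or drop that part if you do not need DERs.

```bash
# Hypothetical folder holding your own multi-speaker recordings.
SRC_DIR=${SRC_DIR:-/path/to/my_recordings}

# Input folders used by the steps above.
mkdir -p data/wavs data/rttms

# Copy the recordings to anonymize (the guard handles an empty or missing folder).
for wav in "$SRC_DIR"/*.wav; do
  [ -e "$wav" ] || continue
  cp "$wav" data/wavs/
done

# [Optional] Reference RTTM, one SPEAKER record per speech turn:
#   SPEAKER <file-id> <channel> <onset-s> <duration-s> <NA> <NA> <speaker> <NA> <NA>
# Placeholder content: "meeting01" and the speaker labels are hypothetical, and the
# file-id and file name are assumed to match the WAV basename.
cat > data/rttms/meeting01.rttm <<'EOF'
SPEAKER meeting01 1 0.00 3.20 <NA> <NA> spk_A <NA> <NA>
SPEAKER meeting01 1 3.20 2.75 <NA> <NA> spk_B <NA> <NA>
EOF

# List recordings without a same-named reference RTTM (no DER can be computed for them).
for wav in data/wavs/*.wav; do
  [ -e "$wav" ] || continue
  base=$(basename "$wav" .wav)
  [ -f "data/rttms/$base.rttm" ] || echo "no reference RTTM for $base"
done
```

After preparing the folders, run `bash demo.sh` as in the last step.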

This study is partially supported by JST, PRESTO Grant Number JPMJPR23P9, Japan, and the SIT-ICT Academic Discretionary Fund.

The `anon/adapted_from_facebookreaserch` subfolder is licensed under the Attribution-NonCommercial 4.0 International License, and the `anon/adapted_from_speechbrain` subfolder is licensed under the Apache License. The code in them was originally created by the facebookresearch and speechbrain organizations, respectively. The `anon/scripts` and `anon/anon_control` subfolders are licensed under the MIT License.

Because this source code was adapted from facebookresearch and speechbrain code, the whole project follows the Attribution-NonCommercial 4.0 International License.