GS-LIVM: Real-Time Photo-Realistic LiDAR-Inertial-Visual Mapping with Gaussian Splatting (include & src are coming soon!)

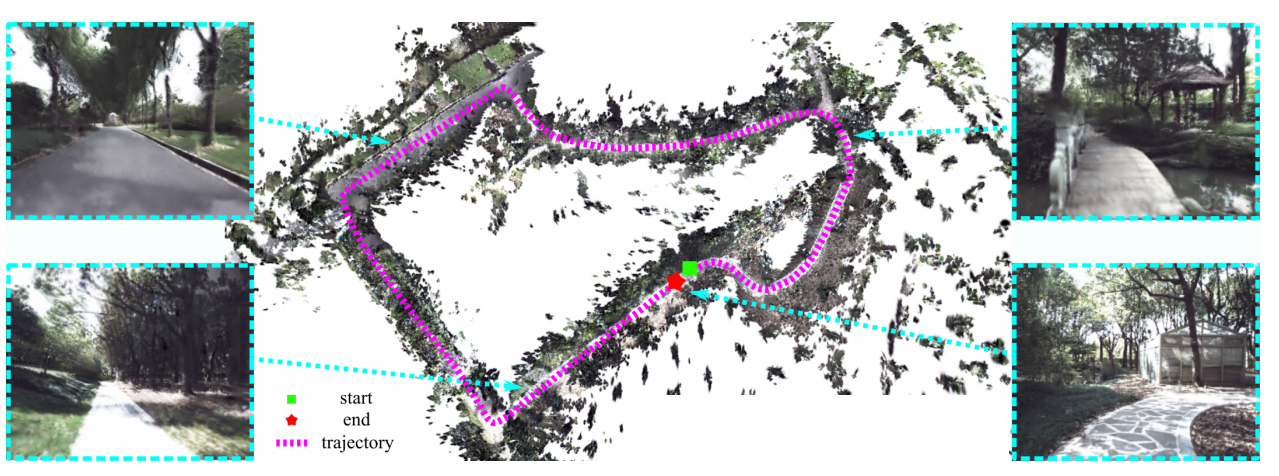

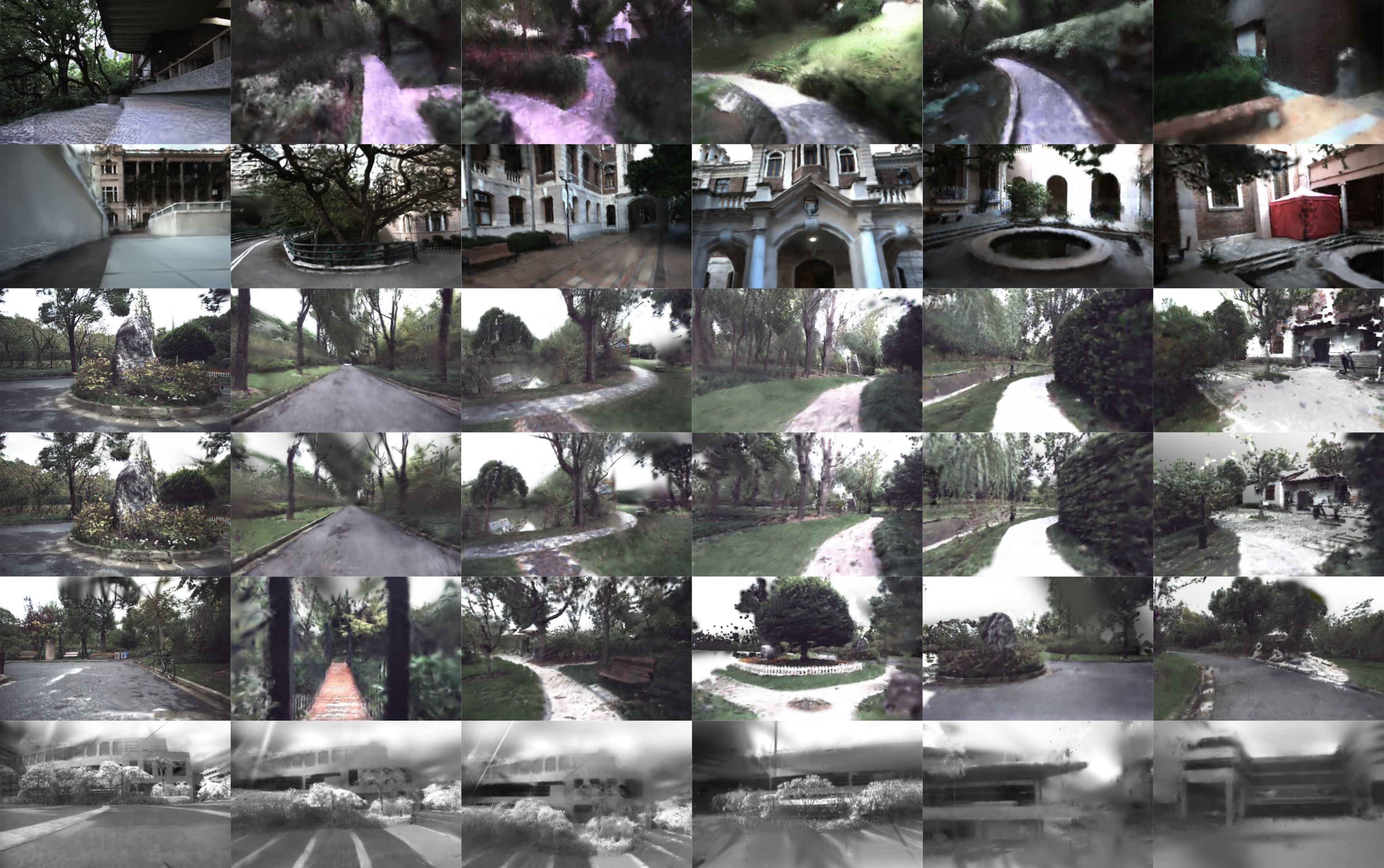

A. Real-time dense mapping via 3D Gaussian Splatting in large-scale, unbounded outdoor environments.

B. Conventional LiDAR-Inertial-Visual Odometry (LIVO) is used to estimate the sensor pose.

C. A self-designed Voxel Gaussian Process Regression (VGPR) handles the sparsity of the LiDAR data.

D. A variance-centered framework is developed to compute the initialization parameters of the 3D Gaussians.

E. Easy to use: ROS-related code is provided, and any bag containing image, LiDAR point, and IMU messages can be processed.

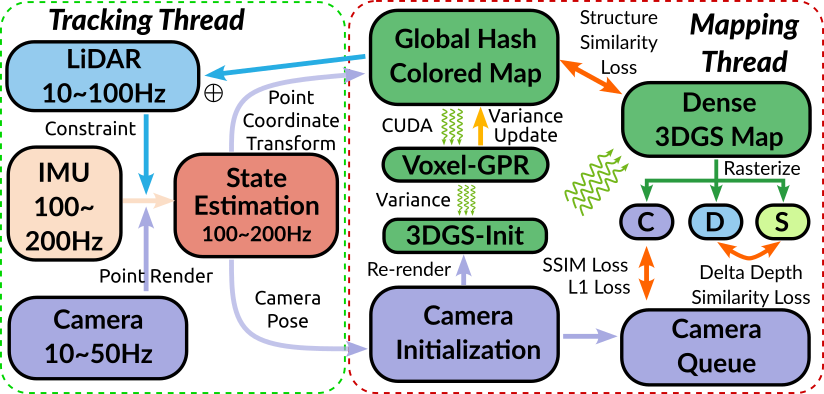

The system takes three inputs: point clouds collected by the LiDAR, motion measurements collected by the Inertial Measurement Unit (IMU), and color and texture information captured by the camera. In the tracking thread, an error-state iterated Kalman filter (ESIKF) performs tracking and outputs odometry at the IMU frequency. In the mapping thread, the colorized point cloud is processed by Voxel-GPR, and the 3D Gaussians initialized from its output are inserted into the dense 3D Gaussian map for rendering optimization. The final output is a high-quality dense 3D Gaussian map. In the figure, C, D, and S denote the rasterized color image, depth image, and silhouette image, respectively.

Demo video will be released soon.

The required environment for this repository is described below. Because the repo combines C++, LibTorch, and ROS inside a conda environment, installation can be somewhat difficult. If you are not familiar with the following steps, you can refer to the environment deployment video on YouTube and Bilibili.

2.1 Ubuntu and ROS.

We build this repo with RoboStack, which lets you install different ROS distributions inside a conda environment (see the RoboStack installation guide). The source code has been tested with ROS Noetic. Building in conda may be more difficult, but the environment isolation it provides is worth the effort.

2.2 Create conda environment

# create env

mamba create -n {ENV_NAME} python=3.9

mamba activate {ENV_NAME}

# install ros in conda

mamba install ros-noetic-desktop-full -c RoboStack

2.2 (Optional) Build Livox-SDK2 & livox_ros_driver2 in conda

# download

mkdir -p ~/catkin_ws/src && cd ~/catkin_ws/src

git clone https://github.com/Livox-SDK/Livox-SDK2

cd Livox-SDK2 && mkdir build && cd build

# cmake options: -DCMAKE_INSTALL_PREFIX is the path of your conda environment

cmake -DCMAKE_INSTALL_PREFIX=/home/xieys/miniforge3/envs/{ENV_NAME} ..

# make && make install

make -j60 && make install
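The `cmake` line above hard-codes the author's home directory. Because activating an environment with `mamba activate` exports `CONDA_PREFIX` (the path of that environment), the install prefix can be derived from it instead. A minimal sketch, assuming the environment is already activated; `livox_install_prefix` is a hypothetical helper, not part of Livox-SDK2 or GS-LIVM:

```shell
# Hypothetical helper (not part of Livox-SDK2 or GS-LIVM): print the path of
# the active conda/mamba environment, for use as CMAKE_INSTALL_PREFIX.
livox_install_prefix() {
  if [ -z "${CONDA_PREFIX:-}" ]; then
    # No environment is active: report on stderr and signal failure.
    echo "error: no conda environment is active; run 'mamba activate {ENV_NAME}' first" >&2
    return 1
  fi
  printf '%s\n' "$CONDA_PREFIX"
}

# Usage, from Livox-SDK2/build (cmake only runs if a prefix was found):
#   prefix="$(livox_install_prefix)" && cmake -DCMAKE_INSTALL_PREFIX="$prefix" .. && make -j"$(nproc)" && make install
```

Using `-j"$(nproc)"` instead of a fixed `-j60` only matches the number of parallel jobs to the local CPU count; either value builds the same result.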

# clone livox_ros_driver2 into your catkin_ws/src. If you do not use Livox, you can skip this step by setting -DBUILD_LIVOX=OFF in CMakeLists.txt

cd ~/catkin_ws/src

git clone https://github.com/Livox-SDK/livox_ros_driver2

cd livox_ros_driver2

(Important) NOTE: I have changed the source code in livox_ros_driver2/CMakeLists.txt so that it builds in this setup. Please refer to the video for this step.

./build.sh ROS1

2.3 (Important) Install Torch

mamba search pytorch=2.0.1

# Find an appropriate torch version; it may be provided by different channels

mamba install pytorch=2.0.1 -c conda-forge

2.4 Some packages can be installed with:

mkdir -p ~/catkin_ws/src && cd ~/catkin_ws/src

# clone

git clone https://github.com/xieyuser/GS-LIVM.git

# install other packages

cd GS-LIVM

mamba install --file conda_pkgs.txt -c nvidia -c pytorch -c conda-forge

Then build the workspace with catkin:

# build

cd ~/catkin_ws

catkin build # adjust the DEFINITIONS in CMakeLists.txt (e.g. BUILD_LIVOX) first if needed
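If you do not use a Livox sensor, the BUILD_LIVOX switch from the Livox step can be set in CMakeLists.txt as described there. As an alternative sketch, *assuming* BUILD_LIVOX is declared as a CMake cache option (for example with `option()`), it could be overridden at build time through catkin_tools' `--cmake-args`; if it is a plain `set()` or a compiler definition, edit CMakeLists.txt instead. The wrapper `gslivm_build` and the `WITH_LIVOX` / `DRY_RUN` variables are hypothetical names:

```shell
# Hypothetical wrapper around catkin_tools' `catkin build`.
# Extra arguments (e.g. package names) are forwarded to catkin.
# WITH_LIVOX=0 -> pass -DBUILD_LIVOX=OFF to CMake (assumes BUILD_LIVOX is a cache option).
# DRY_RUN=1    -> only print the command instead of running it.
gslivm_build() {
  if [ "${WITH_LIVOX:-1}" = "0" ]; then
    # --cmake-args swallows every argument after it, so it must come last.
    set -- "$@" --cmake-args -DBUILD_LIVOX=OFF
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo catkin build "$@"
  else
    catkin build "$@"
  fi
}

# Usage, from ~/catkin_ws with the conda environment activated:
#   WITH_LIVOX=0 gslivm_build
```

Appending `--cmake-args` after any package names keeps those names from being handed to CMake as extra arguments.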

# source

# (either) temporary

source ~/catkin_ws/devel/setup.bash

# (or) start with conda activate

echo "ROS_FILE=/home/xieys/catkin_ws/devel/setup.bash
if [ -f \"\$ROS_FILE\" ]; then
echo \$ROS_FILE
source \$ROS_FILE
fi" >> ~/miniforge3/envs/{ENV_NAME}/setup.sh

# Note: change the output path on line 40 of /home/xieys/catkin_ws/src/GS-LIVM/include/gs/gs/parameters.cuh

std::filesystem::path output_path = "/home/xieys/catkin_ws/output";

1). Run on R3Live_Dataset

Before running, please type the following command to check the image message type of the ROS bag file:

rosbag info SEQUENCE_NAME.bag

If the image message type is sensor_msgs/CompressedImage, please type:

# for compressed image sensor type

roslaunch gslivom livo_r3live_compressed.launch

If the image message type is sensor_msgs/Image, please type:

# for original image sensor type

roslaunch gslivom livo_r3live.launch

2). Run on NTU_VIRAL

roslaunch gslivom livo_ntu.launch

3). Run on FAST-LIVO

roslaunch gslivom livo_fastlivo.launch

4). Run on Botanic Garden Dataset

Please go to the workspace of GS-LIVM and type:

# for Velodyne VLP-16

roslaunch gslivom livo_botanic_garden.launch

# for Livox-Avia

roslaunch gslivom livo_botanic_garden_livox.launch

To visualize the 3D Gaussian model, please refer to Gaussian-Splatting-Cuda to build SIBR_viewer. It can also be built in the same conda environment; its dependencies (cos7) are already included in conda_pkgs.txt.
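The dataset-specific launch commands above can be collected in one small helper. This is only a sketch: `gslivm_launch_file` and the dataset keys (`r3live`, `ntu_viral`, `fast_livo`, `botanic_velodyne`, `botanic_livox`) are hypothetical names, while the launch file names are the ones listed above. For R3LIVE bags it reads the output of `rosbag info` on stdin and picks the compressed-image launch file when the bag contains `sensor_msgs/CompressedImage` messages:

```shell
# Hypothetical helper: print the gslivom launch file for a dataset key.
# For "r3live", the output of `rosbag info` must be piped in on stdin.
gslivm_launch_file() {
  case "$1" in
    r3live)
      # Compressed image topics need the *_compressed launch file.
      if grep -q 'sensor_msgs/CompressedImage'; then
        echo livo_r3live_compressed.launch
      else
        echo livo_r3live.launch
      fi ;;
    ntu_viral)        echo livo_ntu.launch ;;
    fast_livo)        echo livo_fastlivo.launch ;;
    botanic_velodyne) echo livo_botanic_garden.launch ;;       # Velodyne VLP-16
    botanic_livox)    echo livo_botanic_garden_livox.launch ;; # Livox-Avia
    *) echo "unknown dataset: $1" >&2; return 1 ;;
  esac
}

# Usage:
#   roslaunch gslivom "$(rosbag info SEQUENCE_NAME.bag | gslivm_launch_file r3live)"
#   roslaunch gslivom "$(gslivm_launch_file ntu_viral)"
```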

Thanks to RoboStack, 3D Gaussian Splatting, Gaussian-Splatting-Cuda, depth-diff-gaussian-rasterization, R3LIVE, CT-ICP, sr_livo, Fast-LIO and Open-VINS.

@article{xie2024gslivm,

title={{GS-LIVM: Real-Time Photo-Realistic LiDAR-Inertial-Visual Mapping with Gaussian Splatting}},

author={Xie, Yusen and Huang, Zhenmin and Wu, Jin and Ma, Jun},

journal={arXiv preprint arXiv:2410.17084},

year={2024}

}

The source code of this package is released under the GPLv2 license and is free for academic use only. For any technical issues, please feel free to contact yxie827@connect.hkust-gz.edu.cn.