
PaddleVideo is a toolset for video recognition, action localization, and spatio-temporal action detection, built for both industry and academia. This repository provides examples and best-practice guidelines for applying deep learning algorithms to video. We are committed to supporting experiments and utilities that significantly reduce the "time to deploy". PaddleVideo also serves as a proficiency verification and implementation of PaddlePaddle 2.0 in the video field.

- Advanced model zoo design: PaddleVideo unifies video understanding tasks, including recognition, localization, and spatio-temporal action detection. With a clear configuration system based on IoC/DI (inversion of control / dependency injection), we designed a decoupled, modular, and extensible framework in which a customized network can easily be built by combining different modules.

- Various datasets and architectures: PaddleVideo supports a variety of datasets, including Kinetics-400, UCF101, and YouTube-8M, as well as video recognition models such as TSN, TSM, SlowFast, and AttentionLSTM, and action localization models such as BMN.

- Higher performance: PaddleVideo has built-in solutions for improving the accuracy of recognition models. PP-TSM, which is based on the standard TSM, achieves the best performance among 2D recognition networks: with the same number of parameters, it improves Top-1 accuracy to 73.5%. You can easily apply these solutions to your own dataset.

- Faster training strategy: PaddleVideo supports a faster training strategy that speeds up training by 100% compared with the standard SlowFast implementation; training from scratch on the Kinetics-400 dataset takes only 10 days.

- Deployable: PaddleVideo is powered by Paddle Inference. There is no need to convert models to ONNX format for deployment; everything you need can be found in this repository.
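The configuration-driven design described in the model zoo item can be illustrated with a generic registry pattern: modules register themselves under a name, and a builder instantiates whichever class a config dict names, so the framework code never hard-codes concrete classes. This is a minimal pure-Python sketch of inversion of control, not PaddleVideo's actual API; `Registry`, `build`, `ToyResNet`, `ToyTSNHead`, `Recognizer`, and the `name` config key are all hypothetical.

```python
from typing import Any, Callable, Dict


class Registry:
    """Maps string names to classes so a config can refer to modules by name."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, Callable[..., Any]] = {}

    def register(self, cls):
        # Used as a class decorator; the class is stored under its own name.
        if cls.__name__ in self._modules:
            raise KeyError(f"{cls.__name__} is already registered in {self.name}")
        self._modules[cls.__name__] = cls
        return cls

    def get(self, key: str):
        if key not in self._modules:
            raise KeyError(f"{key} is not registered in {self.name}")
        return self._modules[key]


def build(cfg: Dict[str, Any], registry: Registry) -> Any:
    """Instantiate the class named by cfg["name"], passing the other keys as kwargs."""
    args = dict(cfg)  # copy so the caller's config is not mutated
    cls = registry.get(args.pop("name"))
    return cls(**args)


# Hypothetical registries and toy modules, for illustration only.
BACKBONES = Registry("backbone")
HEADS = Registry("head")


@BACKBONES.register
class ToyResNet:
    def __init__(self, depth: int = 50) -> None:
        self.depth = depth


@HEADS.register
class ToyTSNHead:
    def __init__(self, num_classes: int, in_channels: int = 2048) -> None:
        self.num_classes = num_classes
        self.in_channels = in_channels


class Recognizer:
    """Receives its components from the config instead of constructing fixed classes."""

    def __init__(self, cfg: Dict[str, Dict[str, Any]]) -> None:
        self.backbone = build(cfg["backbone"], BACKBONES)
        self.head = build(cfg["head"], HEADS)


config = {
    "backbone": {"name": "ToyResNet", "depth": 101},
    "head": {"name": "ToyTSNHead", "num_classes": 400},
}
model = Recognizer(config)
print(type(model.backbone).__name__, model.backbone.depth)  # ToyResNet 101
print(type(model.head).__name__, model.head.num_classes)    # ToyTSNHead 400
```

Swapping a module in this sketch only requires registering a new class and changing its `name` in the config; `Recognizer` itself stays unchanged.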
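Top-1 accuracy, the metric quoted for PP-TSM above, is the fraction of clips whose highest-scoring class matches the ground-truth label; Top-k generalizes this to the k highest-scoring classes. The following minimal pure-Python sketch assumes per-class scores are already available for each clip; the function name `top_k_accuracy` and the toy scores are illustrative, not PaddleVideo's metric code.

```python
from typing import List, Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 1) -> float:
    """Return the fraction of samples whose true label is among the k highest scores."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("need at least one sample")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score; keep the first k.
        top_k: List[int] = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Illustrative toy scores for 4 clips over 3 classes (not real model output).
scores = [
    [0.1, 0.7, 0.2],  # predicts class 1
    [0.5, 0.3, 0.2],  # predicts class 0
    [0.2, 0.3, 0.5],  # predicts class 2
    [0.6, 0.3, 0.1],  # predicts class 0
]
labels = [1, 0, 1, 2]

print(top_k_accuracy(scores, labels, k=1))  # 0.5
print(top_k_accuracy(scores, labels, k=2))  # 0.75
```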

| Architectures | Frameworks | Components | Data Augmentation |
|---|---|---|---|

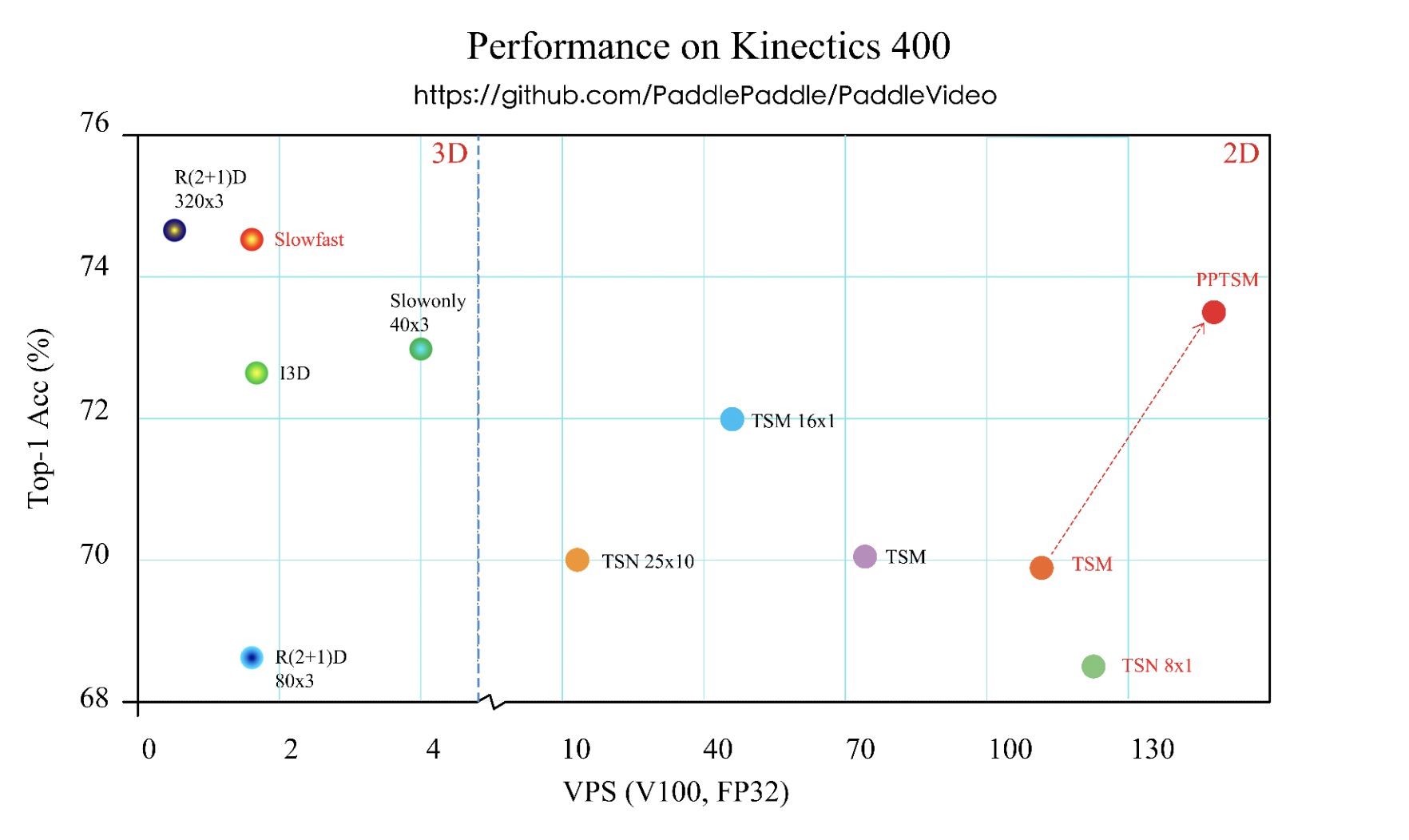

The performance chart compares video recognition models with both 2D and 3D architectures, including our implementations and PyTorch versions. It plots Top-1 accuracy against VPS (videos per second) on the Kinetics-400 dataset (tested on an NVIDIA® Tesla® V100 GPU).
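Assuming VPS means videos per second, it can be estimated by timing inference over a set of clips and dividing the clip count by the elapsed wall-clock time. The sketch below is a generic pure-Python timer, not the benchmark script behind the chart; `videos_per_second` and the sleeping stand-in `fake_model` are hypothetical. A real GPU benchmark would also need to fix the batch size and preprocessing, and to synchronize the device before reading the clock, since GPU work is queued asynchronously.

```python
import time
from typing import Any, Callable, Sequence


def videos_per_second(infer: Callable[[Any], Any], videos: Sequence[Any], warmup: int = 2) -> float:
    """Estimate throughput as (number of videos) / (wall-clock seconds).

    Assumption: VPS = videos per second, measured one clip at a time.
    """
    if len(videos) == 0:
        raise ValueError("need at least one video")
    # Untimed warm-up calls, so one-off setup cost does not skew the result.
    for clip in videos[:warmup]:
        infer(clip)
    start = time.perf_counter()
    for clip in videos:
        infer(clip)
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise RuntimeError("timer resolution too coarse for this workload")
    return len(videos) / elapsed


# Hypothetical stand-in for a model: "processes" each clip in about 10 ms.
def fake_model(clip: Any) -> None:
    time.sleep(0.01)


vps = videos_per_second(fake_model, list(range(5)))
print(f"{vps:.1f} VPS")
```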

Note:

- PP-TSM improves Top-1 accuracy by almost 3.5% over the standard TSM.

- All models shown in red are available in the Model Zoo; the others are PyTorch results.

- Scan the QR code with WeChat and reply "video" to join the official technical exchange group. We look forward to your participation.

- VideoTag: a large-scale video classification model covering 3k classes

- FootballAction: a football action detection model

- Quick Start

- Project Design

- Model Zoo

  - Recognition

  - Localization

  - Spatio-temporal Action Detection

    - Coming Soon!

- Practice

- Others

PaddleVideo is released under the Apache 2.0 license.

This project welcomes contributions and suggestions. Please see our contribution guidelines.

- Many thanks to mohui37 for contributing the code for prediction.