BrainSleepNet: Learning Multivariate EEG Representation for Automatic Sleep Staging

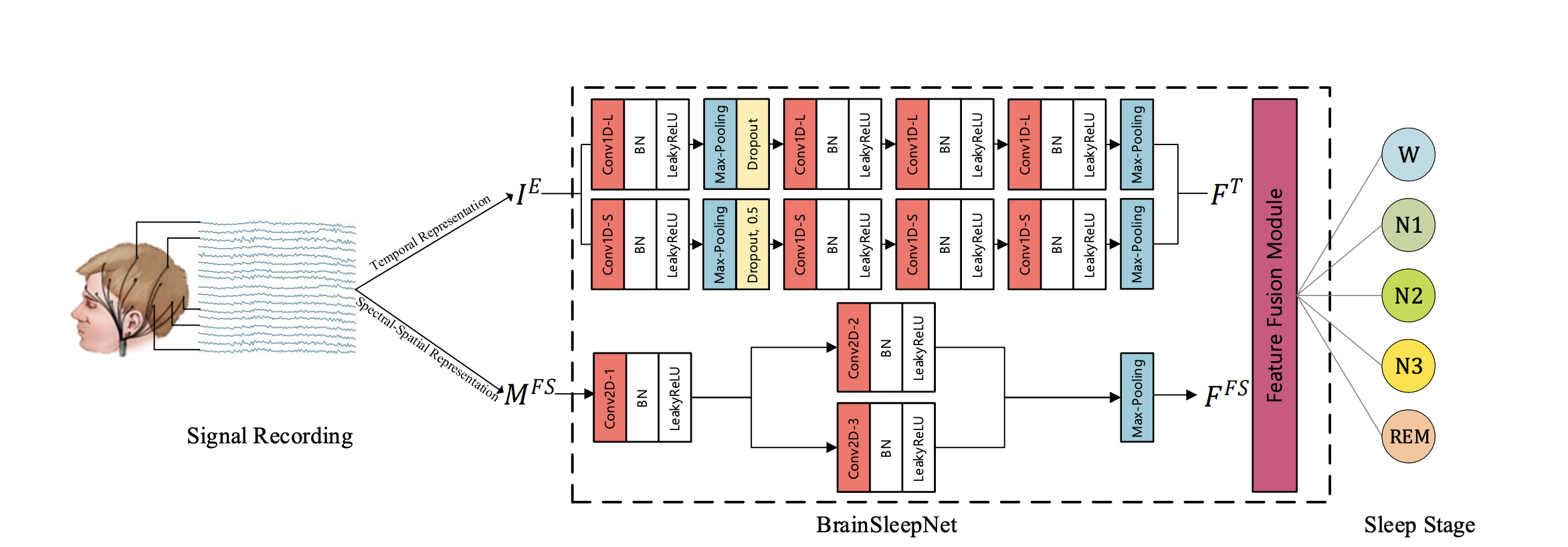

BrainSleepNet is designed to capture comprehensive features of multivariate EEG signals for automatic sleep staging. It consists of an EEG temporal feature extraction module and an EEG spectral-spatial feature extraction module, which together produce a temporal-spectral-spatial representation of the EEG signals.

This repository contains the source code of BrainSleepNet.

We evaluate our model on the Montreal Archive of Sleep Studies (MASS) dataset Subset-3. The MASS is an open-access and collaborative database of laboratory-based polysomnography (PSG) recordings.

- Python 3.6.5

- CUDA 9.0

- CuDNN 7.5.1

- numpy==1.15.0

- sklearn==0.19.1

- tensorflow_gpu==1.8.0

- Keras==2.2.0

- matplotlib==3.0.3

Preprocess

- Prepare data (raw signals) in `data_dir`
  - File names: `01-03-00XX-Data.npy`, where `XX` denotes the subject ID.
  - Tensor shape: `[sample, channel=26, length]`, where channel 0 is the ECG channel, channels 1-20 are EEG channels, channels 21-23 are EMG channels, and channels 24-25 are EOG channels.
- Modify directory
  - Modify `data_dir` and `label_dir` in `run_preprocess.py` accordingly.
  - (Optional) Modify `output_dir` in `run_preprocess.py`.
- Run
  - `cd preprocess`
  - `python run_preprocess.py`
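The channel layout described above can be sketched as index slices along the channel axis. This is a minimal illustration under stated assumptions, not code from the repository: `split_modalities` is a hypothetical helper, and the array is random data standing in for the contents of one `01-03-00XX-Data.npy` file.

```python
import numpy as np

# Channel layout as stated in this README (indices along axis 1).
CHANNEL_SLICES = {
    "ECG": slice(0, 1),    # channel 0
    "EEG": slice(1, 21),   # channels 1-20
    "EMG": slice(21, 24),  # channels 21-23
    "EOG": slice(24, 26),  # channels 24-25
}


def split_modalities(data):
    """Split a [sample, channel=26, length] array into per-modality arrays.

    Hypothetical helper, for illustration only.
    """
    if data.ndim != 3 or data.shape[1] != 26:
        raise ValueError("expected shape [sample, 26, length], got %s" % (data.shape,))
    return {name: data[:, sl, :] for name, sl in CHANNEL_SLICES.items()}


# Random stand-in for a raw recording: 4 samples, 26 channels, 100 time points.
raw = np.random.randn(4, 26, 100)
parts = split_modalities(raw)
for name, arr in parts.items():
    print(name, arr.shape)
```

Running a check like this before preprocessing is one way to catch a recording whose channel count differs from the expected 26.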

Command Line Parameters

- Training
  - `--batch_size`: Training batch size.
  - `--epoch`: Number of training epochs.
  - `--num_fold`: Number of folds.
  - `--save_model`: Save the best model or not.
- Directory
  - `--model_dir`: The directory for saving best models of each fold.
  - `--data_dir1`: The directory of the EEG signals.
  - `--data_dir2`: The directory of the spectral-spatial representation of EEG signals.
  - `--result_dir`: The directory for saving results.
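As a rough sketch, the parameters listed above could be declared with `argparse` as follows. Only the flag names and their descriptions come from this README. The types, the default values, and the choice to treat `--save_model` as a boolean switch are assumptions made for illustration, and `run.py` may define them differently.

```python
import argparse


def build_parser():
    """Build a parser exposing the flags listed in this README.

    Types and default values are placeholders chosen for this sketch.
    """
    parser = argparse.ArgumentParser(description="Sketch of the documented flags")
    # Training
    parser.add_argument("--batch_size", type=int, default=32,
                        help="Training batch size.")
    parser.add_argument("--epoch", type=int, default=100,
                        help="Number of training epochs.")
    parser.add_argument("--num_fold", type=int, default=10,
                        help="Number of folds.")
    parser.add_argument("--save_model", action="store_true",
                        help="Save the best model or not.")
    # Directory (placeholder paths)
    parser.add_argument("--model_dir", default="./model/",
                        help="Directory for saving the best model of each fold.")
    parser.add_argument("--data_dir1", default="./data/signal/",
                        help="Directory of the EEG signals.")
    parser.add_argument("--data_dir2", default="./data/fre_spa/",
                        help="Directory of the spectral-spatial representation.")
    parser.add_argument("--result_dir", default="./result/",
                        help="Directory for saving results.")
    return parser


# Parse an explicit argument list so the sketch runs without a real command line.
args = build_parser().parse_args(
    ["--batch_size", "16", "--num_fold", "5", "--save_model"]
)
print(args.batch_size, args.num_fold, args.save_model)
```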

Input Data Shape

- EEG_EOG
  - `DataSubID.npy`: (numOfSamples, numOfChannels, timeLength) -> (numOfSamples, 6 + 2, 30 * 128 * 3)
    - numOfChannels: 6 EEG channels and 2 EOG channels.
    - timeLength: 30 (s) * 128 (Hz) * 3 (epochs) = 11520
  - `LabelSubID.npy`: (numOfSamples, )
- EMG
  - `DataSubID.npy`: (numOfSamples, numOfChannels, timeLength) -> (numOfSamples, 3, 11520)
    - numOfChannels: 3 EMG channels.
    - timeLength: 30 (s) * 128 (Hz) * 3 (epochs) = 11520
  - `LabelSubID.npy`: (numOfSamples, )
- fre_spa
  - `DataSubID.npy`: (numOfSamples, numOfFreqBands, height, width, 1) -> (numOfSamples, 5, 16, 16, 1)
    - numOfFreqBands: 5 frequency bands ($\delta, \theta, \alpha, \beta, \gamma$)
    - height, width: 16 px $\times$ 16 px
  - `LabelSubID.npy`: (numOfSamples, )
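The shapes above can be checked before training with a small validation routine. The sketch below is an illustration under stated assumptions: `check_input` and `EXPECTED_SHAPES` are hypothetical names that do not come from the repository, and the arrays are zero-filled placeholders rather than real recordings.

```python
import numpy as np

SAMPLING_RATE_HZ = 128
EPOCH_SECONDS = 30
NUM_EPOCHS = 3
# 30 (s) * 128 (Hz) * 3 (epochs) = 11520 time points per sample.
TIME_LENGTH = EPOCH_SECONDS * SAMPLING_RATE_HZ * NUM_EPOCHS

# Expected per-sample shapes (everything after the numOfSamples axis).
EXPECTED_SHAPES = {
    "EEG_EOG": (6 + 2, TIME_LENGTH),
    "EMG": (3, TIME_LENGTH),
    "fre_spa": (5, 16, 16, 1),
}


def check_input(kind, data, labels):
    """Raise ValueError if data or labels do not match the documented shapes."""
    expected = EXPECTED_SHAPES[kind]
    if data.shape[1:] != expected:
        raise ValueError("%s: expected (N,) + %s, got %s"
                         % (kind, expected, data.shape))
    if labels.shape != (data.shape[0],):
        raise ValueError("%s: expected labels of shape (%d,), got %s"
                         % (kind, data.shape[0], labels.shape))


# Zero-filled placeholders with two samples per input type.
num_samples = 2
for kind, shape in EXPECTED_SHAPES.items():
    data = np.zeros((num_samples,) + shape, dtype=np.float32)
    labels = np.zeros(num_samples, dtype=np.int64)
    check_input(kind, data, labels)
print("time length:", TIME_LENGTH)
```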

Training

Run `run.py` with the command line parameters. By default, the model can be run with the following command: `CUDA_VISIBLE_DEVICES=0 python run.py`