
DeepKE is a knowledge extraction toolkit supporting low-resource and document-level scenarios for entity, relation and attribute extraction. We provide comprehensive documentation, Google Colab tutorials, and an online demo for beginners.

- What's New

- Prediction Demo

- Model Framework

- Quick Start

- Notebook Tutorial

- Tips

- To do

- Citation

- Developers

- We have released a paper DeepKE: A Deep Learning Based Knowledge Extraction Toolkit for Knowledge Base Population

- We have added a dockerfile to create the environment automatically.

- The demo of DeepKE, supporting real-time extraction without deploying and training, has been released.

- The documentation of DeepKE, containing the details of DeepKE such as source code and datasets, has been released.

- DeepKE can now be installed with pip install deepke.

- The code of deepke-v2.0 has been released.

- The code of deepke-v1.0 has been released.

- The DeepKE project has started, and the code of deepke-v0.1 has been released.

There is a demonstration of prediction.

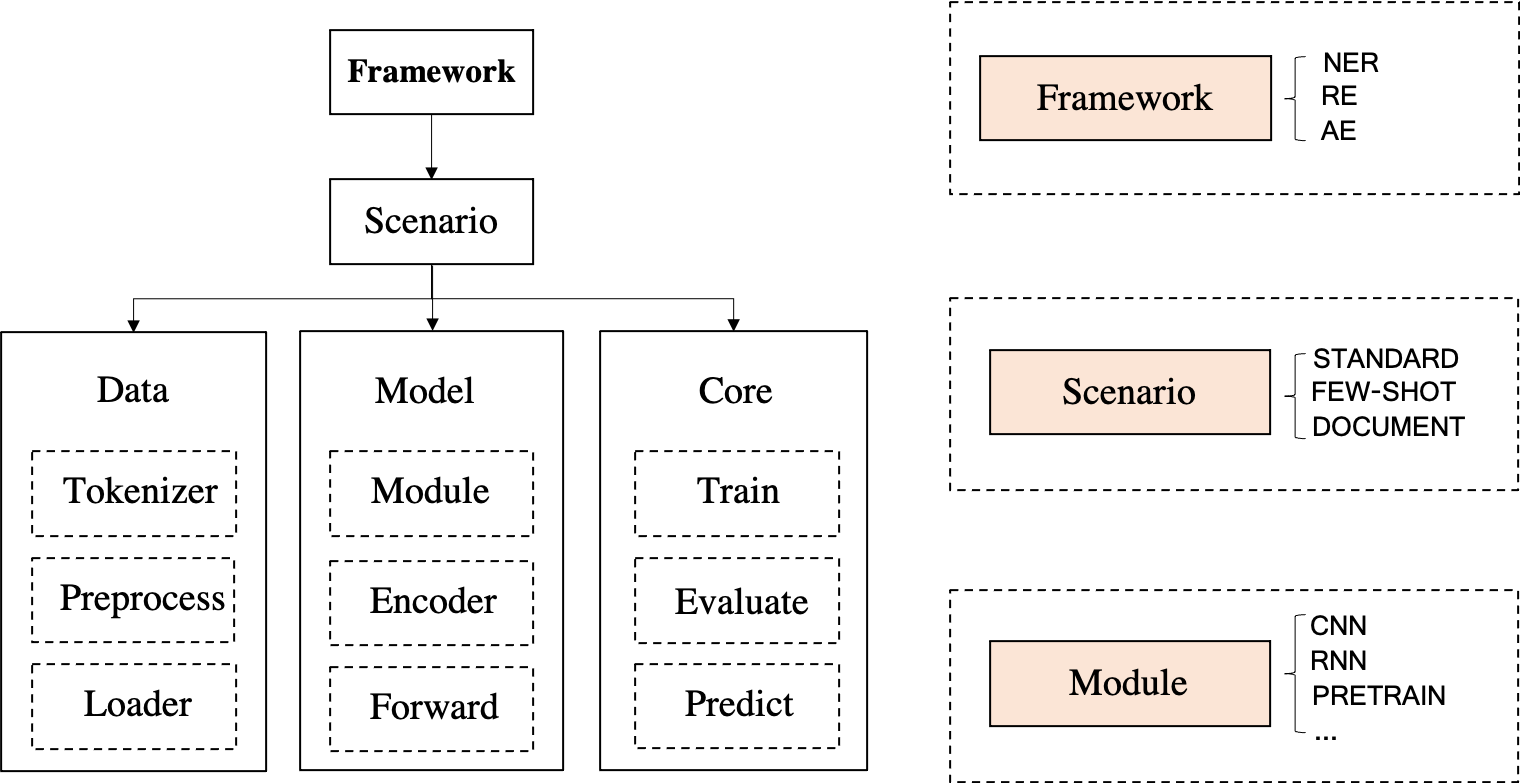

- DeepKE contains a unified framework for named entity recognition, relation extraction and attribute extraction, the three knowledge extraction functions.

- Each task can be implemented in different scenarios. For example, we can achieve relation extraction in standard, low-resource (few-shot), document-level and multimodal settings.

- Each application scenario comprises three components: Data (Tokenizer, Preprocessor and Loader), Model (Module, Encoder and Forwarder), and Core (Training, Evaluation and Prediction).
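As a rough illustration of how the three components fit together, the sketch below wires a Data stage, a Model stage, and a Core stage into a toy pipeline. All class and function names are hypothetical and are not DeepKE's API; the "model" is a trivial dictionary lookup standing in for a neural network, and training is omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


# --- Data: tokenizer -> preprocessor -> loader (all names are hypothetical) ---
def tokenize(sentence: str) -> List[str]:
    """Split on whitespace; a real tokenizer would be model-specific."""
    return sentence.split()


def preprocess(tokens: List[str]) -> List[str]:
    """Lowercase tokens so that lookups are case-insensitive."""
    return [t.lower() for t in tokens]


def load(sentences: List[str]) -> List[List[str]]:
    """Turn raw sentences into model-ready token lists."""
    return [preprocess(tokenize(s)) for s in sentences]


# --- Model: a stand-in for Module / Encoder / Forwarder ---
@dataclass
class LookupModel:
    """Toy 'model' that labels tokens found in a small gazetteer."""
    gazetteer: Dict[str, str]

    def forward(self, tokens: List[str]) -> List[Tuple[str, str]]:
        return [(t, self.gazetteer[t]) for t in tokens if t in self.gazetteer]


# --- Core: prediction and evaluation (training is omitted in this toy) ---
def predict(model: LookupModel, batch: List[List[str]]) -> list:
    return [model.forward(tokens) for tokens in batch]


def accuracy(predicted: list, gold: list) -> float:
    """Fraction of sentences whose predicted labels equal the gold labels."""
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


batch = load(["Alice visited Paris", "Bob stayed home"])
model = LookupModel({"alice": "PER", "bob": "PER", "paris": "LOC"})
predictions = predict(model, batch)
print(predictions)
```

The point of the split is that each stage can be swapped independently, for example replacing the lookup with a trained encoder while keeping the same loader and evaluation code.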

DeepKE supports pip install deepke.

Take fully supervised relation extraction as an example.

Step1 Download the basic code

git clone https://github.com/zjunlp/DeepKE.git

Step2 Create a virtual environment using Anaconda and enter it.

We also provide dockerfile source code, located in the docker folder, to help users create their own images.

conda create -n deepke python=3.8

conda activate deepke

- Install DeepKE with source code

python setup.py install

python setup.py develop

- Install DeepKE with pip

pip install deepke

Step3 Enter the task directory

cd DeepKE/example/re/standard

Step4 Download the dataset

wget 120.27.214.45/Data/re/standard/data.tar.gz

tar -xzvf data.tar.gz

Step5 Training (Parameters for training can be changed in the conf folder)

We support visual parameter tuning by using wandb.

python run.py

Step6 Prediction (Parameters for prediction can be changed in the conf folder)

Modify the path of the trained model in predict.yaml.

python predict.py

- python == 3.8

- torch == 1.5

- hydra-core == 1.0.6

- tensorboard == 2.4.1

- matplotlib == 3.4.1

- transformers == 3.4.0

- jieba == 0.42.1

- scikit-learn == 0.24.1

- pytorch-transformers == 1.2.0

- seqeval == 1.2.2

- tqdm == 4.60.0

- opt-einsum == 3.3.0

- wandb == 0.12.7

- ujson
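Because the versions above are pinned exactly, it can help to check an environment before training. The following is a minimal sketch using only the standard library (importlib.metadata, available from Python 3.8); the helper name find_mismatches and the subset of pins shown are illustrative choices, not part of DeepKE.

```python
from importlib import metadata  # standard library in Python 3.8+
from typing import Callable, Dict, List

# A few pins copied from the list above; extend as needed.
PINNED = {
    "hydra-core": "1.0.6",
    "transformers": "3.4.0",
    "seqeval": "1.2.2",
    "wandb": "0.12.7",
}


def find_mismatches(
    pinned: Dict[str, str],
    get_version: Callable[[str], str] = metadata.version,
) -> List[str]:
    """Return one message per package that is missing or at another version."""
    problems = []
    for name, wanted in pinned.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed (want {wanted})")
            continue
        if installed != wanted:
            problems.append(f"{name}: found {installed}, want {wanted}")
    return problems


if __name__ == "__main__":
    for line in find_mismatches(PINNED) or ["all pinned packages match"]:
        print(line)
```

The get_version parameter exists only so the check can be exercised without touching the real environment.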

Named entity recognition seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, and locations.

The data is stored in .txt files. Some instances are as follows:

| Sentence | Person | Location | Organization |
| --- | --- | --- | --- |
| 本报北京9月4日讯记者杨涌报道:部分省区人民日报宣传发行工作座谈会9月3日在4日在京举行。 | 杨涌 | 北京 | 人民日报 |
| 《红楼梦》是**电视台和**电视剧制作中心根据**古典文学名著《红楼梦》摄制于1987年的一部古装连续剧,由王扶林导演,周汝昌、王蒙、周岭等多位红学家参与制作。 | 王扶林,周汝昌,王蒙,周岭 | ** | **电视台,**电视剧制作中心 |
| 秦始皇兵马俑位于陕西省西安市,1961年被国务院公布为第一批全国重点文物保护单位,是世界八大奇迹之一。 | 秦始皇 | 陕西省,西安市 | 国务院 |
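The instances above list entities per type, while sequence labeling models are usually trained on one tag per character. The sketch below shows one possible conversion, using part of the third instance. The BIO scheme and the tag names PER and LOC are assumptions made for illustration; the task README describes the file layout DeepKE actually expects.

```python
from typing import Dict, List


def bio_tags(sentence: str, entities: Dict[str, List[str]]) -> List[str]:
    """Label every character of `sentence` with a BIO tag.

    `entities` maps a tag name (e.g. "PER") to the entity strings of that
    type. Every occurrence of each entity string is tagged; if mentions
    overlap, the one processed last wins.
    """
    tags = ["O"] * len(sentence)
    for label, mentions in entities.items():
        for mention in mentions:
            start = sentence.find(mention)
            while start != -1:
                tags[start] = f"B-{label}"
                for i in range(start + 1, start + len(mention)):
                    tags[i] = f"I-{label}"
                start = sentence.find(mention, start + len(mention))
    return tags


sentence = "秦始皇兵马俑位于陕西省西安市"
tags = bio_tags(sentence, {"PER": ["秦始皇"], "LOC": ["陕西省", "西安市"]})
for char, tag in zip(sentence, tags):
    print(char, tag)
```

Matching by string search is the simplest option; it cannot tell apart two occurrences of the same string that should carry different labels.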

Read the detailed process in specific README

Step1 Enter DeepKE/example/ner/standard. Download the dataset.

wget 120.27.214.45/Data/ner/standard/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training

The dataset and parameters can be customized in the data folder and conf folder respectively.

python run.py

Step3 Prediction

python predict.py
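On systems without wget or tar (for example a plain Windows shell), the download step used in these examples can be done from Python instead. This is a minimal sketch with the standard library only; the function names download and extract are illustrative, and the http:// scheme in the commented usage is an assumption added to the address given above.

```python
import tarfile
import urllib.request
from pathlib import Path
from typing import List


def download(url: str, target: Path) -> Path:
    """Fetch `url` into `target` (the equivalent of the wget step)."""
    urllib.request.urlretrieve(url, str(target))
    return target


def extract(archive: Path, dest: Path) -> List[str]:
    """Unpack a .tar.gz archive into `dest` (the equivalent of tar -xzvf)."""
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        # Only unpack archives you trust: member paths are not sanitized here.
        tar.extractall(dest)
    return names


# Example usage (needs network access):
# archive = download("http://120.27.214.45/Data/ner/standard/data.tar.gz",
#                    Path("data.tar.gz"))
# print(extract(archive, Path(".")))
```

Run it from the task directory so that the archive is unpacked where run.py looks for it.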

Step1 Enter DeepKE/example/ner/few-shot. Download the dataset.

wget 120.27.214.45/Data/ner/few_shot/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training in the low-resource setting

The directory where the model is loaded and saved and the configuration parameters can be customized in the conf folder.

python run.py +train=few_shot

Users can modify load_path in conf/train/few_shot.yaml to load an existing model.

Step3 Add the entry "- predict" to conf/config.yaml, set load_path to the model path and write_path to the path where the predicted results are saved in conf/predict.yaml, and then run

python predict.py
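Both the +train=few_shot override and the "- predict" entry rely on Hydra composing the final configuration from several YAML files. The sketch below imitates that idea with plain dictionaries. It is not Hydra's implementation, the way keys are nested here is chosen purely for illustration (the real placement depends on the Hydra version and the files themselves), and every key and value other than load_path and write_path is made up.

```python
from copy import deepcopy
from typing import Any, Dict


def merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge `override` into a copy of `base`; override wins."""
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


# Hypothetical stand-ins for the YAML files in the conf folder.
base_config = {"seed": 1, "train": {"batch_size": 16, "load_path": None}}
few_shot = {"train": {"batch_size": 4, "load_path": "checkpoints/base"}}
predict = {"load_path": "checkpoints/few_shot", "write_path": "out/predictions.txt"}

# Compose: base settings, then the few-shot group, then the predict group.
config = merge(merge(base_config, few_shot), {"predict": predict})
print(config["train"]["batch_size"])
print(config["predict"]["write_path"])
```

The practical consequence is that a value set in a later file or on the command line replaces the earlier one, so a stale load_path in one file can silently override the path you intended.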

Step1 Enter DeepKE/example/ner/multimodal. Download the dataset.

wget 120.27.214.45/Data/ner/multimodal/data.tar.gz

tar -xzvf data.tar.gz

We use RCNN detected objects and visual grounding objects from the original images as local visual information. The RCNN objects are obtained with faster_rcnn and the visual grounding objects with onestage_grounding.

Step2 Training in the multimodal setting

- The dataset and parameters can be customized in the data folder and conf folder respectively.

- To resume from the previously trained model, set load_path in conf/train.yaml to the path where that model was saved. The directory for logs generated during training can be customized with log_dir.

python run.py

Step3 Prediction

python predict.py

Relation extraction is the task of extracting semantic relations between entities from unstructured text.

The data is stored in .csv files. Some instances are as follows:

| Sentence | Relation | Head | Head_offset | Tail | Tail_offset |
| --- | --- | --- | --- | --- | --- |
| 《岳父也是爹》是王军执导的电视剧,由马恩然、范明主演。 | 导演 | 岳父也是爹 | 1 | 王军 | 8 |
| 《九玄珠》是在纵横中文网连载的一部小说,作者是龙马。 | 连载网站 | 九玄珠 | 1 | 纵横中文网 | 7 |
| 提起杭州的美景,西湖总是第一个映入脑海的词语。 | 所在城市 | 西湖 | 8 | 杭州 | 2 |
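The offsets in these instances are zero-based character indices into the sentence, which makes custom data easy to sanity-check before training. The sketch below reads two of the rows as CSV and verifies that each head and tail really starts at its stated offset. The header names are assumed to match the column names shown above; the real files may name their columns differently.

```python
import csv
import io

# Two rows from the instances above, written as CSV. Sentences are quoted so
# that any commas inside them are not treated as field separators.
SAMPLE = """Sentence,Relation,Head,Head_offset,Tail,Tail_offset
"《岳父也是爹》是王军执导的电视剧,由马恩然、范明主演。",导演,岳父也是爹,1,王军,8
"提起杭州的美景,西湖总是第一个映入脑海的词语。",所在城市,西湖,8,杭州,2
"""


def span_matches(sentence: str, mention: str, offset: int) -> bool:
    """True if `mention` starts at character index `offset` of `sentence`."""
    return sentence[offset:offset + len(mention)] == mention


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
for row in rows:
    head_ok = span_matches(row["Sentence"], row["Head"], int(row["Head_offset"]))
    tail_ok = span_matches(row["Sentence"], row["Tail"], int(row["Tail_offset"]))
    print(row["Relation"], head_ok, tail_ok)
```

A row that fails this check usually points to an off-by-one offset or to whitespace that was added or removed when the sentence was edited.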

Read the detailed process in specific README

Step1 Enter the DeepKE/example/re/standard folder. Download the dataset.

wget 120.27.214.45/Data/re/standard/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training

The dataset and parameters can be customized in the data folder and conf folder respectively.

python run.py

Step3 Prediction

python predict.py

Step1 Enter DeepKE/example/re/few-shot. Download the dataset.

wget 120.27.214.45/Data/re/few_shot/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training

- The dataset and parameters can be customized in the data folder and conf folder respectively.

- To resume from the previously trained model, set train_from_saved_model in conf/train.yaml to the path where that model was saved. The directory for logs generated during training can be customized with log_dir.

python run.py

Step3 Prediction

python predict.py

Step1 Enter DeepKE/example/re/document. Download the dataset.

wget 120.27.214.45/Data/re/document/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training

- The dataset and parameters can be customized in the data folder and conf folder respectively.

- To resume from the previously trained model, set train_from_saved_model in conf/train.yaml to the path where that model was saved. The directory for logs generated during training can be customized with log_dir.

python run.py

Step3 Prediction

python predict.py

Step1 Enter DeepKE/example/re/multimodal. Download the dataset.

wget 120.27.214.45/Data/re/multimodal/data.tar.gz

tar -xzvf data.tar.gz

We use RCNN detected objects and visual grounding objects from the original images as local visual information. The RCNN objects are obtained with faster_rcnn and the visual grounding objects with onestage_grounding.

Step2 Training

- The dataset and parameters can be customized in the data folder and conf folder respectively.

- To resume from the previously trained model, set load_path in conf/train.yaml to the path where that model was saved. The directory for logs generated during training can be customized with log_dir.

python run.py

Step3 Prediction

python predict.py

Attribute extraction is the task of extracting the attributes of entities from unstructured text.

The data is stored in .csv files. Some instances are as follows:

| Sentence | Att | Ent | Ent_offset | Val | Val_offset |
| --- | --- | --- | --- | --- | --- |
| 张冬梅,女,汉族,1968年2月生,河南淇县人 | 民族 | 张冬梅 | 0 | 汉族 | 6 |
| 诸葛亮,字孔明,三国时期杰出的军事家、文学家、发明家。 | 朝代 | 诸葛亮 | 0 | 三国时期 | 8 |
| 2014年10月1日许鞍华执导的电影《黄金时代》上映 | 上映时间 | 黄金时代 | 19 | 2014年10月1日 | 0 |
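Each instance above can be read as an (entity, attribute, value) triple, which is a convenient form for populating a knowledge base. The sketch below builds such triples from two of the rows and groups them by entity. AttributeTriple and to_triples are hypothetical names used for illustration, and the dictionary keys are assumed to match the column names shown above.

```python
from typing import Dict, List, NamedTuple


class AttributeTriple(NamedTuple):
    """Hypothetical container for one extracted fact."""
    entity: str
    attribute: str
    value: str


def to_triples(rows: List[Dict[str, str]]) -> List[AttributeTriple]:
    """Convert rows (keyed by the column names above) into triples."""
    return [AttributeTriple(r["Ent"], r["Att"], r["Val"]) for r in rows]


rows = [
    {"Sentence": "张冬梅,女,汉族,1968年2月生,河南淇县人",
     "Att": "民族", "Ent": "张冬梅", "Ent_offset": "0",
     "Val": "汉族", "Val_offset": "6"},
    {"Sentence": "诸葛亮,字孔明,三国时期杰出的军事家、文学家、发明家。",
     "Att": "朝代", "Ent": "诸葛亮", "Ent_offset": "0",
     "Val": "三国时期", "Val_offset": "8"},
]

# Group values by entity, e.g. to build a small attribute lookup.
knowledge: Dict[str, Dict[str, str]] = {}
for triple in to_triples(rows):
    knowledge.setdefault(triple.entity, {})[triple.attribute] = triple.value
print(knowledge)
```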

Read the detailed process in specific README

Step1 Enter the DeepKE/example/ae/standard folder. Download the dataset.

wget 120.27.214.45/Data/ae/standard/data.tar.gz

tar -xzvf data.tar.gz

Step2 Training

The dataset and parameters can be customized in the data folder and conf folder respectively.

python run.py

Step3 Prediction

python predict.py

This toolkit provides many Jupyter Notebook and Google Colab tutorials. Users can study DeepKE with them.

- Standard Setting

- Low-resource

- Document-level

- Multimodal

- Using the nearest mirror, such as THU in China, will speed up the installation of Anaconda.

- Using the nearest mirror, such as aliyun in China, will speed up pip install XXX.

- When encountering ModuleNotFoundError: No module named 'past', run pip install future.

- Installing the pretrained language models online is slow. We recommend downloading the pretrained models before use and saving them in the pretrained folder. Read README.md in every task directory to check the specific requirements for saving pretrained models.

- The old version of DeepKE is in the deepke-v1.0 branch. Users can switch to that branch to use the old version. The old version has been fully transferred to the standard relation extraction (example/re/standard).

- We recommend installing DeepKE from source code, because users may encounter problems with pip on Windows.

- More related low-resource knowledge extraction works can be found in Knowledge Extraction in Low-Resource Scenarios: Survey and Perspective.

- Make sure to install the exact versions of the requirements listed in requirements.txt.

In the next version, we plan to add multi-modality knowledge extraction to the toolkit.

Meanwhile, we will offer long-term maintenance to fix bugs, solve issues and meet new requests. If you have any problems, please submit an issue.

Please cite our paper if you use DeepKE in your work:

@article{zhang2022deepke,
  title={DeepKE: A Deep Learning Based Knowledge Extraction Toolkit for Knowledge Base Population},
  author={Zhang, Ningyu and Xu, Xin and Tao, Liankuan and Yu, Haiyang and Ye, Hongbin and Xie, Xin and Chen, Xiang and Li, Zhoubo and Li, Lei and Liang, Xiaozhuan and others},
  journal={arXiv preprint arXiv:2201.03335},
  year={2022}
}

Zhejiang University: Ningyu Zhang, Liankuan Tao, Xin Xu, Haiyang Yu, Hongbin Ye, Shuofei Qiao, Xin Xie, Xiang Chen, Zhoubo Li, Lei Li, Xiaozhuan Liang, Yunzhi Yao, Shumin Deng, Wen Zhang, Guozhou Zheng, Huajun Chen

Alibaba Group: Feiyu Xiong, Hui Chen, Qiang Chen

DAMO Academy: Zhenru Zhang, Chuanqi Tan, Fei Huang