FD-SOS: Vision-Language Open-Set Detectors for Bone Fenestration and Dehiscence Detection from Intraoral Images

Marawan Elbatel, Keyuan Liu, Yanqi Yang, Xiaomeng Li

This is the official implementation of FD-SOS, a framework for FD Screening utilizing Open-Set detectors in intraoral images.

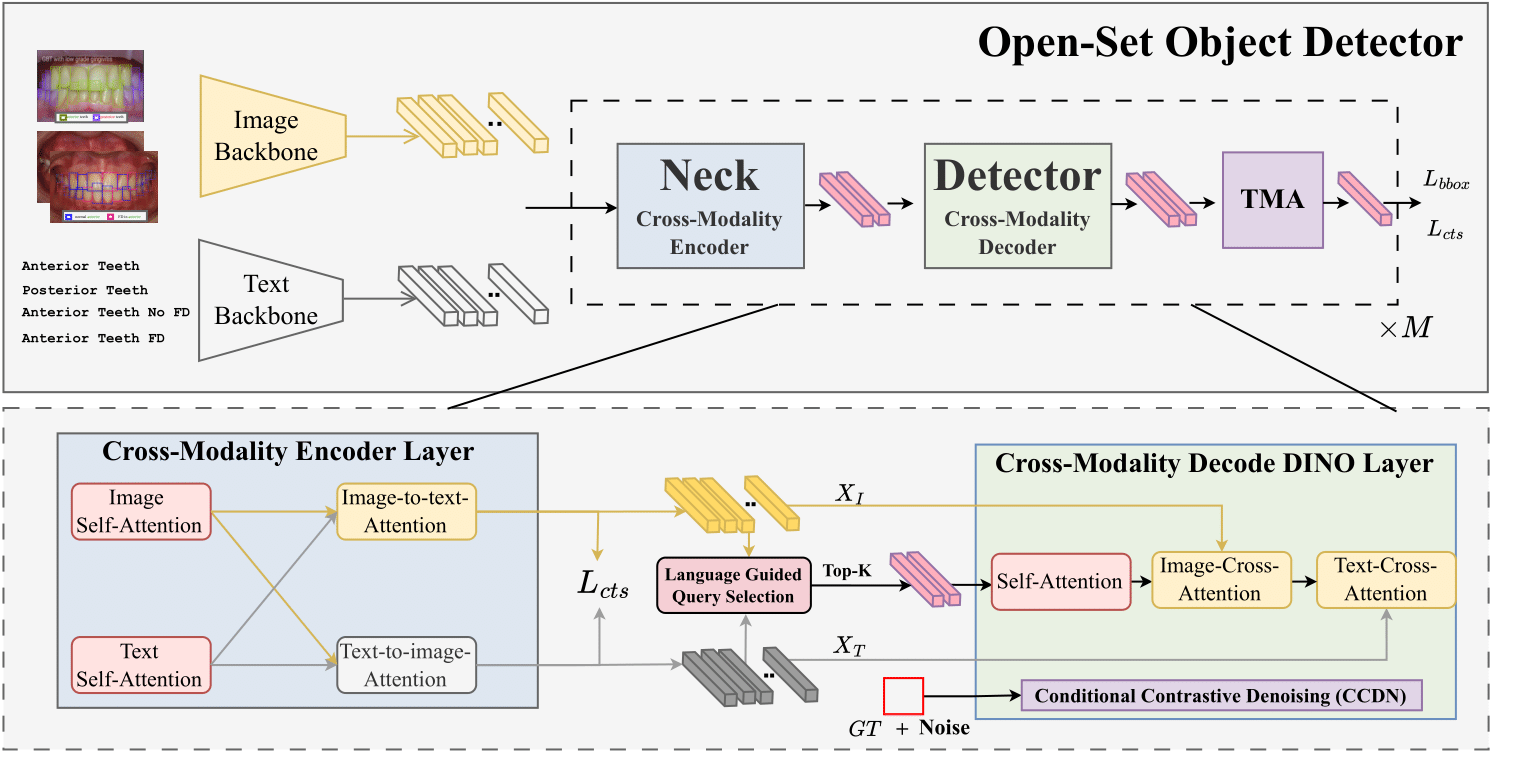

Accurate detection of bone fenestration and dehiscence (FD) is crucial for effective treatment planning in dentistry. While cone-beam computed tomography (CBCT) is the gold standard for evaluating FD, it comes with limitations such as radiation exposure, limited accessibility, and higher cost compared to intraoral images. In intraoral images, dentists face challenges in the differential diagnosis of FD. This paper presents a novel and clinically significant application of FD detection solely from intraoral images. To achieve this, we propose FD-SOS, a novel open-set object detector for FD detection from intraoral images. FD-SOS has two novel components: conditional contrastive denoising (CCDN) and teeth-specific matching assignment (TMA). These modules enable FD-SOS to effectively leverage external dental semantics. Experimental results show that our method outperforms existing detection methods and surpasses dental professionals by 35% in recall at the same level of precision.

- Clone the repository:

      git clone https://github.com/xmed-lab/FD-SOS
      cd FD-SOS

- Create a virtual environment:

      conda create -n SOS python=3.8
      conda activate SOS

- Install PyTorch:

      pip3 install torch==2.1.2+cu118 torchvision==0.16.2+cu118 torchaudio==2.1.2+cu118 --index-url https://download.pytorch.org/whl/cu118

- Follow mmdet to install dependencies:

      bash requirements.sh

- Download the images:

      # download images
      gdown "https://drive.google.com/uc?id=1Xm794_tzCh1TtIfJYJLFlmv013GTL_Uh"
      unzip images_all.zip -d data/v1/

- Download the model weights and configs:

      # download model weights
      gdown --folder https://drive.google.com/drive/folders/1zgNxQEXhGm3FTIQAKqkYd3YH0O5SHhm_
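Once the setup steps above are done, it can help to confirm that the pinned PyTorch versions are what actually landed in the environment. The following is a minimal sketch and not part of the repository: the helper names `installed_version` and `check_pins` are hypothetical, and the script only reads package metadata through the standard library, so it still runs when a package is missing.

```python
from importlib import metadata

# Versions pinned by the pip3 install command above (local "+cu118" tag included).
PINS = {
    "torch": "2.1.2+cu118",
    "torchvision": "0.16.2+cu118",
    "torchaudio": "2.1.2+cu118",
}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins, lookup=installed_version):
    """Map each package name to a tuple (expected, found, matches)."""
    report = {}
    for package, expected in pins.items():
        found = lookup(package)
        report[package] = (expected, found, found == expected)
    return report


if __name__ == "__main__":
    for name, (expected, found, ok) in check_pins(PINS).items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: expected {expected}, found {found} [{status}]")
```

A mismatch is not necessarily an error (a different CUDA build may work), but it is worth knowing about before comparing results against the reported numbers.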

To generate predictions with the trained weights, first make sure the images, model weights, and configs are downloaded.

- Run inference:

      python inference.py

- The model writes its predictions to the evaluation folder. To compute COCO metrics on the generated predictions:

      python evaluate.py
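The COCO metrics include AP50 and AP75, where the number refers to an intersection-over-union (IoU) threshold: a predicted box can match a ground-truth box for AP50 when their IoU is at least 0.5, and for AP75 when it is at least 0.75. The sketch below illustrates only that thresholding step; it is not the code in `evaluate.py`, the function names are illustrative, and boxes are assumed to be in the COCO `[x, y, width, height]` format.

```python
def iou_xywh(box_a, box_b):
    """IoU of two boxes given as [x, y, width, height] (COCO convention)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def matches_at(box_pred, box_gt, threshold):
    """True if the prediction overlaps the ground truth enough at this threshold."""
    return iou_xywh(box_pred, box_gt) >= threshold


if __name__ == "__main__":
    gt = [10, 10, 100, 100]
    pred = [40, 10, 100, 100]  # same size, shifted 30 px to the right
    print(f"IoU = {iou_xywh(pred, gt):.3f}")
    print("match at 0.50:", matches_at(pred, gt, 0.50))
    print("match at 0.75:", matches_at(pred, gt, 0.75))
```

In this example the overlap is 70 x 100 and the union is 13000, so the IoU is about 0.538: the box counts toward AP50 but not toward AP75. The full metric additionally ranks predictions by confidence and averages precision over recall levels, which this sketch leaves out.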

We provide detailed results and model weights for reproducibility and further research.

| Methods | Multi-task | AP75FD | APFD | AP50FD | AP75 | AP | AP50 | Model Weights |

|---|---|---|---|---|---|---|---|---|

| Traditional Detectors* | ||||||||

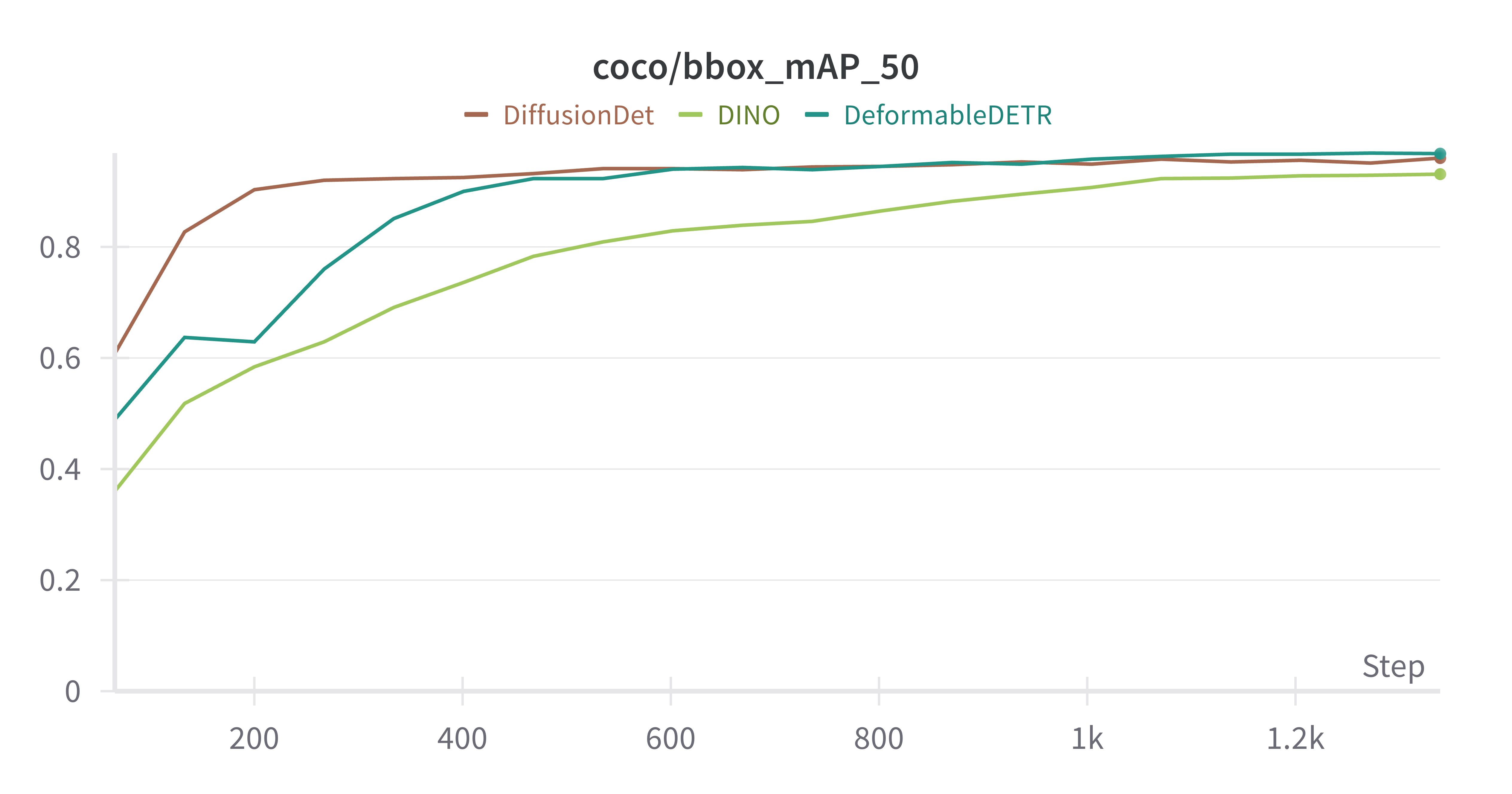

| Diffusion-DETR w/o pretraining | ✗ | 0.04 | 1.31 | 7.58 | 0.04 | 1.7 | 8.85 | Download |

| Diffusion-DETR | ✗ | 55.52 | 51.42 | 61.28 | 62.58 | 59.06 | 66.37 | Download |

| DDETR | ✗ | 56.92 | 50.41 | 60.51 | 62.68 | 57.44 | 65.48 | Download |

| DINO | ✗ | 54.03 | 49.68 | 57.94 | 55.13 | 51.65 | 57.65 | Download |

| Open-Set Detectors † | ||||||||

| GLIP | ✗ | 40.57 | 32.0 | 46.34 | 51.3 | 40.47 | 55.85 | Download |

| GDINO | ✗ | 58.32 | 56.59 | 61.07 | 63.69 | 62.59 | 65.89 | Download |

| GLIP | ✔️ | 41.78 | 33.68 | 47.09 | 51.97 | 42.73 | 56.7 | Download |

| GDINO (our baseline) | ✔️ | 55.55 | 54.75 | 59.99 | 62.6 | 62.08 | 65.81 | Download |

| FD-SOS (ours) | ✔️ | 62.45 | 60.84 | 66.01 | 67.07 | 65.97 | 69.67 | Download |

*Requires pre-training on a public dental dataset after initialization from ImageNet pre-trained weights.

Traditional object detectors fail without warmup on public dental datasets, so we provide warmup models for them here.
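For a quick read of the table, the gain of FD-SOS over the multi-task GDINO baseline can be computed directly from the "GDINO (our baseline)" and "FD-SOS (ours)" rows. The snippet below is only arithmetic on the reported numbers; the column keys are shorthand introduced here for illustration.

```python
# Values copied from the "GDINO (our baseline)" and "FD-SOS (ours)" rows of the table.
COLUMNS = ["AP75_FD", "AP_FD", "AP50_FD", "AP75", "AP", "AP50"]
BASELINE = [55.55, 54.75, 59.99, 62.6, 62.08, 65.81]
FD_SOS = [62.45, 60.84, 66.01, 67.07, 65.97, 69.67]


def gains(ours, baseline, names):
    """Absolute difference (ours minus baseline) per column, rounded to 2 decimals."""
    return {name: round(o - b, 2) for name, o, b in zip(names, ours, baseline)}


if __name__ == "__main__":
    for name, delta in gains(FD_SOS, BASELINE, COLUMNS).items():
        print(f"{name}: +{delta:.2f}")
```

The differences come out to roughly 6 to 7 points on the three FD-specific columns and roughly 4 points on the three overall columns.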

To train FD-SOS, please follow the instructions above to get started and install dependencies.

All configs for all experiments are available in train_FD.sh.

To run the FD-SOS benchmark, make sure all images are available in data/v1/images_all and run:

    bash train.sh
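Before launching a long training run, a small pre-flight check that the image folder is populated can save time. This is a sketch under assumptions: the set of image extensions is a guess about the dataset, and `count_images` is a hypothetical helper that is not part of the repository.

```python
from pathlib import Path

# Folder named in the instructions above.
IMAGE_DIR = Path("data/v1/images_all")
# Assumption: the dataset uses common raster formats; adjust if it does not.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(folder, suffixes=IMAGE_SUFFIXES):
    """Count files directly inside `folder` whose extension looks like an image."""
    folder = Path(folder)
    if not folder.is_dir():
        return 0
    return sum(
        1
        for path in folder.iterdir()
        if path.is_file() and path.suffix.lower() in suffixes
    )


if __name__ == "__main__":
    n = count_images(IMAGE_DIR)
    if n == 0:
        print(f"No images found in {IMAGE_DIR}; download and unzip them first.")
    else:
        print(f"Found {n} images in {IMAGE_DIR}.")
```

The check is deliberately shallow (it does not recurse or open the files), so it only catches a missing or empty folder, not corrupted images.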

Code is built on mmdet.

    @article{elbatel2024fd,
      title={FD-SOS: Vision-Language Open-Set Detectors for Bone Fenestration and Dehiscence Detection from Intraoral Images},
      author={Elbatel, Marawan and Liu, Keyuan and Yang, Yanqi and Li, Xiaomeng},
      journal={arXiv preprint arXiv:2407.09088},
      year={2024}
    }