This is the 'S-team' repository for the capstone project of the Udacity Self-Driving Car Nanodegree. The team members are:

- arjuna sky kok (sky.kok@speaklikeaking.com)
- William Du (dm1299onion@gmail.com)
- Abhinav Deep Singh (abhinav1010.ads@gmail.com)
- Woojin Jung (xmprise@gmail.com)

Thank you all.

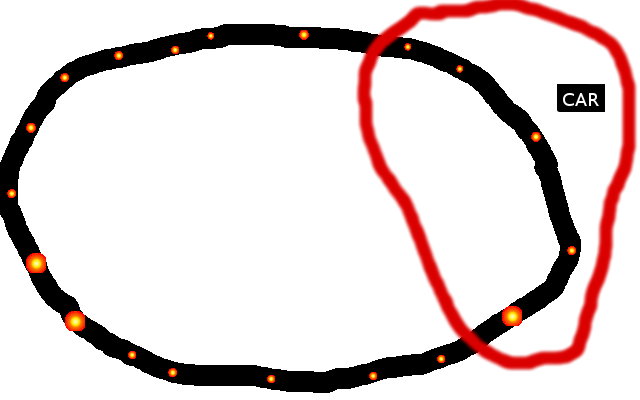

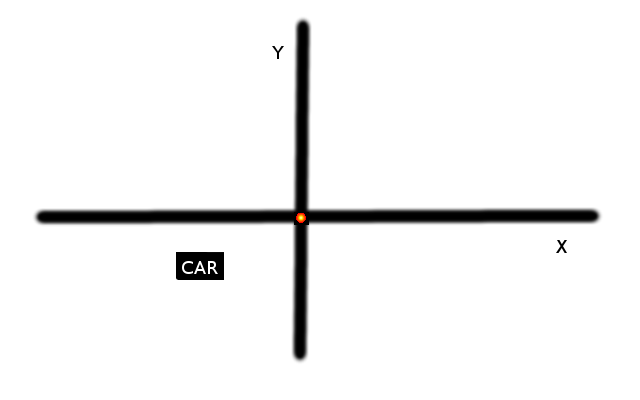

We are given all the waypoints of the circuit, and we must find the nearest waypoints ahead of the car. Every waypoint has x and y coordinates and a yaw (heading). We decide whether a waypoint is ahead of the car in two steps:

- Compute the Euclidean distance between the car and the waypoint, and discard every waypoint that is farther than 50 away.

- For each remaining waypoint, transform the car's world coordinates into local coordinates centered on the waypoint (the waypoint's point of view), then check the sign of the car's local x value. If it is negative, the car is behind the waypoint, so the waypoint is ahead of the car.
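The two-step filter above can be sketched as follows. This is a minimal illustration, not the team's code: it assumes each waypoint is a plain `(x, y, yaw)` tuple with yaw in radians (the real node reads ROS messages), and the helper names `car_in_waypoint_frame` and `waypoints_ahead` are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Hypothetical waypoint representation: (x, y, yaw) in world coordinates, yaw in radians.
Waypoint = Tuple[float, float, float]


def car_in_waypoint_frame(car_x: float, car_y: float, wp: Waypoint) -> Tuple[float, float]:
    """Express the car position in the waypoint's local frame (x axis along the waypoint yaw)."""
    wx, wy, yaw = wp
    dx, dy = car_x - wx, car_y - wy
    # Rotate the world offset by -yaw to get waypoint-centric coordinates.
    local_x = dx * math.cos(-yaw) - dy * math.sin(-yaw)
    local_y = dx * math.sin(-yaw) + dy * math.cos(-yaw)
    return local_x, local_y


def waypoints_ahead(car_x: float, car_y: float,
                    waypoints: Sequence[Waypoint], max_dist: float = 50.0):
    """Return the indices of waypoints within max_dist that lie ahead of the car."""
    ahead = []
    for i, wp in enumerate(waypoints):
        # Step 1: discard waypoints farther than max_dist (Euclidean distance).
        if math.hypot(wp[0] - car_x, wp[1] - car_y) > max_dist:
            continue
        # Step 2: a negative local x means the car is behind the waypoint,
        # i.e. the waypoint is ahead of the car.
        local_x, _ = car_in_waypoint_frame(car_x, car_y, wp)
        if local_x < 0:
            ahead.append(i)
    return ahead
```

For example, with waypoints every 10 units along the x axis heading in +x and the car at x = 25, only the waypoints at x = 30 to 70 are kept: x = 20 is behind the car and x = 80 is more than 50 away.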

After we publish the final waypoints (the nearest waypoints ahead of the car), the system generates a velocity recommendation, which we receive in the DBW (drive-by-wire) node. We also receive the current velocity, so we can compute the speed error (recommended speed minus current speed). A PID controller takes this speed error and returns the throttle value. The steering value, which lets the car follow the curve of the road, comes from the yaw controller. If the speed error is negative (we are exceeding the recommended speed), we set the throttle to 0 and the brake to the negated speed error (a positive number) multiplied by 1000.
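The throttle/brake rule above can be sketched like this. It is a hedged illustration under stated assumptions: the `PID` class and its gains are placeholders rather than the team's tuned values, the throttle is assumed to be clamped to `[0, 1]`, and resetting the PID while braking is a choice made in this sketch to avoid integral wind-up. Only the factor of 1000 comes from the text.

```python
class PID:
    """Minimal PID controller; gains and output limits here are placeholders."""

    def __init__(self, kp: float, ki: float, kd: float, mn: float = 0.0, mx: float = 1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.min, self.max = mn, mx
        self.integral = 0.0
        self.last_error = 0.0

    def reset(self) -> None:
        self.integral = 0.0
        self.last_error = 0.0

    def step(self, error: float, sample_time: float) -> float:
        self.integral += error * sample_time
        derivative = (error - self.last_error) / sample_time
        self.last_error = error
        value = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the assumed throttle range.
        return max(self.min, min(self.max, value))


BRAKE_GAIN = 1000.0  # multiplier described in the text


def throttle_and_brake(pid: PID, target_velocity: float,
                       current_velocity: float, sample_time: float):
    """Return (throttle, brake) following the rule described above."""
    error = target_velocity - current_velocity
    if error < 0:
        # Faster than recommended: no throttle, brake proportional to the overshoot.
        pid.reset()  # sketch choice: do not carry the integral through braking
        return 0.0, -error * BRAKE_GAIN
    return pid.step(error, sample_time), 0.0
```

Being 1 m/s over the recommendation therefore yields zero throttle and a brake value of 1000.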

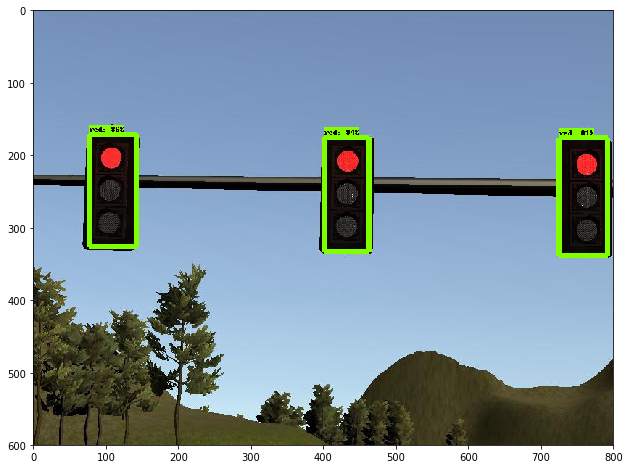

There are two traffic light detection models, one for the simulator and one for the Udacity test site.

If you use the simulator, set `GRAPH_FILE='models/frozen_inference_graph_simulator.pb'` in `tl_classifier.py`.

If you use the test site, set `GRAPH_FILE='models/frozen_inference_graph_test_site.pb'` in `tl_classifier.py`.
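Instead of editing `GRAPH_FILE` by hand, the path could be chosen from a flag. This is a minimal sketch: the two paths come from the text, but the `is_site` flag and the `select_graph_file` helper are hypothetical (such a flag might come from a ROS parameter or launch file).

```python
# Model paths taken from the instructions above.
SIMULATOR_GRAPH = 'models/frozen_inference_graph_simulator.pb'
TEST_SITE_GRAPH = 'models/frozen_inference_graph_test_site.pb'


def select_graph_file(is_site: bool) -> str:
    """Return the frozen graph for the test site or the simulator (is_site is a hypothetical flag)."""
    return TEST_SITE_GRAPH if is_site else SIMULATOR_GRAPH


GRAPH_FILE = select_graph_file(is_site=False)
```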

If multiple traffic lights are detected, the class of the bounding box with the highest score is returned.
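Picking the class of the highest-scoring box can be sketched as below. It assumes the detector's scores and classes have already been fetched as parallel sequences. The `min_score` cut-off is an assumption added here (the text does not mention one), and the helper name is hypothetical.

```python
from typing import Optional, Sequence


def best_light_class(scores: Sequence[float], classes: Sequence[int],
                     min_score: float = 0.5) -> Optional[int]:
    """Return the class id of the highest-scoring detection, or None if none passes min_score."""
    if len(scores) == 0:
        return None
    # Index of the detection with the highest confidence.
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_score:
        return None
    return int(classes[best])
```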

We used a MobileNet pre-trained on the COCO dataset. We also collected and annotated our own simulator and test site data:

- Export images with `rosrun image_view extract_images image:=/image_color`.
- Annotate the images with an annotation tool; saving the annotations as a YAML file may be a good choice.
- Generate TensorFlow TFRecord files.
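The core of the TFRecord step is converting pixel boxes into the normalized per-object lists that the TensorFlow Object Detection API expects. The sketch below assumes a hypothetical YAML annotation layout (`width`, `height`, and a `boxes` list with `label`, `x_min`, `y_min`, `x_max`, `y_max`) and a hypothetical label map; neither is necessarily what the team used.

```python
from typing import Dict, List

# Hypothetical label map; the ids must match the label map used for training.
LABEL_MAP: Dict[str, int] = {'Green': 1, 'Red': 2, 'Yellow': 3}


def to_od_api_fields(annotation: dict) -> Dict[str, List]:
    """Convert one image annotation (pixel boxes, assumed schema) into normalized per-object lists."""
    width = float(annotation['width'])
    height = float(annotation['height'])
    fields: Dict[str, List] = {
        'xmin': [], 'xmax': [], 'ymin': [], 'ymax': [],
        'class_text': [], 'class_label': [],
    }
    for box in annotation['boxes']:
        # Box corners are normalized to [0, 1] by the image size.
        fields['xmin'].append(box['x_min'] / width)
        fields['xmax'].append(box['x_max'] / width)
        fields['ymin'].append(box['y_min'] / height)
        fields['ymax'].append(box['y_max'] / height)
        # Class text is stored as bytes; the label is the integer id.
        fields['class_text'].append(box['label'].encode('utf8'))
        fields['class_label'].append(LABEL_MAP[box['label']])
    return fields
```

In a full conversion script, each list would be wrapped in a `tf.train.Feature` (a float list for the boxes, a bytes list for the class text, an int64 list for the labels) under keys such as `image/object/bbox/xmin`, `image/object/class/text` and `image/object/class/label`, together with the encoded image bytes.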

We then trained the detector with the TensorFlow Object Detection API:

- Download a pre-trained model from the TensorFlow detection model zoo. As a reminder, MobileNet is the smallest, at about 20 MB to 30 MB after the graph is frozen.
- Prepare the pipeline config file (`ssd_mobilenet_v1_coco.config`).
- Train and freeze the model:

```bash
python models/research/object_detection/train.py \
    --logtostderr \
    --pipeline_config_path=ssd_mobilenet_v1_coco.config \
    --train_dir=train

python models/research/object_detection/export_inference_graph.py \
    --input_type image_tensor \
    --pipeline_config_path ssd_mobilenet_v1_coco.config \
    --trained_checkpoint_prefix train/model.ckpt-20000 \
    --output_directory output_inference_graph.pb
```
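The pipeline config refers to a label map file (through `label_map_path`), and `num_classes` must match the number of entries in it. A small sketch that writes a label map in the text format the Object Detection API reads is below; the class names are hypothetical. Ids start at 1 because id 0 is reserved for the background class.

```python
from typing import Sequence


def label_map_pbtxt(class_names: Sequence[str]) -> str:
    """Render a label map (one `item` per class, ids starting at 1)."""
    entries = []
    for idx, name in enumerate(class_names, start=1):
        entries.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return "\n".join(entries)


# Hypothetical class names for the traffic light states.
print(label_map_pbtxt(['Green', 'Red', 'Yellow']))
```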

Once we have the waypoint of the red traffic light, we can calculate how many waypoints ahead of our car it is. We then set the speed recommendation in steps, based on how far away the red light is.
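Counting how many waypoints the red light is ahead of the car reduces to an index difference. This sketch assumes both positions are already expressed as indices into the base waypoint list, and that the circuit is a closed loop, so the count wraps past the last waypoint (the wrap-around is an assumption of the sketch). The function name is hypothetical.

```python
def waypoints_to_light(car_wp_idx: int, light_wp_idx: int, num_waypoints: int) -> int:
    """Number of waypoints from the car's nearest waypoint forward to the light's waypoint."""
    # Modulo handles the loop: a light just past the start line is still "ahead".
    return (light_wp_idx - car_wp_idx) % num_waypoints
```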

If the light is very near, we set the speed recommendation to 0 (stop completely). If it is very far, we set it to about 11 m/s (11.11 m/s, i.e. 40 km/h). In between, we use values between 0 and 11 m/s:

| Distance to red light (waypoints) | Speed recommendation (m/s) |
|---|---|
| 0 - 37 | 0 |
| 37 - 45 | 1 |
| 45 - 60 | 3 |
| 60 - 70 | 5 |
| 70 - 100 | 7 |
| 100 - 120 | 9 |
| 120 - 200 | 10 |
| > 200 | 11.11 |
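The table can be implemented as a sorted-bounds lookup. This is a sketch, not the team's code: the table does not say which row a boundary value (such as exactly 37) belongs to, so here it is assumed to fall in the nearer, slower row, which also matches the "> 200" row for the top speed.

```python
import bisect

# Upper bound (in waypoints) of each row of the table, and the matching speeds in m/s.
DISTANCE_BOUNDS = [37, 45, 60, 70, 100, 120, 200]
SPEEDS = [0.0, 1.0, 3.0, 5.0, 7.0, 9.0, 10.0, 11.11]


def speed_recommendation(distance_wps: int) -> float:
    """Map the distance to the red light (in waypoints) to a target speed in m/s."""
    # bisect_left puts a boundary value into the lower (slower) row, e.g. 37 -> 0 m/s.
    return SPEEDS[bisect.bisect_left(DISTANCE_BOUNDS, distance_wps)]
```

Using `bisect` keeps the thresholds in one list, so retuning a row means editing a single number.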