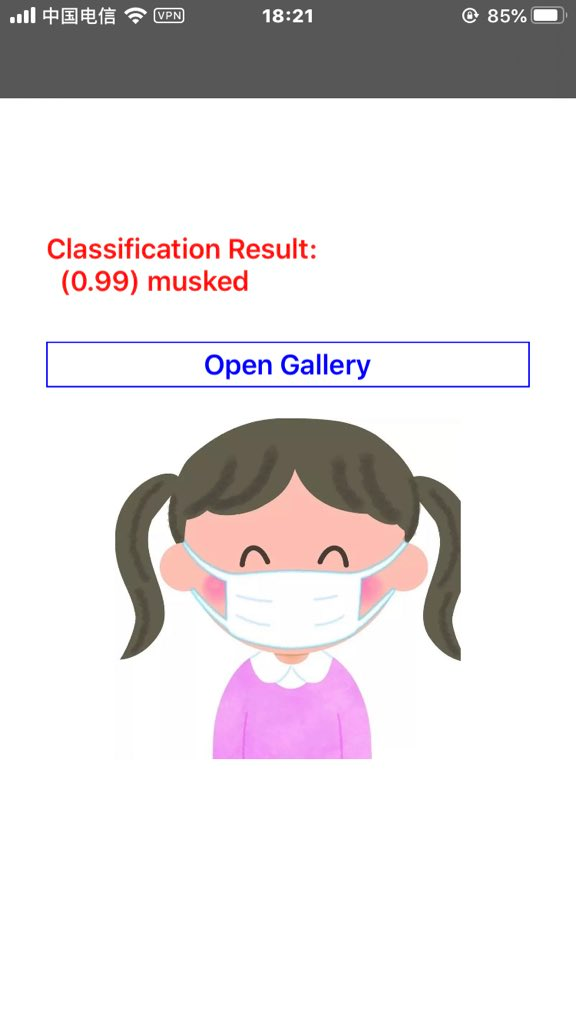

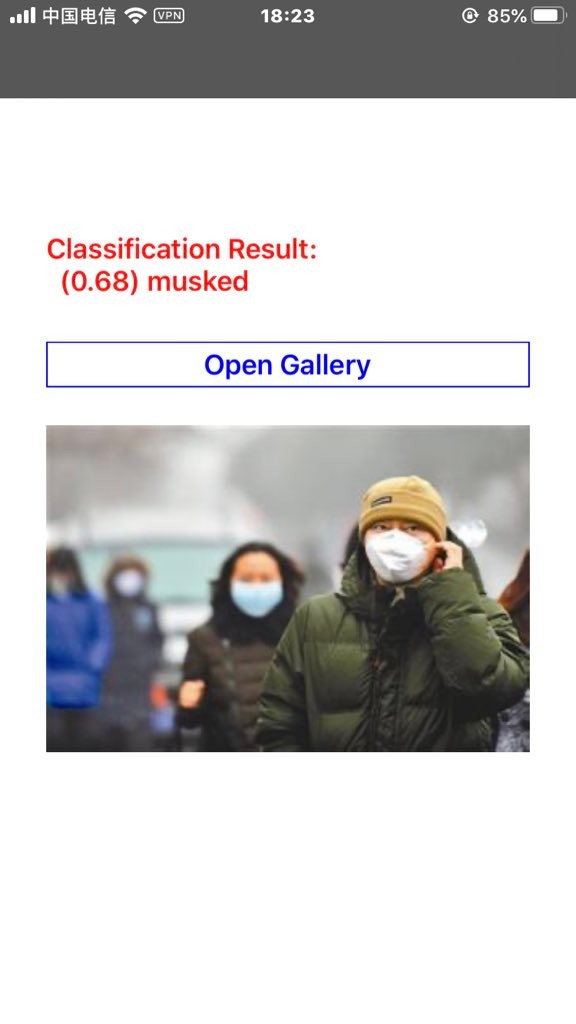

maskedClassification

A Core ML experiment that trains a model to classify whether a face is masked or unmasked.

1. Data source for machine learning.

```shell
ffmpeg -i http://videoName.mov -vf fps=1 "$filename%03d.png"
```

Record a short video (about 10 to 15 seconds), then use ffmpeg to extract one frame per second of video as a PNG image.

Use these images as the data source for machine learning.
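The extraction step can be wrapped in a small shell function that writes each recording's frames into a folder named after its class, since Create ML's image classifier reads training images from one subfolder per label. This is a minimal sketch, not the project's own script: it assumes one video per class, and the function name `extract_frames` and the folder layout are hypothetical.

```shell
#!/bin/sh
# Minimal sketch (hypothetical names): extract one frame per second from a
# recording into OUTDIR/LABEL/, i.e. one subfolder per class label.
set -eu

# extract_frames VIDEO LABEL OUTDIR
# Writes OUTDIR/LABEL/LABEL_001.png, OUTDIR/LABEL/LABEL_002.png, ...
extract_frames() {
  video="$1"
  label="$2"
  outdir="$3"
  mkdir -p "$outdir/$label"
  # -vf fps=1 keeps one frame per second of video;
  # -hide_banner and -loglevel error only reduce console noise.
  ffmpeg -hide_banner -loglevel error -i "$video" -vf fps=1 \
    "$outdir/$label/${label}_%03d.png"
}
```

For example, `extract_frames masked.mov masked dataset` followed by `extract_frames unmasked.mov unmasked dataset` would produce `dataset/masked/` and `dataset/unmasked/` (both video file names are placeholders).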

2. Training, validation and testing.

Xcode ships with Create ML, which can train a lightweight Core ML model that runs on the client device. Open it from [Xcode]--[Open Developer Tool]--[Create ML].
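Before training, the labeled frames can be split into separate training and testing folders that keep the same per-label subfolders, which is the layout the Create ML app accepts for its Training Data and Testing Data inputs (as we understand it, validation data can be left on the automatic setting, which holds out part of the training set). This is a minimal sketch under those assumptions: the function name `split_dataset`, the `Training`/`Testing` folder names and the "every 5th frame" (about 20%) rule are all hypothetical choices.

```shell
#!/bin/sh
# Minimal sketch (hypothetical names): split per-label frame folders in SRC
# into DST/Training/<label> and DST/Testing/<label>.
set -eu

# split_dataset SRC DST [N]
# Copies every N-th image (default N=5) of each label folder to Testing,
# and all other images of that label to Training.
split_dataset() {
  src="$1"
  dst="$2"
  n="${3:-5}"
  for label_dir in "$src"/*/; do
    [ -d "$label_dir" ] || continue   # skip if SRC has no subfolders
    label=$(basename "$label_dir")
    mkdir -p "$dst/Training/$label" "$dst/Testing/$label"
    i=0
    for img in "$label_dir"*.png; do
      [ -e "$img" ] || continue       # skip if the glob matched nothing
      i=$((i + 1))
      if [ $((i % n)) -eq 0 ]; then
        cp "$img" "$dst/Testing/$label/"
      else
        cp "$img" "$dst/Training/$label/"
      fi
    done
  done
}
```

For example, `split_dataset dataset split` creates `split/Training/` and `split/Testing/`. Because neighbouring frames from one video are nearly identical, a test set taken from a separate recording will likely give a more honest accuracy estimate than this per-frame split.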