ArXiv Preprint (arXiv 2304.09807)

- Aug. 30th, 2023: We release an initial version of VMA.

- Aug. 9th, 2023: Code will be released in around 3 weeks.

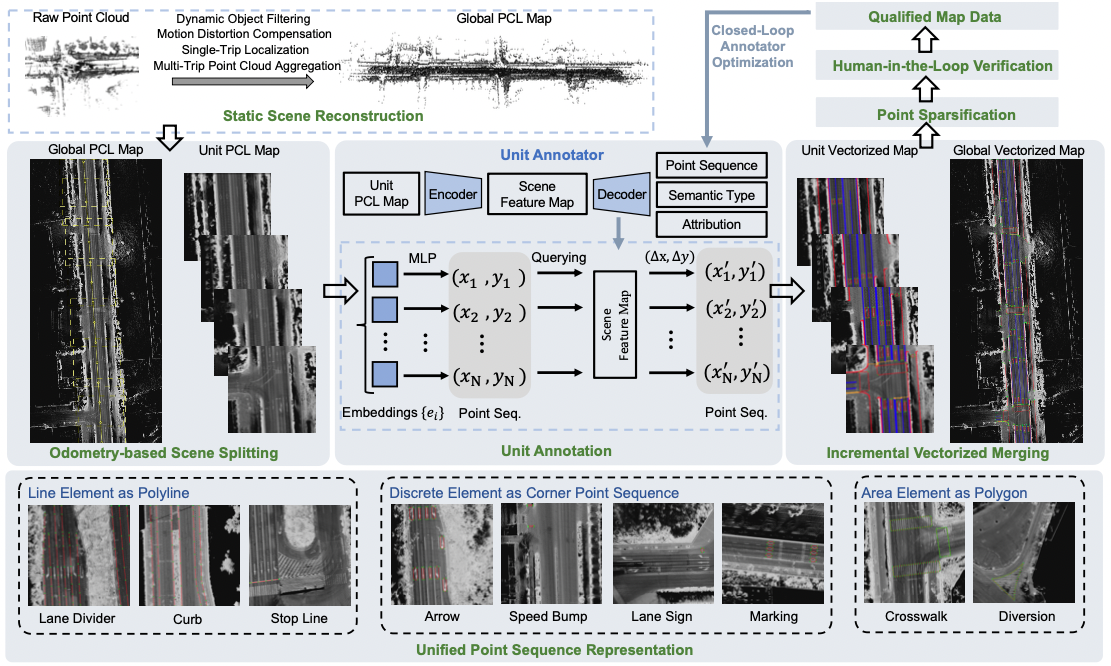

TL;DR: VMA is a general map auto-annotation framework based on MapTR, with high flexibility in terms of spatial scale and element type.

- Installation

- Prepare Dataset

- Inference on SD data (we only provide some samples of SD data for inference, since SD data is owned by Horizon)

- Train and Eval on NYC data

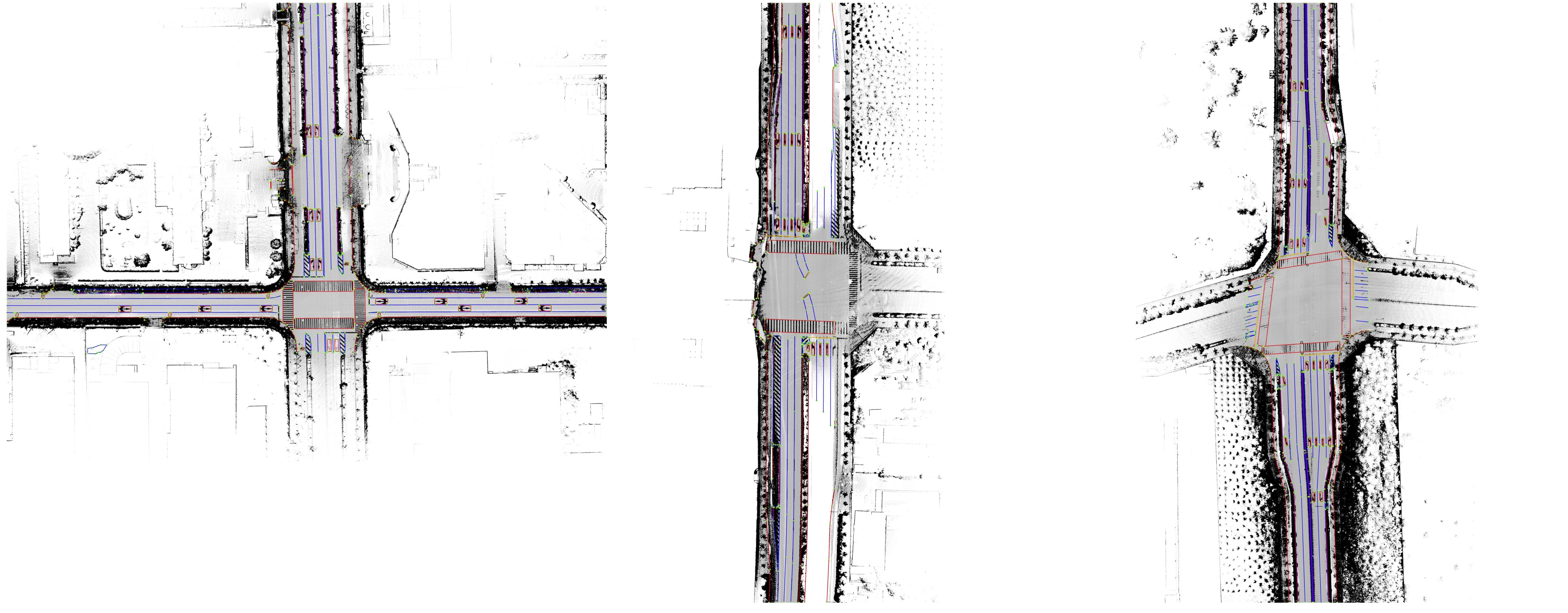

Remote sensing:

Urban scene:

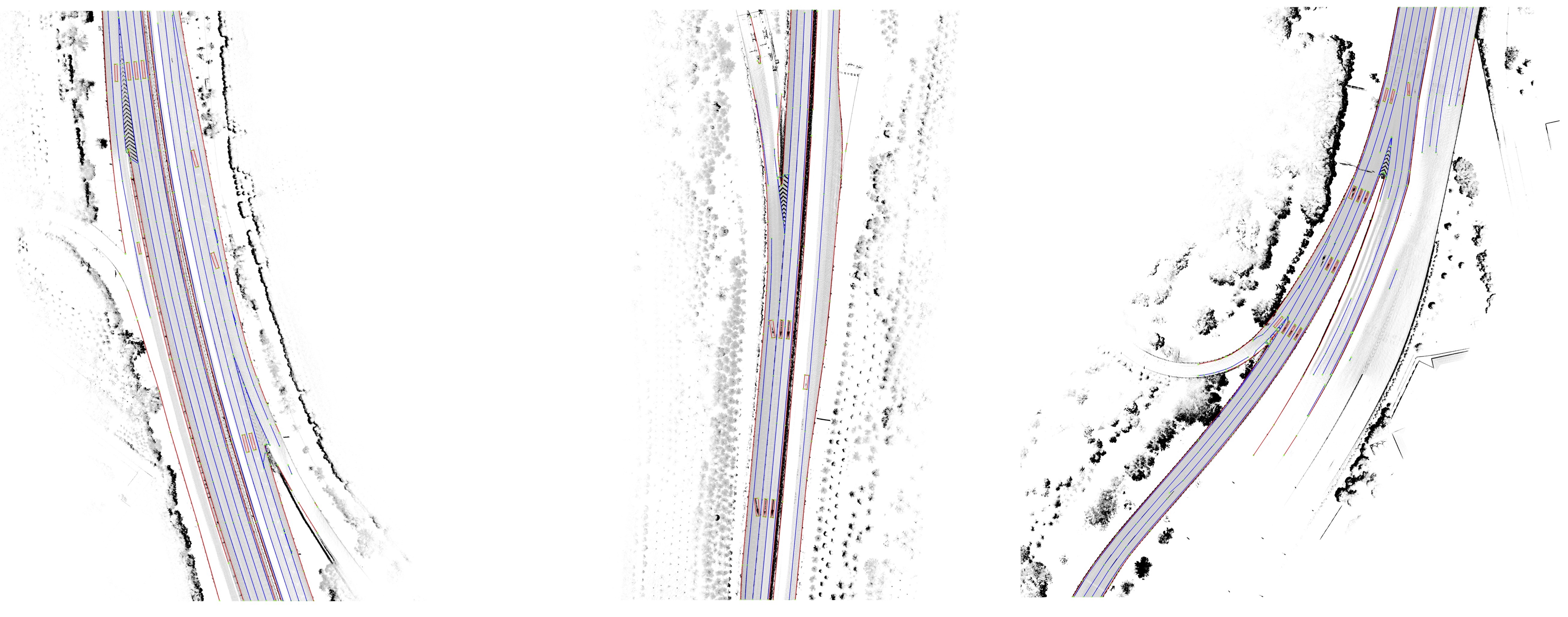

Highway scene:

If you find VMA useful in your research or applications, please consider giving us a star 🌟 and citing it with the following BibTeX entry.

@inproceedings{VMA,

title={VMA: Divide-and-Conquer Vectorized Map Annotation System for Large-Scale Driving Scene},

author={Chen, Shaoyu and Zhang, Yunchi and Liao, Bencheng and Xie, Jiafeng and Cheng, Tianheng and Sui, Wei and Zhang, Qian and Liu, Wenyu and Huang, Chang and Wang, Xinggang},

booktitle={arXiv preprint arXiv:2304.09807},

year={2023}

}