- XU Rui (Leader)

- HUANG Jianming

- HUANG Xiuqi

- ZHANG Zhiyuan

- The ipynb files are in the "ipynb IS HERE!!!!!" folder

- The predicted Y values file is in the home folder

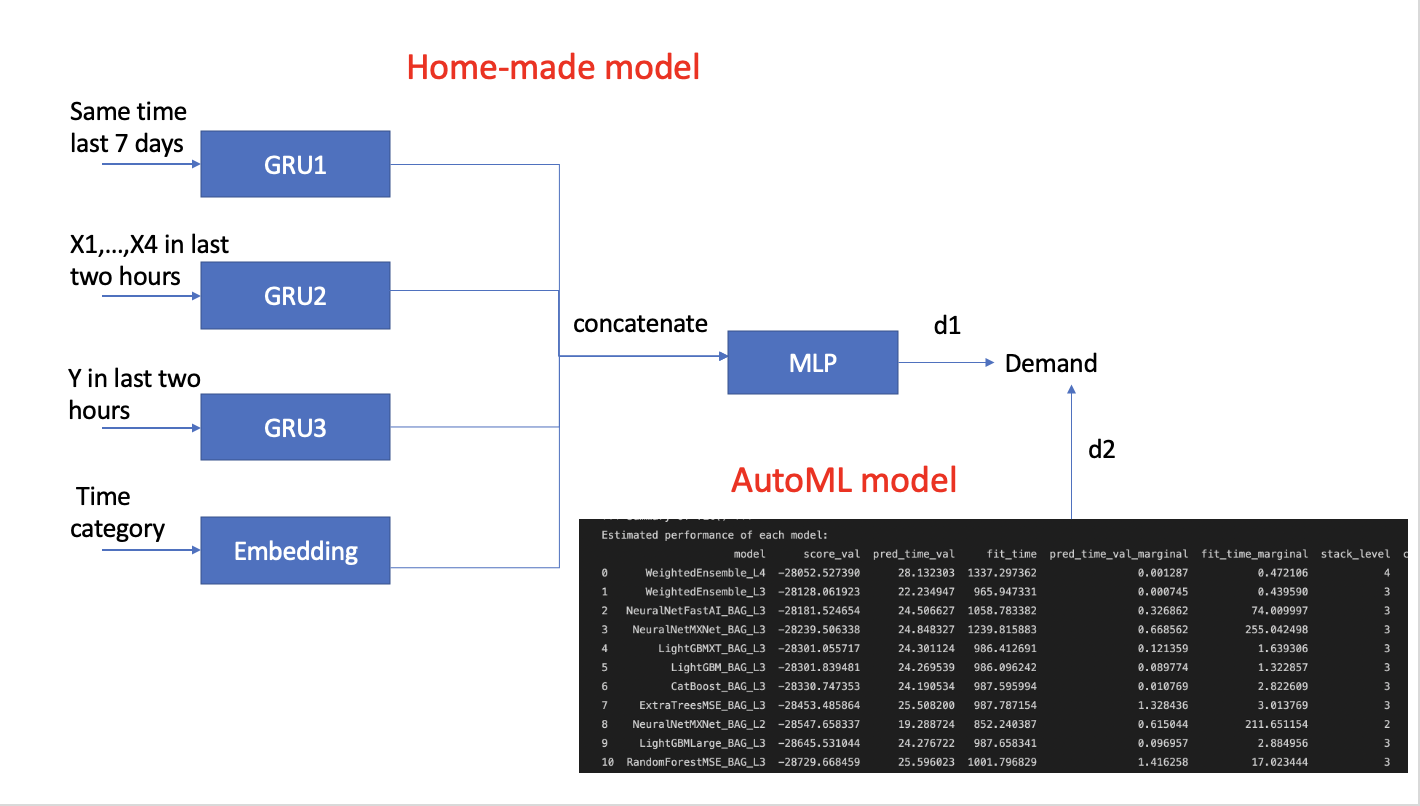

We use a recurrent neural network, specifically a GRU, to handle the time-series data. In addition, our final prediction is an ensemble of the AutoML model and our home-made model, which makes the prediction more accurate.

- Creators such as journalists, vloggers, and commentators need to follow trends closely, but they also need time to complete their work, so their schedules are tight. For example, a vlogger needs time to write scripts, direct scenes, and edit video; if we can predict hot topics for them in advance, they gain time to create their work.

- Another issue is that creators may run out of ideas. We plan to build an ‘inspiration pool’ for them: our prediction model identifies several topics that are likely to become hot in the future. We can also predict expected performance, such as views, for a topic the customer selects.

- Because this ‘inspiration pool’ is built from predicted hot topics, our customers (e.g. vloggers) are very likely to become internet sensations.

- Feature Engineering

Each training sample provides only 5 values [time, x1, x2, x3, x4], which does not capture the time-series information. To solve this problem, we construct additional features ourselves. The first feature is the same time of day over the last 7 days (for example, if the time is 01-30 11:10, the feature covers 01-23 11:10, 01-24 11:10, ..., 01-29 11:10). The second feature is the time category (00:00 as 0, 00:10 as 1, ..., 23:50 as 143). The third feature is X1 to X4 over the last two hours, and the fourth feature is the demand over the last two hours.
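The time indexing behind these features can be sketched as follows. This is a minimal illustration, not our notebook code: the helper names are hypothetical, and it assumes that "last two hours" means the 12 ten-minute slots strictly before the current time.

```python
from datetime import datetime, timedelta
from typing import List

SLOT_MINUTES = 10  # the categories 0..143 imply 10-minute slots


def time_category(ts: datetime) -> int:
    """Map a timestamp to its slot of the day: 00:00 -> 0, 00:10 -> 1, ..., 23:50 -> 143."""
    return (ts.hour * 60 + ts.minute) // SLOT_MINUTES


def same_time_previous_days(ts: datetime, days: int = 7) -> List[datetime]:
    """Same clock time on each of the previous `days` days, oldest first."""
    return [ts - timedelta(days=d) for d in range(days, 0, -1)]


def last_two_hours(ts: datetime) -> List[datetime]:
    """The ten-minute slots in the two hours before ts, oldest first (assumed to exclude ts)."""
    steps = 120 // SLOT_MINUTES
    return [ts - timedelta(minutes=SLOT_MINUTES * k) for k in range(steps, 0, -1)]
```

The returned timestamps can then be used as lookup keys into the demand and X1 to X4 series to collect the actual feature values.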

- Preprocessing

Because the predicted value is demand, we work on a log scale, since we care about percentage differences rather than absolute ones. We also normalize the first, third, and fourth features using the formula (x - mean) / (max - min) and convert the time category to a one-hot vector.
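A minimal sketch of these three steps is shown below. The function names are hypothetical, and using `log1p` (so that zero demand stays defined) is an assumption rather than something stated above. The log scale matches the percentage view because a difference of logs equals the log of a ratio.

```python
import math
from typing import List


def log_scale(demand: float) -> float:
    """Log-transform demand. log1p is an assumption here so that demand = 0 is valid."""
    return math.log1p(demand)


def mean_normalize(values: List[float]) -> List[float]:
    """Apply (x - mean) / (max - min) to every value in the list."""
    mean = sum(values) / len(values)
    spread = max(values) - min(values)
    if spread == 0:
        return [0.0 for _ in values]  # constant feature: avoid division by zero
    return [(v - mean) / spread for v in values]


def one_hot(category: int, num_classes: int = 144) -> List[int]:
    """Encode a time category (0..143) as a one-hot vector."""
    vec = [0] * num_classes
    vec[category] = 1
    return vec
```

In practice the mean, max, and min would be computed on the training split only and reused for validation and test data.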

- Training

We use cosine annealing as the learning-rate scheduler and Adam as the optimizer, together with early stopping, dropout, and weight decay to avoid overfitting. We split the training dataset into train/validation/test sets for evaluation and train for 35 epochs.
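To illustrate two of these ingredients, here is a framework-free sketch of a cosine annealing schedule and an early-stopping check. The maximum learning rate, minimum learning rate, and patience are placeholder values chosen for illustration, not the settings we trained with; only the 35 epochs comes from the description above.

```python
import math


def cosine_annealing_lr(epoch: int, total_epochs: int = 35,
                        lr_max: float = 1e-3, lr_min: float = 0.0) -> float:
    """Learning rate that decays from lr_max to lr_min along half a cosine wave."""
    cos_term = math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cos_term)


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, `cosine_annealing_lr(epoch)` would set the optimizer's learning rate at the start of each epoch, and `step(val_loss)` would be called after each validation pass.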

- Ensembling

For the final prediction, we ensemble the AutoML model and the home-made GRU model to achieve better performance.
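One simple way to combine two models is a weighted average of their predictions. The sketch below assumes that approach and an equal weighting purely for illustration; the function name, the argument names, and the weight are hypothetical, and the exact combination rule is not specified above.

```python
from typing import List


def ensemble(automl_pred: List[float], gru_pred: List[float],
             weight: float = 0.5) -> List[float]:
    """Weighted average of two prediction lists; `weight` is the AutoML share (assumed)."""
    if len(automl_pred) != len(gru_pred):
        raise ValueError("prediction lists must have the same length")
    return [weight * a + (1.0 - weight) * g for a, g in zip(automl_pred, gru_pred)]
```

The weight could be tuned on the validation split, for example by trying a few values and keeping the one with the lowest validation error.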

- Flask Framework

Flask is used in our project to give users a better experience. It builds on Werkzeug and Jinja and supports extensions, which makes developing the website much easier. The application consists of a static folder (for images and other miscellaneous files), a template folder (for the HTML files to render), and several back-end functions that handle the interaction between the front end and the back end.

- Bootstrap

Bootstrap is a front-end toolkit that we use to give the web pages a cleaner layout.

- Ajax

Ajax is a convenient technique for asynchronous data transmission between the web page and the server.

- Highcharts

The Highcharts library provides the tools we need to create polished and reliable data visualizations.