A VTube Studio plugin that bridges facial tracking from iFacialMocap (iOS), enabling the full set of Apple ARKit facial tracking features.

This version is implemented in Python; there is also a version implemented in C#.


Note: The program was developed on Python 3.8.10.

- Clone the repository.

- Run command

pip install -r requirements.txtto restore all the dependencies. - Make sure both your iPhone and PC are on the same network.

- Launch both VtubeStudio on your PC and iFacialMocap on your iPhone.

- Make sure the

VTube Studio APIis enabled, where the port should be 8001. - Launch the Bridging Plugin by command

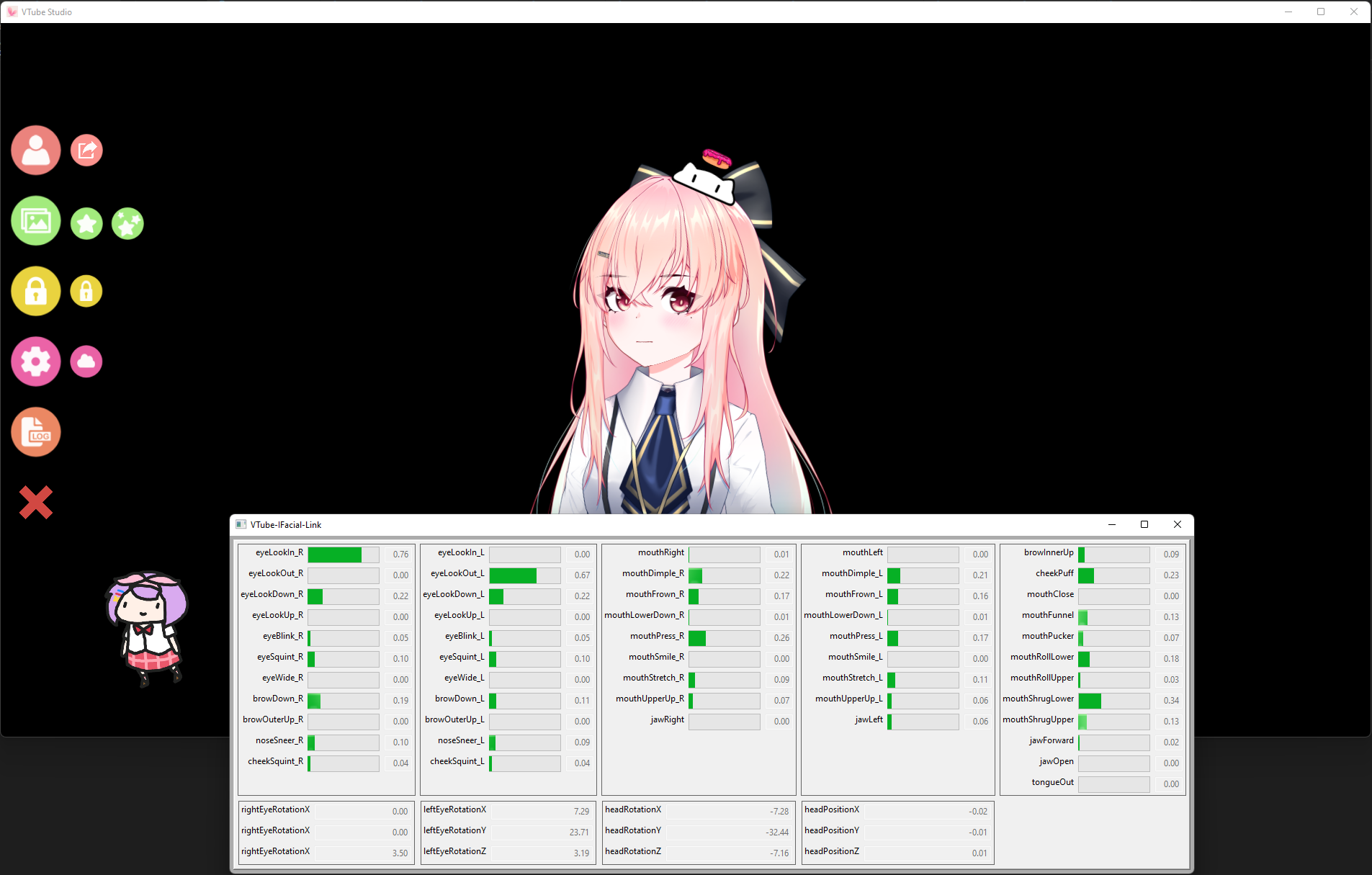

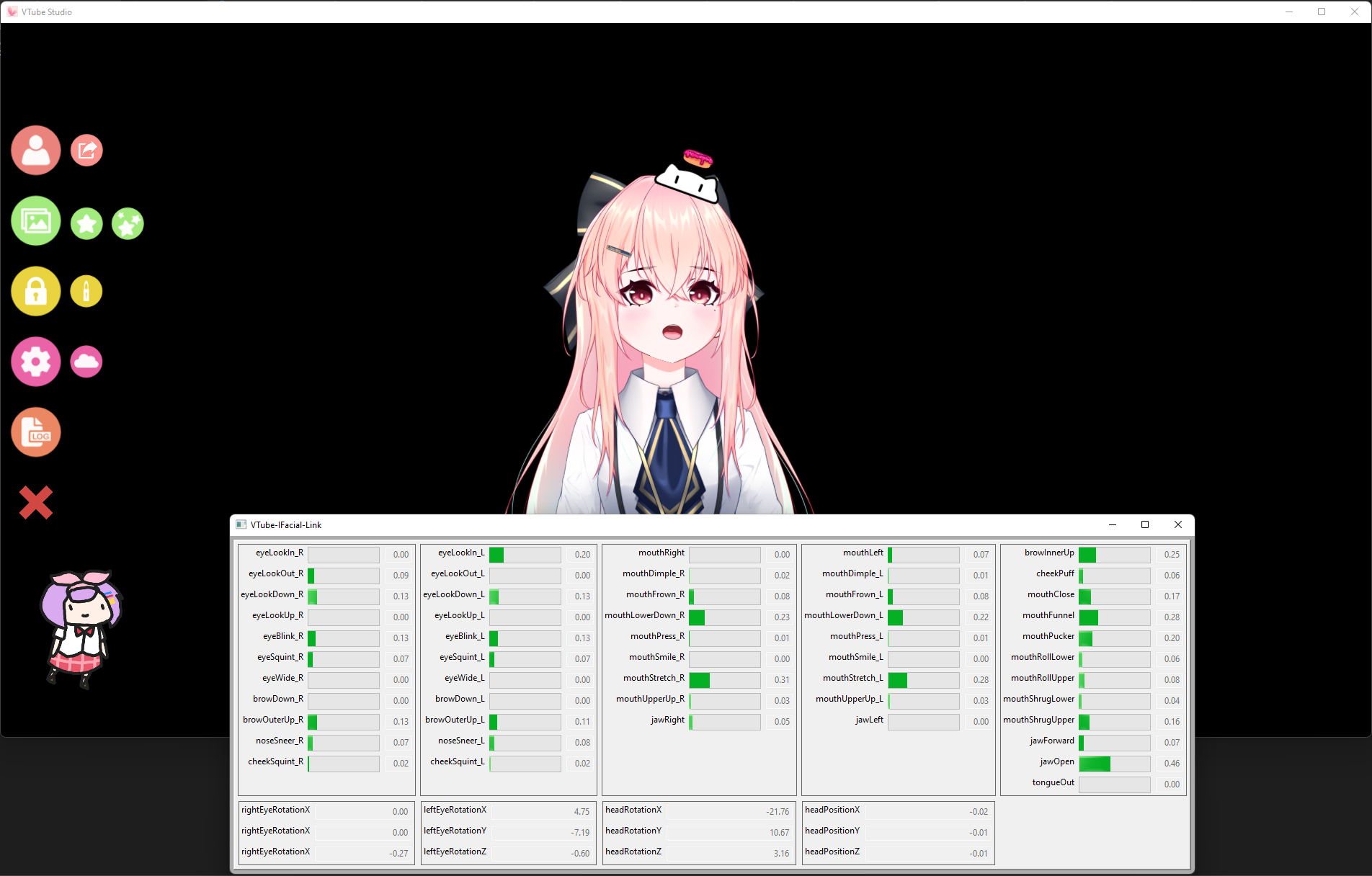

python main.py -c <iPhone's IP Address>, where<iPhone's IP Address>is the address shown in the iFacialMocap. - You should see a window showing all of the captured parameters and VtubeStudio should detect the plugin.
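The plugin communicates with VTube Studio through the VTube Studio public API. This API is a WebSocket interface that listens on the port configured above.

The following is a minimal sketch of the two authentication messages a plugin sends when it first connects. It assumes the standard API message envelope (`apiName`, `apiVersion`, `requestID`, `messageType`, `data`). The plugin name, the developer name and the helper functions are hypothetical and are not taken from this repository's `main.py`.

```python
import json
import uuid
from typing import Optional

# Assumes VTube Studio runs on the same PC, with the API port set to 8001.
VTS_URL = "ws://localhost:8001"

# Hypothetical plugin identity; VTube Studio shows it in its permission popup.
PLUGIN_NAME = "iFacialMocap Bridge"
PLUGIN_DEVELOPER = "Example Developer"


def vts_request(message_type: str, data: Optional[dict] = None) -> str:
    """Wrap a payload in the VTube Studio public API envelope as a JSON string."""
    message = {
        "apiName": "VTubeStudioPublicAPI",
        "apiVersion": "1.0",
        "requestID": uuid.uuid4().hex,  # any unique string; echoed back in the response
        "messageType": message_type,
    }
    if data is not None:
        message["data"] = data
    return json.dumps(message)


def token_request() -> str:
    """First call: ask VTube Studio for a token (the user must allow the plugin)."""
    return vts_request(
        "AuthenticationTokenRequest",
        {"pluginName": PLUGIN_NAME, "pluginDeveloper": PLUGIN_DEVELOPER},
    )


def auth_request(token: str) -> str:
    """Second call: authenticate this session with a previously received token."""
    return vts_request(
        "AuthenticationRequest",
        {
            "pluginName": PLUGIN_NAME,
            "pluginDeveloper": PLUGIN_DEVELOPER,
            "authenticationToken": token,
        },
    )
```

These JSON strings would be sent over a WebSocket connection to `VTS_URL`, for example with the third-party `websockets` package (not shown). The first token request triggers the permission popup in VTube Studio. The returned token can be stored and reused in later `AuthenticationRequest` calls.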

Default VTube Studio parameters:

- FacePositionX

- FacePositionY

- FacePositionZ

- FaceAngleX

- FaceAngleY

- FaceAngleZ

- MouthSmile

- MouthOpen

- Brows

- TongueOut

- EyeOpenLeft

- EyeOpenRight

- EyeLeftX

- EyeLeftY

- EyeRightX

- EyeRightY

- CheekPuff

- FaceAngry

- BrowLeftY

- BrowRightY

- MouthX

ARKit blendshape parameters:

- EyeBlinkLeft

- EyeLookDownLeft

- EyeLookInLeft

- EyeLookOutLeft

- EyeLookUpLeft

- EyeSquintLeft

- EyeWideLeft

- EyeBlinkRight

- EyeLookDownRight

- EyeLookInRight

- EyeLookOutRight

- EyeLookUpRight

- EyeSquintRight

- EyeWideRight

- JawForward

- JawLeft

- JawRight

- JawOpen

- MouthClose

- MouthFunnel

- MouthPucker

- MouthLeft

- MouthRight

- MouthSmileLeft

- MouthSmileRight

- MouthFrownLeft

- MouthFrownRight

- MouthDimpleLeft

- MouthDimpleRight

- MouthStretchLeft

- MouthStretchRight

- MouthRollLower

- MouthRollUpper

- MouthShrugLower

- MouthShrugUpper

- MouthPressLeft

- MouthPressRight

- MouthLowerDownLeft

- MouthLowerDownRight

- MouthUpperUpLeft

- MouthUpperUpRight

- BrowDownLeft

- BrowDownRight

- BrowInnerUp

- BrowOuterUpLeft

- BrowOuterUpRight

- CheekPuff

- CheekSquintLeft

- CheekSquintRight

- NoseSneerLeft

- NoseSneerRight

- TongueOut
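The following is a minimal sketch of how the ARKit values above might travel from iFacialMocap into VTube Studio. It rests on two assumptions, and both are illustrative rather than taken from this plugin's actual code:

- **Packet layout:** iFacialMocap's blendshape section is a `|`-separated list of `name-value` pairs with values from 0 to 100 (for example `eyeBlink_L-12`), followed by a `=`-prefixed head and eye section.
- **Name mapping:** the `_L`/`_R` suffixes map to `Left`/`Right`.

The parsed values are packed into a VTube Studio `InjectParameterDataRequest`.

```python
import json
from typing import Dict


def to_param_id(name: str) -> str:
    """Hypothetical name mapping, e.g. 'eyeBlink_L' -> 'EyeBlinkLeft'."""
    if name.endswith("_L"):
        name = name[:-2] + "Left"
    elif name.endswith("_R"):
        name = name[:-2] + "Right"
    return name[:1].upper() + name[1:]


def parse_blendshapes(packet: str) -> Dict[str, float]:
    """Parse the assumed 'name-value|name-value|...=head#...' packet layout.

    Only the blendshape part before the first '=' is read; values are
    rescaled from the assumed 0-100 range to 0.0-1.0.
    """
    blendshape_part = packet.split("=", 1)[0]
    values: Dict[str, float] = {}
    for item in blendshape_part.split("|"):
        name, sep, raw = item.rpartition("-")
        if not sep or not name:
            continue  # empty item or not a name-value pair
        try:
            values[to_param_id(name)] = float(raw) / 100.0
        except ValueError:
            continue  # skip malformed numbers
    return values


def inject_request(values: Dict[str, float], face_found: bool = True) -> dict:
    """Build a VTube Studio InjectParameterDataRequest message as a dict."""
    return {
        "apiName": "VTubeStudioPublicAPI",
        "apiVersion": "1.0",
        "requestID": "inject-blendshapes",  # any string; echoed in the response
        "messageType": "InjectParameterDataRequest",
        "data": {
            "faceFound": face_found,
            "mode": "set",  # overwrite parameter values instead of adding to them
            "parameterValues": [
                {"id": param_id, "value": round(value, 4)}
                for param_id, value in sorted(values.items())
            ],
        },
    }


if __name__ == "__main__":
    sample = "eyeBlink_L-12|jawOpen-40|=head#-21.5,-6.0,-6.6,0.0,0.0,0.0|"
    print(json.dumps(inject_request(parse_blendshapes(sample)), indent=2))
```

A name in this list that is not already a VTube Studio parameter has to be created before values can be injected into it, for example with a `ParameterCreationRequest`. The message dict would then be serialized with `json.dumps` and sent over the API WebSocket.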

Planned features:

- Use an ML model to detect smiling and anger from multiple parameters.

- Build a standalone face tracking link app for iOS. ref

- Add a hand tracking feature. ref-1 ref-2

The model in the screenshots was created by 猫旦那.