Project Page | Paper | Data

Official PyTorch implementation of the paper "NeuDA: Neural Deformable Anchor for High-Fidelity Implicit Surface Reconstruction", accepted to CVPR 2023.

We present the Deformable Anchors representation and a simple hierarchical position encoding strategy. The former maintains learnable anchor points at vertices to enhance the capability of the neural implicit model in handling complicated geometric structures, and the latter explores the complementarity of high-frequency and low-frequency geometry properties in the multi-level anchor grid structure.

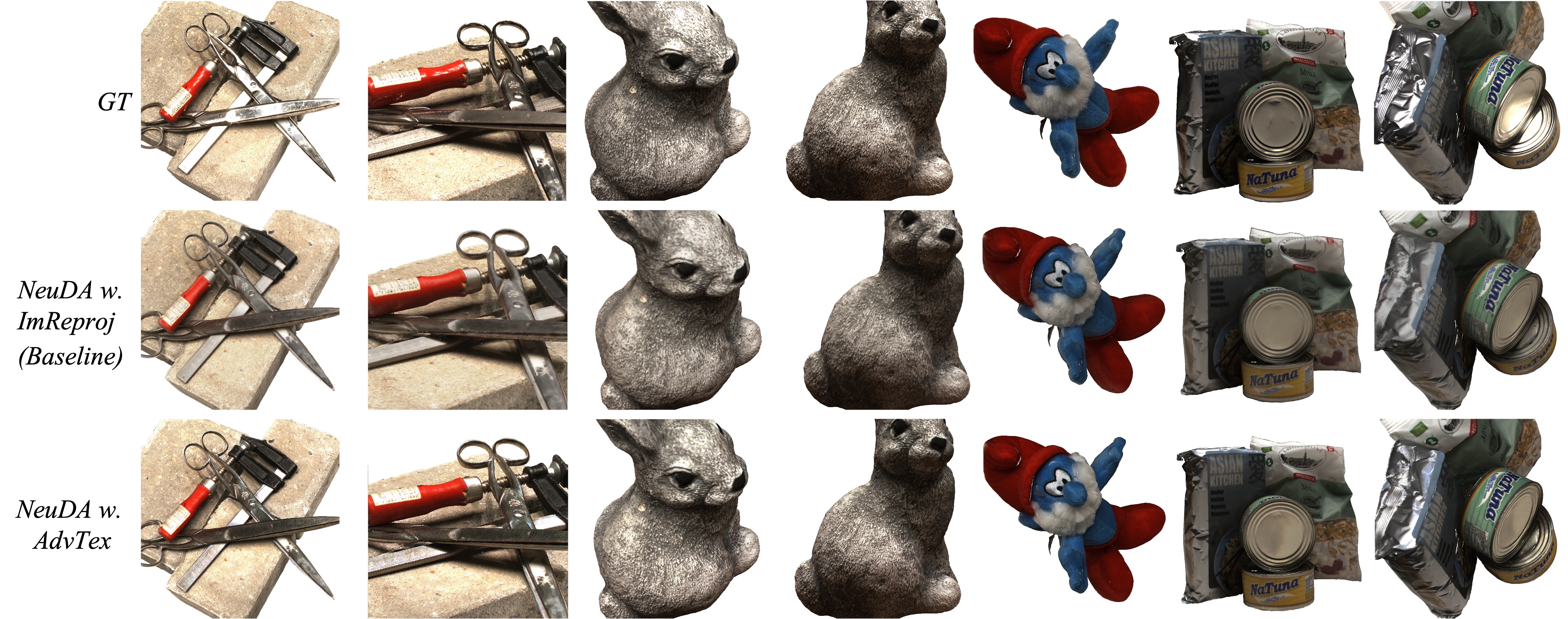

This repository implements the training / evaluation pipeline of our paper and provides a script to generate a textured mesh. In addition, based on the surface reconstructed by NeuDA, we adopt an adversarial texture optimization method to recover fine-detail texture.

Clone this repository and install the environment.

$ git clone https://github.com/3D-FRONT-FUTURE/NeuDA.git

$ cd NeuDA

$ pip install -r requirements.txt

For adversarial texture optimization, you also need to install Blender 3.4, which is used to generate the initial UV map and texture. Make sure that blender-3.4 is correctly set in your environment variables.

Compile the CUDA rendering and C++ libraries.

$ sudo apt-get install libglm-dev libopencv-dev

$ cd NeuDA/models/texture/Rasterizer

$ ./compile.sh

$ cd ../CudaRender

$ ./compile.sh

- DTU: data_DTU.zip for training, DTU Point Clouds for evaluation.

- Please install COLMAP.

- Follow the first step of "General step-by-step usage" in LLFF to recover camera poses.

- Use the script tools/preprocess_llff.py to generate cameras_sphere.npz.

The data should be organized as follows:

    <case_name>
    |-- cameras_sphere.npz    # camera parameters
    |-- image
        |-- 000.png           # target image for each view
        |-- 001.png
        ...
    |-- mask
        |-- 000.png           # target mask for each view (for the unmasked setting, set all pixels to 255)
        |-- 001.png
        ...
    |-- sparse
        |-- points3D.bin      # sparse point cloud
        ...
    |-- poses_bounds.npy      # camera extrinsic & intrinsic parameters; see LLFF for details
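
Before launching a long training run, it can help to confirm that a case directory matches this layout. The following is a minimal sketch, not part of the repository: `check_case_layout` is a hypothetical helper, and it assumes that every file in image/ should have a mask with the same file name. It does not check sparse/ or poses_bounds.npy.

```python
from pathlib import Path


def check_case_layout(case_dir):
    """Return a list of human-readable problems found in a case directory.

    Hypothetical helper: the one-mask-per-image rule is an assumption
    read off the directory tree, not something the repository enforces.
    """
    case_dir = Path(case_dir)
    problems = []

    # cameras_sphere.npz holds the camera parameters for every view.
    if not (case_dir / "cameras_sphere.npz").is_file():
        problems.append("missing cameras_sphere.npz")

    # image/ and mask/ should both exist; collect their PNG file names.
    names = {}
    for sub in ("image", "mask"):
        folder = case_dir / sub
        if folder.is_dir():
            names[sub] = {p.name for p in folder.glob("*.png")}
        else:
            problems.append(f"missing {sub}/ directory")
            names[sub] = set()

    # Assumed rule: each image has a mask with the same file name.
    for name in sorted(names["image"] - names["mask"]):
        problems.append(f"image/{name} has no matching mask")
    for name in sorted(names["mask"] - names["image"]):
        problems.append(f"mask/{name} has no matching image")

    return problems
```

An empty list means no problems were found, so a call such as `check_case_layout("path/to/case")` can be placed in front of the training command.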

The cameras_sphere.npz file follows the data format of IDR, where world_mat_xx denotes the world-to-image projection matrix and scale_mat_xx denotes the normalization matrix.
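
To illustrate how these two matrices are typically combined, here is a minimal NumPy sketch. It rests on assumptions that should be checked against your own file: the keys are named `world_mat_0`, `world_mat_1`, ... (and likewise for `scale_mat`), each entry is a 4x4 matrix, and `scale_mat` maps normalized coordinates to world coordinates, as in IDR-style loaders. `load_projections` and `project` are hypothetical helpers, not functions from this repository.

```python
import numpy as np


def load_projections(npz_path, n_views):
    """Return one 3x4 projection matrix per view, in normalized coordinates.

    Assumes keys world_mat_<i> and scale_mat_<i>, each a 4x4 matrix.
    """
    projections = []
    with np.load(npz_path) as cameras:
        for i in range(n_views):
            world_mat = cameras[f"world_mat_{i}"].astype(np.float32)  # world -> image
            scale_mat = cameras[f"scale_mat_{i}"].astype(np.float32)  # normalized -> world (assumed)
            # Chain the two so that points in the normalized frame
            # map directly to pixel coordinates.
            projections.append((world_mat @ scale_mat)[:3, :4])
    return projections


def project(P, point):
    """Project a 3D point with a 3x4 matrix and return (u, v) in pixels."""
    u, v, w = P @ np.append(point, 1.0)
    return u / w, v / w
```

With the matrices in hand, `project(P, point)` gives the pixel location of a point expressed in the normalized frame, which is a quick way to sanity-check recovered poses against the input images.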

- Training and evaluation with mask

$ ./traineval.sh $case_name $conf $gpu_id

For example: ./traineval.sh dtu_scan24 ./conf/neuda_wmask.conf 1

- Training and evaluation without mask

$ ./traineval.sh $case_name $conf $gpu_id

For example: ./traineval.sh dtu_scan24 ./conf/neuda_womask.conf 1

The corresponding log can be found in exp/<case_name>/<exp_name>/.

- Evaluation with pretrained models.

$ python extract_mesh.py --case $case --conf $conf --eval_metric

For example: python extract_mesh.py --case dtu_scan24 --conf ./conf/neuda_womask.conf --eval_metric

- [Optional] Given the reconstructed surface by NeuDA, training with adversarial texture optimization:

$ ./texture_opt.sh $case_name $conf $gpu_id [$in_mesh_path] [$out_mesh_path]

For example: ./texture_opt.sh dtu_scan24 ./conf/texture_opt.conf 1

If you find the code useful for your research, please cite our paper.

@inproceedings{cai2023neuda,
  title={NeuDA: Neural Deformable Anchor for High-Fidelity Implicit Surface Reconstruction},
  author={Cai, Bowen and Huang, Jinchi and Jia, Rongfei and Lv, Chengfei and Fu, Huan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2023}
}

Our code is heavily based on NeuS. Some of the evaluation code and the CUDA rendering code are borrowed from NeuralWarp and AdversarialTexture, respectively. Thanks to the authors for their great work. Please check out their papers for more details.