This repository contains a PyTorch implementation of the paper 'Learning Deep Models for Face Anti-Spoofing: Binary or Auxiliary Supervision' by Yaojie Liu, Amin Jourabloo, and Xiaoming Liu (Michigan State University).

The model discriminates between video sequences showing live faces and sequences showing spoof faces, so it can be applied to biometric security systems.

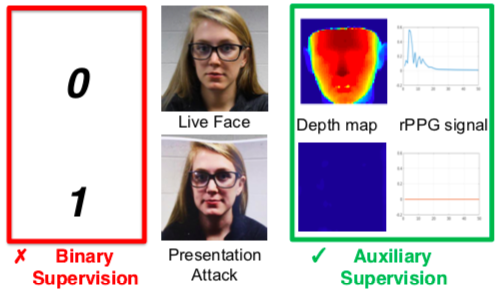

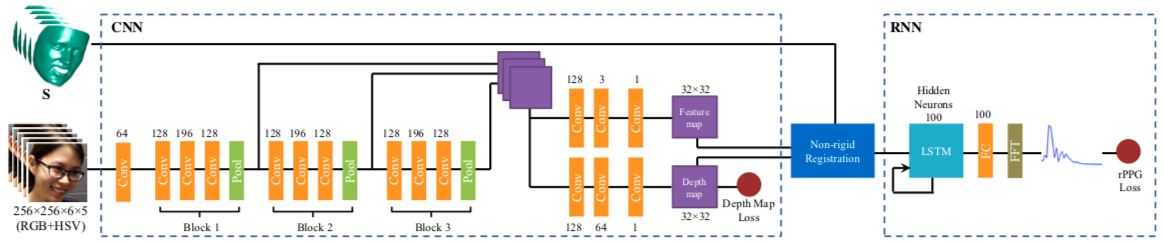

The innovative aspect of this approach is that the decision rests on two explicit criteria: the estimated depth map and the estimated rPPG signal.
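A minimal sketch of how the two criteria could be fused into a single liveness score. It assumes, following the auxiliary supervision idea of the paper, that spoof faces are pushed toward an all-zero depth map and a zero rPPG signal, so the "energy" of both estimates is high only for live faces. The function names, the weight `lam`, and the `threshold` are hypothetical and are not taken from this repository; plain Python lists stand in for the tensors the network would produce.

```python
from typing import Iterable, Sequence


def squared_l2(values: Iterable[float]) -> float:
    """Sum of squares of a flat sequence of numbers."""
    return sum(v * v for v in values)


def liveness_score(
    depth_map: Sequence[Sequence[float]],
    rppg: Sequence[float],
    lam: float = 1.0,  # hypothetical weight, to be tuned on validation data
) -> float:
    """Combine the rPPG energy and the weighted depth map energy."""
    depth_energy = squared_l2(v for row in depth_map for v in row)
    rppg_energy = squared_l2(rppg)
    return rppg_energy + lam * depth_energy


def is_live(
    depth_map: Sequence[Sequence[float]],
    rppg: Sequence[float],
    threshold: float,  # hypothetical decision threshold
    lam: float = 1.0,
) -> bool:
    """Classify a sequence as live when its score exceeds the threshold."""
    return liveness_score(depth_map, rppg, lam) > threshold
```

With this formulation, a flat depth map together with a flat rPPG signal yields a score of zero, which is the behaviour expected for a spoof attack such as a printed photo.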

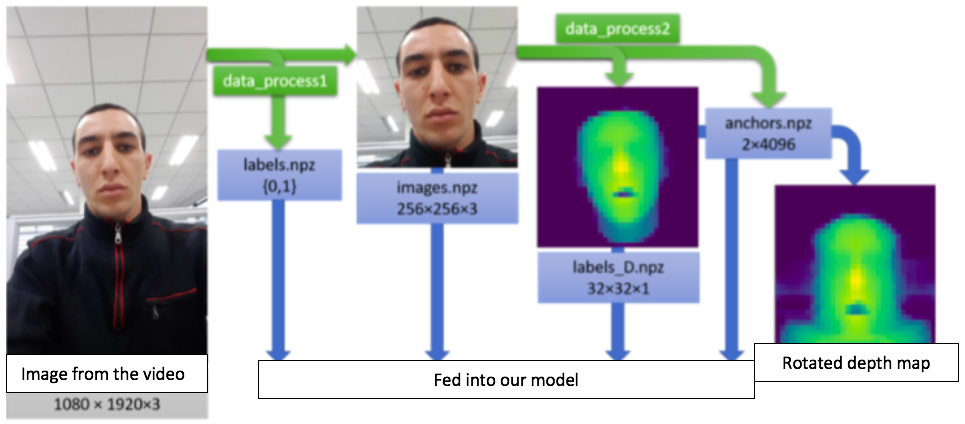

The model is trained on the OULU dataset. The labels were generated with 'Face Alignment in Full Pose Range: A 3D Total Solution' for the depth maps and with 'Heartbeat: Measuring heart rate using remote photoplethysmography (rPPG)' for the heartbeat signal.

Instead of using the depth map labels as coordinates for the rotation of the face (in the non-rigid registration layer), we train a multilayer perceptron, called 'Anti_spoof_net_rotateur', to perform the rotation. With this improvement, the system is fully autonomous.