

This is the official implementation of "Phishpedia: A Hybrid Deep Learning Based Approach to Visually Identify Phishing Webpages" (USENIX Security 2021).


Existing reference-based phishing detectors:

- ❌ Lack of interpretability: they only give a binary decision (legit or phish)

- ❌ Not robust against distribution shift, because the classifier is biased towards the phishing training set

- ❌ Lack of a large-scale phishing benchmark dataset


The contributions of our paper:

- ✅ We propose a phishing identification system Phishpedia, which has high identification accuracy and low runtime overhead, outperforming the relevant state-of-the-art identification approaches.

- ✅ We are the first to propose a consistency-based method for phishing detection, in place of the traditional classification-based method. We investigate the consistency between the webpage domain and its brand intention, and the detected brand intention provides a visual explanation for the phishing decision.

- ✅ Phishpedia is NOT trained on any phishing dataset, addressing the potential test-time distribution shift problem.

- ✅ We release a phishing benchmark dataset of 30k websites, each annotated with its URL, HTML, screenshot, and target brand: https://drive.google.com/file/d/12ypEMPRQ43zGRqHGut0Esq2z5en0DH4g/view?usp=drive_link.

- ✅ We set up a phishing monitoring system that investigates emerging domains fed from CertStream. We have discovered 1,704 real phishing websites, of which 1,133 are zero-days not reported by industrial antivirus engines (VirusTotal).
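The consistency idea can be illustrated with a minimal sketch. It assumes the brand intention has already been recognized and that a hypothetical `domain_map` dictionary maps each brand to its legitimate domains; `is_consistent` is an illustrative helper, not part of Phishpedia's code. A page is only suspicious when a brand is recognized and the URL's host belongs to none of that brand's domains.

```python
from typing import Dict, List, Optional
from urllib.parse import urlsplit


def is_consistent(url: str, brand: Optional[str], domain_map: Dict[str, List[str]]) -> bool:
    """Return True if the URL's host agrees with the page's brand intention.

    Hypothetical helper: `domain_map` is assumed to map brand -> legitimate domains.
    """
    if brand is None:
        # No brand intention was recognized, so there is nothing to contradict.
        return True
    host = (urlsplit(url).hostname or "").lower()
    for domain in domain_map.get(brand, []):
        domain = domain.lower()
        # Accept the brand's own domain and its subdomains, but not look-alikes
        # such as "evilpaypal.com", hence the leading dot in the suffix test.
        if host == domain or host.endswith("." + domain):
            return True
    return False


domain_map = {"PayPal": ["paypal.com"]}  # hypothetical excerpt
print(is_consistent("https://www.paypal.com/signin", "PayPal", domain_map))     # True
print(is_consistent("http://paypal-login.example.net/", "PayPal", domain_map))  # False
```

This sketch compares host names only; it does not follow redirects or account for other domains a brand might legitimately use.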

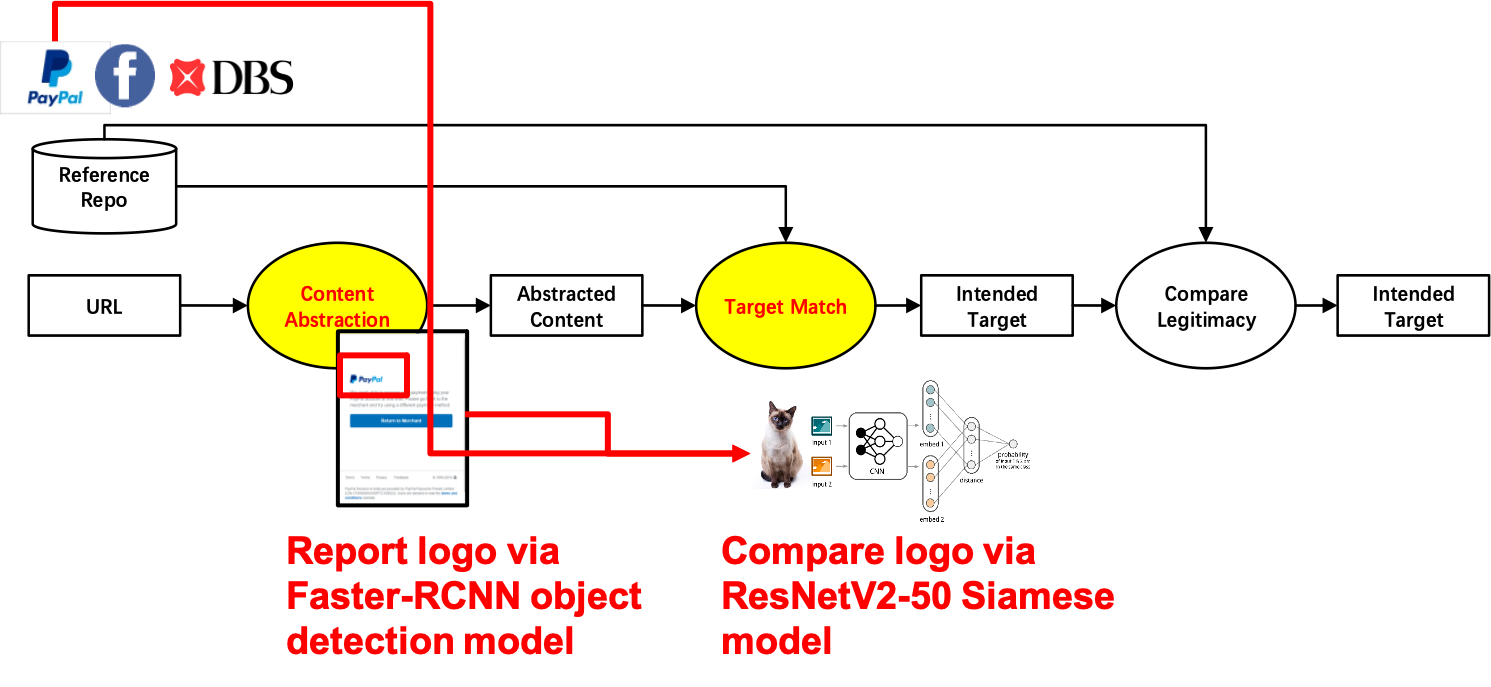

Input: A URL and its screenshot

Output: Phish/Benign, Phishing target


Step 1: Enter the Deep Object Detection Model to get the predicted logos and input boxes (the input boxes are not used for later prediction, only for explanation)


Step 2: Enter the Deep Siamese Model

- If the Siamese model reports no target, return Benign, None
- Else, if the Siamese model reports a target, return Phish, Phishing target
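Step 2 can be sketched as follows under stated assumptions: each logo from Step 1 has already been cropped and embedded by the Siamese backbone, the reference logos in the target list have been embedded the same way, and a brand is reported as the phishing target only when the best cosine similarity clears a threshold and the URL's host is not one of that brand's domains. The function names, the 0.8 threshold, and the `domain_map` layout are hypothetical placeholders, not values or interfaces taken from Phishpedia.

```python
from typing import Dict, List, Optional, Sequence, Tuple
from urllib.parse import urlsplit

import numpy as np


def match_brand(logo_embs, ref_embs, ref_brands: Sequence[str],
                threshold: float = 0.8) -> Optional[str]:
    """Return the brand of the most similar reference logo, or None below the threshold.

    logo_embs: (n_logos, d) embeddings of the logos found in Step 1 (assumed precomputed).
    ref_embs:  (n_refs, d) embeddings of the reference logos; ref_brands[k] labels row k.
    """
    logo_embs = np.asarray(logo_embs, dtype=float)
    ref_embs = np.asarray(ref_embs, dtype=float)
    if logo_embs.size == 0:
        return None  # Step 1 detected no logo
    # L2-normalise rows so that a dot product equals cosine similarity.
    a = logo_embs / np.linalg.norm(logo_embs, axis=1, keepdims=True)
    b = ref_embs / np.linalg.norm(ref_embs, axis=1, keepdims=True)
    sims = a @ b.T  # shape (n_logos, n_refs)
    i, j = np.unravel_index(np.argmax(sims), sims.shape)
    return ref_brands[j] if sims[i, j] >= threshold else None


def step2(url: str, logo_embs, ref_embs, ref_brands: Sequence[str],
          domain_map: Dict[str, List[str]], threshold: float = 0.8) -> Tuple[str, Optional[str]]:
    """Return ("Phish", target) or ("Benign", None), mirroring the two cases of Step 2."""
    brand = match_brand(logo_embs, ref_embs, ref_brands, threshold)
    if brand is None:
        return "Benign", None  # Siamese reports no target
    host = (urlsplit(url).hostname or "").lower()
    if any(host == d or host.endswith("." + d) for d in domain_map.get(brand, [])):
        return "Benign", None  # the page is served from the brand's own domain
    return "Phish", brand


ref_brands = ["Adobe", "PayPal"]
ref_embs = [[1.0, 0.0], [0.0, 1.0]]  # toy 2-D embeddings, one per reference logo
domain_map = {"Adobe": ["adobe.com"], "PayPal": ["paypal.com"]}
print(step2("http://secure-login.example.net/", [[0.1, 0.99]], ref_embs, ref_brands, domain_map))
# ('Phish', 'PayPal')
```

For simplicity the sketch takes the single best (logo, reference) pair over all detected logos; a real system may instead visit logos in order of detection confidence and stop at the first match.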

📌 Move everything under expand_targetlist/expand_targetlist/ up to expand_targetlist/ so that there are no nested directories.
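The nested-directory fix can be scripted. This is a hedged sketch that assumes the reference list was unpacked to models/expand_targetlist/ with an extra expand_targetlist/ level inside it; `flatten_targetlist` is a hypothetical helper, and it refuses to overwrite existing entries rather than guessing which copy to keep.

```python
import shutil
from pathlib import Path


def flatten_targetlist(targetlist_dir) -> int:
    """Move the contents of <targetlist_dir>/expand_targetlist/ up one level.

    Returns the number of entries moved (0 if there is no nested folder).
    """
    root = Path(targetlist_dir)
    nested = root / "expand_targetlist"
    if not nested.is_dir():
        return 0
    entries = sorted(nested.iterdir())
    # Refuse to overwrite anything: check every destination before moving.
    clashes = [e.name for e in entries if (root / e.name).exists()]
    if clashes:
        raise FileExistsError(f"already present in {root}: {clashes}")
    for entry in entries:
        shutil.move(str(entry), str(root / entry.name))
    nested.rmdir()  # the nested folder is now empty
    return len(entries)
```

Calling flatten_targetlist("models/expand_targetlist") once performs the move; a second call finds no nested folder and returns 0.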

- models/
  - rcnn_bet365.pth
  - faster_rcnn.yaml
  - resnetv2_rgb_new.pth.tar
  - expand_targetlist/
    - Adobe/
    - Amazon/
    - ......
  - domain_map.pkl

- logo_recog.py: Deep Object Detection Model

- logo_matching.py: Deep Siamese Model

- configs.yaml: Configuration file

- phishpedia.py: Main script
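To check that the reference list matches this layout, a short script can index the brand folders under expand_targetlist/ and load domain_map.pkl. This is a sketch under assumptions: the logos are taken to be .png/.jpg/.jpeg files, and domain_map.pkl is assumed to be a pickled dictionary keyed by brand name; `load_reference_list` is a hypothetical helper.

```python
import pickle
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumed logo file types


def load_reference_list(models_dir):
    """Return (brand -> logo paths, domain map, brands missing from the domain map)."""
    models_dir = Path(models_dir)
    brands = {}
    # Each sub-folder of expand_targetlist/ is treated as one protected brand.
    for brand_dir in sorted((models_dir / "expand_targetlist").iterdir()):
        if brand_dir.is_dir():
            brands[brand_dir.name] = sorted(
                p for p in brand_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
            )
    # Assumed layout: a pickled dict mapping brand name -> list of legitimate domains.
    with open(models_dir / "domain_map.pkl", "rb") as handle:
        domain_map = pickle.load(handle)
    missing = sorted(set(brands) - set(domain_map))
    return brands, domain_map, missing
```

Because unpickling can execute arbitrary code, only load a domain_map.pkl obtained through setup.sh or another trusted source.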

Requirements:

- Anaconda installed, please refer to the official installation guide: https://docs.anaconda.com/free/anaconda/install/index.html

- Create a local clone of Phishpedia

git clone https://github.com/lindsey98/Phishpedia.git

- Set up the phishpedia conda environment. This step installs the core dependencies of Phishpedia, such as PyTorch and Detectron2, and also downloads the model checkpoints and the brand reference list. It may take some time.

chmod +x ./setup.sh

export ENV_NAME="phishpedia"

./setup.sh

- Activate the phishpedia conda environment:

conda activate phishpedia

- Run in bash:

python phishpedia.py --folder <folder you want to test, e.g. ./datasets/test_sites>

The testing folder should have the following structure:

- test_site_1
  - info.txt (Write the URL)
  - shot.png (Save the screenshot)
- test_site_2
  - info.txt (Write the URL)
  - shot.png (Save the screenshot)
- ......
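Preparing and sanity-checking a testing folder can also be scripted. The sketch below assumes only the layout shown above (one sub-folder per site holding info.txt with the URL and shot.png with the screenshot); `make_test_site` and `iter_test_sites` are hypothetical helpers, not functions provided by phishpedia.py.

```python
from pathlib import Path
from typing import Iterator, Tuple


def make_test_site(folder, name: str, url: str, screenshot_png: bytes) -> Path:
    """Create <folder>/<name>/info.txt (the URL) and <folder>/<name>/shot.png."""
    site = Path(folder) / name
    site.mkdir(parents=True, exist_ok=True)
    (site / "info.txt").write_text(url, encoding="utf-8")
    (site / "shot.png").write_bytes(screenshot_png)
    return site


def iter_test_sites(folder) -> Iterator[Tuple[str, str, Path]]:
    """Yield (site name, URL, screenshot path) for each sub-folder that follows the layout."""
    for site in sorted(Path(folder).iterdir()):
        info, shot = site / "info.txt", site / "shot.png"
        # Skip anything that lacks either info.txt or shot.png.
        if site.is_dir() and info.is_file() and shot.is_file():
            yield site.name, info.read_text(encoding="utf-8").strip(), shot
```

For example, make_test_site("./datasets/test_sites", "test_site_1", url, png_bytes) creates one site, and iterating over iter_test_sites("./datasets/test_sites") lists the sites that follow the expected layout.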

- In our paper, we also implement several phishing detection and identification baselines

- The logo targetlist described in our paper includes 181 brands; in this code repository, we have further expanded it to 277 brands

- For the phish discovery experiment, we obtain the feed from CertStream through phish_catcher and lower its score threshold to 40 so that more suspicious websites are processed; readers can refer to the phish_catcher repository for details

- We use Scrapy for website crawling
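The threshold change amounts to a filter on phish_catcher's per-domain scores. As a hedged sketch, assuming the scores have already been computed by phish_catcher (its scoring rules are not reproduced here) and that a domain is kept when its score is at least the threshold, the selection step might look like this; `select_suspicious` is a hypothetical helper.

```python
from typing import Iterable, List, Tuple

SCORE_THRESHOLD = 40  # the lowered threshold used for the discovery experiment


def select_suspicious(scored: Iterable[Tuple[str, float]],
                      threshold: float = SCORE_THRESHOLD) -> List[str]:
    """Return domains whose score reaches the threshold, most suspicious first."""
    kept = [(domain, score) for domain, score in scored if score >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [domain for domain, _ in kept]
```

For example, select_suspicious([("a.example", 30), ("b.example", 40), ("c.example", 85)]) returns ["c.example", "b.example"]. Lowering the threshold sends more domains to Phishpedia at the cost of more screenshots to crawl and analyze.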

If you find our work useful in your research, please consider citing our paper:

@inproceedings{lin2021phishpedia,

title={Phishpedia: A Hybrid Deep Learning Based Approach to Visually Identify Phishing Webpages},

author={Lin, Yun and Liu, Ruofan and Divakaran, Dinil Mon and Ng, Jun Yang and Chan, Qing Zhou and Lu, Yiwen and Si, Yuxuan and Zhang, Fan and Dong, Jin Song},

booktitle={30th $\{$USENIX$\}$ Security Symposium ($\{$USENIX$\}$ Security 21)},

year={2021}

}

If you have any issues running our code, you can raise an issue or send an email to liu.ruofan16@u.nus.edu, lin_yun@sjtu.edu.cn, and dcsdjs@nus.edu.sg.