This repo was forked and modified from hollance/YOLO-CoreML-MPSNNGraph. Some changes I made:

- Kept only the Core ML part, since that is the only part I am interested in.

- Used the pre-trained YOLOv2 model instead of Tiny YOLO. The YOLOv2 model provides more classes and is more accurate than Tiny YOLO. It is slower, but it can recognize more kinds of objects.

- Dropped the yad2k converter. Instead, I use darkflow to convert the pre-trained YOLO models from Darknet format to TensorFlow, and tf-coreml to convert them from TensorFlow to Core ML.

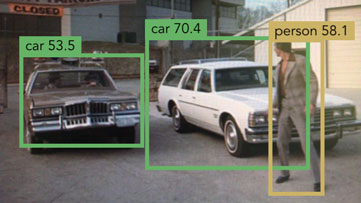

YOLO is an object detection network. It can detect multiple objects in an image and put bounding boxes around them. Read hollance's blog post about YOLO to learn more about how it works.

In this repo you'll find:

- YOLO-CoreML: A demo app that runs the YOLO neural network on Core ML.

- Converter: The scripts needed to convert the original DarkNet YOLO model to Core ML.

To run the app:

- Execute `download.sh` to download the pre-trained model:

  `% sh download.sh`

- Open the xcodeproj file in Xcode 9 and run it on a device with iOS 11 or later installed.

The reported "elapsed" time is how long it takes the YOLO neural net to process a single image. The FPS is the actual throughput achieved by the app.
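The difference between the two numbers can be illustrated with a small Swift sketch. It is not the app's actual code: `PerformanceTracker`, `elapsed(_:)` and `frameCompleted(at:)` are hypothetical names. "Elapsed" is the duration of a single inference call, while FPS is derived from the timestamps of frames the app actually finished, so it also reflects time spent waiting for the camera, drawing results, and any frames that were skipped.

```swift
import Foundation

/// Hypothetical helper (not from the app): separates per-inference latency
/// ("elapsed") from overall throughput (FPS).
struct PerformanceTracker {
    /// Maximum number of recent frame timestamps used for the FPS estimate.
    let windowSize: Int
    /// Timestamps (in seconds) of the most recently completed frames.
    private var frameTimes: [Double] = []

    init(windowSize: Int = 30) {
        // At least two timestamps are needed to compute a rate.
        self.windowSize = max(windowSize, 2)
    }

    /// Runs `work` (e.g. one neural-net prediction) and returns its wall-clock duration in seconds.
    static func elapsed(_ work: () -> Void) -> Double {
        let start = Date()
        work()
        return Date().timeIntervalSince(start)
    }

    /// Records that the app finished handling a frame at `timestamp` (seconds).
    mutating func frameCompleted(at timestamp: Double) {
        frameTimes.append(timestamp)
        if frameTimes.count > windowSize {
            // Keep only the most recent `windowSize` timestamps.
            frameTimes.removeFirst(frameTimes.count - windowSize)
        }
    }

    /// Frames per second over the recorded window; 0 if there is not enough data yet.
    var fps: Double {
        guard frameTimes.count > 1,
              let first = frameTimes.first,
              let last = frameTimes.last,
              last > first else { return 0 }
        return Double(frameTimes.count - 1) / (last - first)
    }
}
```

In this sketch, if `elapsed` reported about 0.02 s per prediction (enough for roughly 50 predictions per second) but the camera only delivered 30 frames per second, `fps` would be expected to settle near 30, because throughput is bounded by the slowest stage rather than by the neural net alone.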

NOTE: Running these kinds of neural networks eats up a lot of battery power. The app can put a limit on the number of times per second it runs the neural net. You can change this in `setUpCamera()` by changing the line `videoCapture.fps = 50` to a smaller number.
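One way such a limit can work is a frame throttle that drops any camera frame arriving sooner than `1 / fps` seconds after the last processed one. This is a minimal sketch under that assumption, not the app's `VideoCapture` implementation: `FrameThrottle` and `shouldProcess(frameAt:)` are hypothetical names, and timestamps are plain seconds (in a real capture pipeline they would presumably come from each sample buffer's presentation timestamp).

```swift
import Foundation

/// Hypothetical FPS limiter: decides whether an incoming camera frame should be
/// sent to the neural net, so that at most `fps` frames per second are processed.
struct FrameThrottle {
    /// Maximum number of frames per second to process.
    var fps: Int
    /// Timestamp (seconds) of the last frame that was accepted for processing.
    private var lastProcessed: Double? = nil

    init(fps: Int) {
        self.fps = fps
    }

    /// Returns true if the frame at `timestamp` should be processed, false if it should be dropped.
    mutating func shouldProcess(frameAt timestamp: Double) -> Bool {
        // Minimum spacing between processed frames; guard against fps <= 0.
        let minInterval = 1.0 / Double(max(fps, 1))
        if let last = lastProcessed, timestamp - last < minInterval {
            return false  // Too soon after the previous processed frame.
        }
        lastProcessed = timestamp
        return true
    }
}
```

Lowering `fps` in this sketch means more frames are dropped, so the neural net runs less often and draws less power, at the cost of less frequent detection updates.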

NOTE: You don't need to convert the models yourself. Apart from the pre-trained model downloaded by `download.sh`, everything you need to run the demo app is already included in the Xcode project.

If you're interested in how the conversion was done, check the instructions.