Declaration: This repo contains the data and code for the paper Task-Aware Machine Unlearning with Application to Load Forecasting. The paper has been accepted by IEEE Transactions on Power Systems, and the copyright may be held by IEEE. The authors are from the Control and Power Research Group, Department of EEE, Imperial College London. You can find the latest version at the arXiv preprint and IEEE early access.

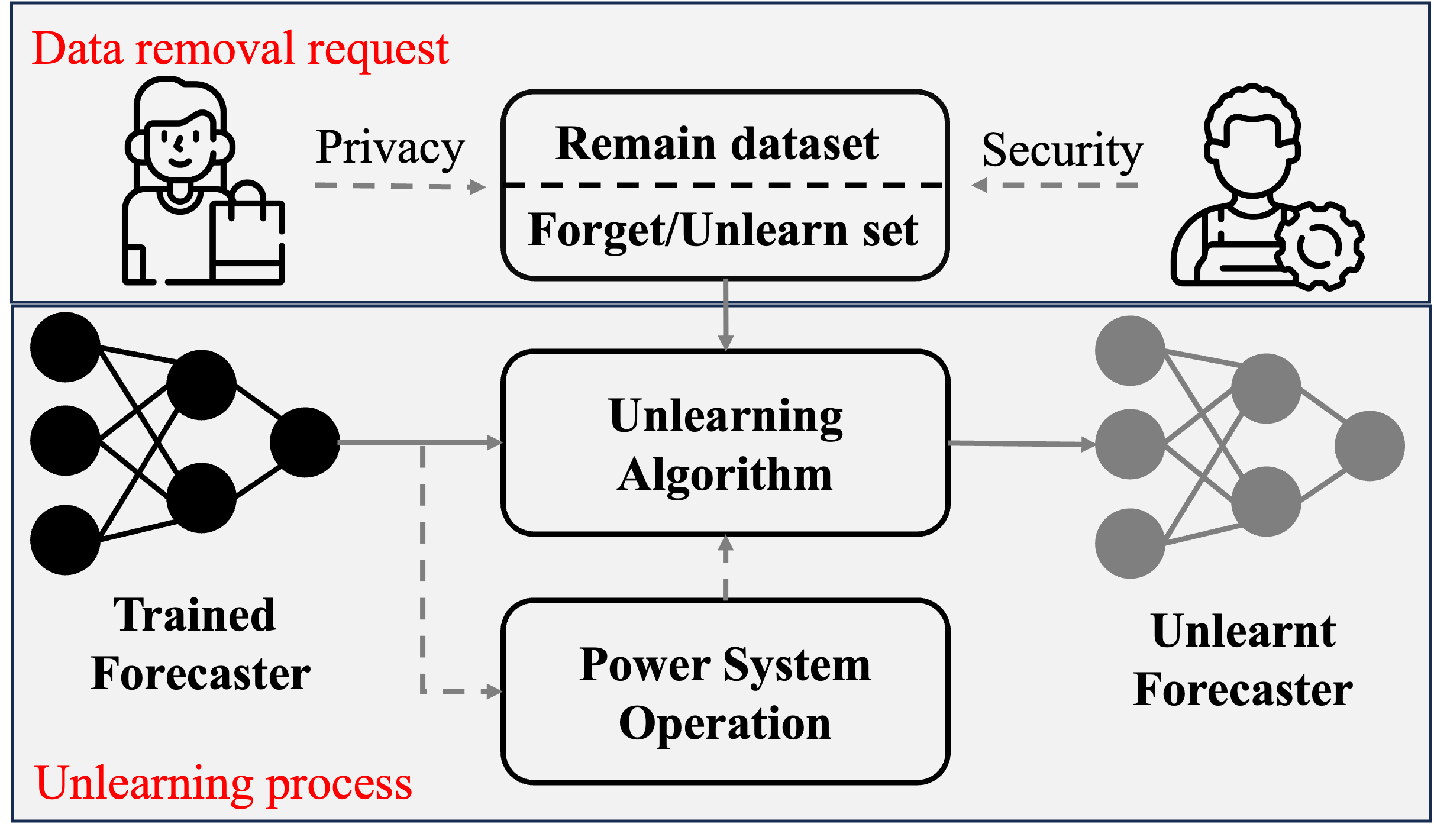

Abstract: Data privacy and security have become a non-negligible factor in load forecasting. Previous research mainly focuses on training stage enhancement. However, once the model is trained and deployed, it may need to "forget" (i.e., remove the impact of) part of the training data if these data are found to be malicious or as requested by the data owner. This paper introduces the concept of machine unlearning, which is specifically designed to remove the influence of part of the dataset on an already trained forecaster. However, direct unlearning inevitably degrades the model's generalization ability. To balance unlearning completeness and model performance, a performance-aware algorithm is proposed by evaluating the sensitivity of local model parameter change using the influence function and sample re-weighting. Furthermore, we observe that statistical criteria such as mean squared error cannot fully reflect the operation cost of the downstream tasks in power systems. Therefore, a task-aware machine unlearning is proposed whose objective is a trilevel optimization with dispatch and redispatch problems considered. We theoretically prove the existence of the gradient of such an objective, which is key to re-weighting the remaining samples. We tested the unlearning algorithms on linear, CNN, and MLP-Mixer based load forecasters with a realistic load dataset. The simulation demonstrates the balance between unlearning completeness and operational cost.

Please cite our paper if it helps your research:

    @ARTICLE{xu202task,
      author={Xu, Wangkun and Teng, Fei},
      journal={IEEE Transactions on Power Systems},
      title={Task-Aware Machine Unlearning and Its Application in Load Forecasting},
      year={2024},
      volume={},
      number={},
      pages={1-12},
      keywords={Training;Load modeling;Load forecasting;Power systems;Data models;Security;Task analysis;Data privacy and security;load forecasting;machine unlearning;end-to-end learning;power system operation;influence function},
      doi={10.1109/TPWRS.2024.3376828}}

The key packages used in this repo include:

- torch==2.0.1+cpu for automatic differentiation.

- cvxpy==1.2.3 for formulating convex optimization problems (see here).

- cvxpylayers==0.1.5 for differentiating through the convex layer (see here).

- A modified torch-influence to calculate the influence function. Our implementation can be found at `torch_influence/`.

- gurobipy==11.0.0 (optional) for solving optimization problems.

We note that some higher versions of cvxpy and cvxpylayers may not work. All other dependencies are easy to install. All experiments were run on CPU.

We use the open-source dataset from A Synthetic Texas Backbone Power System with Climate-Dependent Spatio-Temporal Correlated Profiles. You can download the dataset and read its description on the official webpage. Please cite and acknowledge the original authors if you use the dataset.

After downloading the .zip file into data/ and renaming it to raw_data.zip, unzip it with

    unzip data/raw_data.zip -d data/

This creates a new folder data/Data_public/.

The configurations of the power grids, networks, and training are summarized in conf/. We use the Python package hydra to organize the experiments.

To generate the data for the IEEE 14-bus system, run the following with the default settings (this may take several minutes)

    python clean_data.py

It will generate 14 .csv files in the data/data_case14 folder. We run a simple algorithm to find the 14 most uncorrelated loads in the original dataset. The algorithm is implemented in clean_data.py. Each .csv file contains the features and target load for one bus. Note that the calendric features are represented by sine and cosine functions with different periods. The meteorological features are normalized by the mean and the variance. More details on the preprocessing can be found in the paper.
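The feature encoding described above can be illustrated with a minimal sketch. It is not taken from clean_data.py: the function names, the sample records, and the choice of periods (24 hours, 7 days) are assumptions for illustration, and the normalization is assumed to be the usual standardization (subtract the mean, divide by the standard deviation).

```python
import math
from statistics import mean, pstdev


def cyclic_features(value, period):
    """Encode a periodic quantity (e.g. hour of day) as a (sin, cos) pair."""
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


def standardize(values):
    """Shift to zero mean and scale to unit standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0.0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical hourly records: (hour of day, day of week, temperature in deg C).
records = [(0, 0, 12.0), (6, 0, 10.5), (12, 1, 21.0), (18, 1, 17.5)]

temperature = standardize([r[2] for r in records])
features = []
for (hour, weekday, _), temp in zip(records, temperature):
    # Two cyclic pairs (daily and weekly period) plus one standardized weather feature.
    row = [*cyclic_features(hour, 24), *cyclic_features(weekday, 7), temp]
    features.append(row)
```

The sine/cosine pair keeps hour 23 and hour 0 close to each other in feature space, which a raw integer encoding would not.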

In addition, the raw load data in the Texas Backbone Power System cannot be used directly for an arbitrary IEEE test system. Therefore, we first preprocess the load data to match the load level of the system under test.
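One simple way to match load levels is to rescale each raw profile so that its peak equals the nominal demand of the target bus. The sketch below only illustrates that idea; `rescale_profile`, its `headroom` parameter, and the numbers are hypothetical, and the repo's own preprocessing may use a different rule.

```python
def rescale_profile(profile, nominal_load, headroom=1.0):
    """Scale a load time series so its peak equals headroom * nominal_load."""
    peak = max(profile)
    if peak <= 0.0:
        raise ValueError("profile must contain a positive load value")
    factor = headroom * nominal_load / peak
    return [factor * p for p in profile]


# Hypothetical raw profile (MW) from a large system and a small test-bus demand (MW).
raw_profile = [820.0, 910.0, 1000.0, 760.0]
scaled = rescale_profile(raw_profile, nominal_load=21.7)
```

A single multiplicative factor preserves the shape of the profile, so the temporal pattern the forecaster learns is unchanged.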

We have made the data preprocessing flexible. You can modify clean_data.py and the case_modifier() function in utils/dataaset/ to match your system. In the future, we will update the data generation procedure to support more power grids.

The linear load forecaster is trained automatically whenever an unlearning algorithm is called. However, you need to train the neural-network-based forecasters on the core data yourself. We provide two NN structures: a naive convolutional NN and an MLP-Mixer.

To train the NN forecaster on the core dataset, you can run

    python train_nn.py model=conv

and

    python train_nn.py model=mlpmixer

Our code contains a simple check for unlearning the linear models using

- Analytic method which calculates the gradient and Hessian analytically

- Calls to torch-influence functions to calculate the gradient, Hessian, and inverse Hessian vector product (iHVP) using direct and cg.

- Different unlearning formulations based on our paper.

To check that all the implementations are correct, run

    python check.py unlearn_prop={a float number} model={linear or conv or mlpmixer}

To highlight the significance of the unlearning performance, we choose to unlearn the samples that can significantly improve the expected test set performance.

To find the impact of each training sample on the expected test performance, run

    python gen_index.py model={linear, conv, mlpmixer}

To run the influence function based unlearning (as baseline)

    python eval_unlearn.py model={linear, conv, mlpmixer} unlearn_prop={a float number}

Set the criteria option to choose which performance measure (mse, mape, or cost) is kept unchanged.

    python eval_unchange.py unlearn_prop={a float number} model={linear, conv, mlpmixer} criteria={mse, mape, cost}
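For orientation, the standard first-order influence-function approximation that this kind of unlearning builds on can be written as follows. This is the textbook form, stated under the assumption of a twice-differentiable loss and an invertible Hessian. The symbols ($\theta^\star$, $\ell$, $H$, $\mathcal{U}$, $L_{\text{test}}$) are introduced here for illustration, and the performance-aware and task-aware formulations in the paper add re-weighting terms that are not shown.

```latex
% Trained parameters and Hessian of the empirical risk over n training samples
\theta^\star = \arg\min_{\theta} \frac{1}{n} \sum_{i=1}^{n} \ell(z_i; \theta),
\qquad
H = \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta^2 \ell(z_i; \theta^\star)

% Approximate parameters after removing the samples indexed by U
\theta_{-\mathcal{U}} \approx \theta^\star
  + \frac{1}{n} H^{-1} \sum_{i \in \mathcal{U}} \nabla_\theta \ell(z_i; \theta^\star)

% Approximate change in the expected test loss when a single sample z_i is removed
\Delta L_{\text{test}}(z_i) \approx
  \frac{1}{n} \nabla_\theta L_{\text{test}}(\theta^\star)^{\top} H^{-1}
  \nabla_\theta \ell(z_i; \theta^\star)
```

Each expression needs only an inverse Hessian vector product, which is why an efficient iHVP routine matters for both ranking samples and performing the unlearning update.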

All the figures in the paper can be generated/visualized in analysis.ipynb.