🔥🔥 Latest Release Version: V0.0.1

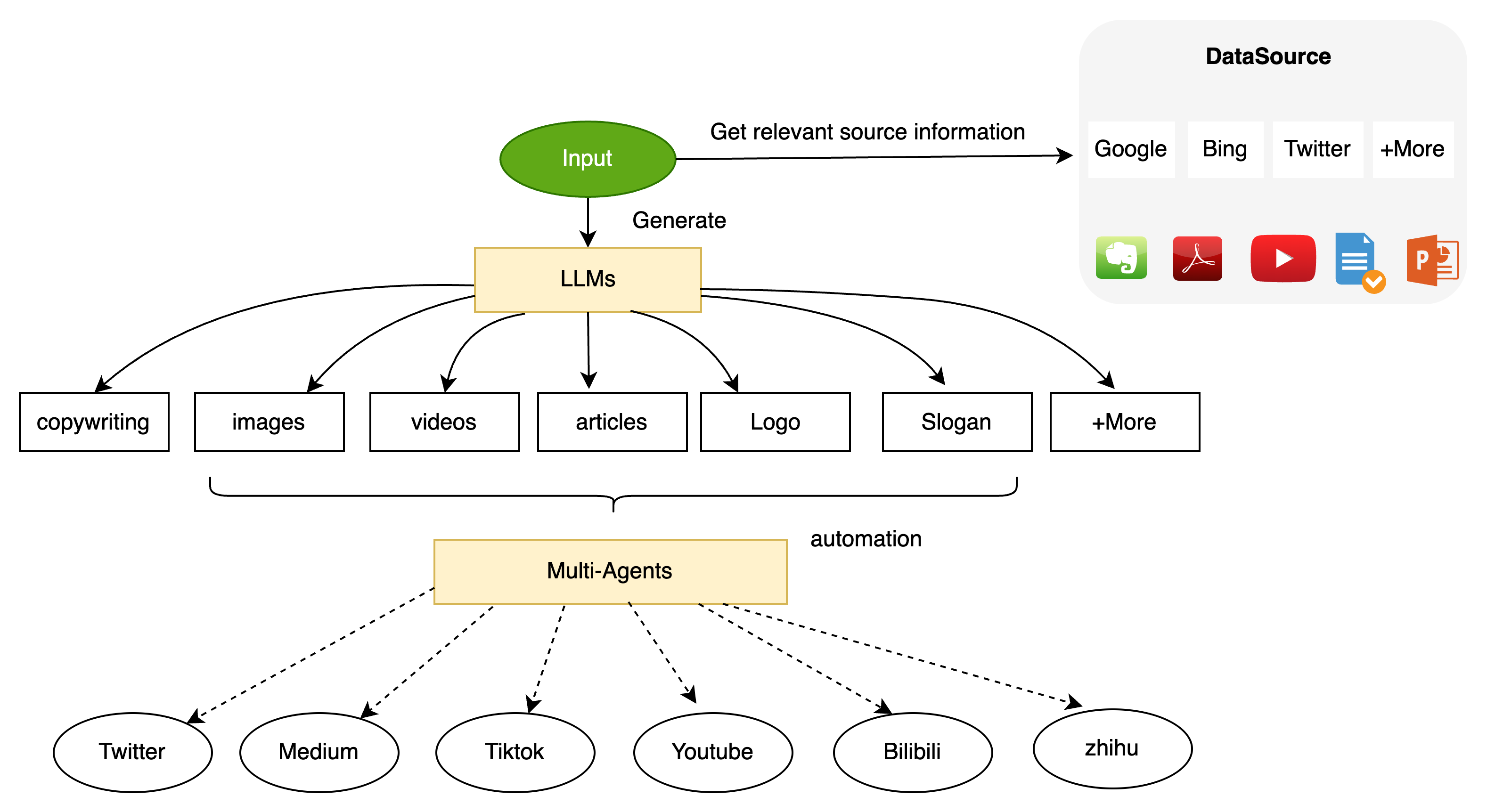

Using large language models and multi-agent technology, a single request automatically generates marketing copy, images, and videos, which can then be published to multiple platforms with one click. This speeds up marketing operations considerably.

| LLM | Supported | Model Type | Notes |
|---|---|---|---|
| ChatGPT | ✅ | Proxy | Default |
| Bard | ✅ | Proxy | |
| Vicuna-13b | ✅ | Local Model | |
| Vicuna-13b-v1.5 | ✅ | Local Model | |
| Vicuna-7b | ✅ | Local Model | |
| Vicuna-7b-v1.5 | ✅ | Local Model | |
| ChatGLM-6B | ✅ | Local Model | |
| ChatGLM2-6B | ✅ | Local Model | |
| baichuan-13b | ✅ | Local Model | |
| baichuan2-13b | ✅ | Local Model | |
| baichuan-7b | ✅ | Local Model | |
| baichuan2-7b | ✅ | Local Model | |
| Qwen-7b-Chat | Coming soon | Local Model | |

| Embedding Model | Supported | Notes |
|---|---|---|
| sentence-transformers | ✅ | Default |
| text2vec-large-chinese | ✅ | |
| m3e-large | ✅ | |
| bge-large-en | ✅ | |
| bge-large-zh | ✅ | |

First, download and install the required models.

    mkdir models && cd models

    # Size: 522 MB
    git lfs install
    git clone https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2

    # [Optional]
    # Size: 94 GB. Can run in CPU mode (RAM > 14 GB). The stablediffusion-proxy service is recommended: https://github.com/xuyuan23/stablediffusion-proxy
    git lfs install
    git clone https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0

    # [Optional]
    # Size: 16 GB. Can run in CPU mode (RAM > 16 GB). The Text2Video service is recommended: https://github.com/xuyuan23/Text2Video
    git lfs install
    git clone https://huggingface.co/cerspense/zeroscope_v2_576w
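
Before moving on, it can help to confirm that the clones landed where expected. The following is a minimal sketch, not part of the project: the helper names `check_models` and `missing_required` are hypothetical, and treating `all-MiniLM-L6-v2` as the only required model is an assumption based on the other two downloads being marked `[Optional]`.

```python
from pathlib import Path

# Directory names follow the `git clone` targets in the download steps.
# Assumption: only the model not marked [Optional] is treated as required.
REQUIRED_MODELS = ["all-MiniLM-L6-v2"]
OPTIONAL_MODELS = ["stable-diffusion-xl-base-1.0", "zeroscope_v2_576w"]


def check_models(models_dir):
    """Return a dict mapping each model name to True if its directory exists."""
    root = Path(models_dir)
    return {name: (root / name).is_dir() for name in REQUIRED_MODELS + OPTIONAL_MODELS}


def missing_required(models_dir):
    """Return the required model names whose directories are absent."""
    status = check_models(models_dir)
    return [name for name in REQUIRED_MODELS if not status[name]]


if __name__ == "__main__":
    # Report the state of the local `models` directory, if any.
    for name, present in check_models("models").items():
        print(f"{name}: {'found' if present else 'missing'}")
```

A directory check only shows that the clone target exists; it does not verify that `git lfs` actually pulled the large weight files.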

Then install the dependencies and launch the project.

    yum install gcc-c++
    pip install -r requirements.txt

    # Copy `.env.template` to a new file `.env`, then modify the params in `.env`.
    cp .env.template .env

    # [Optional]
    # Deploy the Stable Diffusion service. Skip this step if a StableDiffusion proxy is used.
    python operategpt/providers/stablediffusion.py

    # [Optional]
    # Deploy the Text2Video service. Skip this step if a Text2Video proxy server is used.
    python operategpt/providers/text2video.py

    # Quick trial with two params, idea and language. `en` is the default; `zh` (Chinese) is also supported.
    python main.py "Prepare a travel plan to Australia" "en"
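
The quick-trial command takes an idea and an optional language code. As an illustration of that contract only, here is a small sketch of how two positional arguments with an `en` default could be handled. `parse_cli` and `SUPPORTED_LANGUAGES` are hypothetical names; the project's actual `main.py` may parse its arguments differently.

```python
# Language codes named in the quick-trial comment; the set itself is an
# illustrative assumption, not taken from the project's source.
SUPPORTED_LANGUAGES = {"en", "zh"}


def parse_cli(argv):
    """Return (idea, language) from a list of command-line arguments.

    Hypothetical helper: it mirrors the documented usage, not main.py itself.
    """
    if not argv:
        raise ValueError("an idea is required, e.g. 'Prepare a travel plan to Australia'")
    idea = argv[0]
    # `en` is the documented default when no language is given.
    language = argv[1] if len(argv) > 1 else "en"
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language: {language!r}")
    return idea, language
```

Under these assumptions, `parse_cli(["Prepare a travel plan to Australia"])` falls back to `"en"`, while an unlisted code such as `"fr"` raises `ValueError`.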

- By default, ChatGPT is used as the LLM, and you need to configure `OPEN_AI_KEY` in `.env`:

      OPEN_AI_KEY=sk-xxx

      # If you don't deploy the Stable Diffusion service, no images will be generated.
      SD_PROXY_URL=127.0.0.1:7860

      # If you don't deploy the Text2Video service, no videos will be generated.
      T2V_PROXY_URL=127.0.0.1:7861

- For more details, see the file `.env.template`.
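
To make the effect of these settings concrete, the sketch below reads `.env`-style text and reports which outputs the configuration would allow. It is an illustration under stated assumptions: `parse_env` and `enabled_outputs` are hypothetical helpers rather than project code, and treating an unset `OPEN_AI_KEY` as "no text" assumes the default ChatGPT backend.

```python
def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and `#` comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first `=` only, so values may contain `=` themselves.
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def enabled_outputs(env):
    """Map the three documented settings to the outputs they unlock."""
    return {
        "text": bool(env.get("OPEN_AI_KEY")),     # assumes the default ChatGPT LLM
        "image": bool(env.get("SD_PROXY_URL")),   # unset means no images
        "video": bool(env.get("T2V_PROXY_URL")),  # unset means no videos
    }


sample = """
OPEN_AI_KEY=sk-xxx
# SD_PROXY_URL is left unset here, so no image would be generated
T2V_PROXY_URL=127.0.0.1:7861
"""
print(enabled_outputs(parse_env(sample)))
# prints {'text': True, 'image': False, 'video': True}
```

This parser handles only plain `KEY=VALUE` lines; quoting, `export` prefixes, and variable expansion are out of scope for the sketch.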