Trained on 60+ Gigs of data to identify:

- `drawings` - safe for work drawings (including anime)
- `hentai` - hentai and pornographic drawings
- `neutral` - safe for work neutral images
- `porn` - pornographic images, sexual acts
- `sexy` - sexually explicit images, not pornography
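Going by their descriptions, the categories fall into two groups: `drawings` and `neutral` are safe for work, while `hentai`, `porn`, and `sexy` are not. Below is a minimal sketch of turning the five scores into a single yes/no decision. It assumes you treat all three unsafe categories alike and sum their probabilities against a threshold. `is_nsfw`, the grouping, and the 0.5 default are illustrative choices, not part of this project:

```python
from typing import Dict

# Grouping inferred from the category descriptions (an assumption; adjust to your policy).
SAFE_CATEGORIES = {"drawings", "neutral"}
UNSAFE_CATEGORIES = {"hentai", "porn", "sexy"}


def is_nsfw(scores: Dict[str, float], threshold: float = 0.5) -> bool:
    """Hypothetical helper: True if the summed unsafe probability exceeds threshold."""
    unknown = set(scores) - SAFE_CATEGORIES - UNSAFE_CATEGORIES
    if unknown:
        raise ValueError(f"unexpected categories: {sorted(unknown)}")
    unsafe_mass = sum(scores.get(name, 0.0) for name in UNSAFE_CATEGORIES)
    return unsafe_mass > threshold


# Scores copied from the single-image example output in this README.
example = {'sexy': 4.3454722e-05, 'neutral': 0.00026579265, 'porn': 0.0007733492,
           'hentai': 0.14751932, 'drawings': 0.85139805}
print(is_nsfw(example))  # False
```

Summing the unsafe scores, rather than looking only at the top label, still flags an image whose probability is split across several unsafe categories. Tune the threshold, or move `sexy` into the safe group, to match your own content policy.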

This model powers NSFW JS.

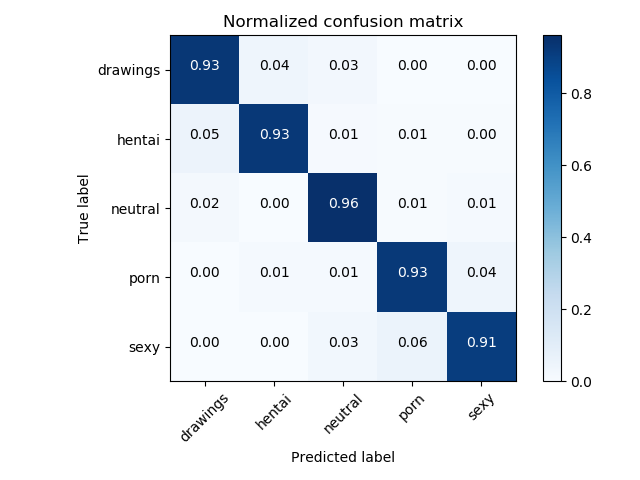

93% accuracy, based on Inception V3, with the following confusion matrix.

Review the `_art` folder for previous incarnations of this model.
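Accuracy is the share of images that land on the confusion matrix's diagonal, where the true category equals the predicted one. Below is a minimal sketch of that arithmetic, plus per-class recall. It assumes rows are true labels and columns are predictions, and the counts are made up for illustration; they are not this model's results:

```python
from typing import List, Sequence


def accuracy(matrix: Sequence[Sequence[int]]) -> float:
    """Overall accuracy: correctly classified samples (the diagonal) over all samples."""
    size = len(matrix)
    if any(len(row) != size for row in matrix):
        raise ValueError("confusion matrix must be square")
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix has no samples")
    return sum(matrix[i][i] for i in range(size)) / total


def per_class_recall(matrix: Sequence[Sequence[int]]) -> List[float]:
    """Recall per class, assuming rows are true labels and columns are predictions."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Made-up 3-class counts for illustration only (not this model's results).
toy = [
    [8, 1, 1],
    [0, 9, 1],
    [1, 0, 9],
]
print(f"accuracy = {accuracy(toy):.3f}")
print("recall per class:", per_class_recall(toy))
```

A single accuracy number can hide which categories get confused with each other. That is why the per-class view, and the confusion matrix itself, are worth checking alongside it.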

Requirements:

- keras (tested with versions > 2.0.0)
- tensorflow (not specified in setup.py, so install it separately)

```python
from nsfw_detector import NSFWDetector

detector = NSFWDetector('./nsfw.299x299.h5')

# Predict single image
detector.predict('2.jpg')
# {'2.jpg': {'sexy': 4.3454722e-05, 'neutral': 0.00026579265, 'porn': 0.0007733492, 'hentai': 0.14751932, 'drawings': 0.85139805}}

# Predict multiple images at once using Keras batch prediction
detector.predict(['2.jpg', '6.jpg'], batch_size=32)
# {'2.jpg': {'sexy': 4.3454795e-05, 'neutral': 0.00026579312, 'porn': 0.0007733498, 'hentai': 0.14751942, 'drawings': 0.8513979}, '6.jpg': {'drawings': 0.004214506, 'hentai': 0.013342537, 'neutral': 0.01834045, 'porn': 0.4431829, 'sexy': 0.5209196}}
```

Please feel free to use this model to help your products!
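The prediction results shown above are dictionaries keyed by image, with each image mapped to a probability for each of the five categories. Below is a minimal sketch, assuming that shape, of reducing the result to the single most likely category per image. `top_labels` is a hypothetical helper, not part of `nsfw_detector`:

```python
from typing import Dict, Tuple

Predictions = Dict[str, Dict[str, float]]


def top_labels(predictions: Predictions) -> Dict[str, Tuple[str, float]]:
    """Map each image key to its highest-scoring (category, probability) pair."""
    return {
        image: max(scores.items(), key=lambda item: item[1])
        for image, scores in predictions.items()
    }


# Batch output copied from the example in this README.
batch_output = {
    '2.jpg': {'sexy': 4.3454795e-05, 'neutral': 0.00026579312, 'porn': 0.0007733498,
              'hentai': 0.14751942, 'drawings': 0.8513979},
    '6.jpg': {'drawings': 0.004214506, 'hentai': 0.013342537, 'neutral': 0.01834045,
              'porn': 0.4431829, 'sexy': 0.5209196},
}

for image, (label, probability) in top_labels(batch_output).items():
    print(f"{image}: {label} ({probability:.2%})")
```

The top label alone can hide a near-tie. `6.jpg` comes out as `sexy` at about 52%, with `porn` close behind at about 44%. If that distinction matters for your product, look at the full score dictionary rather than only the winner.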

If you'd like to say thanks for creating this, I'll take a donation for hosting costs.

Available models:

- Keras 299x299 Image Model

- TensorflowJS 299x299 Image Model

- TensorflowJS Quantized 299x299 Image Model

- Tensorflow 299x299 Image Model - Graph if Needed

- Keras 224x224 Image Model

- TensorflowJS 224x224 Image Model

- TensorflowJS Quantized 224x224 Image Model

- Tensorflow 224x224 Image Model - Graph if Needed

- Tensorflow Quantized 224x224 Image Model - Graph if Needed

Simple description of the scripts used to create this model:

- `inceptionv3_transfer/` - Folder with all the code to train the Keras-based Inception v3 transfer learning model. Includes `constants.py` for configuration, and two scripts for the actual training and refinement.
- `mobilenetv2_transfer/` - Folder with all the code to train the Keras-based MobileNet v2 transfer learning model.
- `visuals.py` - The code to create the confusion matrix graphic.
- `self_clense.py` - If the training data has significant inaccuracy, `self_clense` helps cross-validate errors in the training data in reasonable time. The better the model gets, the better you can use it to clean the training data manually.
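A simple confident-disagreement filter illustrates the idea behind cleaning training data with the model: compare each image's training label with a model prediction, and surface the cases where the model strongly disagrees. This is a hypothetical sketch, not the code in `self_clense.py`. It assumes the predictions come from a model that did not train on those images (for example, a held-out cross-validation fold). `find_suspect_labels` and `min_confidence` are illustrative names:

```python
from dataclasses import dataclass
from typing import Dict, List

Scores = Dict[str, float]


@dataclass
class Suspect:
    path: str
    given_label: str
    predicted_label: str
    confidence: float


def find_suspect_labels(
    labels: Dict[str, str],
    predictions: Dict[str, Scores],
    min_confidence: float = 0.9,
) -> List[Suspect]:
    """Flag images whose training label disagrees with a confident model prediction."""
    suspects: List[Suspect] = []
    for path, given in labels.items():
        scores = predictions.get(path)
        if not scores:
            continue  # no prediction for this image, nothing to compare
        predicted, confidence = max(scores.items(), key=lambda kv: kv[1])
        if predicted != given and confidence >= min_confidence:
            suspects.append(Suspect(path, given, predicted, confidence))
    # Most confident disagreements first: these are the likeliest labeling mistakes.
    suspects.sort(key=lambda s: s.confidence, reverse=True)
    return suspects


# Hypothetical labels and held-out predictions for three images.
labels = {"a.jpg": "neutral", "b.jpg": "drawings", "c.jpg": "sexy"}
predictions = {
    "a.jpg": {"neutral": 0.03, "porn": 0.95, "sexy": 0.02},
    "b.jpg": {"drawings": 0.97, "hentai": 0.03},
    "c.jpg": {"porn": 0.60, "sexy": 0.40},
}
for suspect in find_suspect_labels(labels, predictions):
    print(suspect)
```

Flagged images still need a manual look. This fits the workflow described above, where a better model produces more trustworthy disagreements for cleaning the data by hand.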

For example:

```bash
cd training

# Start with transfer learning on Inception v3, with all base layers locked
python inceptionv3_transfer/train_initialization.py

# Continue training the model with fine-tuning
python inceptionv3_transfer/train_fine_tune.py

# Create a confusion matrix of the model
python visuals.py
```

There's no easy way to distribute the training data, but if you'd like to help with this model or train other models, get in touch with me and we can work together.

Advancements in this model power the quantized TFJS module on https://nsfwjs.com/

My Twitter handle is @GantLaborde. I'm a School of AI Wizard (New Orleans), and I run the Twitter account @FunMachineLearn.

Learn more about me and the company I work for.

Special thanks to `nsfw_data_scraper` for the training data. If you're interested in a more detailed analysis of the types of NSFW images, you could probably use this repo's code with that data.

If you need React Native, Elixir, AI, or Machine Learning work, check in with us at Infinite Red, who make all these experiments possible. We're an amazing software consultancy worldwide!

Thanks goes to these wonderful people (emoji key):

| Gant Laborde | Bedapudi Praneeth |
|---|---|
| 💻 📖 🤔 | 💻 🤔 |

This project follows the all-contributors specification. Contributions of any kind welcome!