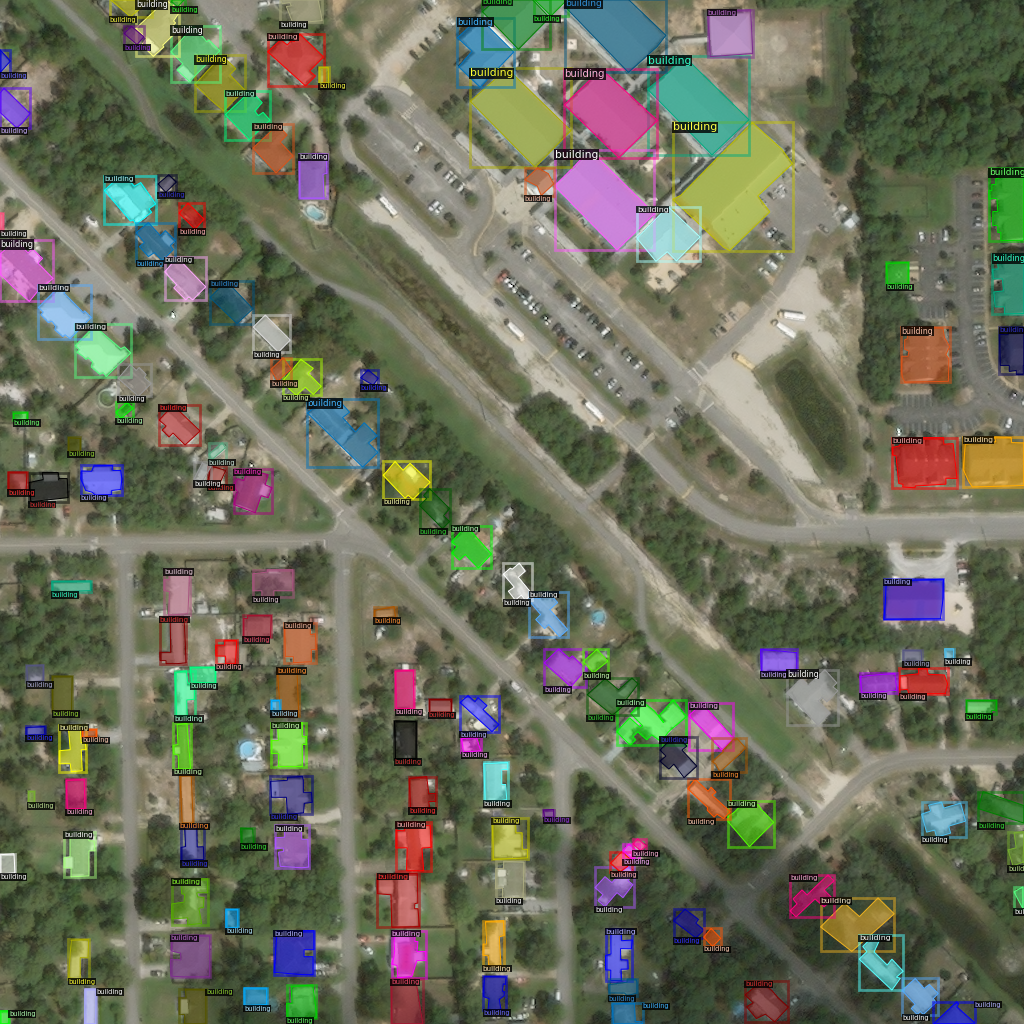

- In the xView2 challenge, competitors are asked to identify damaged buildings in satellite imagery following a natural disaster.

- Two images: before and after.

- Find building footprints using the 'before' image.

- Classify each of those footprints into one of four damage categories based on the 'after' image.

[Figure: 'before' and 'after' images shown side by side.]

[Figure: three panels showing the 'before' target, the 'after' target, and their alignment.]

- This problem is an analytical bottleneck in disaster response, and solving it has immediate real-world benefits.

- It's feasible: there is sufficient high-quality annotated data.

- It's really interesting from a technical perspective: at first glance it seems simple, but there are a lot of potentially valid approaches.

- It's very well supported and positioned to be implemented after the competition.

- Cf.: Understanding Clouds from Satellite Images.

- Poor annotator agreement.

- Indirectly applicable.

- Annotation requires lots of finicky manual processing.

- Before/after image.

- Resolution: output is 1024x1024 (small batch sizes).

- The points above, combined with the metric, cause stability issues.

- Example solution: semantic segmentation and patch-classification.

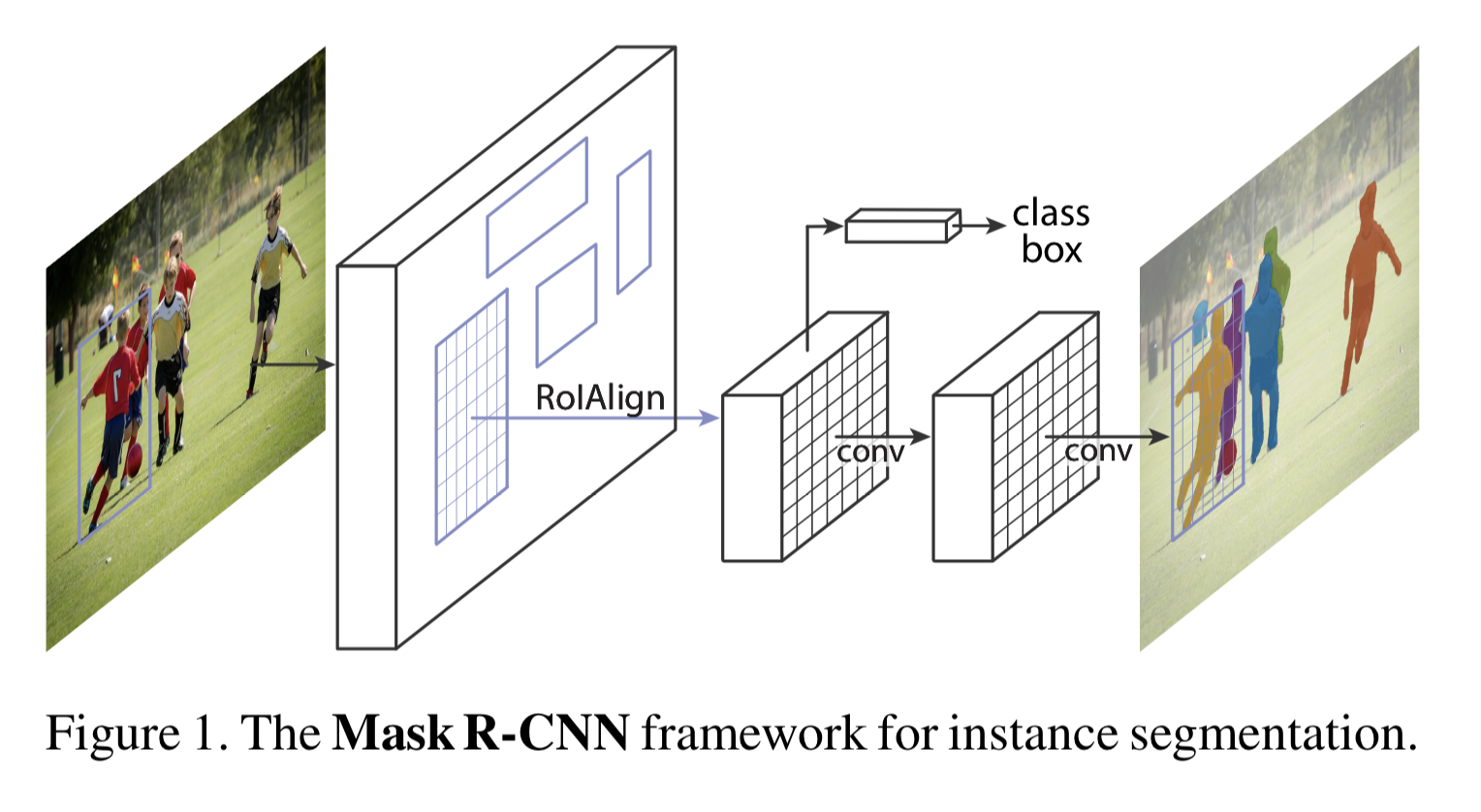

- This is in the spirit of 'R-CNN', i.e. region-based CNN.

[Figure: semantic segmentation vs. instance segmentation.]

- Semantic segmentation: some tricks with upscaling and pooling across layers, but mostly pretty simple: an encoder + decoder setup.

- Instance segmentation: this came out of object detection (bounding boxes), and is a little crazy. Thousands of overlapping candidate bounding boxes are proposed by a region-proposal network, before each RoI (region of interest) is pooled into a fixed-size representation and classified/adjusted. Lots of post-processing is required to handle the overlapping boxes, and there is an absurd number of hyperparameters. There are much nicer solutions (e.g. Objects as Points).

- Semantic segmentation on 'before' image to define regions of interest.

- RoI-pooling + classification on 'after' images.

- Why semantic segmentation on the 'before' image? Much better recall: instance segmentation comes from the world of e.g. COCO, where evaluation is measured by the number of 'good enough' masks. This task is evaluated at the pixel level.

- In addition, one of the issues with instance segmentation is going from mask -> individual instances. We're evaluated at the pixel level, so this step isn't essential.

- Why the RoI pooling/object detection approach on the second image? This corresponds with the way that the images are labeled, i.e. each polygon is classified into one of several categories.

- It also addresses the labeling drift between the two images: bounding boxes line up much more closely than the masks do.

- Alternative: segmentation. A little more finicky to set up.
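The two-stage idea above (a 'before' mask defines regions of interest, which are then read out of the 'after' image) can be sketched without any deep learning stack. This is a minimal, dependency-free illustration and not the competition code: `mask_to_boxes` and `crop` are hypothetical helper names, the mask is assumed to be already thresholded to 0/1, and plain cropping stands in for RoI pooling, which in a real model would pool feature maps to a fixed size before classification.

```python
from collections import deque


def mask_to_boxes(mask):
    """Return one (row_min, col_min, row_max, col_max) box per 4-connected blob of 1s."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for r in range(height):
        for c in range(width):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill over one building footprint.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


def crop(image, box):
    """Cut the box out of the image (a stand-in for RoI pooling)."""
    r0, c0, r1, c1 = box
    return [row[c0:c1 + 1] for row in image[r0:r1 + 1]]


# Toy 'before' mask with three footprints, and a toy 'after' image.
before_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
after_image = [
    [10, 11, 12, 13, 14],
    [20, 21, 22, 23, 24],
    [30, 31, 32, 33, 34],
    [40, 41, 42, 43, 44],
]

boxes = mask_to_boxes(before_mask)
patches = [crop(after_image, box) for box in boxes]
```

Each entry in `patches` would then be passed to a damage classifier; because the boxes come from the 'before' mask, small misalignments between the two images matter less than they would for pixel-exact masks.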

- Heavy data augmentation, half-precision training, LinkNet segmentation with an EfficientNet backbone.

- All experiments managed using Wandb.

- Config management.

- Models saved to cloud.

- Experimental management.

- Recreating runs.

- Easy to set up.

- Try to move from the big picture to the little picture, e.g. architecture -> loss -> model, etc.

- Be aware of the metric: F-score is sensitive to threshold and noise. Loss is more stable, but less interpretable.

- Find minimum experimental setup: e.g. performance @ 512x512 resolution generalizes to 1024x1024 (and trains 4x faster).

- Notebook + lean codebase (iterate frequently).
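To make the point about the metric concrete, here is a small sketch of how a pixel-level F-score moves with the decision threshold while a loss such as binary cross-entropy has no threshold at all. The probabilities and labels are made-up toy values, and `f1_score` and `binary_cross_entropy` are illustrative helpers, not the competition's scoring code.

```python
import math


def f1_score(probs, labels, threshold):
    """Pixel-level F1 after binarising probabilities at the given threshold."""
    preds = [p >= threshold for p in probs]
    tp = sum(1 for p, y in zip(preds, labels) if p and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if not p and y == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross-entropy; note that no threshold is involved."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


# Toy per-pixel building probabilities and ground-truth labels.
probs = [0.9, 0.6, 0.55, 0.45, 0.4, 0.1]
labels = [1, 1, 1, 0, 1, 0]

scores = {t: f1_score(probs, labels, t) for t in (0.4, 0.5, 0.6)}
loss = binary_cross_entropy(probs, labels)

for threshold, score in scores.items():
    print(f"threshold={threshold}: F1={score:.3f}")
print(f"cross-entropy={loss:.3f}")
```

On this toy data the F1 is 8/9 at a threshold of 0.4, 6/7 at 0.5 and 2/3 at 0.6, even though the underlying predictions never change, which is why comparing runs on loss can be steadier than comparing them on F-score.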

- Building segmentation

- Semantic segmentation outperforms instance segmentation.

- Segmentation architectures: LinkNet and UNet have better performance profiles vs. FPN and PSPNet.

- Pretraining: static pretrained architecture vs hand-rolled.

- Train models separately or together? Joint training/models don't seem to outperform individually trained models.

- Data augmentation: applying 25%, 50%, 75% of the time.

- Different losses: Focal vs. Focal + IoU.

- Half-precision training using AMP in the default mode works best. Full precision forces a lower batch size; half precision (default mode) uses 82% of memory at batch size 8; half precision (alternative mode) uses 86% of memory at batch size 8, with more variance.
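For the loss comparison above, the following is one plausible reading of 'Focal + IoU', written in plain Python for clarity. It is a sketch under assumptions: the focusing parameter `gamma=2.0`, the unweighted sum controlled by the hypothetical `iou_weight` parameter, and the soft (probability-based) form of the IoU term are all choices made here for illustration, not settings taken from the experiments.

```python
import math


def focal_loss(probs, labels, gamma=2.0, eps=1e-7):
    """Binary focal loss: cross-entropy down-weighted on easy pixels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p_t = p if y == 1 else 1.0 - p       # probability given to the true class
        p_t = min(max(p_t, eps), 1.0 - eps)  # avoid log(0)
        total += -((1.0 - p_t) ** gamma) * math.log(p_t)
    return total / len(probs)


def soft_iou_loss(probs, labels, eps=1e-7):
    """1 - IoU, computed on probabilities so it stays differentiable."""
    intersection = sum(p * y for p, y in zip(probs, labels))
    union = sum(probs) + sum(labels) - intersection
    return 1.0 - (intersection + eps) / (union + eps)


def focal_plus_iou(probs, labels, gamma=2.0, iou_weight=1.0):
    """Assumed combination: focal loss plus a weighted soft IoU loss."""
    return focal_loss(probs, labels, gamma) + iou_weight * soft_iou_loss(probs, labels)


# Toy per-pixel probabilities: one confident prediction set, one poor one.
labels = [1, 1, 0, 0]
good = focal_plus_iou([0.9, 0.8, 0.1, 0.2], labels)
poor = focal_plus_iou([0.4, 0.3, 0.6, 0.7], labels)
```

With `gamma=0` the focal term reduces to ordinary cross-entropy, so the same function can serve as the baseline when comparing the two losses.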

- Much more possible work on the damage classification component.

- Deformable convolutions.

- Class-context concatenation.

- Different batch norms – frozen BN, group BN.

- Explicit edge categorisation in a separate CNN module.

- Wandb.

- Apex – half-precision/mixed-precision training from NVIDIA.

- Segmentation Models PyTorch: lightweight, nice API for segmentation.

- Detectron2: heavyweight, more deeply integrated, harder to use, but with far more features and state-of-the-art models. The complexity is partly required by the much more complicated approach.

- Prioritising a single metric over maintainability, readability, performance, generalisability etc.

- Great for learning new skills.

- Solving the right problem.

- In the right way.

- Lean experimental setup.

- Lightweight codebase – the right balance between saving time now or saving time later.