This is the reference PyTorch implementation for training and testing single-shot object detection and oriented bounding box models using the method described in:

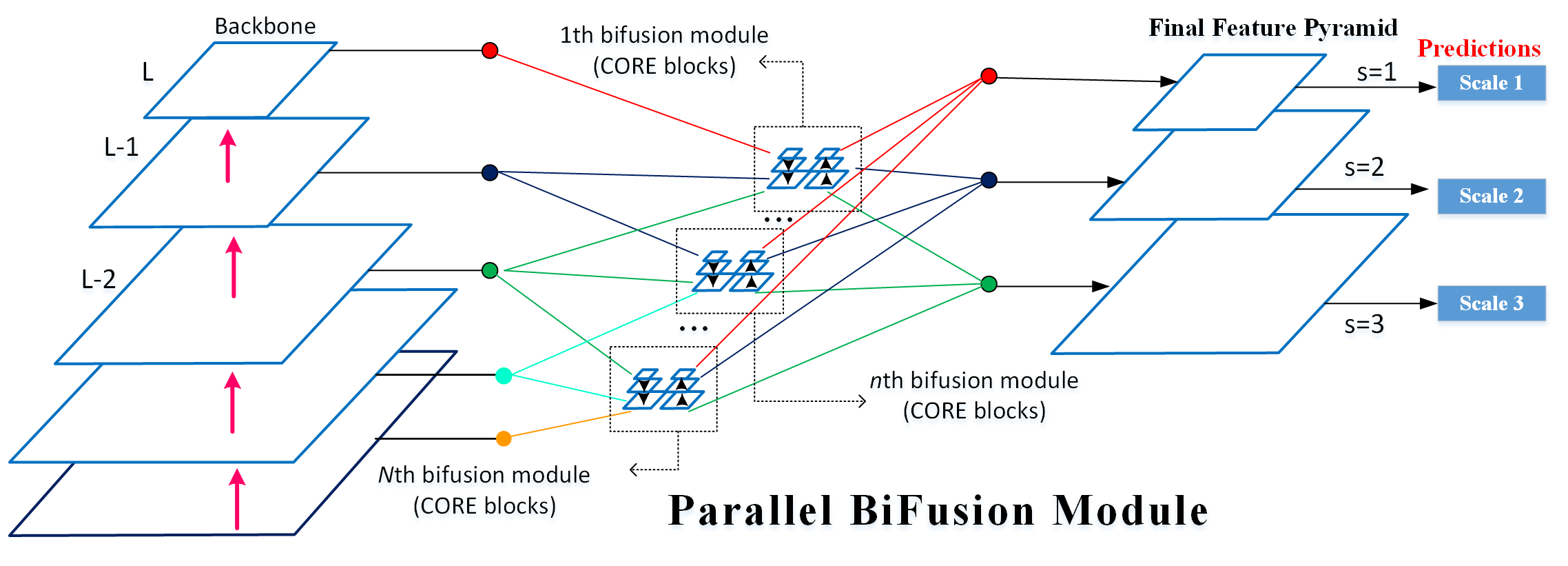

Parallel Residual Bi-Fusion Feature Pyramid Network for Accurate Single-Shot Object Detection

Ping-Yang Chen, Ming-Ching Chang, Jun-Wei Hsieh, and Yong-Sheng Chen

| Model | Test Size | AP<sup>test</sup> | AP<sub>50</sub><sup>test</sup> | AP<sub>75</sub><sup>test</sup> | AP<sub>S</sub><sup>test</sup> | FPS |
|---|---|---|---|---|---|---|
| YOLOv7-x | 640 | 53.1% | 71.2% | 57.8% | 33.8% | 114 |
| PRB-FPN-CSP | 640 | 51.8% | 70.0% | 56.7% | 32.6% | 113 |
| PRB-FPN-MSP | 640 | 53.3% | 71.1% | 58.3% | 34.1% | 94 |
| PRB-FPN-ELAN | 640 | 52.5% | 70.4% | 57.2% | 33.4% | 70 |

| Model | Test Size | AP<sup>test</sup> | AP<sub>50</sub><sup>test</sup> | AP<sub>75</sub><sup>test</sup> | FPS |
|---|---|---|---|---|---|
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 |
| PRB-FPN6 | 1280 | 56.9% | 74.1% | 62.3% | 31 |

If you find our work useful in your research, please consider citing our paper:

```bibtex
@ARTICLE{9603994,
  author={Chen, Ping-Yang and Chang, Ming-Ching and Hsieh, Jun-Wei and Chen, Yong-Sheng},
  journal={IEEE Transactions on Image Processing},
  title={Parallel Residual Bi-Fusion Feature Pyramid Network for Accurate Single-Shot Object Detection},
  year={2021},
  volume={30},
  number={},
  pages={9099-9111},
  doi={10.1109/TIP.2021.3118953}}
```

If you find the backbone useful in your research, please also consider citing CSPNet. Most of the credit goes to Dr. Wang:

```bibtex
@inproceedings{wang2020cspnet,
  title={{CSPNet}: A New Backbone That Can Enhance Learning Capability of {CNN}},
  author={Wang, Chien-Yao and Mark Liao, Hong-Yuan and Wu, Yueh-Hua and Chen, Ping-Yang and Hsieh, Jun-Wei and Yeh, I-Hau},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops},
  pages={390--391},
  year={2020}
}
```

Without the guidance of Dr. Mark Liao and discussions with Dr. Wang, PRBNet would not have been published so quickly in TIP or open-sourced to the community. Much of the code is borrowed from YOLOv4, YOLOv5_obb, and YOLOv7; many thanks for their fantastic work: