Shocher, Assaf, Amil Dravid, Yossi Gandelsman, Inbar Mosseri, Michael Rubinstein, and Alexei A. Efros. "Idempotent Generative Network." arXiv preprint arXiv:2311.01462 (2023).

Unofficial implementation of Idempotent Generative Network.

Clone this repo:

git clone https://github.com/xyfJASON/IGN-pytorch.git

cd IGN-pytorch

Create and activate a conda environment:

conda create -n ign python=3.11

conda activate ign

Install dependencies:

pip install -r requirements.txt

First, compute FFT statistics if you want to use FFT as initial noise instead of standard Gaussian.

python compute_freq_stats.py -c CONFIG [-n NUM] --save_file SAVE_FILE

- By default, the script uses the first 1000 images from the dataset to compute the statistics. Use `-n NUM` to change the number of images.
- After computing, set `noise_stats` in the configuration file to the path of your computed statistics file.
- I have already computed statistics for some datasets and uploaded them to `./freq_stats`.
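To make the idea of frequency statistics concrete, here is a minimal NumPy sketch of one way they could be computed and used to draw initial noise. It is an illustration under stated assumptions, not the code in `compute_freq_stats.py`: the function names `compute_freq_stats` and `sample_fft_noise`, the dictionary keys, and the random stand-in images are all hypothetical.

```python
import numpy as np


def compute_freq_stats(images):
    """Per-frequency mean and std of the real and imaginary FFT parts.

    images: float array of shape (N, H, W). Hypothetical helper, not the repo's script.
    """
    freq = np.fft.fft2(images, axes=(-2, -1))
    return {
        "mean_real": freq.real.mean(axis=0),
        "std_real": freq.real.std(axis=0),
        "mean_imag": freq.imag.mean(axis=0),
        "std_imag": freq.imag.std(axis=0),
    }


def sample_fft_noise(stats, n, rng):
    """Draw n noise images whose frequency components follow the given statistics."""
    shape = (n,) + stats["mean_real"].shape
    real = stats["mean_real"] + stats["std_real"] * rng.standard_normal(shape)
    imag = stats["mean_imag"] + stats["std_imag"] * rng.standard_normal(shape)
    # Go back to pixel space and keep the real part as the noise image.
    return np.fft.ifft2(real + 1j * imag, axes=(-2, -1)).real


rng = np.random.default_rng(0)
images = rng.random((1000, 28, 28))  # stand-in for the first 1000 dataset images
stats = compute_freq_stats(images)
noise = sample_fft_noise(stats, 4, rng)
```

In this sketch, `noise` has the same spatial shape as the input images and could stand in for standard Gaussian noise as the model input.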

Now, we can start training!

accelerate-launch train.py -c CONFIG [-e EXP_DIR] [--xxx.yyy zzz ...]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read the Accelerate documentation on how to launch the scripts on different platforms.
- Results (logs, checkpoints, tensorboard, etc.) of each run will be saved to `EXP_DIR`. If `EXP_DIR` is not specified, they will be saved to `runs/exp-{current time}/`.
- To modify some configuration items without creating a new configuration file, you can pass `--key value` pairs to the script.
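The dotted `--xxx.yyy zzz` overrides can be pictured as updates to a nested configuration. The sketch below shows one way such pairs could be merged into a nested dictionary. It is not the repo's implementation: `parse_value` and `apply_overrides` are hypothetical helpers, and the example config values (`"fft"`, `batch_size`) are assumptions made for illustration.

```python
import copy


def parse_value(text):
    """Best-effort conversion of a command-line string to int, float, bool, or str."""
    for cast in (int, float):
        try:
            return cast(text)
        except ValueError:
            pass
    if text.lower() in ("true", "false"):
        return text.lower() == "true"
    return text


def apply_overrides(config, args):
    """Return a copy of config with ['--a.b', 'value', ...] pairs applied."""
    if len(args) % 2 != 0:
        raise ValueError("overrides must come in --key value pairs")
    result = copy.deepcopy(config)
    for flag, raw in zip(args[0::2], args[1::2]):
        if not flag.startswith("--"):
            raise ValueError(f"expected a --key flag, got {flag!r}")
        keys = flag[2:].split(".")
        node = result
        for key in keys[:-1]:
            # Walk (or create) the nested sections named by the dotted key.
            node = node.setdefault(key, {})
        node[keys[-1]] = parse_value(raw)
    return result


# Assumed example config; only the key train.noise_type appears in this README.
config = {"train": {"noise_type": "fft", "batch_size": 256}}
updated = apply_overrides(config, ["--train.noise_type", "gaussian"])
```

Here `updated["train"]["noise_type"]` becomes `"gaussian"` while the original `config` is left unchanged.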

For example, to train the model on MNIST with the default settings:

accelerate-launch train.py -c ./configs/ign_mnist.yaml

Or if you want to try out Gaussian noise:

accelerate-launch train.py -c ./configs/ign_mnist.yaml --train.noise_type gaussian

To sample from a trained model:

accelerate-launch sample.py -c CONFIG \
    --weights WEIGHTS \
    --n_samples N_SAMPLES \
    --save_dir SAVE_DIR \
    [--repeat REPEAT] \
    [--batch_size BATCH_SIZE]

- Use `--repeat REPEAT` to specify the number of times to apply the model. For example, `--repeat 2` means $f(f(z))$.
- Adjust the batch size on each device with `--batch_size BATCH_SIZE`.
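The effect of `--repeat` can be illustrated with a toy example. The sketch below composes a function with itself a given number of times, using a simple clamp as a stand-in for the trained model. `apply_repeatedly` and `clamp01` are hypothetical names and are not part of `sample.py`.

```python
def apply_repeatedly(f, z, repeat=1):
    """Apply f to z `repeat` times, e.g. repeat=2 gives f(f(z))."""
    x = z
    for _ in range(repeat):
        x = f(x)
    return x


def clamp01(values):
    """Toy idempotent map standing in for the model: clamp each value to [0, 1]."""
    return [min(1.0, max(0.0, v)) for v in values]


z = [-0.5, 0.3, 1.7]
once = apply_repeatedly(clamp01, z, repeat=1)   # f(z)
twice = apply_repeatedly(clamp01, z, repeat=2)  # f(f(z))
```

Because the clamp is exactly idempotent, `twice` equals `once`. A trained network is only approximately idempotent, so comparing outputs at different `--repeat` values is one way to see how close it gets.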

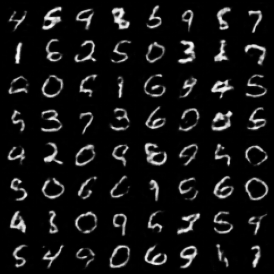

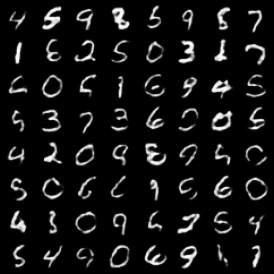

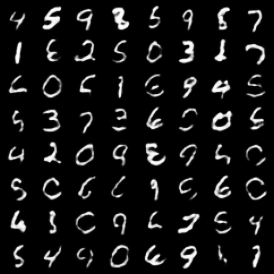

| z | f(z) | f(f(z)) | f(f(f(z))) |
|---|---|---|---|