Liu, Guilin, Fitsum A. Reda, Kevin J. Shih, Ting-Chun Wang, Andrew Tao, and Bryan Catanzaro. "Image inpainting for irregular holes using partial convolutions." In Proceedings of the European Conference on Computer Vision (ECCV), pp. 85-100. 2018.

Unofficial PyTorch implementation of Partial Convolution for image inpainting.
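The core operation from the cited paper can be sketched in plain Python as follows. This is a minimal single-channel illustration, not this repo's layer, which works on batched multi-channel tensors. It assumes stride 1, an odd square kernel applied as a cross-correlation (as deep-learning frameworks do), a mask in which 1 marks valid pixels, and zero padding whose positions count as holes. `partial_conv2d` is a hypothetical name.

```python
from typing import List, Tuple

Grid = List[List[float]]


def partial_conv2d(x: Grid, mask: Grid, weight: Grid, bias: float = 0.0) -> Tuple[Grid, Grid]:
    """Single-channel partial convolution with stride 1 and an odd square kernel.

    mask[i][j] is 1 for a valid pixel and 0 for a hole. Positions outside the image
    (zero padding) are treated as holes, so they add nothing to either sum.
    """
    h, w = len(x), len(x[0])
    k = len(weight)
    r = k // 2
    window_size = float(k * k)  # sum(1): number of entries in a full window
    out = [[0.0] * w for _ in range(h)]
    new_mask = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0    # W^T (X * M) over the window
            valid = 0.0  # sum(M) over the window
            for di in range(k):
                for dj in range(k):
                    yi, xj = i + di - r, j + dj - r
                    if 0 <= yi < h and 0 <= xj < w:
                        m = mask[yi][xj]
                        acc += weight[di][dj] * x[yi][xj] * m
                        valid += m
            if valid > 0:
                # Rescale by sum(1) / sum(M) so partially covered windows are not dimmed.
                out[i][j] = acc * (window_size / valid) + bias
                new_mask[i][j] = 1.0
            # else: the window lies entirely in the hole; output and new mask stay 0.
    return out, new_mask


image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
mask = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]  # centre pixel is a hole
ones = [[1.0] * 3 for _ in range(3)]
out, new_mask = partial_conv2d(image, mask, ones)
print(out[1][1])  # 45.0 (sum of the 8 valid neighbours, 40, scaled by 9/8)
```

For a window with no holes the scale factor sum(1)/sum(M) is 1, so the layer acts as an ordinary convolution there. With stride 1 and a 3x3 kernel, the updated mask shrinks the hole by one pixel along its border at each layer.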

Clone this repo:

```shell
git clone https://github.com/xyfJASON/PartialConv-Inpainting-pytorch.git
cd PartialConv-Inpainting-pytorch
```

Create and activate a conda environment:

```shell
conda create -n partialconv python=3.9
conda activate partialconv
```

Install dependencies:

```shell
pip install -r requirements.txt
```

The code uses a pretrained VGG16 model, which can be downloaded with:

```shell
mkdir -p ~/.cache/torch/hub/checkpoints
wget https://download.pytorch.org/models/vgg16-397923af.pth -O ~/.cache/torch/hub/checkpoints/vgg16-397923af.pth
```

Please refer to doc.

```shell
accelerate-launch train.py [--finetune] [-c CONFIG] [-e EXP_DIR] [--xxx.yyy zzz ...]
```

- This repo uses the 🤗 Accelerate library for multi-GPU/fp16 support. Please read the documentation on how to launch the scripts on different platforms.
- Results (logs, checkpoints, TensorBoard, etc.) of each run will be saved to `EXP_DIR`. If `EXP_DIR` is not specified, they will be saved to `runs/exp-{current time}/`.
- To modify some configuration items without creating a new configuration file, you can pass `--key value` pairs to the script.
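Dotted override keys such as `--train.pretrained` (used in the fine-tuning example) address nested entries of the YAML config. The repo's actual config loader is not shown here, so the following is only a sketch of one way such overrides could be applied, assuming the config has been loaded into a nested `dict`. `apply_overrides` is a hypothetical helper, and parsing values with `ast.literal_eval` (falling back to a plain string) is an assumption.

```python
import ast
from typing import Any, Dict, List


def _parse_value(text: str) -> Any:
    """Interpret numbers, booleans, lists, etc.; fall back to a plain string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text


def apply_overrides(config: Dict[str, Any], argv: List[str]) -> Dict[str, Any]:
    """Apply `--a.b.c value` pairs to a nested dict in place and return it."""
    if len(argv) % 2 != 0:
        raise ValueError("overrides must come in --key value pairs")
    for flag, raw in zip(argv[::2], argv[1::2]):
        if not flag.startswith("--"):
            raise ValueError(f"expected --key, got {flag!r}")
        *parents, leaf = flag[2:].split(".")
        node = config
        for key in parents:
            node = node.setdefault(key, {})  # create missing sections on the way
        node[leaf] = _parse_value(raw)
    return config


config = {"train": {"lr": 0.0002, "pretrained": None}}
apply_overrides(config, ["--train.pretrained", "./path/to/model.pt", "--train.lr", "5e-5"])
print(config)  # {'train': {'lr': 5e-05, 'pretrained': './path/to/model.pt'}}
```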

For example, to train the model on CelebA-HQ (256x256) using mask images from a specified directory:

```shell
# training
python train.py -c ./configs/celebahq-256-maskdir.yaml

# fine-tuning
python train.py --finetune -c ./configs/celebahq-256-maskdir-finetune.yaml --train.pretrained ./runs/exp-xxx/ckpt/step199999/model.pt
```

To evaluate L1 error, PSNR and SSIM:

```shell
accelerate-launch evaluate.py -c CONFIG \
                  --model_path MODEL_PATH \
                  [--n_eval N_EVAL] \
                  [--micro_batch MICRO_BATCH]
```

- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`.
- All metrics are evaluated on the composited output, i.e., pixels outside the masked area are replaced by the ground truth.
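The compositing step and two of the metrics can be sketched as below. This assumes pixel values in [0, 1], a binary mask in which 1 marks known pixels, and L1 averaged over all pixels of the image; `evaluate.py` may use a different scale, mask convention or averaging. Images are flattened to lists for brevity, SSIM is omitted because it needs a windowed implementation, and `composite`, `l1_error` and `psnr` are hypothetical names.

```python
import math
from typing import List, Sequence


def composite(pred: Sequence[float], gt: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Paste ground-truth pixels back outside the hole (mask == 1 marks known pixels)."""
    return [m * g + (1 - m) * p for p, g, m in zip(pred, gt, mask)]


def l1_error(x: Sequence[float], y: Sequence[float]) -> float:
    """Mean absolute error over all pixels."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


def psnr(x: Sequence[float], y: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    mse = sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


pred = [0.0, 0.5, 1.0, 0.2]
gt = [0.1, 0.5, 0.8, 0.2]
mask = [1, 1, 0, 0]  # first two pixels known, last two in the hole
comp = composite(pred, gt, mask)
print(comp)  # [0.1, 0.5, 1.0, 0.2]
```

Because known pixels are copied from the ground truth, only errors inside the hole contribute, which is why the scores in the table below degrade as the hole ratio grows.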

To calculate the Inception Score, sample images as described below with the `--for_evaluation` argument, then use a tool such as torch-fidelity.
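For intuition, the standard Inception Score formula, IS = exp(E_x KL(p(y|x) || p(y))), can be sketched on precomputed class probabilities. This is not torch-fidelity's implementation: the Inception-v3 network that would produce `probs` is not included, `inception_score` is a hypothetical name, and reporting the population standard deviation over `n_splits` groups is an assumption (tools differ in split count and estimator).

```python
import math
from statistics import mean, pstdev
from typing import Sequence, Tuple


def inception_score(probs: Sequence[Sequence[float]], n_splits: int = 10) -> Tuple[float, float]:
    """Inception Score from per-image class probabilities p(y|x).

    Each row of `probs` must be a probability vector (e.g. a classifier's softmax
    output). Images are split into `n_splits` groups; a group's score is
    exp(mean_x KL(p(y|x) || p(y))) with p(y) the group's marginal distribution.
    Returns the mean and population standard deviation over groups.
    """
    n = len(probs)
    if not 1 <= n_splits <= n:
        raise ValueError("n_splits must be between 1 and the number of images")
    scores = []
    for s in range(n_splits):
        part = probs[s * n // n_splits:(s + 1) * n // n_splits]
        num_classes = len(part[0])
        marginal = [sum(p[c] for p in part) / len(part) for c in range(num_classes)]
        # Terms with p(y|x) = 0 contribute 0 to the KL divergence.
        kl_values = [
            sum(pc * math.log(pc / marginal[c]) for c, pc in enumerate(p) if pc > 0)
            for p in part
        ]
        scores.append(math.exp(mean(kl_values)))
    return mean(scores), pstdev(scores)


# Confident and evenly spread predictions over 4 classes give a score close to 4.
one_hot = [[1.0 if c == i else 0.0 for c in range(4)] for i in range(4)]
print(inception_score(one_hot, n_splits=1))  # approximately (4.0, 0.0)
```

With real data, torch-fidelity's `torch_fidelity.calculate_metrics(input1=..., isc=True)` computes the score end to end on a folder of images; see its documentation for the exact arguments and defaults.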

```shell
accelerate-launch sample.py -c CONFIG \
                  --model_path MODEL_PATH \
                  --n_samples N_SAMPLES \
                  --save_dir SAVE_DIR \
                  [--micro_batch MICRO_BATCH] \
                  [--for_evaluation]
```

- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`. Sampling speed depends on your system, and a larger batch size does not necessarily lead to faster sampling.
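Since `--micro_batch` is a per-device batch size, a run with several processes presumably handles up to (number of processes) × micro_batch samples per step. The sketch below shows one way `N_SAMPLES` could be divided, assuming each process takes a contiguous slice of sample indices; `sample.py` may split the work differently, and `plan_micro_batches` is a hypothetical helper.

```python
import math
from typing import List


def plan_micro_batches(n_samples: int, num_processes: int, micro_batch: int) -> List[List[range]]:
    """Split sample indices across processes, then into micro-batches per process.

    Process p gets a contiguous slice of about n_samples / num_processes indices,
    which it handles `micro_batch` at a time; the last batch may be smaller.
    """
    if micro_batch < 1 or num_processes < 1:
        raise ValueError("micro_batch and num_processes must be positive")
    per_proc = math.ceil(n_samples / num_processes)
    plan = []
    for p in range(num_processes):
        start, stop = p * per_proc, min((p + 1) * per_proc, n_samples)
        plan.append([range(i, min(i + micro_batch, stop)) for i in range(start, stop, micro_batch)])
    return plan


for rank, batches in enumerate(plan_micro_batches(10, num_processes=2, micro_batch=4)):
    print(rank, [len(b) for b in batches])
# 0 [4, 1]
# 1 [4, 1]
```

The small trailing batches illustrate one reason why a larger `--micro_batch` does not automatically make sampling faster.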

Quantitative results:

Evaluated on the CelebA-HQ test set (2824 images).

| Mask type and hole ratio | L1 | PSNR (dB) | SSIM | Inception Score |
|---|---|---|---|---|
| irregular (0.01, 0.1] | 0.0019 | 39.2560 | 0.9840 | 3.6035 ± 0.0927 |
| irregular (0.1, 0.2] | 0.0055 | 33.0008 | 0.9558 | 3.5556 ± 0.1290 |
| irregular (0.2, 0.3] | 0.0105 | 29.5313 | 0.9184 | 3.4656 ± 0.1437 |
| irregular (0.3, 0.4] | 0.0165 | 26.9910 | 0.8774 | 3.3983 ± 0.1238 |
| irregular (0.4, 0.5] | 0.0234 | 25.0421 | 0.8335 | 3.2684 ± 0.0665 |
| irregular (0.5, 0.6] | 0.0419 | 21.5027 | 0.7514 | 3.0239 ± 0.1464 |
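The rows group masks by interval labels such as (0.1, 0.2]. Assuming these are the fraction of image pixels covered by holes, with 0 marking a hole and 1 a valid pixel, assigning a mask to a row can be sketched as follows. `hole_ratio` and `ratio_bucket` are hypothetical helpers, not functions from this repo.

```python
from typing import Optional, Sequence, Tuple

# Half-open intervals (low, high] matching the row labels of the results table.
BUCKETS: Tuple[Tuple[float, float], ...] = (
    (0.01, 0.1), (0.1, 0.2), (0.2, 0.3), (0.3, 0.4), (0.4, 0.5), (0.5, 0.6),
)


def hole_ratio(mask: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels that are holes, assuming 0 = hole and 1 = valid."""
    total = sum(len(row) for row in mask)
    holes = sum(row.count(0) for row in mask)
    return holes / total


def ratio_bucket(ratio: float) -> Optional[Tuple[float, float]]:
    """Return the (low, high] interval containing `ratio`, or None if it fits none."""
    for low, high in BUCKETS:
        if low < ratio <= high:
            return (low, high)
    return None


# 10x10 mask whose top-left 5x5 block is a hole: 25 of 100 pixels.
mask = [[0] * 5 + [1] * 5 for _ in range(5)] + [[1] * 10 for _ in range(5)]
r = hole_ratio(mask)
print(r, ratio_bucket(r))  # 0.25 (0.2, 0.3)
```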

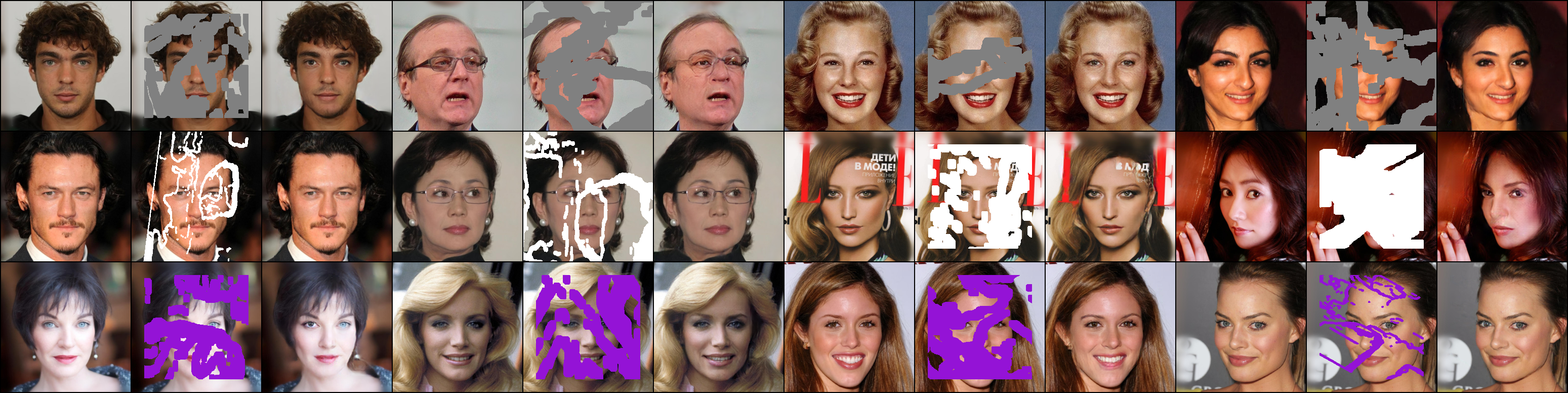

Visualization:

The content inside the masked area does not affect the output, which confirms that the network conditions only on valid (unmasked) pixels, as partial convolution is designed to do.
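An informal argument for this property, assuming the network touches its input only through partial convolutions with the paper's update rule (mask value 1 = valid) and that the remaining operations (activations, normalization, skip connections) act on features produced this way. At each sliding-window position, a layer computes

$$
x' =
\begin{cases}
\mathbf{W}^{\top}(\mathbf{X} \odot \mathbf{M})\,\dfrac{\operatorname{sum}(\mathbf{1})}{\operatorname{sum}(\mathbf{M})} + b, & \operatorname{sum}(\mathbf{M}) > 0,\\
0, & \text{otherwise},
\end{cases}
\qquad
m' =
\begin{cases}
1, & \operatorname{sum}(\mathbf{M}) > 0,\\
0, & \text{otherwise}.
\end{cases}
$$

The mask update never reads the features, so every layer's mask $\mathbf{M}^{(l)}$ depends only on the input mask $\mathbf{M}^{(0)}$, and each layer reads its features $\mathbf{X}^{(l)}$ only through $\mathbf{X}^{(l)} \odot \mathbf{M}^{(l)}$. Since $\mathbf{X}^{(0)} \odot \mathbf{M}^{(0)}$ is zero on the hole whatever the hole contains, induction over $l$ shows that no layer's output, and hence the final prediction, depends on the hole content.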