This is a distributed, fault-tolerant, reliable, simplified and easy-to-learn MapReduce framework written in Rust.

- The user runtime uses `tokio`, an asynchronous runtime for Rust, which keeps it efficient in I/O-bound scenarios like MapReduce
- The RPC framework is `tarpc`; nodes communicate over `TCP` connections
- You may start the MapReduce process with one `Coordinator` and multiple `Worker`(s); the number of worker processes can be specified by the user
- The default application is a word count application, which uses the provided `pg-*.txt` files under the `resource` directory to count the total words and their frequencies
- You can define your own map and reduce functions in `src/mr/function.rs` and `src/mr/worker.rs`; see the comments for details
- You may want to change the `server_address` to suit your environment; check `src/bin/mrcoordinator.rs` and `src/bin/mrworker.rs` for details
- If one or more `Worker`(s) crash during the MapReduce process, the fault-tolerance mechanism (heartbeat-like leases on each task, which let the `Coordinator` detect when a `Worker` has gone offline) allows the MapReduce to keep progressing while preserving correctness
- If the `Coordinator` crashes during the MapReduce process, the `Worker`(s) wait for the `Coordinator` to restart, and recovery is driven by the underlying `Write Ahead Log` on the `Coordinator` side
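
The lease idea behind the fault-tolerance mechanism can be sketched roughly as follows. This is a minimal illustration only, not the code in this repository: `LeaseTable`, `TaskState`, the method names, and the lease length are all hypothetical, and the real `Coordinator` may track tasks quite differently.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// State of one task as seen by the coordinator (hypothetical type).
#[derive(Debug, Clone, PartialEq)]
enum TaskState {
    Idle,
    /// Assigned to a worker; the lease is valid until `expires_at`.
    Leased { worker_id: u32, expires_at: Instant },
    Done,
}

/// Hypothetical lease table kept by the coordinator.
struct LeaseTable {
    lease: Duration,
    tasks: HashMap<u32, TaskState>,
}

impl LeaseTable {
    fn new(task_count: u32, lease: Duration) -> Self {
        let tasks = (0..task_count).map(|id| (id, TaskState::Idle)).collect();
        Self { lease, tasks }
    }

    /// Hand the lowest-numbered idle task to `worker_id`, if there is one.
    fn assign(&mut self, worker_id: u32, now: Instant) -> Option<u32> {
        let id = self
            .tasks
            .iter()
            .filter(|(_, state)| **state == TaskState::Idle)
            .map(|(id, _)| *id)
            .min()?;
        let expires_at = now + self.lease;
        self.tasks.insert(id, TaskState::Leased { worker_id, expires_at });
        Some(id)
    }

    /// Heartbeat-like renewal: extend the lease only if `worker_id` still holds it.
    fn renew(&mut self, task_id: u32, worker_id: u32, now: Instant) -> bool {
        match self.tasks.get_mut(&task_id) {
            Some(TaskState::Leased { worker_id: holder, expires_at }) if *holder == worker_id => {
                *expires_at = now + self.lease;
                true
            }
            _ => false,
        }
    }

    /// Mark a task done; reports from a worker that lost the lease are ignored.
    fn complete(&mut self, task_id: u32, worker_id: u32) -> bool {
        let held = matches!(
            self.tasks.get(&task_id),
            Some(TaskState::Leased { worker_id: holder, .. }) if *holder == worker_id
        );
        if held {
            self.tasks.insert(task_id, TaskState::Done);
        }
        held
    }

    /// Return every expired lease to `Idle` so the task can be reassigned.
    fn reclaim_expired(&mut self, now: Instant) -> Vec<u32> {
        let mut reclaimed = Vec::new();
        for (id, state) in self.tasks.iter_mut() {
            let expired =
                matches!(&*state, TaskState::Leased { expires_at, .. } if *expires_at <= now);
            if expired {
                *state = TaskState::Idle;
                reclaimed.push(*id);
            }
        }
        reclaimed.sort_unstable();
        reclaimed
    }
}
```

In this sketch a crashed `Worker` simply stops renewing, its lease runs out, and `reclaim_expired` puts the task back in the idle pool. Rejecting completion reports from a worker that no longer holds the lease is one way to keep a slow or revived worker from overwriting a reassigned task.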

- Please make sure you are in the `src` directory; if not (assuming you are in the root directory), run `cd src`
- To start the MapReduce with the default settings, run `bash start.sh` or `zsh start.sh`, or whichever command runs the script in your environment. To stop the MapReduce process, run `stop.sh` in the same way. If permission is denied, run `chmod +x <script name>` to grant execute permission
- You can start the `Coordinator` by running `cargo run --bin mrcoordinator -- <map tasks number> <reduce tasks number> <worker number>`
- Then start one or more `Worker`(s) in separate terminal windows by running `cargo run --bin mrworker -- <map tasks number> <reduce tasks number>`; make sure the server has been started before you start the `Worker`
- The number of `Worker`(s) must exactly match the number specified when you started the server; otherwise the MapReduce process will either hang (too few `Worker`(s)) or panic (the `Worker`(s) exceed the preset limit)
- To check the final result, first run `make clean` to remove all intermediate files, then run `make generate` to generate the sorted `final.txt`. Feel free to change anything in the provided `Makefile` to suit your taste!
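
To give a feel for what the default word count application computes before the result lands in `final.txt`, here is a minimal single-process sketch of a word count map and reduce pair. It is an assumption-laden illustration: `KeyValue`, `map_fn`, `reduce_fn`, and `run_word_count` are hypothetical names, and the actual signatures in `src/mr/function.rs` may differ. The in-memory grouping stands in for the intermediate files and shuffle step of the real framework.

```rust
use std::collections::BTreeMap;

/// A key/value pair emitted by the map phase (hypothetical type).
#[derive(Debug, Clone, PartialEq)]
struct KeyValue {
    key: String,
    value: String,
}

/// Map: emit ("word", "1") for every run of alphabetic characters.
/// Words are kept case-sensitive in this sketch.
fn map_fn(contents: &str) -> Vec<KeyValue> {
    contents
        .split(|c: char| !c.is_alphabetic())
        .filter(|word| !word.is_empty())
        .map(|word| KeyValue {
            key: word.to_string(),
            value: "1".to_string(),
        })
        .collect()
}

/// Reduce: the count for one word is the number of values emitted for it.
fn reduce_fn(_key: &str, values: &[String]) -> String {
    values.len().to_string()
}

/// Group map output by key (sorted, via `BTreeMap`) and reduce each group.
fn run_word_count(inputs: &[&str]) -> Vec<(String, String)> {
    let mut grouped: BTreeMap<String, Vec<String>> = BTreeMap::new();
    for input in inputs {
        for kv in map_fn(input) {
            grouped.entry(kv.key).or_default().push(kv.value);
        }
    }
    grouped
        .into_iter()
        .map(|(key, values)| {
            let count = reduce_fn(&key, &values);
            (key, count)
        })
        .collect()
}
```

Calling `run_word_count(&["the quick fox", "the lazy dog, the end"])` yields one `(word, count)` pair per distinct word, sorted by word, with `the` counted three times.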

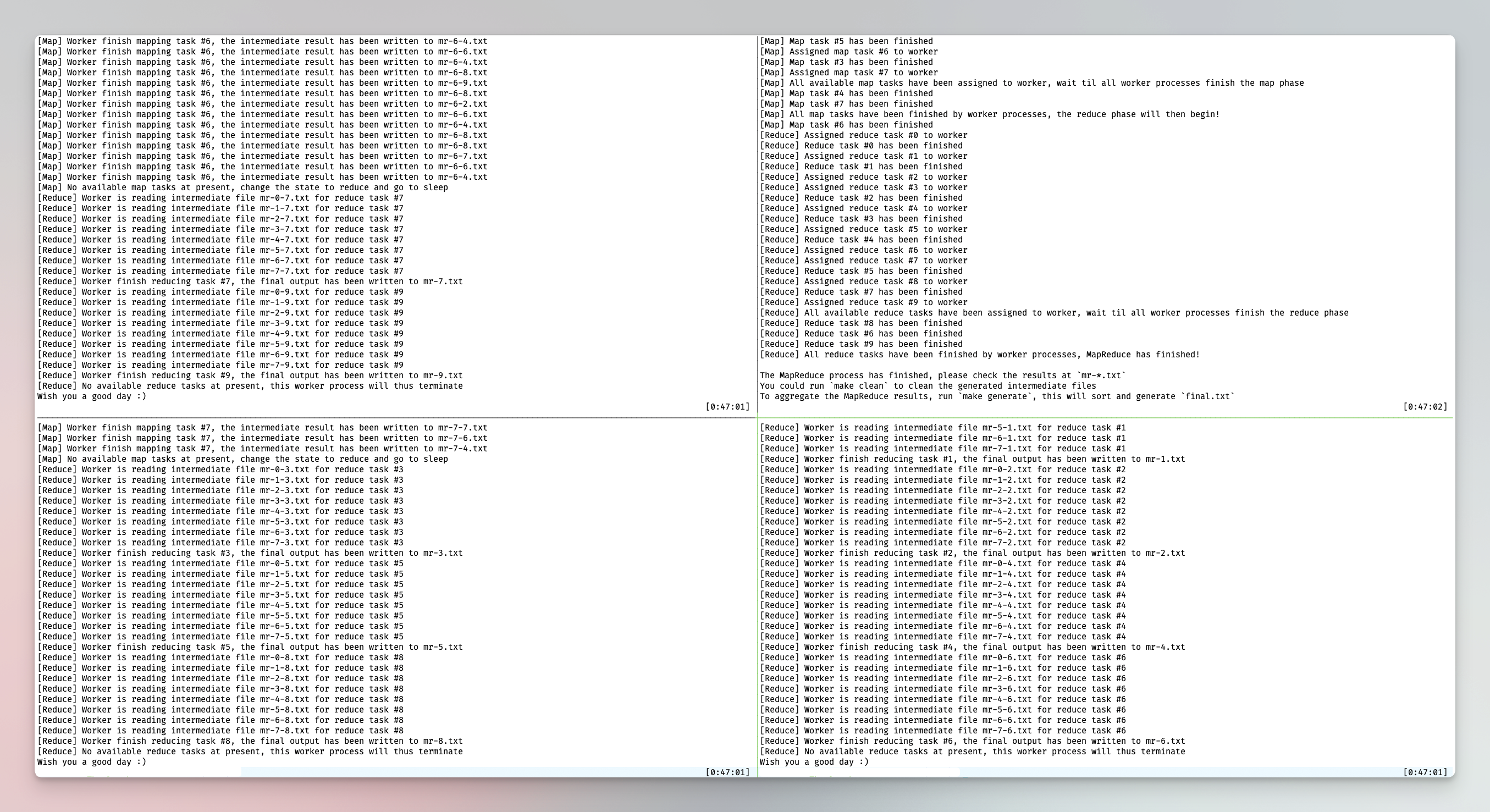

A typical MapReduce run looks like the image above: the `Coordinator` (upper right) is running with `cargo run --bin mrcoordinator -- 8 10 3`, and the three `Worker`s are each running with `cargo run --bin mrworker -- 8 10`.

- Garbage collector
- Improve the logging method and add different logging levels
- Parallel processing within each worker, i.e., use `rayon` to achieve parallel data processing
- Better serialization and deserialization format, especially for intermediate files
- More test cases to ensure overall correctness and improve test coverage