This is the official implementation of "DiJiang: Efficient Large Language Models through Compact Kernelization", a novel Frequency Domain Kernelization approach to linear attention. DiJiang is a mythical monster in Chinese tradition. It has six legs and four wings, and it is known for its ability to move quickly.
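The general idea of kernelized linear attention can be illustrated with a minimal NumPy sketch. It assumes a simplified, hypothetical feature map φ(x) = exp(Cx), where C is the orthonormal DCT-II matrix; this is a stand-in chosen only to make the frequency-domain idea concrete, not DiJiang's actual kernel (see the paper and the training code for that). Because φ produces positive features, causal attention can be computed with running sums, so the cost grows linearly in the sequence length L instead of building an explicit L × L score matrix. The helper names are illustrative, and the quadratic reference exists only to check that both paths agree.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C of shape (n, n), so that C @ x is the DCT of x."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # the DC row uses a different scale to stay orthonormal
    return c


def feature_map(x: np.ndarray, c: np.ndarray) -> np.ndarray:
    """Hypothetical frequency-domain feature map: DCT each row, then exp() for positivity."""
    return np.exp(x @ c.T)


def causal_linear_attention(q, k, v):
    """Causal attention via running sums of phi(k_j) v_j^T and phi(k_j); linear in L."""
    c = dct_matrix(q.shape[-1])
    phi_q, phi_k = feature_map(q, c), feature_map(k, c)  # (L, d), (L, d)
    kv = np.cumsum(phi_k[:, :, None] * v[:, None, :], axis=0)  # (L, d, d_v) prefix sums
    z = np.cumsum(phi_k, axis=0)  # (L, d) prefix sums for the normalizer
    num = np.einsum("lm,lmd->ld", phi_q, kv)
    den = np.einsum("lm,lm->l", phi_q, z)
    return num / den[:, None]


def causal_quadratic_reference(q, k, v):
    """The same kernel evaluated as an explicit L x L matrix, used only for checking."""
    c = dct_matrix(q.shape[-1])
    scores = feature_map(q, c) @ feature_map(k, c).T  # (L, L)
    scores = np.tril(scores)  # causal mask: token i only attends to j <= i
    return (scores / scores.sum(axis=1, keepdims=True)) @ v


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((6, 8)) * 0.5 for _ in range(3))
out = causal_linear_attention(q, k, v)
print(out.shape)  # (6, 8)
print(np.allclose(out, causal_quadratic_reference(q, k, v)))  # True
```

Regrouping the sum as φ(q_i)ᵀ(Σ_j φ(k_j) v_jᵀ) is what removes the L × L matrix; the choice of feature map only changes which kernel is being approximated.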

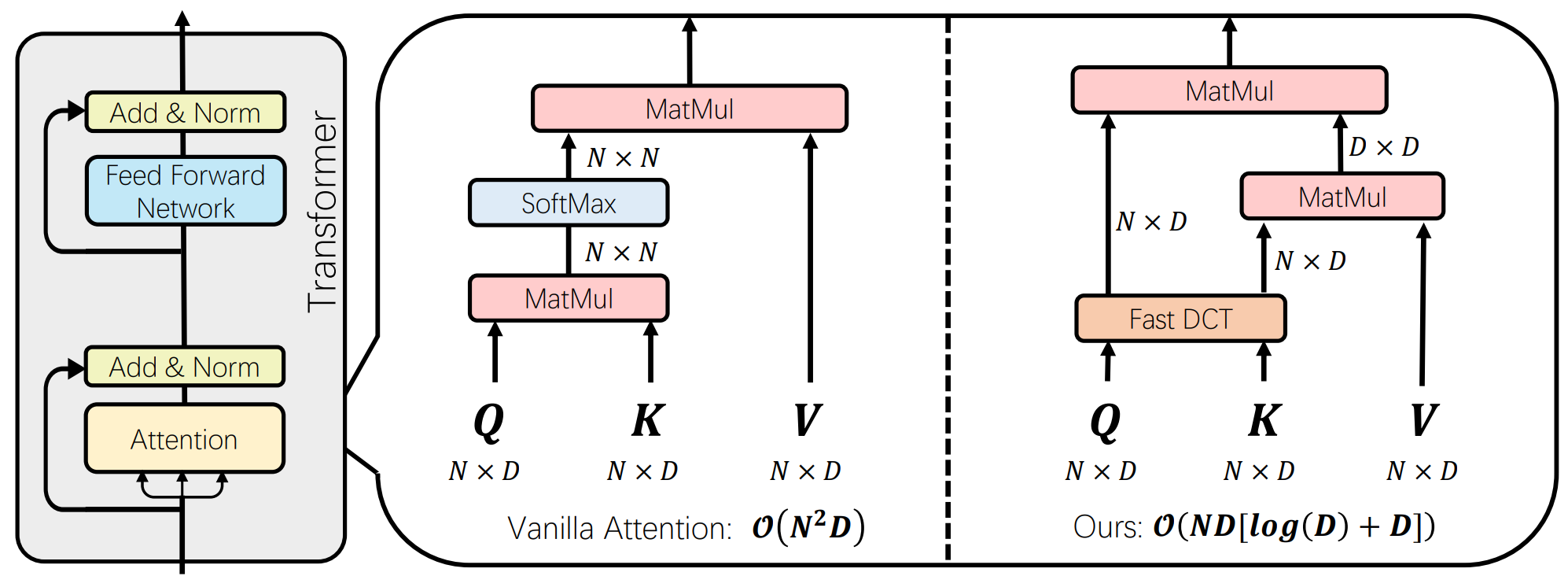

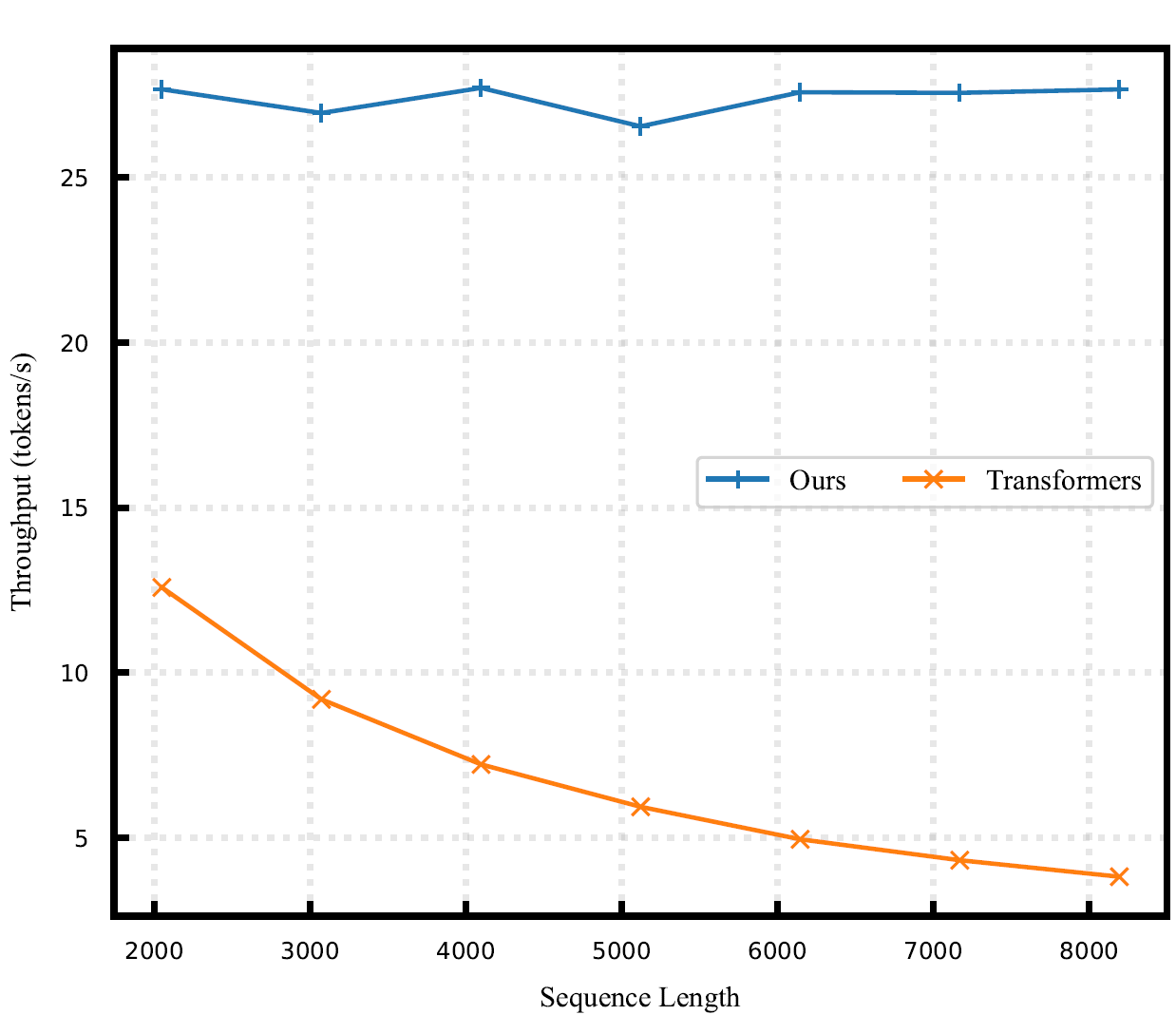

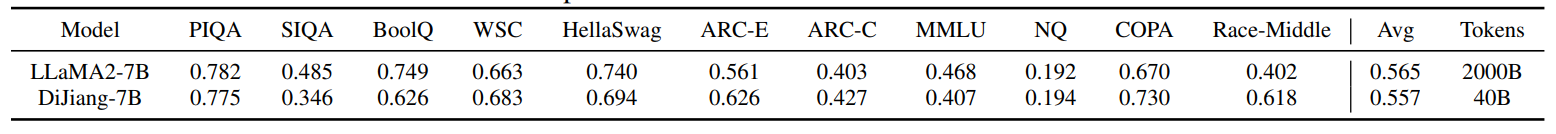

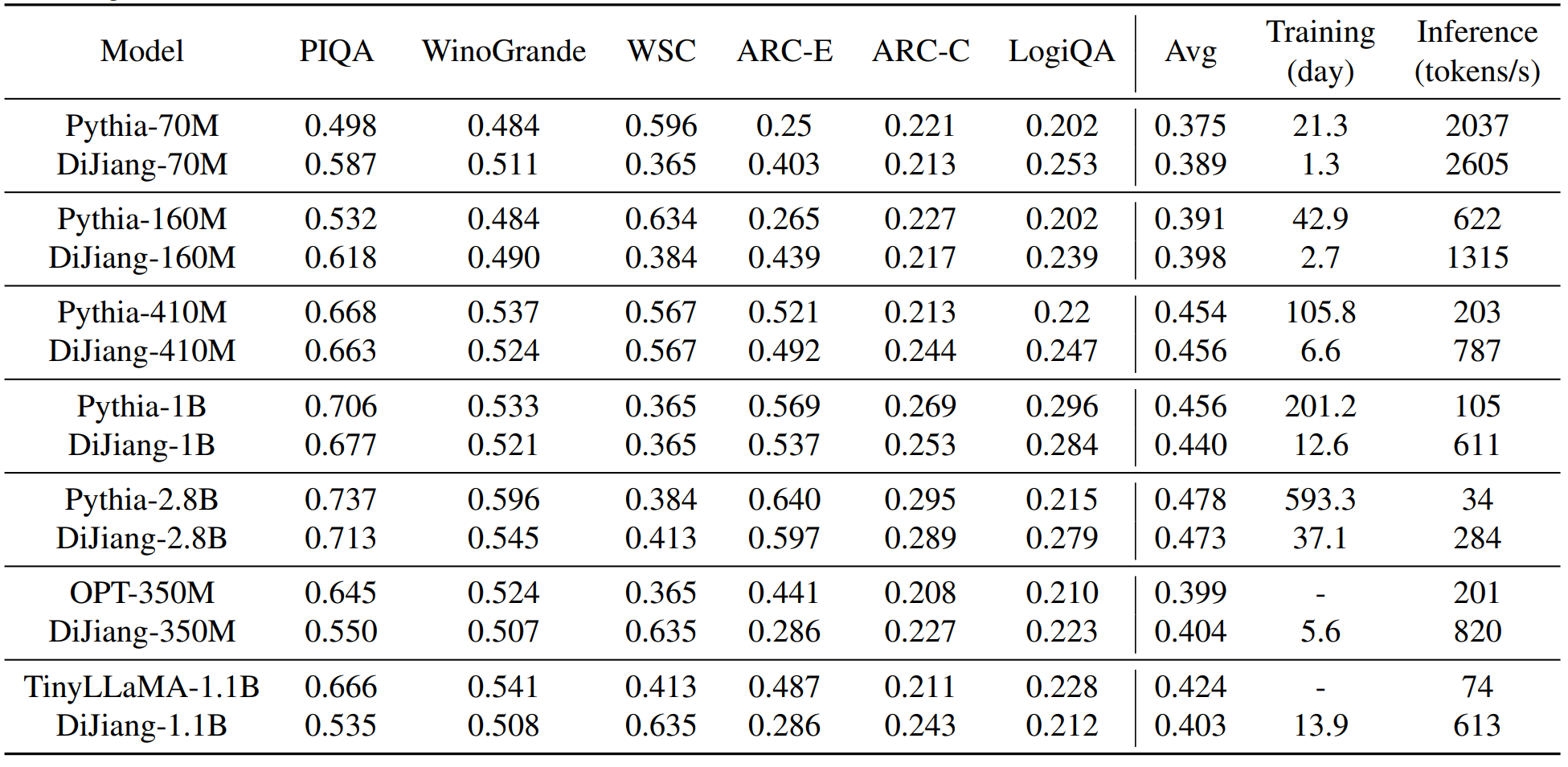

🚀 In our work "DiJiang", we propose a fast linear attention approach that leverages the Fast Discrete Cosine Transform (Fast DCT). Theoretical analyses and extensive experiments demonstrate the speed advantage of our method.
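A Fast DCT computes the same result as multiplying by a dense N × N DCT matrix, but in O(N log N) time. The sketch below shows one standard way to do this with a single length-N FFT (Makhoul's reordering); it is a generic illustration of the transform, not the kernel used in this repository, and the function names are hypothetical. In practice, SciPy's `scipy.fft.dct(x, type=2, norm="ortho")` computes the same orthonormal DCT-II.

```python
import numpy as np


def dct2_ortho_fft(x: np.ndarray) -> np.ndarray:
    """Orthonormal DCT-II along the last axis in O(N log N) via one length-N FFT.

    Makhoul's reordering: even-indexed samples in order, then odd-indexed samples
    reversed. The DCT is the real part of the FFT after a per-frequency phase shift.
    """
    n = x.shape[-1]
    v = np.concatenate([x[..., ::2], x[..., 1::2][..., ::-1]], axis=-1)
    k = np.arange(n)
    twiddle = np.exp(-1j * np.pi * k / (2 * n))
    # Real part equals sum_n x[n] * cos(pi * k * (2n + 1) / (2N)).
    out = np.real(np.fft.fft(v, axis=-1) * twiddle)
    scale = np.full(n, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)  # orthonormal scaling for the DC term
    return out * scale


def dct2_ortho_direct(x: np.ndarray) -> np.ndarray:
    """O(N^2) reference: multiply by the explicit orthonormal DCT-II matrix."""
    n = x.shape[-1]
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return x @ c.T


x = np.random.default_rng(0).standard_normal((4, 16))
print(np.allclose(dct2_ortho_fft(x), dct2_ortho_direct(x)))  # True
```

The reordering works for both even and odd N, and a constant input maps entirely onto the DC coefficient (for example, `np.ones(4)` becomes `[2, 0, 0, 0]`).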

- Download Dataset: We used the Pile dataset for training; see The Pile: An 800GB Dataset of Diverse Text for Language Modeling (arxiv.org).

- Check CUDA version: CUDA >= 11.7 is suggested.

- Install required packages:

  `pip install -r requirements.txt`
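The suggested CUDA version can be checked from Python. The sketch below assumes PyTorch is the framework in use (an assumption; check `requirements.txt`). Note that `torch.version.cuda` reports the CUDA version PyTorch was built against, which can differ from the system toolkit reported by `nvcc --version`. The helper `cuda_version_ok` is hypothetical.

```python
def cuda_version_ok(version, minimum=(11, 7)):
    """Return True if a CUDA version string such as "11.8" or "12.1" is >= minimum."""
    if version is None:  # torch.version.cuda is None on CPU-only builds
        return False
    major_minor = tuple(int(part) for part in version.split(".")[:2])
    return major_minor >= minimum


if __name__ == "__main__":
    try:
        import torch  # assumption: PyTorch is installed
    except ImportError:
        print("PyTorch is not installed; cannot query its CUDA build")
    else:
        cuda = torch.version.cuda
        print(f"PyTorch built with CUDA {cuda}; cuda>=11.7: {cuda_version_ok(cuda)}")
        print(f"GPUs visible: {torch.cuda.device_count()}")
```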

A training script is provided for reference and can be launched with `bash run_dijiang.sh`.

Important arguments to set:

- `--model_name_or_path`: path to the HuggingFace model
- `--dataset_dir`: path to the original Pile dataset
- `--data_cache_dir`: path to the cached dataset (if already cached); the cache is stored in this directory
- `--per_device_train_batch_size`: global batch size // device count
- `--output_dir`: directory to store the trained model
- `--logging_dir`: directory to store TensorBoard logs
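The `--per_device_train_batch_size` value is plain integer arithmetic on the global batch size. The sketch below computes it and assembles the documented flags into an argument list; the helper names, paths, and batch sizes are hypothetical, and how `run_dijiang.sh` actually forwards these flags is defined in the script itself. On a real machine, `device_count` would typically come from something like `torch.cuda.device_count()`.

```python
def per_device_batch_size(global_batch_size: int, device_count: int) -> int:
    """global_batchsize // device_count, refusing splits that would silently drop samples."""
    if device_count <= 0:
        raise ValueError("device_count must be positive")
    if global_batch_size % device_count:
        raise ValueError(f"{global_batch_size} is not divisible by {device_count} devices")
    return global_batch_size // device_count


def build_training_args(model_path, dataset_dir, cache_dir, output_dir, logging_dir,
                        global_batch_size, device_count):
    """Assemble the documented flags as a CLI argument list (hypothetical helper)."""
    return [
        "--model_name_or_path", model_path,
        "--dataset_dir", dataset_dir,
        "--data_cache_dir", cache_dir,
        "--per_device_train_batch_size",
        str(per_device_batch_size(global_batch_size, device_count)),
        "--output_dir", output_dir,
        "--logging_dir", logging_dir,
    ]


args = build_training_args(
    model_path="path/to/hf_model",  # hypothetical placeholder paths
    dataset_dir="path/to/pile",
    cache_dir="path/to/pile_cache",
    output_dir="outputs/dijiang",
    logging_dir="outputs/dijiang/tb",
    global_batch_size=256,  # hypothetical values: 256 // 8 = 32 per device
    device_count=8,
)
print(" ".join(args))
```

Raising on a non-divisible split is a design choice of this sketch: with plain `//`, a global batch of 100 on 8 devices would quietly train with 96 samples per step.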

- Training code for DiJiang

- Better inference code

- Inference models

```bibtex
@misc{chen2024dijiang,
  title={DiJiang: Efficient Large Language Models through Compact Kernelization},
  author={Hanting Chen and Zhicheng Liu and Xutao Wang and Yuchuan Tian and Yunhe Wang},
  year={2024},
  eprint={2403.19928},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

We acknowledge the authors of the following repos: