This project implements and deploys several AI course Recommender Systems using Streamlit. The final deployment can be seen here:

https://ai-course-recommender-demo.herokuapp.com/

The application is the final/capstone project of the IBM Machine Learning Professional Certificate offered by IBM & Coursera; check my class notes for more information.

All in all, the following models are created:

- Course Similarity Model

- User Profile Model

- Clustering Model

- Clustering with PCA

- KNN Model with Course Ratings

- NMF Model

- Neural Network (NN)

- Regression with NN Embedding Features

- Classification with NN Embedding Features

The directory of the project consists of the following files:

.

├── .slugignore # Files to ignore in the Heroku deployment

├── Procfile # Heroku deployment: container/service command

├── README.md # This file

├── assets/ # Images and additional material

├── backend.py # Model implementations

├── conda.yaml # Conda environment file

├── data/ # Dataset files

├── notebooks # Research notebooks

│ ├── 01_EDA.ipynb

│ ├── 02_FE.ipynb

│ ├── 03_Content_RecSys.ipynb

│ ├── 04_Collaborative_RecSys.ipynb

│ ├── 05_Collaborative_RecSys_ANN.ipynb

│ └── README.md # Explanations of the notebooks

├── recommender_app.py # Streamlit app, UI

├── requirements.txt # Dependencies for deployment

├── runtime.txt # Python version for the Heroku deployment

├── setup.sh # Setup file for Streamlit, executed in Procfile

└── tests # Tests for the app; TBD

The model implementation is in two places:

- In the notebooks, where research and manual tests are carried out.

- In the files backend.py and recommender_app.py, where the code from the notebooks has been refactored to fit a Streamlit app. The functionality (i.e., models, etc.) is in the first, whereas the UI is defined in the second.

There are at least 3 ways you can explore the project:

- Check the app deployed on Heroku. The URL might need a bit of time until the app awakens; additionally, note that the ANN-based models are disabled on the deployed app because the network exceeds the memory capacity of the eco dyno.

- Run the app locally. To that end, you need to install the requirements.txt and run streamlit, as explained in the next subsection.

- Check the notebooks; they contain all model definitions and tests, and a summary of their contents is provided in notebooks/README.md. The conda.yaml file contains all packages necessary to set up an environment, as explained in the next subsection. Alternatively, you can open the notebooks on Google Colab by clicking on the dedicated icon in the header of each of them.

If you'd like to work with this repository locally, you need to create a custom environment and install the required dependencies. A quick recipe which sets everything up with conda is the following:

# Create environment with YAML, incl. packages

conda env create -f conda.yaml

conda activate course-recommender

If you have a Mac with an M1/M2 chip and you are having issues with the TensorFlow package, please check this link.

The requirements.txt file is for the deployment; if we want to try the app locally in a minimal environment, we can do it as follows:

# Install dependencies

pip install -r requirements.txt

# Run Streamlit app

streamlit run recommender_app.py

Also, as mentioned, note that the notebooks can be opened in Google Colab by clicking on the icon provided in each of them.

The dataset used in the project is composed of the following files, located in data/, and which can be downloaded from the following links:

The course catalogue is contained in course_genre.csv, which consists of 307 course entries, each with 16 features:

- COURSE_ID

- TITLE

- 14 (binary) topic or genre fields: 'Database', 'Python', 'CloudComputing', 'DataAnalysis', 'Containers', 'MachineLearning', 'ComputerVision', 'DataScience', 'BigData', 'Chatbot', 'R', 'BackendDev', 'FrontendDev', 'Blockchain'.

The table course_processed.csv complements course_genre.csv by adding one new field/column associated with each course: DESCRIPTION.

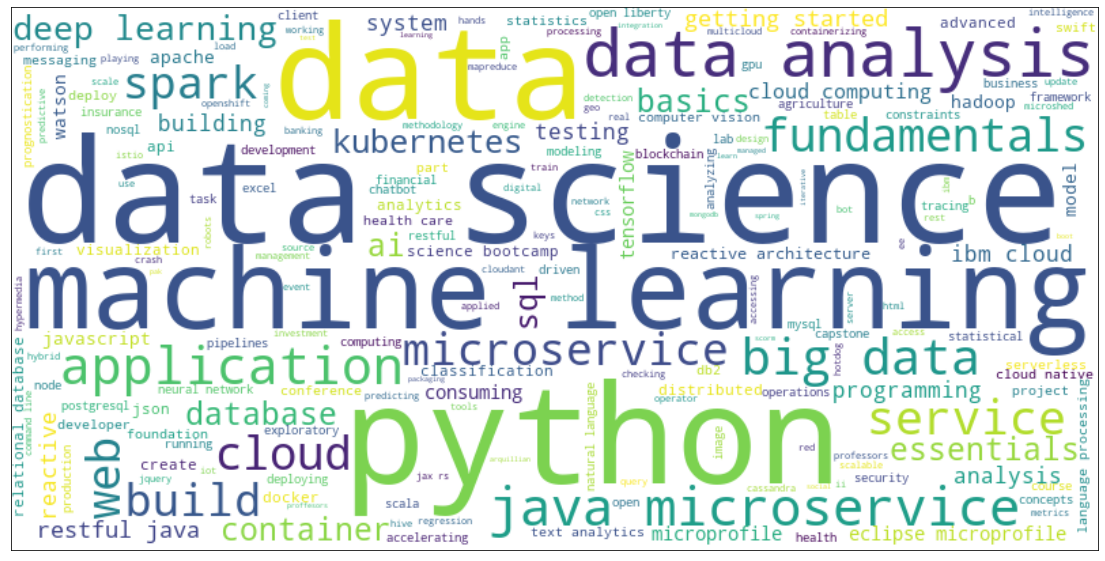

A wordcloud generated from the course titles.

The ratings table is contained in ratings.csv, which consists of 233,306 rating entries, each with 3 features:

- user: student id

- item: course id, equivalent to COURSE_ID in course_genre.csv

- rating: two possible values:

  - 2: the user just audited the course without completing it.

  - 3: the user completed the course and earned a certificate.

  - Other possible values, not present in the dataset: 0 or NA (no exposure), 1 (student browsed the course).

The user profiles are contained in user_profile.csv, which consists of 33,901 user entries, each with 14 feature weights. The weights span from 0 to 63, so I understand they are summed/aggregated values for each student, i.e., the accumulated ratings (2 or 3) of the students for each course feature. In other words, these weights do not seem to be normalized.

Some test user rating data is provided in rs_content_test.csv. In total, the table has 9,402 entries with 3 values each: user (student id), item (COURSE_ID), rating. Altogether, 1,000 unique users are contained, so each user has rated roughly 9 courses on average.

Clearly, the datasets ratings.csv and course_genre.csv are the most important ones; with them, we can

- build user profiles,

- compute user and course similarities,

- infer latent user and course features,

- build recommender models, both content-based and with collaborative filtering.

Altogether, nine recommender systems have been created and deployed; these can be classified into two groups:

- Content-based: systems in which user and course features (i.e., genres/topics) are known and used.

- Collaborative Filtering: systems in which user and course features (i.e., genres/topics) are not known, or they are inferred.

In the following, a few notes are provided on the implemented models.

Course similarities are built from course text descriptions using Bags-of-Words (BoW). A similarity value is the projection of a course descriptor vector in the form of a BoW on another, i.e., the cosine similarity between both. Given the selected courses, the courses with the highest similarity values are returned.
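As a minimal sketch of this idea (not the code from backend.py), the following example builds BoW vectors for three made-up course descriptions and ranks the other courses by cosine similarity. The tokenizer, the course ids and the texts are assumptions chosen for illustration.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Count lowercase whitespace-separated tokens (a deliberately naive tokenizer)."""
    return Counter(text.lower().split())


def cosine_similarity(bow_a, bow_b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(count * bow_b[token] for token, count in bow_a.items())
    norm_a = math.sqrt(sum(c * c for c in bow_a.values()))
    norm_b = math.sqrt(sum(c * c for c in bow_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical course descriptions, not taken from the dataset
courses = {
    "C1": "introduction to machine learning with python",
    "C2": "machine learning with python for data science",
    "C3": "containers and cloud computing basics",
}
bows = {cid: bag_of_words(text) for cid, text in courses.items()}


def most_similar(selected_id, top_n=2):
    """Rank all other courses by their similarity to the selected one."""
    scores = {
        cid: cosine_similarity(bows[selected_id], bow)
        for cid, bow in bows.items()
        if cid != selected_id
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


ranking = most_similar("C1")
```

Here C2 shares four tokens with C1 and is ranked first, whereas C3 shares none and gets a similarity of zero.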

Courses have a genre descriptor vector which encodes all the topics they cover. User profiles can be built by summing the descriptors of the courses a user has rated, each scaled by the rating given. Then, for a target user profile, the unselected courses that are most aligned with it can be found using the cosine similarity (i.e., the normalized dot product) between the profile and the courses. Finally, the courses with the highest scores are recommended.
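The profile construction and scoring can be sketched with NumPy as follows. The genre matrix, the course ids and the ratings are hypothetical; the score used here is the plain dot product, and dividing it by the norms of both vectors would give the cosine similarity.

```python
import numpy as np

# Hypothetical genre matrix: rows are courses, columns are genres
# (e.g., Python, MachineLearning, Containers)
course_ids = ["C1", "C2", "C3", "C4"]
course_genres = np.array([
    [1, 1, 0],  # C1
    [1, 0, 0],  # C2
    [0, 0, 1],  # C3
    [0, 1, 0],  # C4
])

# Ratings of one user for the courses already taken (3 = completed, 2 = audited)
user_ratings = {"C1": 3, "C2": 2}

# Profile = sum of the genre vectors of the rated courses, scaled by the rating
profile = np.zeros(course_genres.shape[1])
for cid, rating in user_ratings.items():
    profile += rating * course_genres[course_ids.index(cid)]

# Score only the unselected courses with the dot product
scores = {
    cid: float(course_genres[i] @ profile)
    for i, cid in enumerate(course_ids)
    if cid not in user_ratings
}
recommended = sorted(scores, key=scores.get, reverse=True)
```

With these values the profile is [5, 3, 0], so C4 (a MachineLearning course) scores 3 and is recommended ahead of C3, which scores 0.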

Courses have a genre descriptor vector which encodes all the topics they cover. User profiles can be built by summing the descriptors of the courses a user has rated, each scaled by the rating given. Then, those users can be clustered according to their profile. This approach recommends the courses that are most popular within the cluster of the user.

Additionally, user profile descriptors can be transformed to their principal components, taking only a subset of them, enough to cover a percentage of the total variance, selected by the user.
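The component selection can be illustrated with a small NumPy sketch that performs PCA through the singular value decomposition and keeps the smallest number of components that reaches a target share of the variance. The random profiles and the 90% target are assumptions; the clustering step that would follow is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical user profiles: 50 users, 14 genre weights each
profiles = rng.integers(0, 10, size=(50, 14)).astype(float)

# PCA via SVD of the mean-centered data
centered = profiles - profiles.mean(axis=0)
_, singular_values, components = np.linalg.svd(centered, full_matrices=False)

# Share of the total variance explained by each principal component
explained = singular_values**2 / np.sum(singular_values**2)
cumulative = np.cumsum(explained)

# Smallest number of components whose cumulative share reaches the target
target = 0.9  # variance percentage, selected by the user in the app
n_components = int(np.searchsorted(cumulative, target) + 1)

# Project the profiles onto the retained components
reduced = centered @ components[:n_components].T
```

The reduced profiles in `reduced` would then be passed to the clustering algorithm instead of the original 14-dimensional vectors.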

Given the ratings dataframe, course columns are treated as course descriptors, i.e., each course is defined by all the ratings provided by the users. With that, a course similarity matrix is built using the cosine similarity. Then, for the set of selected courses, the most similar ones are suggested.
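A minimal NumPy sketch of this item-based approach is shown below; the rating matrix and the course ids are made up, and unrated entries are encoded as 0, which is an assumption of this example.

```python
import numpy as np

# Hypothetical user-by-course rating matrix (0 = not rated)
course_ids = ["C1", "C2", "C3"]
ratings = np.array([
    [3, 3, 0],
    [2, 3, 0],
    [0, 0, 3],
    [3, 2, 0],
], dtype=float)

# Normalize each course column to unit length, then take all pairwise dot products
norms = np.linalg.norm(ratings, axis=0)
norms[norms == 0] = 1.0  # avoid division by zero for courses without ratings
unit_columns = ratings / norms
similarity = unit_columns.T @ unit_columns  # shape: (n_courses, n_courses)


def similar_courses(course_id, top_n=1):
    """Return the courses most similar to the given one, excluding itself."""
    idx = course_ids.index(course_id)
    order = np.argsort(-similarity[idx])
    return [course_ids[j] for j in order if j != idx][:top_n]
```

For example, `similar_courses("C1")` returns C2, because the users who rated C1 also rated C2, while nobody rated both C1 and C3.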

Non-Negative Matrix Factorization is performed: given the ratings dataset which contains the rating of each user for each course (sparse notation), the matrix is factorized as the multiplication of two lower rank matrices. That lower rank is the size of a latent space which represents discovered inherent features (e.g., genres). With the factorization, the ratings of unselected courses are predicted by multiplying the lower rank matrices, which yields the approximate but complete user-course rating table. For more information, check my handwritten explanation on the topic.
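To make the factorization concrete, here is a small NumPy sketch that uses multiplicative updates on a made-up rating matrix. It is a simplification and not the implementation used in the app: unrated entries are treated as zeros rather than as missing values, and the latent size and iteration count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dense user-by-course rating matrix (0 = not rated)
R = np.array([
    [3, 3, 0, 0],
    [2, 3, 0, 0],
    [0, 0, 3, 2],
    [0, 0, 2, 3],
], dtype=float)

n_latent = 2  # size of the latent space (assumed here)
W = rng.random((R.shape[0], n_latent))  # user factors
H = rng.random((n_latent, R.shape[1]))  # course factors
eps = 1e-9  # guards against division by zero

initial_error = np.linalg.norm(R - W @ H)
for _ in range(200):
    # Multiplicative updates keep W and H non-negative
    H *= (W.T @ R) / (W.T @ W @ H + eps)
    W *= (R @ H.T) / (W @ H @ H.T + eps)
final_error = np.linalg.norm(R - W @ H)

R_hat = W @ H  # approximate but complete user-course rating table
```

Each row of `R_hat` contains a predicted score for every course, including those the user has not rated.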

An Artificial Neural Network (ANN) which maps users and courses to ratings is defined and trained. If the user is in the training set, the ratings for unselected courses can be predicted. However, the most interesting part of this approach consists in extracting the user and course embeddings from the ANN for later use. An embedding vector is a continuous N-dimensional representation of a discrete object (e.g., a user).

The user and item embeddings extracted from the ANN are used to build a linear regression model and a random forest classifier which predict the rating given the embedding of a user and a course.

As mentioned, the app is fully contained in these two files:

- backend.py: model definition, training and prediction functions.

- recommender_app.py: Streamlit UI and usage of backend.py functionalities.

The recommender_app.py file instantiates all UI widgets in sequence and converges on two important functions: train() and predict(). These call their counterparts in backend.py, which branch the execution depending on the selected model (a parameter captured by the UI). All main and auxiliary functions to train the models and produce results are contained in backend.py.

I took this structure definition from the IBM template; however, it has some shortcomings. In summary, the architecture of backend.py is fine for a demo consisting of one or two simple models, but very messy for nine. Some improvements are listed in the section Next Steps, Improvements.
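One way to keep a model-dependent train() readable as the number of models grows is a lookup table that maps the model name selected in the UI to a trainer function. The sketch below only illustrates that alternative; the model names, the helper functions and the returned dictionaries are hypothetical and do not reproduce backend.py.

```python
# Hypothetical trainer functions; the real ones live in backend.py
def _train_course_similarity(params):
    return {"model": "course_similarity", "params": params}


def _train_user_profile(params):
    return {"model": "user_profile", "params": params}


# Maps the model name shown in the UI to its trainer
TRAINERS = {
    "Course Similarity": _train_course_similarity,
    "User Profile": _train_user_profile,
}


def train(model_name, params):
    """Dispatch to the trainer registered under the name selected in the UI."""
    try:
        trainer = TRAINERS[model_name]
    except KeyError:
        raise ValueError(f"Unknown model: {model_name}") from None
    return trainer(params)


result = train("User Profile", {"top_courses": 10})
```

Adding a model then means registering one more entry in `TRAINERS` instead of extending a chain of conditionals; predict() could follow the same pattern.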

Heroku is a cloud service which enables very easy (continuous) deployments. In this case, we need to create the following files in the repository:

- Procfile

- .slugignore

- runtime.txt

- requirements.txt

- setup.sh

Additionally, an app needs to be created in Heroku, via the CLI or the Heroku web interface. In the case of the web interface, the deployment is done by simply linking the GitHub repository to the app. More information on that can be found here.

After the deployment, if anything goes wrong, we can check the logs with the CLI as follows:

# Replace app-name, e.g., with ai-course-recommender-demo

heroku logs --tail --app app-name

Continuous Integration and Continuous Deployment (CI/CD) are realized as follows:

- There is a GitHub action defined in .github/workflows/python-app.yml which runs the tests and is triggered every time we perform a push to the remote repository.

- The option Continuous deployment is active in the Heroku app (web interface), so that the code is deployed every time GitHub runs all tests successfully.

- Content-based systems work efficiently and provide similar results, but they require (manual) genre characterization for users and courses/items.

- Collaborative Filtering systems are based on the assumption that there is a relationship between users and items, so that we can discover the latent features that reveal user preferences.

- The collaborative system which seems to best predict the ratings is the random forest classifier that maps user and course embeddings (from the ANN) to rating classes (2 or 3). However:

  - The training time is the longest.

  - The new users for whom we want to predict (and their example ratings) must be included in the training data so that they have an embedding representation.

As I mention in the introduction, I used the starter code/template to implement the app, but I think it has many architectural/design issues that need to be tackled. Here, I list some solutions I'd implement if I had time:

- Create a library/package and move the code from backend.py there; thus, backend.py would be the interface which calls the machine learning functionalities and recommender_app.py the GUI/app definition.

- Transform code into OOP-style; each model/approach could be a class derived from a base interface and we could pack them in different files which are used in a main library/package file.

- Fix the fact that datasets are being loaded every time we click on Train. This is Ok for a small demo of different machine learning approaches, but not for a final version, closer to a production setting.

- Unify the pipeline for all models: the approaches based on neural networks have a different data flow, because they need the selected courses in the training set in order to create the embeddings correctly. Additionally, the neural network model cannot be hashed into a dictionary. The data flow and the in/out interfaces should be the same for all models.

- The ANN training should consider not only the 2 & 3 ratings.

- Find out a way to deploy the models which use the ANN to Heroku or a similar cloud service. Currently, the ANN model is too large for the Heroku slug memory.

- Implement more tests to cover at least the major functionalities and the train() and predict() functions in backend.py.
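As an illustration of the OOP-style idea from the list above, the following sketch defines a common interface and one toy model that implements it. All class names and the popularity-based model are hypothetical; they only show how a shared train/predict interface could look.

```python
from abc import ABC, abstractmethod


class BaseRecommender(ABC):
    """Hypothetical common interface for all recommender models."""

    @abstractmethod
    def train(self, ratings):
        """Fit the model to a list of (user, item, rating) tuples."""

    @abstractmethod
    def predict(self, user, top_n=10):
        """Return up to top_n recommended item ids for the user."""


class PopularityRecommender(BaseRecommender):
    """Toy model: recommends the most rated courses the user has not taken."""

    def train(self, ratings):
        self.counts = {}
        self.seen = {}
        for user, item, _rating in ratings:
            self.counts[item] = self.counts.get(item, 0) + 1
            self.seen.setdefault(user, set()).add(item)
        return self

    def predict(self, user, top_n=10):
        taken = self.seen.get(user, set())
        ranked = sorted(self.counts, key=self.counts.get, reverse=True)
        return [item for item in ranked if item not in taken][:top_n]


# Made-up ratings for three users and three courses
model = PopularityRecommender().train([
    ("u1", "C1", 3), ("u2", "C1", 2), ("u2", "C2", 3),
    ("u3", "C1", 3), ("u3", "C2", 2), ("u3", "C3", 3),
])
suggestions = model.predict("u1")
```

With such an interface, the app could hold any model behind the same two calls, which would also help unify the data flow mentioned above.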

- My guide on how to use Streamlit: streamlit_guide

- My notes on deployments in general (e.g., to Heroku, AWS, Render): mlops_udacity/deployment

- My notes on the IBM Machine Learning Specialization: machine_learning_ibm

- My notes on the lectures by Andrew Ng about Recommender Systems: machine_learning_coursera

- My blog post on how to reach production-level code: From Jupyter Notebooks to Production-Level Code

Mikel Sagardia, 2022.

No guarantees.

If you find this repository useful, you're free to use it, but please link back to the original source.

This is the final capstone project of the IBM Machine Learning Professional Certificate; check my class notes for more information. I used the starter code from IBM.