💥 Colab Demo | InternGPT Free Online Demo | Local Deployment

An out-of-the-box online demo is integrated into InternGPT, a super cool pointing-language-driven visual interactive system. Enjoy it for free. 🍭

Note for Colab: remember to select a GPU via `Runtime/Change runtime type`.

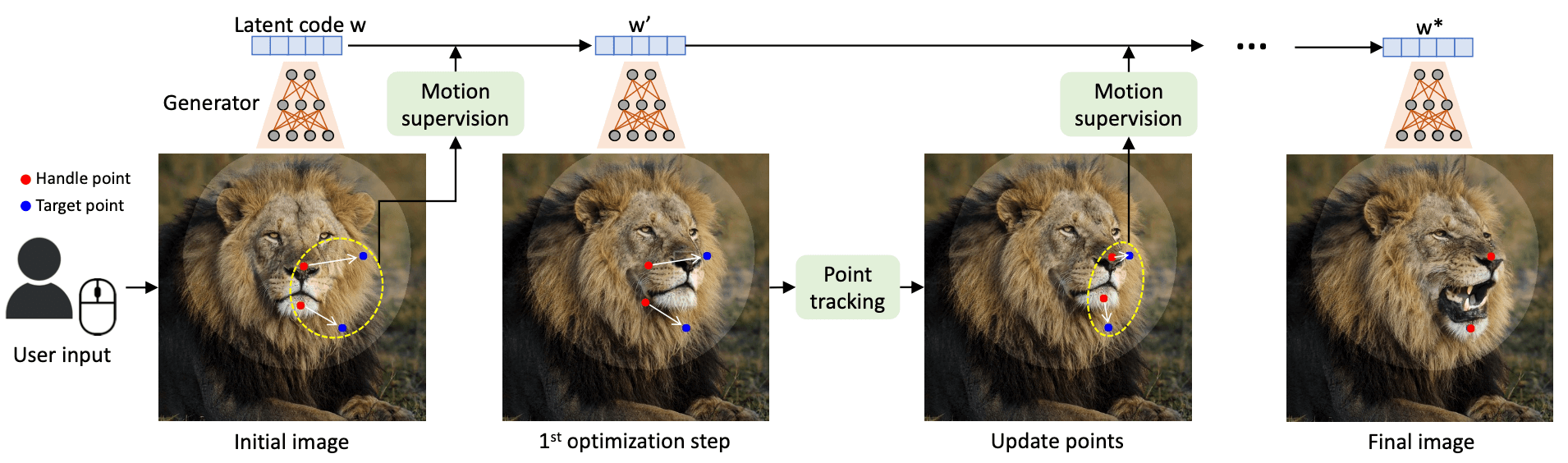
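After switching the runtime, it can help to confirm that a GPU is actually visible. The following is a minimal sketch and not part of DragGAN: the helper name `gpu_visible` is hypothetical, and it assumes that a working `nvidia-smi` executable is a reasonable proxy for an attached NVIDIA GPU.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if `nvidia-smi` exists and exits successfully.

    Assumption: a working `nvidia-smi` is treated as a proxy for an attached
    NVIDIA GPU; this does not check PyTorch or CUDA library versions.
    """
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return False
    try:
        result = subprocess.run(
            [exe],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print("GPU visible:", gpu_visible())
```

If this prints `False` in Colab, the runtime type is most likely still set to CPU.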

Implementation of Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold

Here is a simple tutorial video showing how to use our implementation.

demo.mp4

Check out the original paper for the backend algorithm and math.

🌟 What's New

- [2023/5/25] DragGAN is on PyPI; install it with `pip install draggan`. This release also addresses the common CUDA problems OpenGVLab#38 and OpenGVLab#12.

- [2023/5/25] We now support StyleGAN2-ada, with much higher quality and more types of images. Try it by selecting models whose names start with "ada".

- [2023/5/24] Custom images are now supported through GAN inversion. Your custom images may come out distorted because of the limitations of GAN inversion, and manipulations may also fail because of limitations in our implementation.

🌟 Changelog

- Tweak performance.

- Improving installation experience, DragGAN is now on PyPI.

- Automatically determining the number of iterations.

- Support StyleGAN2-ada.

- Integrate into InternGPT.

- Custom Image with GAN inversion.

- Download generated image and generation trajectory.

- Controlling generation process with GUI.

- Automatically download stylegan2 checkpoint.

- Support movable region, multiple handle points.

- Gradio and Colab Demo.

This project is now a sub-project of InternGPT for interactive image editing. Future updates, including more cool tools beyond DragGAN, will be added to InternGPT.

We recommend using Conda to install the requirements.

conda create -n draggan python=3.7

conda activate draggan

Install PyTorch following the official instructions

conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia

Install DragGAN

pip install draggan

Launch the Gradio demo

python -m draggan.web

# running on cpu

python -m draggan.web --device cpu

Ensure you have a GPU and CUDA installed. We use Python 3.7 for testing; other Python versions (>= 3.7) should work too but are untested. We recommend using Conda to prepare all the requirements.
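The choice between the two launch commands above can be made automatically. This is a minimal sketch under stated assumptions: `cuda_available` and `launch_command` are hypothetical helpers, not part of the `draggan` package. Only the `python -m draggan.web` entry point and the `--device cpu` flag come from this README; the sketch falls back to the CPU command when PyTorch is missing or reports no CUDA device.

```python
import sys


def cuda_available() -> bool:
    """True only if PyTorch is importable and reports a usable CUDA device."""
    try:
        import torch  # imported lazily so the sketch also runs without PyTorch
    except ImportError:
        return False
    return torch.cuda.is_available()


def launch_command(use_gpu: bool) -> list:
    """Build the Gradio demo command shown in this README."""
    cmd = [sys.executable, "-m", "draggan.web"]
    if not use_gpu:
        # Flag taken from the README's CPU example.
        cmd += ["--device", "cpu"]
    return cmd


if __name__ == "__main__":
    if sys.version_info < (3, 7):
        raise SystemExit("Python >= 3.7 is required")
    print(" ".join(launch_command(cuda_available())))
```

The script only prints the command; pass the list to `subprocess.run` if you want it to start the demo directly.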

Windows users might encounter some issues caused by the StyleGAN custom ops; you can find some solutions in the issues panel. We are also working on a friendlier package that requires no setup.

git clone https://github.com/Zeqiang-Lai/DragGAN.git

cd DragGAN

conda create -n draggan python=3.7

conda activate draggan

pip install -r requirements.txt

Launch the Gradio demo

python gradio_app.py

# running on cpu

python gradio_app.py --device cpu

If you have any issues downloading the checkpoint, you can manually download it from here and put it into the `checkpoints` folder.
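If you place the checkpoint manually, a quick check that the file landed where expected can save a failed launch. A minimal sketch: `list_checkpoints` is a hypothetical helper, and it assumes the `checkpoints` folder is resolved relative to the current working directory, which this README does not specify.

```python
from pathlib import Path


def list_checkpoints(folder="checkpoints"):
    """Return the sorted names of regular files found in `folder`.

    Assumption: the folder is relative to the current working directory;
    the expected checkpoint file names are not stated in this README.
    """
    path = Path(folder)
    if not path.is_dir():
        return []
    return sorted(p.name for p in path.iterdir() if p.is_file())


if __name__ == "__main__":
    found = list_checkpoints()
    print(found if found else "no files found in ./checkpoints")
```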

@inproceedings{pan2023draggan,

title={Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold},

author={Pan, Xingang and Tewari, Ayush and Leimk{\"u}hler, Thomas and Liu, Lingjie and Meka, Abhimitra and Theobalt, Christian},

booktitle = {ACM SIGGRAPH 2023 Conference Proceedings},

year={2023}

}

Official DragGAN | StyleGAN2 | StyleGAN2-pytorch | StyleGAN2-Ada

You are welcome to discuss DragGAN with us and help us keep improving its user experience. Reach us through this WeChat QR code.