In recent years, the widespread adoption of NPUs has provided more resources for training and deploying LLMs, especially MLLMs. However, using NPUs still involves various adaptation issues. We therefore provide a framework in which you can flexibly combine different visual encoders, adapters, LLMs, and the corresponding generation components into MLLMs for training, inference, and image generation.

As an example, we provide an implementation of a high-performance MLLM (SEED-X) built with this framework. You can also choose different modules in this framework to build your own MLLM.

- MLLM: standard multimodal large language models for multimodal comprehension.
- SEED-X: a unified and versatile foundation model that responds to a variety of user needs by unifying multi-granularity comprehension and generation.
- modular design: the framework is flexible, and the large language model or vision encoder can be swapped through configs.
- training recipe: this project provides complete code for pre-training and supervised fine-tuning of multimodal large language models on (Ascend) NPUs.
- acceleration: this project provides NPU replacements for existing GPU acceleration components.

- 2024-07-24 🔥 We release 7 Chinese and English text-only and multimodal evaluation benchmarks.
- 2024-07-08 🔥 We release NPU-based multimodal inference and pre-training code, along with various ways to use SEED-X.

This project is under active development; please stay tuned ☕️!

- Model zoo on NPU.

- Multimodal benchmarks.

Dependencies & Environment

- python >= 3.8 (Anaconda is recommended)
- Ascend NPU (910B is recommended) + CANN
  - CANN version:

> cat /usr/local/Ascend/ascend-toolkit/latest/x86_64-linux/ascend_toolkit_install.info
package_name=Ascend-cann-toolkit
version=8.0.T6
innerversion=V100R001C17B214
compatible_version=[V100R001C15,V100R001C18],[V100R001C30],[V100R001C13],[V100R003C11],[V100R001C29],[V100R001C10]
arch=x86_64
os=linux
path=/usr/local/Ascend/ascend-toolkit/8.0.T6/x86_64-linux
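To check the installed toolkit version from a script rather than by eye, a small sketch like the following can parse that file. It assumes the file holds one key=value pair per line (the listing above only shows the keys and values) and uses the x86_64 path from the command above; on other architectures the directory name differs, so adjust INFO_PATH accordingly.

```python
from pathlib import Path

# Path taken from the command above (x86_64 hosts); adjust for your machine.
INFO_PATH = "/usr/local/Ascend/ascend-toolkit/latest/x86_64-linux/ascend_toolkit_install.info"


def parse_install_info(text):
    """Parse key=value lines into a dict, splitting at the first '=' only."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if "=" in line:
            key, value = line.split("=", 1)
            info[key.strip()] = value.strip()
    return info


if Path(INFO_PATH).exists():
    info = parse_install_info(Path(INFO_PATH).read_text())
    print("CANN toolkit version:", info.get("version"), info.get("innerversion"))
else:
    print("No CANN install info found at", INFO_PATH)
```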

Installation

- Clone the repo and install the dependencies

git clone https://github.com/TencentARC/mllm-npu.git
cd mllm-npu
pip install -r requirements.txt

To quickly try out this framework, you can run the following scripts.

# For image comprehension

python ./demo/img2txt_inference.py

# For image generation

python ./demo/txt2img_generation.py

To launch a Gradio demo locally, run the following commands in order. If you plan to launch multiple model workers to compare different checkpoints, you only need to launch the controller and the web server once.

- Launch a controller

python mllm_npu/serve/controller.py --host 0.0.0.0 --port 10000

- Launch a model worker

python mllm_npu/serve/worker.py --host 0.0.0.0 --controller http://localhost:10000 --port 40000 --worker http://localhost:40000

- Launch a Gradio web app

python mllm_npu/serve/gradio_app.py

You can also use this service through its API; see demo for the request format:

{ "input_text": "put your input text here", "image": "put your input image (base64)", "image_gen": False or True }
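A minimal client-side sketch for building that request body with the Python standard library. The endpoint URL is not given here, so post_json takes it as a parameter; omitting "image" for text-to-image requests and returning the raw response bytes are assumptions, so check demo for the exact contract. Note that the JSON encoder writes Python's False/True as false/true.

```python
import base64
import json
import urllib.request


def build_payload(input_text, image_bytes=None, image_gen=False):
    """Build a request body with the fields listed above.

    Assumption: "image" is left out when no input image is supplied.
    """
    payload = {"input_text": input_text, "image_gen": bool(image_gen)}
    if image_bytes is not None:
        # The image is sent as a base64-encoded string.
        payload["image"] = base64.b64encode(image_bytes).decode("ascii")
    return payload


def post_json(url, payload, timeout=60):
    """POST the payload as JSON to a caller-supplied URL and return the raw body."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


print(json.dumps(build_payload("Describe this image.", b"\x89PNG\r\n", image_gen=False)))
```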

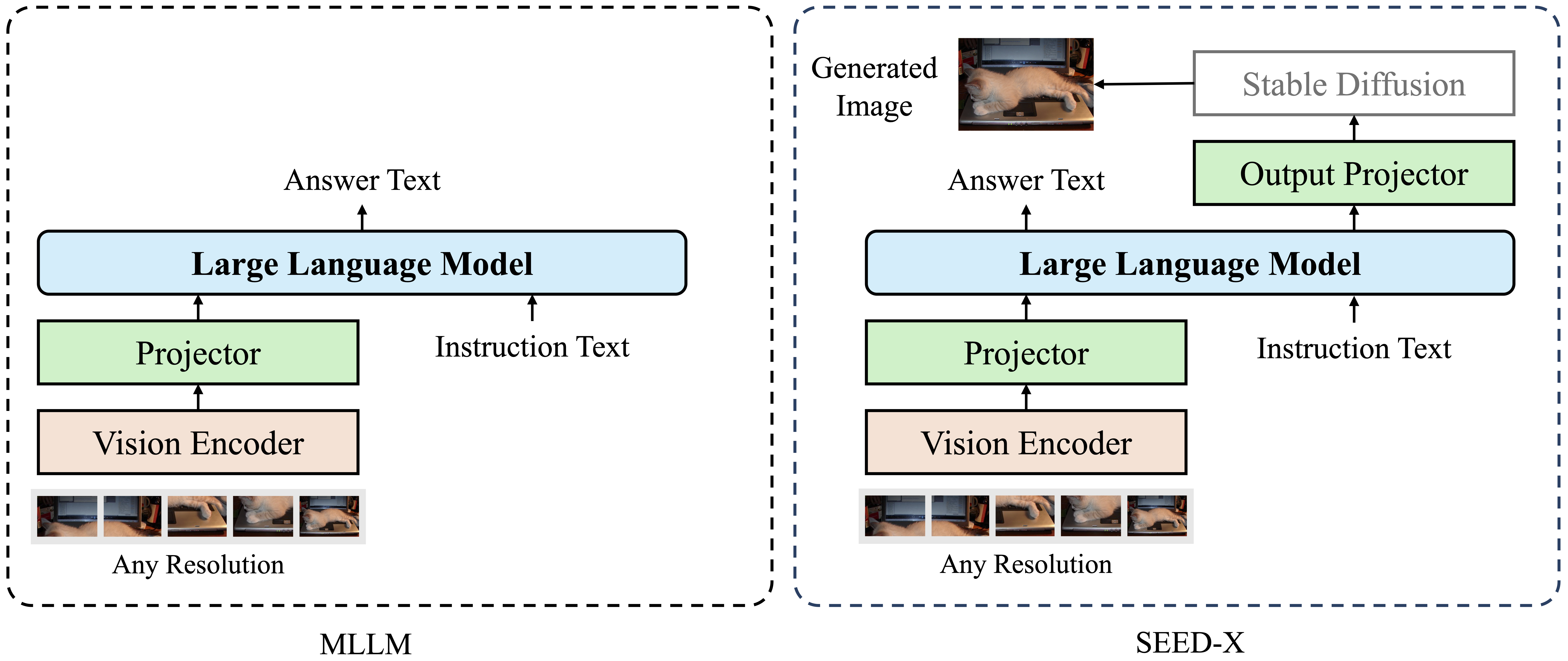

We mainly adopt GeneraliazedMultimodalModels in mllm.py as the general architecture of multimodal large language models. Like LLaVA, it contains three basic modules:

- (1) a language model, e.g., LLaMA-2.

- (2) a projector to project image features into language embeddings.

- (3) a vision encoder, e.g., ViT.
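To make the data flow between these three modules concrete, here is a toy, pure-Python sketch of a LLaVA-style path: patch features from the vision encoder pass through a linear projector into the LLM's embedding width and are spliced into the text embedding sequence that the language model consumes. The numbers, the single linear layer, and splicing at a fixed position are illustrative assumptions; see mllm.py for the actual implementation.

```python
def linear(x, w, b):
    """Apply y = x @ w + b row by row. x: [n][d_in], w: [d_in][d_out], b: [d_out]."""
    return [
        [sum(row[k] * w[k][j] for k in range(len(w))) + b[j] for j in range(len(b))]
        for row in x
    ]


def splice_image_tokens(text_embeds, image_embeds, position):
    """Insert the projected image embeddings into the text sequence at `position`."""
    return text_embeds[:position] + image_embeds + text_embeds[position:]


# (3) vision encoder output: 2 patch features of width d_v = 3 (made-up values)
patch_features = [[1, 0, 0], [0, 1, 0]]

# (2) projector: one linear layer mapping d_v = 3 -> d_llm = 2 (hypothetical sizes)
proj_w = [[1, 0], [0, 1], [1, 1]]
proj_b = [0, 0]
image_embeds = linear(patch_features, proj_w, proj_b)

# (1) the language model would consume this combined sequence;
# here: 3 text-token embeddings of width 2 with the image inserted after the first
text_embeds = [[9, 9], [8, 8], [7, 7]]
sequence = splice_image_tokens(text_embeds, image_embeds, position=1)
print(len(sequence), "embeddings of width", len(sequence[0]))
```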

The MLLM is built from the model config with hydra.utils.instantiate; you can find sample configs in models.
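The idea behind config-driven construction is that every config node with a `_target_` key names a class to call with the node's other keys, and nested nodes are built first. The sketch below is a simplified stand-in for hydra.utils.instantiate written only to show that mechanism; it is not Hydra itself. To stay runnable without the real model classes, every `_target_` points at types.SimpleNamespace, and the module names and sizes in the config are hypothetical.

```python
import importlib
from typing import Any


def locate(path: str) -> Any:
    """Resolve a dotted path such as 'types.SimpleNamespace' to an object."""
    module_name, _, attr = path.rpartition(".")
    return getattr(importlib.import_module(module_name), attr)


def instantiate(node: Any) -> Any:
    """Simplified stand-in for hydra.utils.instantiate (illustration only).

    Dicts with a `_target_` key become objects: nested values are built first,
    then the target is called with the remaining keys as keyword arguments.
    """
    if isinstance(node, dict):
        kwargs = {k: instantiate(v) for k, v in node.items() if k != "_target_"}
        if "_target_" in node:
            return locate(node["_target_"])(**kwargs)
        return kwargs
    if isinstance(node, list):
        return [instantiate(v) for v in node]
    return node


# Hypothetical model config with the three modules of a LLaVA-style MLLM.
model_cfg = {
    "_target_": "types.SimpleNamespace",
    "language_model": {"_target_": "types.SimpleNamespace", "name": "llama-2-7b"},
    "projector": {"_target_": "types.SimpleNamespace", "in_dim": 1024, "out_dim": 4096},
    "vision_encoder": {"_target_": "types.SimpleNamespace", "name": "vit-l-14"},
}

mllm = instantiate(model_cfg)
print(mllm.vision_encoder.name, "->", mllm.projector.out_dim, "->", mllm.language_model.name)
```

With real Hydra, the same structure would live in a YAML file and hydra.utils.instantiate would perform the recursive construction, so swapping the vision encoder or LLM means editing the config rather than the code.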

We currently support two mainstream architectures:

- standard multimodal models (GeneraliazedMultimodalModels): aimed at multimodal comprehension, containing a vision encoder, a vision-language projector, and a large language model.
- SEED-X (SEED): a versatile multimodal model for comprehension and generation that extends the standard multimodal model with an output projector for generating images with Stable Diffusion.

| Architecture | Any Resolution | Comprehension | Generation |
| --- | --- | --- | --- |
| MLLM | ✔️ | ✔️ | ✖️ |
| SEED-X | ✔️ | ✔️ | ✔️ |

You can prepare your own data to pre-train or fine-tune your model. We provide four different tasks and their corresponding formats (please refer to the examples). To use the data efficiently, we organize it with webdataset. The data index is defined in data.yaml, where you can also adjust the data sampling rates and other settings.
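For readers new to webdataset, the sketch below shows the on-disk convention it relies on: a shard is a plain tar file in which the files of one sample share a key and differ only in extension (for example 000000.jpg and 000000.json), and readers regroup members by that key. It uses only the standard library, not the webdataset package. The "caption" JSON field, the dataset names, and the weighted choice standing in for the sampling rates in data.yaml are hypothetical; see the examples for the project's actual formats.

```python
import io
import json
import os
import random
import tarfile
import tempfile
from collections import defaultdict


def write_shard(path, samples):
    """Write (key, {ext: bytes}) samples to a WebDataset-style tar shard.

    Files of one sample share a key, differ only in extension, and are stored
    next to each other.
    """
    with tarfile.open(path, "w") as tar:
        for key, files in samples:
            for ext, payload in files.items():
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))


def read_shard(path):
    """Regroup the tar members of a shard into {key: {ext: bytes}}."""
    grouped = defaultdict(dict)
    with tarfile.open(path, "r") as tar:
        for member in tar.getmembers():
            key, _, ext = member.name.partition(".")
            grouped[key][ext] = tar.extractfile(member).read()
    return dict(grouped)


def sample_sources(rates, n, seed=0):
    """Pick the source dataset of each of n samples in proportion to `rates`
    (hypothetical stand-in for the sampling rates configured in data.yaml)."""
    rng = random.Random(seed)
    names = list(rates)
    return rng.choices(names, weights=[rates[name] for name in names], k=n)


def caption_json(text):
    """Encode a hypothetical caption annotation as JSON bytes."""
    return json.dumps({"caption": text}).encode("utf-8")


with tempfile.TemporaryDirectory() as tmp:
    shard_path = os.path.join(tmp, "shard-000000.tar")
    write_shard(shard_path, [
        ("000000", {"jpg": b"<jpeg bytes>", "json": caption_json("a cat on a sofa")}),
        ("000001", {"jpg": b"<jpeg bytes>", "json": caption_json("two dogs in a park")}),
    ])
    restored = read_shard(shard_path)

print(sorted(restored))
print(sample_sources({"caption_pairs": 0.7, "sft_conversations": 0.3}, n=10))
```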

Please refer to dataset for more data information.

For multimodal comprehension, special tokens such as <img> or <patch> need to be added to the tokenizer. Specify the tokenizer path in scripts/tools/add_special_tokens_to_tokenizer.py, then run the script to obtain the updated tokenizer.
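Why this step matters can be shown with a toy, pure-Python tokenizer: each new special token gets a fresh id appended after the existing vocabulary, and the tokenizer must match special tokens before ordinary splitting so that a string like <img> is never broken into pieces. The vocabulary and the exact token list below are hypothetical; this is not the project's script.

```python
import re


def add_special_tokens(vocab, tokens):
    """Append unseen special tokens to `vocab` with fresh ids; return the count added."""
    added = 0
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
            added += 1
    return added


def tokenize(text, vocab, special_tokens):
    """Toy tokenizer: match special tokens first so they are never split,
    then split the remaining text on whitespace."""
    if not special_tokens:
        return [vocab.get(w, vocab["<unk>"]) for w in text.split()]
    pattern = "(" + "|".join(re.escape(t) for t in special_tokens) + ")"
    ids = []
    for piece in re.split(pattern, text):
        if piece in special_tokens:
            ids.append(vocab[piece])
        else:
            ids.extend(vocab.get(w, vocab["<unk>"]) for w in piece.split())
    return ids


vocab = {"<unk>": 0, "a": 1, "photo": 2, "of": 3, "cat": 4}
special = ["<img>", "</img>", "<patch>", "</patch>"]  # hypothetical token list
added = add_special_tokens(vocab, special)
ids = tokenize("a photo of <img><patch></patch></img> cat", vocab, special)
# The LLM's input embedding table must grow to len(vocab) rows to cover the new ids.
print(added, ids)
```

With Hugging Face transformers, the corresponding calls are tokenizer.add_special_tokens({"additional_special_tokens": [...]}) followed by model.resize_token_embeddings(len(tokenizer)); whether the project's script does exactly this is an assumption.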

You need to specify the model config and data config in the training scripts, such as scripts/mllm_llama3_8b_siglip_vit_pretrain.sh.

bash scripts/mllm_llama3_8b_siglip_vit_pretrain.sh

For supervised fine-tuning (SFT), you can keep most settings unchanged and:

- specify the initial SFT weights through "pretrained_model_name_path" in the model configuration file.

- adjust the SFT data and its instruction format.

- follow the pre-training script for the rest.
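When adjusting the instruction format, a common SFT practice is to compute the loss only on the response: prompt positions get a label that the loss ignores. The sketch below shows that masking with a byte-level toy encoder; the LLaMA-2-style "[INST] ... [/INST]" template and the helper names are hypothetical, and the project's own instruction formats may differ.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_sft_example(instruction, response, encode, template="[INST] {} [/INST]"):
    """Return (input_ids, labels) with the prompt positions masked out.

    Only the response tokens contribute to the loss; every prompt position
    gets IGNORE_INDEX in `labels`.
    """
    prompt_ids = encode(template.format(instruction))
    response_ids = encode(" " + response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


def byte_encode(text):
    """Toy byte-level encoder standing in for a real tokenizer."""
    return list(text.encode("utf-8"))


input_ids, labels = build_sft_example("Describe the image.", "A cat on a sofa.", byte_encode)
print(len(input_ids), sum(label != IGNORE_INDEX for label in labels))
```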

We collected several popular English and Chinese text-only and multimodal benchmarks (e.g., MMLU and CMMLU); see here for details.

On GPUs, common acceleration components such as flash-attn and xformers can significantly speed up model computation. Since they currently have no direct NPU implementation, we provide some optional NPU acceleration implementations; see acceleration for details.
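A replacement scheme of this kind usually follows one pattern: detect which accelerated backend is installed, and otherwise fall back to a plain implementation of the same math, which also serves as a correctness reference. The sketch below shows that pattern with a pure-Python softmax(QKᵀ/√d)·V reference; the backend order and function names are hypothetical, and it does not call into flash-attn, xformers, or torch_npu.

```python
import importlib.util
import math


def available_backend():
    """Return the first attention backend whose package is importable (hypothetical order)."""
    for name in ("flash_attn", "xformers", "torch_npu"):
        if importlib.util.find_spec(name) is not None:
            return name
    return "reference"


def reference_attention(q, k, v):
    """Plain scaled dot-product attention, softmax(q k^T / sqrt(d)) v.

    q: [n_q][d], k: [n_k][d], v: [n_k][d_v]; uses a max-shifted softmax for stability.
    """
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) / total for c in range(len(v[0]))])
    return out


print("attention backend:", available_backend())
print(reference_attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]))
```

Keeping the reference path lets an accelerated kernel be checked numerically against the same inputs before it is switched on.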

If you find the work helpful, please consider citing:

- mllm-npu

@misc{mllm_npu,
  title={mllm-npu},
  author={Li, Chen and Cheng, Tianheng and Ge, Yuying and Wang, Teng and Ge, Yixiao},
  howpublished={\url{https://github.com/TencentARC/mllm-npu}},
  year={2024},
}

- SEED-X

@article{ge2024seed,
  title={SEED-X: Multimodal Models with Unified Multi-granularity Comprehension and Generation},
  author={Ge, Yuying and Zhao, Sijie and Zhu, Jinguo and Ge, Yixiao and Yi, Kun and Song, Lin and Li, Chen and Ding, Xiaohan and Shan, Ying},
  journal={arXiv preprint arXiv:2404.14396},
  year={2024}
}

This project is under the Apache-2.0 License. For models built with LLaMA or Qwen, please also comply with their licenses!

This project is developed based on the source code of SEED-X.