Seshat is an LLMOps (Large Language Model Operations) system designed to streamline the workflow of LLM developers and prompt engineers. It provides a modular interface for managing batch processing, embeddings, meta-prompts, FAQ summaries, and system configuration. Seshat aims to offer a comprehensive, user-friendly environment for working with prompts and processing data with LLMs.

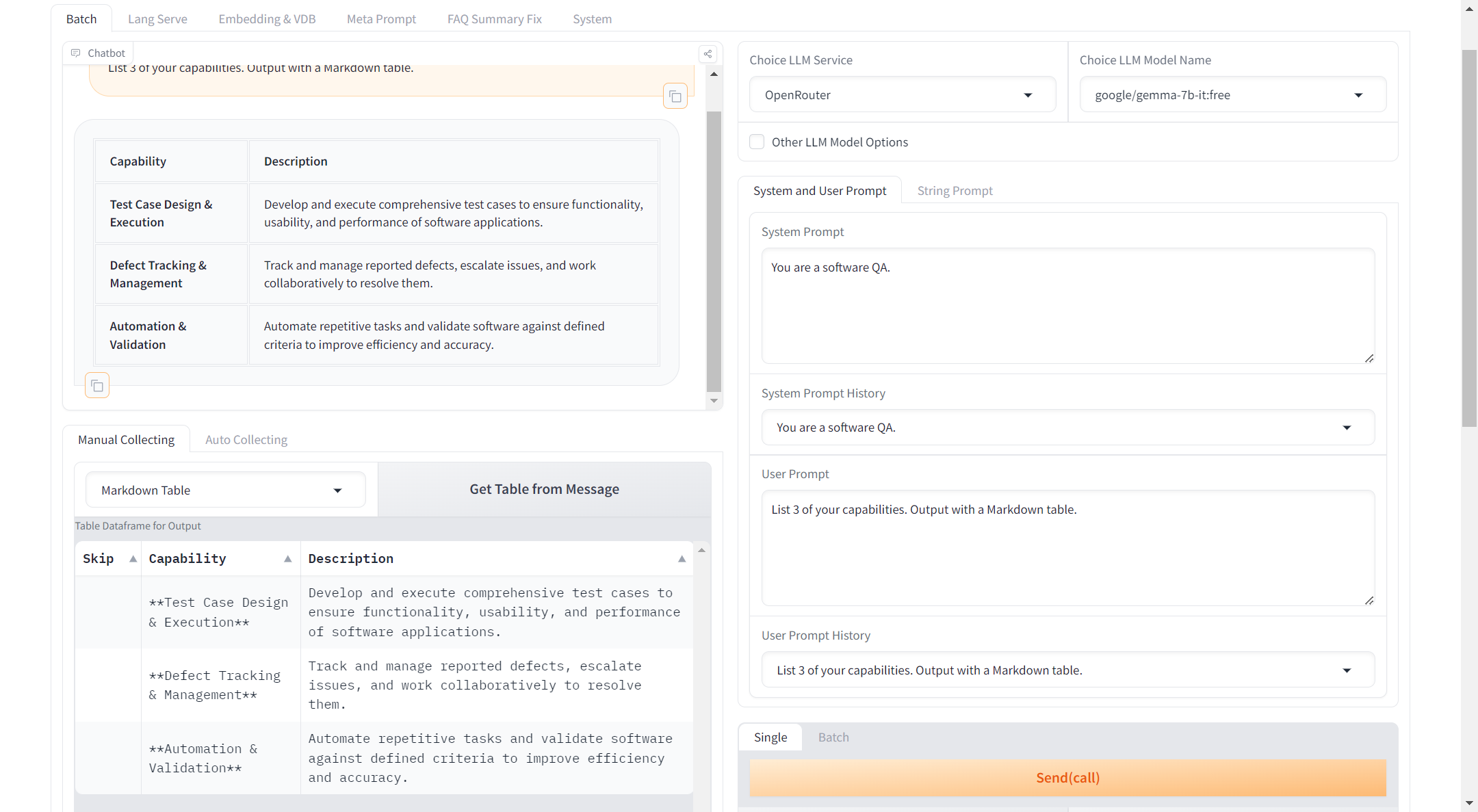

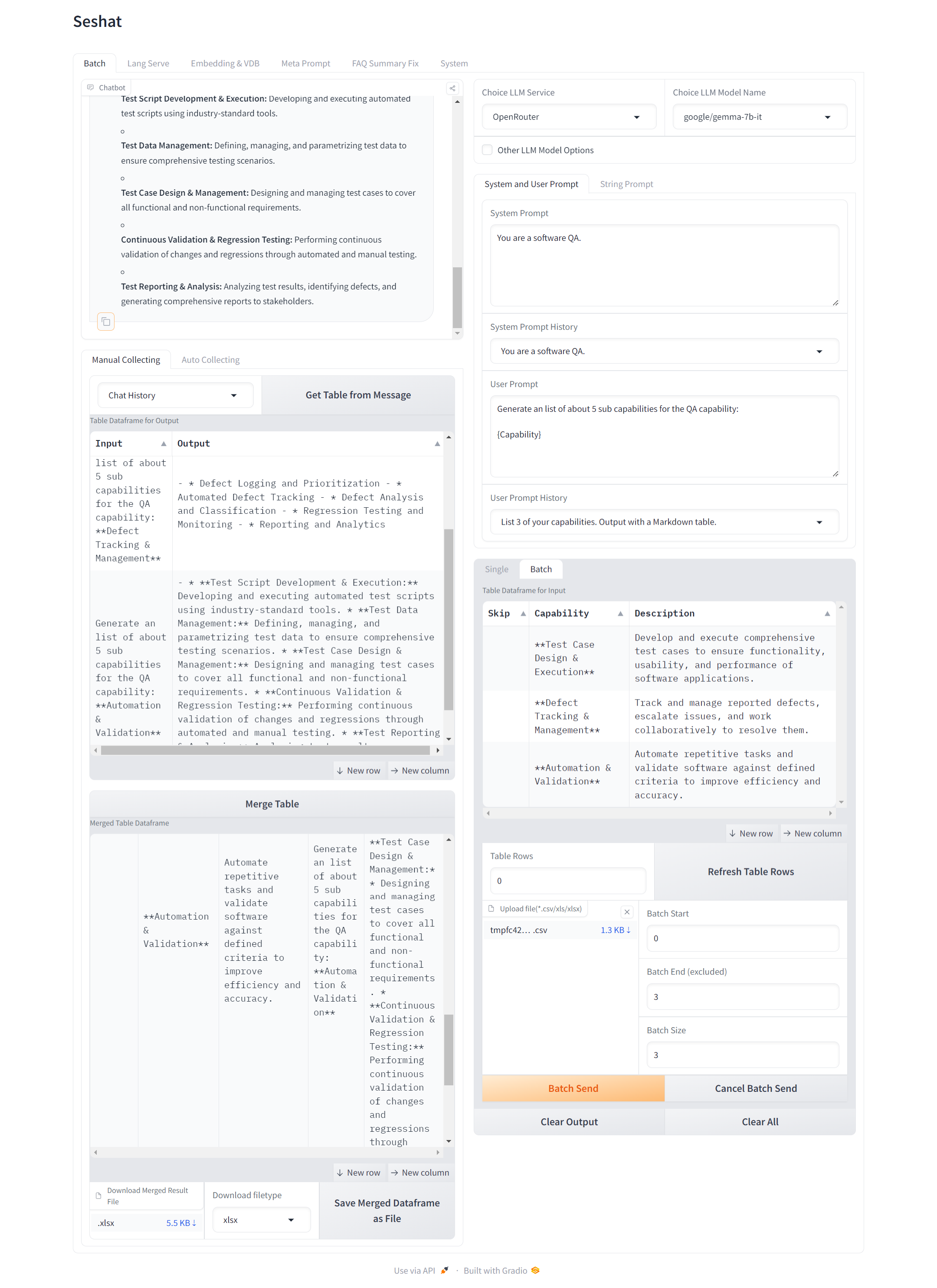

- Batch Processing: Efficiently handle large batches of data with an LLM.

- Lang Serve Client: Interface for interacting with LangServe applications.

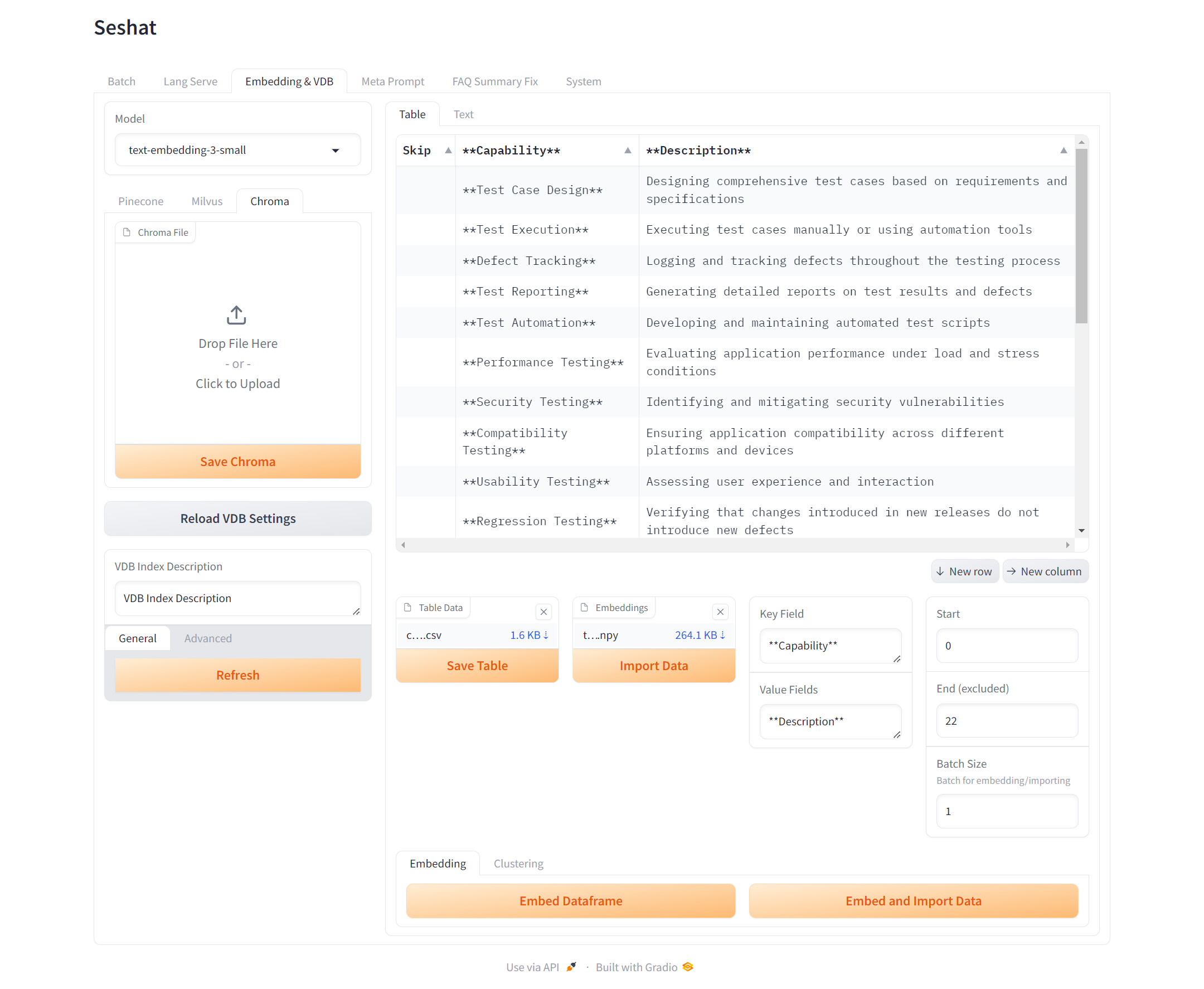

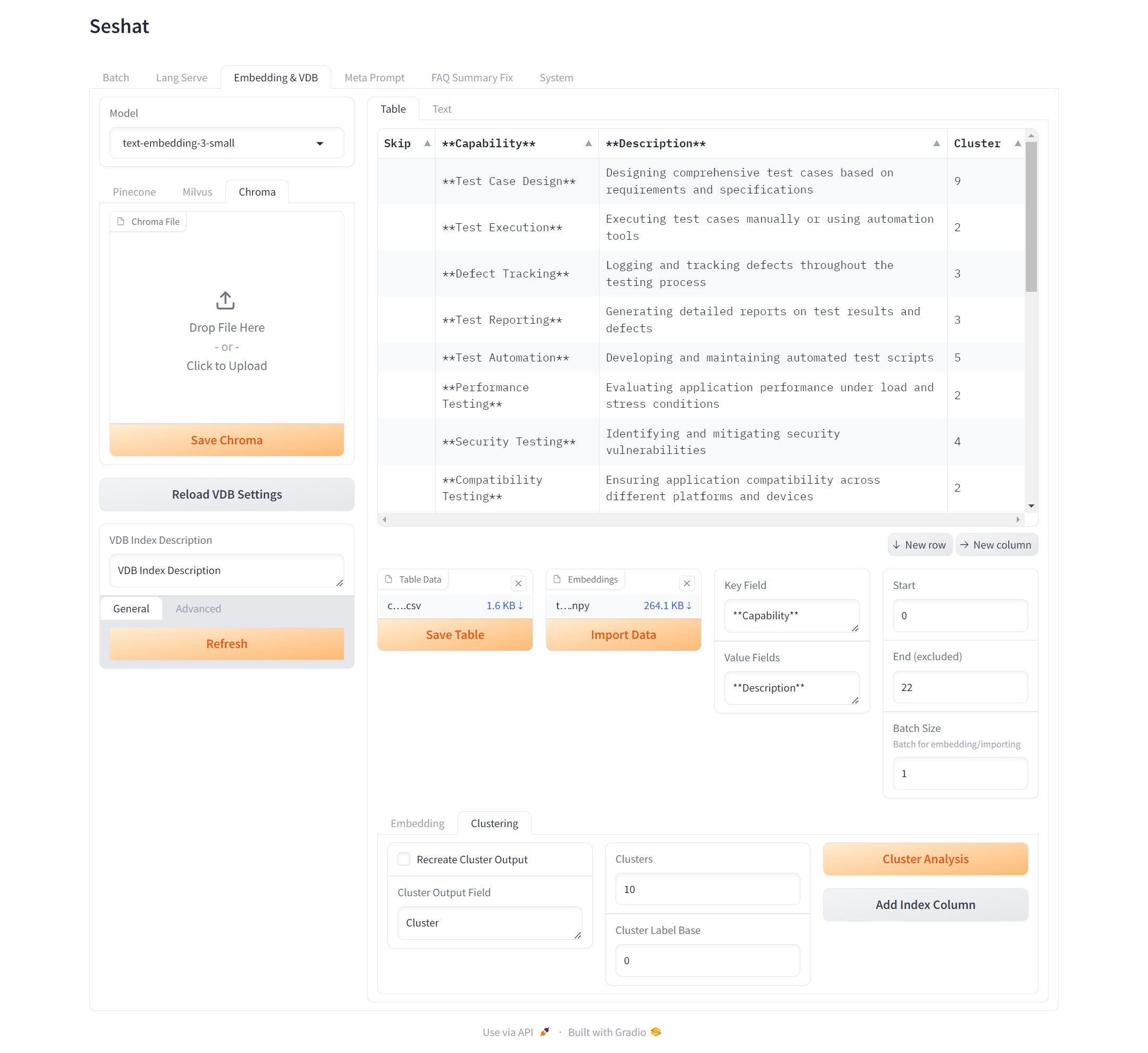

- Embedding & VDB: Embed strings/sheets, import embeddings to vector databases, and cluster embeddings.

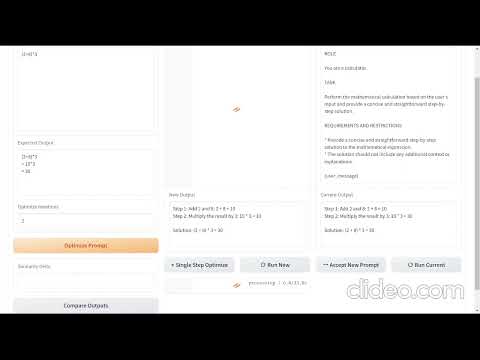

- Meta Prompt Management: Create prompts with an LLM, based on a meta-prompt.

- FAQ Summary Fix: Preprocess FAQ data, convert Q&A sheets into `faq_text` and `rdb_text`. `faq_text` is used by VDB and LLM for inference, while `rdb_text` is used for output.

- System Configuration: Comprehensive system management and configuration, including updating models of OpenRouter.

To install Seshat, clone the repository and install the necessary dependencies:

git clone https://github.com/yourusername/seshat.git

cd seshat

poetry install

Seshat uses a configuration file (config.yaml) for setting up various parameters. You can specify the path to your configuration file using the --config_file argument:

python main.py --config_file path/to/your/config.yaml

Some API keys are required to use certain features of Seshat. You can set these keys in the configuration file:

- embedding.text-embedding-3-small.openai_api_key

- embedding.azure-text-embedding-3-small.openai_api_key

- llm.llm_services.OpenAI.args.openai_api_key

- llm.llm_services.OpenRouter.args.openai_api_key

- llm.llm_services.Cloudflare.args.openai_api_key

- llm.llm_services.Azure_OpenAI.args.azure_openai_api_key

- llm.llm_services.Replicate.args.replicate_api_key

- llm.llm_services.HuggingFace.args.huggingface_api_key
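The key names above read as dotted paths into the configuration file. As a minimal sketch, assuming each dot-separated segment is a nested mapping key (the README does not spell out the file layout), the following shows how you might check which keys are still unset once the YAML has been loaded into a dictionary. The helpers `lookup` and `missing_api_keys` and the sample values are hypothetical and not part of Seshat.

```python
from collections.abc import Mapping
from typing import Any, List

# Dotted paths copied from the list above. Treating each segment as a
# nested mapping key is an assumption about the config.yaml layout.
API_KEY_PATHS = [
    "embedding.text-embedding-3-small.openai_api_key",
    "embedding.azure-text-embedding-3-small.openai_api_key",
    "llm.llm_services.OpenAI.args.openai_api_key",
    "llm.llm_services.OpenRouter.args.openai_api_key",
    "llm.llm_services.Cloudflare.args.openai_api_key",
    "llm.llm_services.Azure_OpenAI.args.azure_openai_api_key",
    "llm.llm_services.Replicate.args.replicate_api_key",
    "llm.llm_services.HuggingFace.args.huggingface_api_key",
]


def lookup(config: Mapping, dotted_path: str) -> Any:
    """Walk a nested mapping along a dotted path; return None if a segment is missing."""
    node: Any = config
    for segment in dotted_path.split("."):
        if not isinstance(node, Mapping) or segment not in node:
            return None
        node = node[segment]
    return node


def missing_api_keys(config: Mapping, paths: List[str] = API_KEY_PATHS) -> List[str]:
    """Return the dotted paths whose value is absent or empty."""
    return [path for path in paths if not lookup(config, path)]


# Hypothetical, already-parsed configuration with one key set and one left empty.
example_config = {
    "embedding": {"text-embedding-3-small": {"openai_api_key": "sk-example"}},
    "llm": {"llm_services": {"OpenAI": {"args": {"openai_api_key": ""}}}},
}

for path in missing_api_keys(example_config):
    print("not set:", path)
```

Only the keys for the services you actually use need to be filled in, so a report like this is a reminder rather than a validation failure.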

To launch the Seshat application, run the following command:

python main.py

By default, the application will look for a config.yaml file in the current directory. You can customize the configuration by modifying this file or by providing a different configuration file.
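The default-and-override behavior described above can be illustrated with a short sketch. This is not Seshat's actual `main.py`; it only shows, under that assumption, how a `--config_file` option that falls back to `config.yaml` in the current directory is commonly declared with Python's standard `argparse` module. The function name `parse_args` is hypothetical.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse launcher arguments; --config_file falls back to ./config.yaml."""
    parser = argparse.ArgumentParser(description="Hypothetical launcher sketch")
    parser.add_argument(
        "--config_file",
        default="config.yaml",  # resolved relative to the current directory
        help="Path to the YAML configuration file",
    )
    return parser.parse_args(argv)


# Without the flag, the default path is used.
print(parse_args([]).config_file)
# With the flag, the supplied path wins.
print(parse_args(["--config_file", "path/to/your/config.yaml"]).config_file)
```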

A Docker image is also available for Seshat. To run Seshat in a Docker container, use the following command:

docker run -p 7860:7860 -v /path/to/your/config.yaml:/app/config.yaml ghcr.io/yaleh/seshat:main

Seshat provides a tabbed interface with the following sections:

- Batch: Batch processing management.

- Lang Serve: Interaction with LangServe applications.

- Embedding & VDB: Embeddings and vector database management.

- Meta Prompt: Meta-prompt creation and management.

- FAQ Summary Fix: FAQ summarization and fixing tools.

- System: System configuration and management.

To contribute to Seshat, follow these steps:

- Fork the repository.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them with a descriptive message.

- Push your changes to your forked repository.

- Create a pull request to the main repository.

Poetry is used for managing dependencies in this project. To install the dependencies, run the following command:

poetry install

Alternatively, you can create a new virtual environment, install Poetry into it, and then install the dependencies by running:

python -m venv venv

source venv/bin/activate

pip install -U poetry

poetry install

- Gradio is known to freeze with multiple concurrent requests. If this happens, try restarting the service.

- If you are using a Docker instance with the `restart` policy set to `always`, you can click the `Exit` button on the `System` tab to restart the service.

Seshat is licensed under the MIT License. See the LICENSE file for more details.

We would like to thank the contributors and the open-source community for their invaluable support and contributions to this project.

For any questions or inquiries, please contact the project maintainer at [calvino.huang@gmail.com].