This repository aims to create a YoloV3 detector in Pytorch and Jupyter Notebook. I'm trying to take a more object-oriented ("oop") approach than other existing implementations, which construct the architecture iteratively by reading the config file from Pjreddie's repo. The notebook is intended for study and practice, and many ideas and code snippets are taken from various papers and blogs. I will try to comment as much as possible. You should be able to just Shift-Enter to the end of the notebook and see the results.

- Python 3.6.4

- Pytorch 0.4.1

- Jupyter Notebook 5.4.0

- OpenCV 3.4.0

- imgaug 0.2.6

- Pycocotools

- Cuda Support

$ git clone https://github.com/ydixon/yolo_v3

$ cd yolo_v3

$ wget https://pjreddie.com/media/files/yolov3.weights

$ wget https://pjreddie.com/media/files/darknet53.conv.74

$ cd data/

$ bash get_coco_dataset.sh

These notebooks are intended to be as self-contained as possible, so you can just step through each cell and see the results. However, the later notebooks import classes that were built in earlier ones, so it's recommended to go through the notebooks in order.

yolo_detect.ipynb

This notebook takes you through building the darknet53 backbone network and the yolo detection layer from scratch, all the way up to object detection.

• Conv-bn-Relu Blocks • Residual Blocks • Darknet53 • Upsample Blocks • Yolo Detection Layer • Letterbox Transforms • Weight Loading • Bounding Box Drawing • IOU - Jaccard Overlap • Non-max suppression (NMS)
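The last two items in the list above (IOU and NMS) can be sketched in a few lines. This is a minimal pure-Python illustration, not the notebook's own code: the function names `iou` and `nms`, the corner box format `(x1, y1, x2, y2)` and the default threshold of 0.5 are all assumptions made for the example.

```python
def iou(box_a, box_b):
    """Jaccard overlap of two boxes given as (x1, y1, x2, y2) corners."""
    # Corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp to zero when the boxes do not overlap
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-max suppression; returns indices of the boxes to keep."""
    # Visit boxes from the highest score to the lowest
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)  # the second box overlaps the first and is dropped
```

In a detector this would typically be run once per class, and a tensor-based version would replace the Python loops for speed.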

Data_Augmentation.ipynb

Showcases the augmentations used by the darknet cfg file, including the hue, saturation, exposure and jitter parameters. It also demos additional augmentations that could be used for different kinds of datasets, such as rotation, shear, zoom, Gaussian noise, blurring and sharpening effects. Most of the augmentations are powered by the imgaug library. This notebook also shows how to integrate these augmentations into Pytorch datasets.

| Augmentation | Description | Parameter |
|---|---|---|
| Random Crop | +/- 30% (top, right, bottom, left) | jitter |
| Letterbox | Keep aspect ratio resize, pad with gray color | N/A |
| Horizontal Flip | 50% chance | N/A |
| HSV Hue | Add +/- 179 * hue | hue |
| HSV Saturation | Multiply 1/sat ~ sat | saturation |
| HSV Exposure | Multiply 1/exposure ~ exposure | exposure |
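As a rough illustration of how the HSV rows in the table translate into random parameters, here is a small sketch using only the standard library. The helper names `rand_scale` and `sample_hsv_params` are hypothetical, and the sampling scheme (draw a factor in [1, s], then invert it half of the time) is an assumption modelled on how darknet appears to do it; the notebook itself relies on imgaug and may sample differently. The example values `hue=0.1`, `saturation=1.5`, `exposure=1.5` are illustrative.

```python
import random


def rand_scale(s, rng):
    """Return a factor in [1/s, s]: draw from [1, s], invert half of the time."""
    scale = rng.uniform(1.0, s)
    return scale if rng.random() < 0.5 else 1.0 / scale


def sample_hsv_params(hue, saturation, exposure, rng=None):
    """Sample one set of augmentation parameters following the table above."""
    rng = rng or random.Random()
    return {
        # Added to the H channel (OpenCV-style hue range of 0..179 assumed)
        "hue_shift": rng.uniform(-hue, hue) * 179,
        # Multiplied into the S channel
        "sat_scale": rand_scale(saturation, rng),
        # Multiplied into the V channel
        "val_scale": rand_scale(exposure, rng),
        # Horizontal flip with a 50% chance
        "flip": rng.random() < 0.5,
    }


rng = random.Random(0)  # fixed seed so the draws are repeatable
params = sample_hsv_params(hue=0.1, saturation=1.5, exposure=1.5, rng=rng)
```

The sampled values would then be applied to an image converted to HSV, with the results clipped back into the valid range of each channel.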

Deterministic_data_loading.ipynb

Pytorch's Dataset and DataLoader classes are easy and convenient to use. They do a really good job of abstracting away the multiprocessing behind the scenes. However, the design also poses certain limitations when users try to add more functionality. This notebook aims to address some of these concerns:

- Resumable between batches

- Deterministic - results are reproducible whether training was paused and resumed or run in one go.

- Reduced time for first batch - by default the DataLoader would need to iterate through all the batches that came before the 'to-be-resumed batch', and that could take hours for long datasets.

- Cyclic - pick up leftover samples that were not enough to form a batch and combine them with samples from the next epoch.

The goals are achieved by creating a controller/wrapper class around Dataset and DataLoader. This wrapper class is named DataHelper. It acts as a batch iterator and also stores information about the running iteration.
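The four goals can be illustrated without Pytorch at all. The sketch below is not the repository's DataHelper; `BatchSchedule` and its methods are hypothetical names, and it only schedules sample indices. The idea it assumes is that every epoch's shuffle is derived from `seed` and the epoch number, so any batch can be computed directly from its iteration number without replaying the earlier ones.

```python
import random


class BatchSchedule:
    """Deterministic, resumable, cyclic schedule of sample indices."""

    def __init__(self, num_samples, batch_size, seed=0):
        self.num_samples = num_samples
        self.batch_size = batch_size
        self.seed = seed
        self.iteration = 0  # index of the next batch to hand out

    def _epoch_order(self, epoch):
        # The shuffle depends only on (seed, epoch), never on past calls
        order = list(range(self.num_samples))
        random.Random(self.seed * 1_000_003 + epoch).shuffle(order)
        return order

    def batch_at(self, iteration):
        # Treat all epochs as one long stream and slice the batch out of it,
        # so a batch may span the end of one epoch and the start of the next
        start = iteration * self.batch_size
        orders, batch = {}, []
        for position in range(start, start + self.batch_size):
            epoch, offset = divmod(position, self.num_samples)
            if epoch not in orders:
                orders[epoch] = self._epoch_order(epoch)
            batch.append(orders[epoch][offset])
        return batch

    def __iter__(self):
        return self

    def __next__(self):
        batch = self.batch_at(self.iteration)
        self.iteration += 1
        return batch

    def state_dict(self):
        return {"iteration": self.iteration}

    def load_state_dict(self, state):
        self.iteration = state["iteration"]


schedule = BatchSchedule(num_samples=10, batch_size=4, seed=7)
first_two = [next(schedule), next(schedule)]
checkpoint = schedule.state_dict()  # enough to resume at the third batch
```

Saving `state_dict()` alongside the model checkpoint and restoring it later continues with exactly the batch that would have come next. Per-sample augmentation randomness would need the same treatment (for example, seeding from the iteration number) to be fully reproducible.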

COCODataset.ipynb

Shows how to parse the COCO dataset, following the format that was used in the original darknet implementation.

• Generate labels • Image loading • Convert labels and image to Tensors • Box coordinates transforms • Build Pytorch dataset • Draw

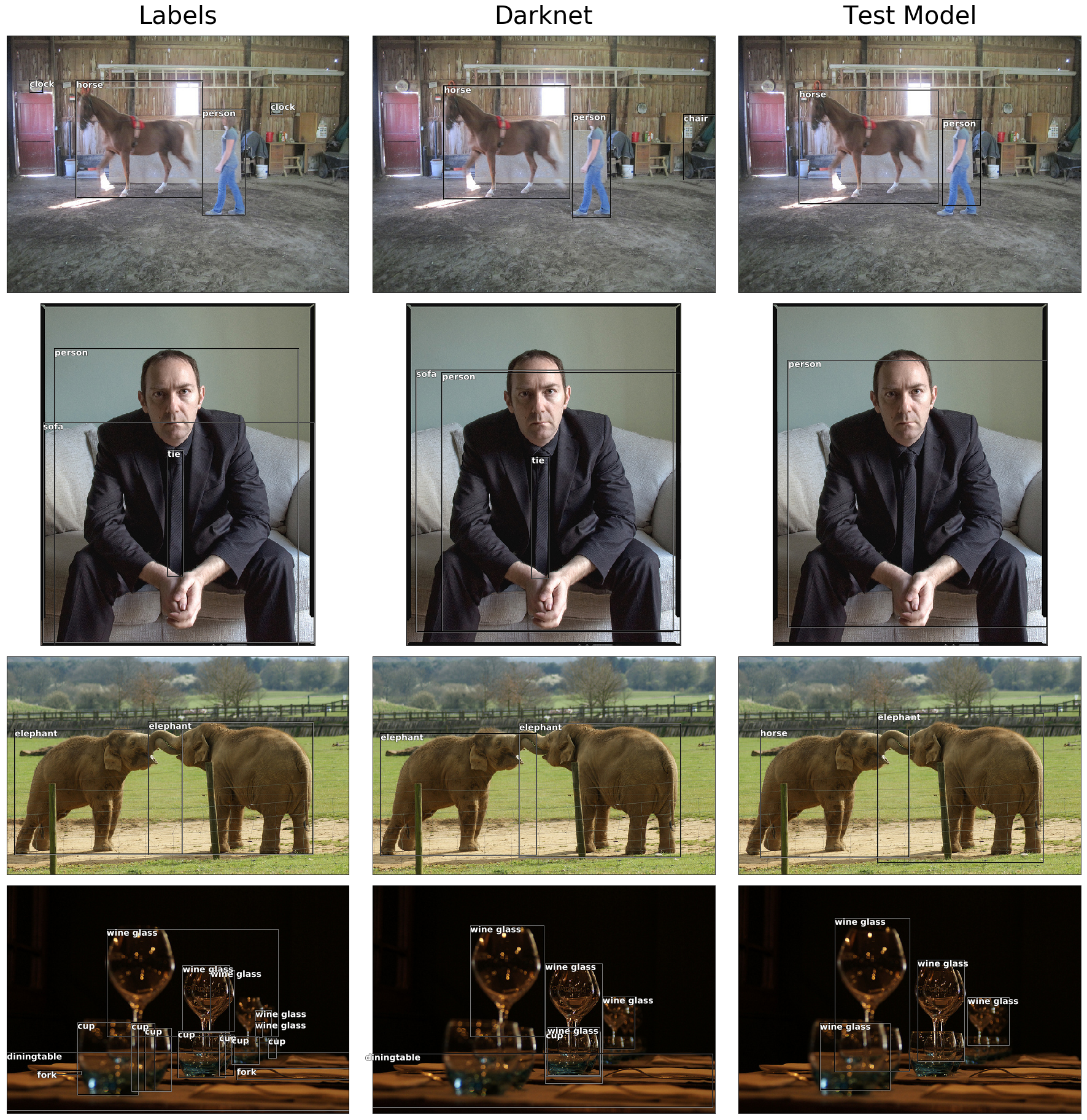
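The box coordinate transforms mentioned in the list above mostly come down to converting between two conventions: COCO annotations store a box as `[x_min, y_min, width, height]` in pixels, while darknet-style labels store the box center and size normalized by the image dimensions. A minimal sketch, with hypothetical helper names `coco_to_darknet` and `darknet_to_corners`:

```python
def coco_to_darknet(bbox, img_w, img_h):
    """[x_min, y_min, w, h] in pixels -> (cx, cy, w, h) normalized to 0..1."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)


def darknet_to_corners(box, img_w, img_h):
    """(cx, cy, w, h) normalized -> (x1, y1, x2, y2) in pixels, e.g. for drawing."""
    cx, cy, w, h = box
    return (
        (cx - w / 2) * img_w,
        (cy - h / 2) * img_h,
        (cx + w / 2) * img_w,
        (cy + h / 2) * img_h,
    )


label = coco_to_darknet([100, 50, 200, 100], img_w=400, img_h=200)
corners = darknet_to_corners(label, img_w=400, img_h=200)
```

When the image is letterboxed before being fed to the network, the same boxes additionally need to be scaled and offset by the padding; that step is left out here.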

yolo_train.ipynb

Building on the previous notebooks, this notebook implements the back-propagation and training process of the network. The most important part is figuring out how to convert labels into target masks and tensors that can be trained against. This notebook also modifies YoloNet a little bit to accommodate the changes.

• Multi-box IOU • YoloLoss • Build truth tensor • Generate masks • Loss function • Differential learning rates • Intermediate checkpoints • Train-resuming

Updated to use mseloss for tx, ty. This should improve training performance.
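To make the "build truth tensor" step more concrete, here is a sketch of how a single ground-truth box could be turned into regression targets, following the general YOLOv3 formulation rather than this notebook's exact code. The names `shape_iou` and `encode_target` are hypothetical, and matching against the anchors of one scale only is a simplification made for the example.

```python
import math


def shape_iou(wh_a, wh_b):
    """IOU of two boxes that share the same center, so only sizes matter."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union


def encode_target(box, anchors, grid_size, img_size):
    """box: (cx, cy, w, h) normalized to 0..1; anchors: list of (w, h) in pixels."""
    cx, cy, w, h = box
    # Position of the box center measured in grid cells
    gx, gy = cx * grid_size, cy * grid_size
    # The cell containing the center is responsible for the box
    col = min(int(gx), grid_size - 1)
    row = min(int(gy), grid_size - 1)
    box_wh = (w * img_size, h * img_size)
    # Pick the anchor whose shape matches the box best
    best = max(range(len(anchors)), key=lambda a: shape_iou(box_wh, anchors[a]))
    anchor_w, anchor_h = anchors[best]
    return {
        "anchor": best,
        "row": row,
        "col": col,
        # Offsets of the center inside its cell (targets for the sigmoid outputs)
        "tx": gx - col,
        "ty": gy - row,
        # Log-scale size relative to the chosen anchor
        "tw": math.log(box_wh[0] / anchor_w),
        "th": math.log(box_wh[1] / anchor_h),
    }


anchors = [(116, 90), (156, 198), (373, 326)]  # example anchors for a 13x13 grid
target = encode_target((0.5, 0.5, 156 / 416, 198 / 416), anchors, 13, 416)
```

The object mask would then be set at `(anchor, row, col)` in the truth tensor, and the loss for the coordinates is computed only at the masked positions.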

yolo_train_short.ipynb

Minimal version of yolo_train.ipynb. You can use this notebook if you are only interested in testing with different datasets/augmentations/loss functions.

CVATDataset.ipynb

After using CVAT to create labels, this notebook parses the CVAT label format (xml) and converts it to a format readable by the network. We will also start using OpenCV to draw and save images, because OpenCV works in pixels rather than DPI as the PLT library does, which is more convenient.

cvat_data_train.ipynb

Data is obtained by extracting images from a clip of Star Wars: Rogue One with ffmpeg. There are around 300 images, annotated using CVAT. The notebook simply overfits the model on the custom data while using darknet53 as the feature extractor.

P.S. I used this notebook as a sanity test for yolo_train.ipynb while I was experimenting with the loss function.

evaluate.ipynb

mAP is an important metric for evaluating the performance of object detection tasks. It's difficult to tell whether the model is doing well by looking at only the precision or only the recall value. This notebook shows you the steps for creating the ground truth/detection annotations for your own dataset and obtaining mAP with the official COCO API.
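To show why a single precision or recall value is not enough, here is a simplified sketch of average precision for one class at one IOU threshold. It is not the COCO API: the function name `average_precision` is hypothetical, the detections are assumed to be already matched against ground truth and sorted by descending score, and the 101 recall sample points are an assumption modelled on COCO-style interpolation. The official evaluation additionally averages over classes and over several IOU thresholds.

```python
def average_precision(is_true_positive, num_ground_truth, num_points=101):
    """AP for one class; flags are ordered by descending detection score."""
    if num_ground_truth == 0:
        return 0.0  # assumption: treat "no ground truth" as zero AP
    true_positives = 0
    precisions, recalls = [], []
    for rank, hit in enumerate(is_true_positive, start=1):
        true_positives += 1 if hit else 0
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Precision envelope: each point takes the best precision to its right
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Average the envelope at evenly spaced recall levels
    total = 0.0
    for k in range(num_points):
        level = k / (num_points - 1)
        for precision, recall in zip(precisions, recalls):
            if recall >= level:
                total += precision
                break
    return total / num_points


# Two ground-truth objects: one correct detection followed by one false alarm
ap = average_precision([True, False], num_ground_truth=2)
```

mAP is then the mean of this value over all classes (and, for the COCO metric, over the IOU thresholds as well).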

2018/8/30: Uploaded data/annotations for custom_data_train.ipynb. All notebooks should be working now

2018/9/11: Adapt data augmentations

2018/9/30: New loss function. Adapt darknet cfg augmentations parameters

2018/11/04: Accumulated gradients. Support use of subdivisions for GPUs with less memory

2018/12/15: Multi-scale training. New DataHelper class for batch scheduling. custom_data_train.ipynb replaced by cvat_data_train.ipynb. Deterministic data loading with Pytorch's dataset/dataloader. Training now resume-able between batches instead of epochs while maintaining deterministic behavior.

2019/1/23: Add mAP evaluation. NMS speed improvement by reducing operations in loops. Support up to Pytorch 0.4.1.

TODO:

- Integrate pycocotools for evaluation

- mAP (mean average precision)

- Data augmentation (random crop, rotate)

- Implement backhook for YoloNet branching

- Feed Video to detector

- Fix possible CUDA memory leaks

- Fix class and variable names