Deep Speaker: an End-to-End Neural Speaker Embedding System https://arxiv.org/pdf/1705.02304.pdf

Due to the difficulty of the task (very large models and very large datasets) and the lack of information from Baidu, I've started a new approach to the deep speaker project in the v2 folder of this repository. Once I have something fully working, I'll resume work on this bigger implementation.

This code is not functional yet! I'm calling for contributors to help make a great implementation! The basic components are already in place. Thanks!

Work accomplished so far:

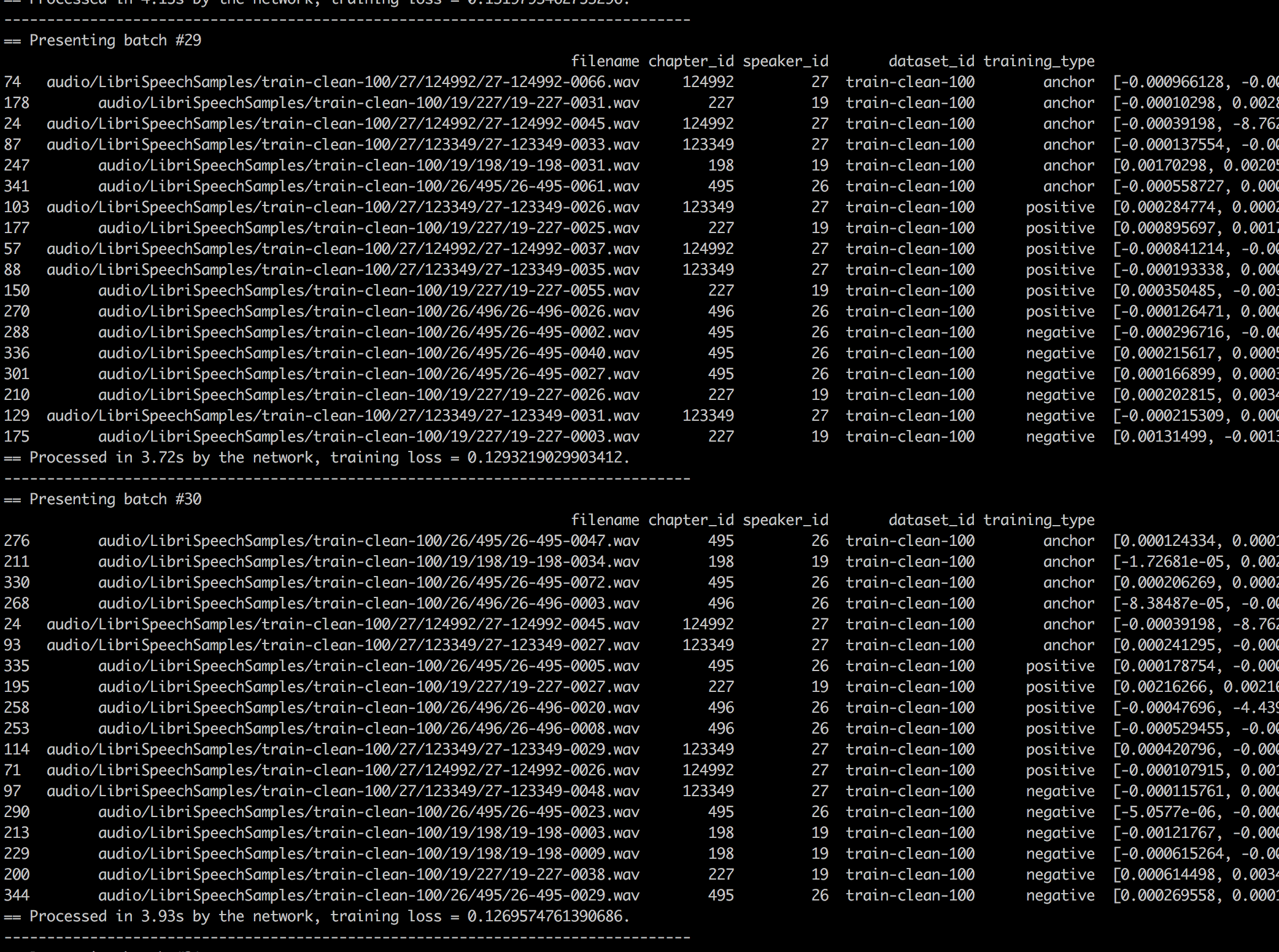

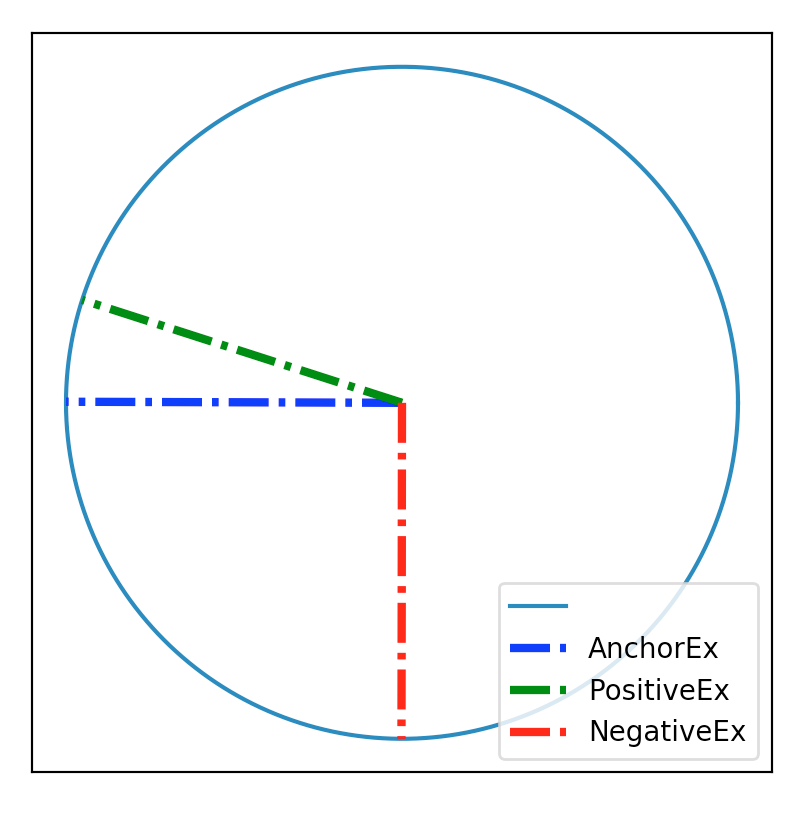

- Triplet loss

- Triplet loss test

- Model implementation

- Data pipeline implementation. We're going to use the LibriSpeech dataset with 2300+ different speakers.

- Train the models

Simply run these commands:

git clone https://github.com/philipperemy/deep-speaker.git

cd deep-speaker

pip3 install -r requirements.txt

cd audio/

./convert_flac_2_wav.sh # make sure ffmpeg is installed!

cd ..

python3 models_train.py
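LibriSpeech is distributed as .flac files, and the conversion step above turns them into the .wav files the data pipeline reads. For readers without a POSIX shell, here is a rough Python equivalent. It is a hypothetical sketch, not the contents of convert_flac_2_wav.sh: it assumes each .wav is written next to its .flac with the same name, and the helper names `flac_to_wav_commands` and `convert_all` are illustrative only.

```python
import subprocess
from pathlib import Path


def flac_to_wav_commands(root):
    """Build one ffmpeg command per .flac file found (recursively) under `root`.

    Hypothetical helper: each .wav is written next to its source file,
    keeping the same stem (e.g. 1272-128104-0000.flac -> 1272-128104-0000.wav).
    """
    commands = []
    for flac_path in sorted(Path(root).rglob("*.flac")):
        wav_path = flac_path.with_suffix(".wav")
        # -y overwrites an existing .wav; -loglevel error keeps ffmpeg quiet.
        commands.append(["ffmpeg", "-y", "-loglevel", "error",
                         "-i", str(flac_path), str(wav_path)])
    return commands


def convert_all(root, dry_run=True):
    """Print (dry run) or execute the ffmpeg command for every .flac under `root`."""
    commands = flac_to_wav_commands(root)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # Raises CalledProcessError if ffmpeg fails, FileNotFoundError if it is not on PATH.
            subprocess.run(cmd, check=True)
    return commands
```

Running `convert_all("path/to/LibriSpeech")` first only prints the commands, which is a cheap way to check the paths before passing `dry_run=False`.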

Prerequisites:

- TensorFlow installed: https://www.tensorflow.org/install/install_linux

- sudo apt-get install python3-tk ffmpeg

- ~6 GB of memory

On Windows:

- Install ffmpeg (and add it to your PATH)

- Use Git Bash to run: cd audio; ./convert_flac_2_wav.sh

- The other steps are analogous to the ones above

Please message me if you want to contribute; I'll be happy to hear your ideas. Many details are not disclosed in the paper, such as:

- Input size to the network? Which inputs exactly?

- How many filter banks do we use?

- Sample rate?
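These choices jointly determine the shape of the network input. As a rough sketch, assuming the 16 kHz sample rate at which LibriSpeech is distributed plus a hypothetical 25 ms window, 10 ms hop and 64 mel filters (none of these framing values come from the paper), the size of a filter-bank feature matrix can be estimated like this:

```python
import math


def hz_to_mel(f_hz):
    # HTK-style mel scale.
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m):
    # Inverse of hz_to_mel.
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_center_frequencies(num_filters, sample_rate, f_min=0.0):
    """Center frequencies (Hz) of `num_filters` triangular filters,
    equally spaced on the mel scale between f_min and Nyquist."""
    f_max = sample_rate / 2.0
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (num_filters + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(num_filters)]


def input_shape(duration_s, sample_rate=16000, win_ms=25.0, hop_ms=10.0,
                num_filters=64):
    """(num_frames, num_filters) of a filter-bank matrix, with no padding.

    Default values are assumptions for illustration, not the paper's settings.
    """
    num_samples = int(round(duration_s * sample_rate))
    win = int(sample_rate * win_ms / 1000)   # samples per frame
    hop = int(sample_rate * hop_ms / 1000)   # samples between frame starts
    if num_samples < win:
        return (0, num_filters)
    return (1 + (num_samples - win) // hop, num_filters)
```

For example, `input_shape(1.0)` gives `(98, 64)` under these assumptions: 16,000 samples, a 400-sample window and a 160-sample hop.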

The LibriSpeech dataset is available here: http://www.openslr.org/12/

List of other possible datasets: http://kaldi-asr.org/doc/examples.html

Extract of this dataset:

| | filenames | chapter_id | speaker_id | dataset_id |
|---|---|---|---|---|
| 0 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0000.wav | 128104 | 1272 | dev-clean |
| 1 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0001.wav | 128104 | 1272 | dev-clean |
| 2 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0002.wav | 128104 | 1272 | dev-clean |
| 3 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0003.wav | 128104 | 1272 | dev-clean |
| 4 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0004.wav | 128104 | 1272 | dev-clean |
| 5 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0005.wav | 128104 | 1272 | dev-clean |
| 6 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0006.wav | 128104 | 1272 | dev-clean |
| 7 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0007.wav | 128104 | 1272 | dev-clean |
| 8 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0008.wav | 128104 | 1272 | dev-clean |
| 9 | /Volumes/Transcend/data-set/LibriSpeech/dev-clean/1272/128104/1272-128104-0009.wav | 128104 | 1272 | dev-clean |
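Every column in this extract can be recovered from the path alone, because LibriSpeech stores utterances as dataset_id/speaker_id/chapter_id/speaker-chapter-utterance. A minimal sketch, assuming that layout, that rebuilds these columns, groups utterances by speaker and draws a random (anchor, positive, negative) triplet; the function names are hypothetical and not taken from this repository:

```python
import random
from collections import defaultdict
from pathlib import PurePosixPath


def parse_librispeech_path(path):
    """Split .../<dataset_id>/<speaker_id>/<chapter_id>/<spk>-<chap>-<utt>.wav
    into the columns of the table above (ids kept as strings)."""
    p = PurePosixPath(path)
    chapter_id = p.parent.name
    speaker_id = p.parent.parent.name
    dataset_id = p.parent.parent.parent.name
    # Sanity check: the file name repeats the speaker and chapter ids.
    spk, chap, _utt = p.stem.split("-")
    if (spk, chap) != (speaker_id, chapter_id):
        raise ValueError(f"inconsistent LibriSpeech path: {path}")
    return {"filenames": str(p), "chapter_id": chapter_id,
            "speaker_id": speaker_id, "dataset_id": dataset_id}


def group_by_speaker(paths):
    """Map speaker_id -> list of utterance paths."""
    by_speaker = defaultdict(list)
    for path in paths:
        by_speaker[parse_librispeech_path(path)["speaker_id"]].append(path)
    return dict(by_speaker)


def sample_triplet(by_speaker, rng):
    """Random (anchor, positive, negative): anchor and positive share a speaker,
    the negative comes from a different one. Needs at least two speakers and
    at least one speaker with two or more utterances."""
    eligible = [s for s, utts in by_speaker.items() if len(utts) >= 2]
    pos_speaker = rng.choice(eligible)
    neg_speaker = rng.choice([s for s in by_speaker if s != pos_speaker])
    anchor, positive = rng.sample(by_speaker[pos_speaker], 2)
    negative = rng.choice(by_speaker[neg_speaker])
    return anchor, positive, negative
```

Passing a seeded `random.Random` makes the sampled triplets reproducible between runs.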

Visualization of a possible triplet (Anchor, Positive, Negative) in the cosine similarity space
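A minimal sketch of a triplet loss on cosine similarities, in the hinge form max(s_an - s_ap + margin, 0), where s_ap and s_an are the anchor-positive and anchor-negative cosine similarities. The margin of 0.1 is an illustrative assumption, and this is plain Python for clarity rather than the TensorFlow code in this repository:

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def triplet_loss(anchor, positive, negative, margin=0.1):
    """Hinge loss: zero once the positive is at least `margin` more
    cosine-similar to the anchor than the negative is."""
    s_ap = cosine_similarity(anchor, positive)
    s_an = cosine_similarity(anchor, negative)
    return max(s_an - s_ap + margin, 0.0)


def batch_triplet_loss(triplets, margin=0.1):
    """Sum of per-triplet losses over an iterable of (anchor, positive, negative)."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets)
```

With anchor [1, 0], positive [0, 1] and negative [1, 0], the negative is the more similar one, so the loss is 1 - 0 + 0.1 = 1.1; swapping positive and negative drives it to zero. Only triplets that violate the margin contribute, which is why the choice of triplets matters so much during training.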