This is the official repository for the Track 2: Multi-Modal Object Detection challenge (ICPR 2024).

This challenge focuses on object detection using multi-modal data sources, including RGB, depth, and infrared images. Visit the official website for more details, or participate in this track directly on Codalab.

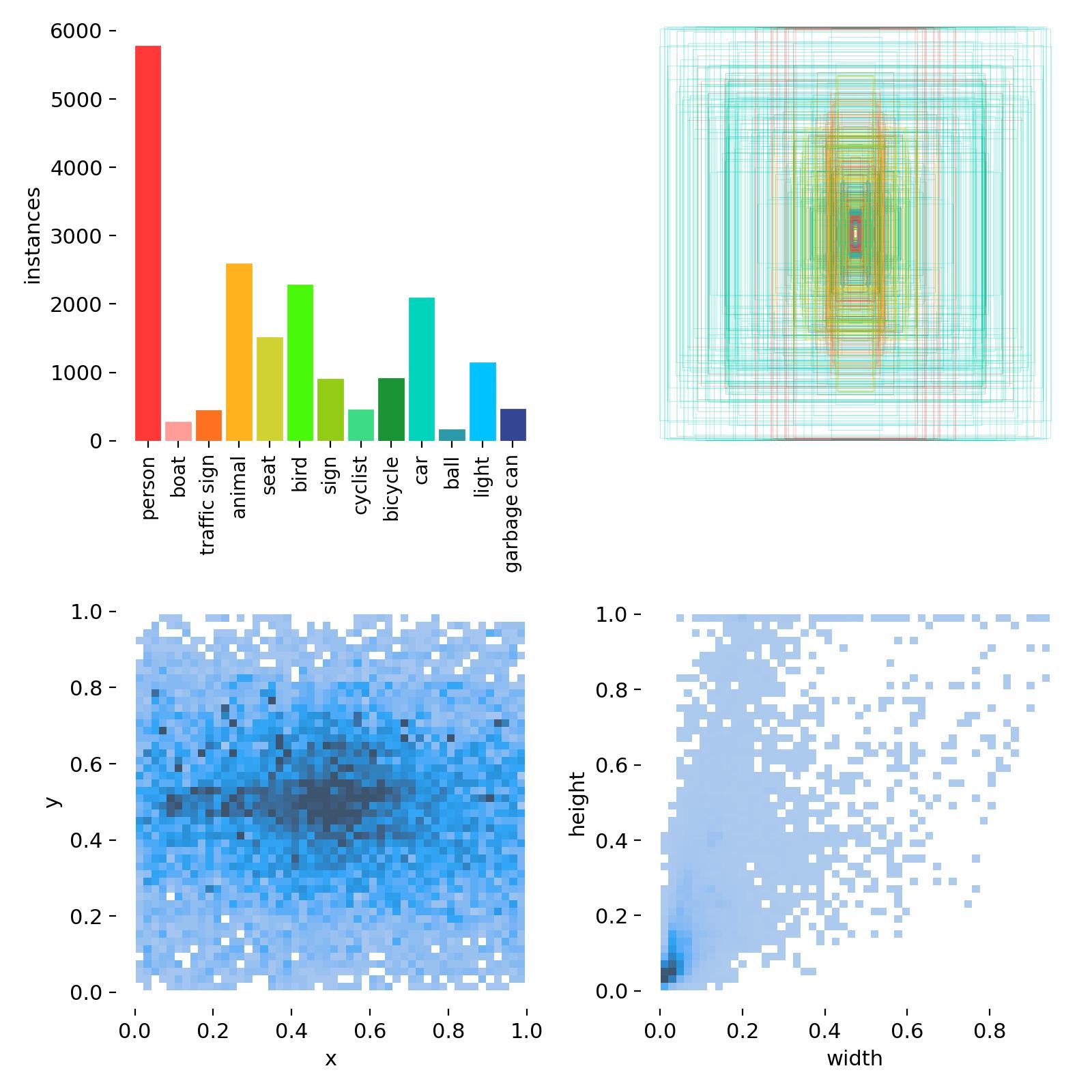

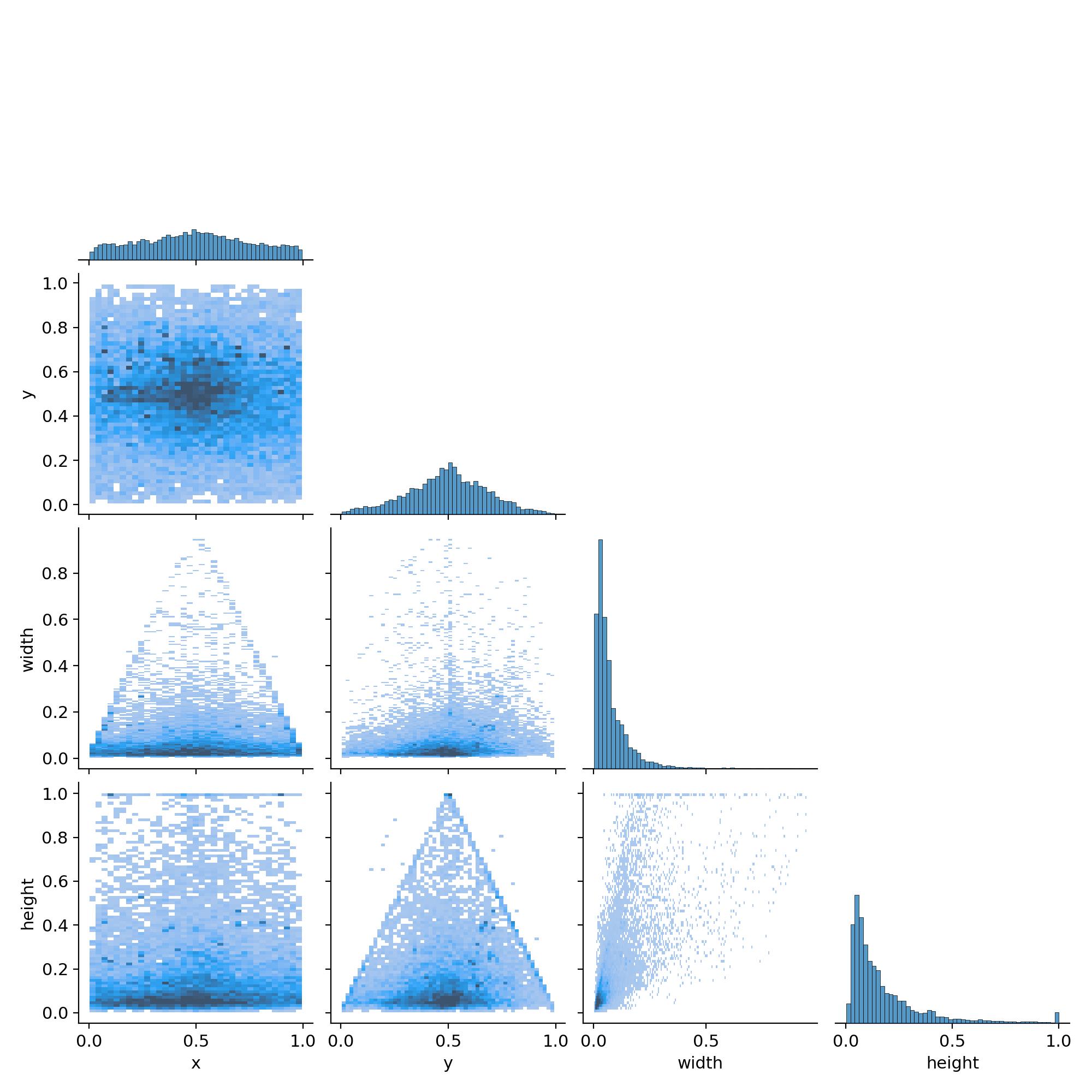

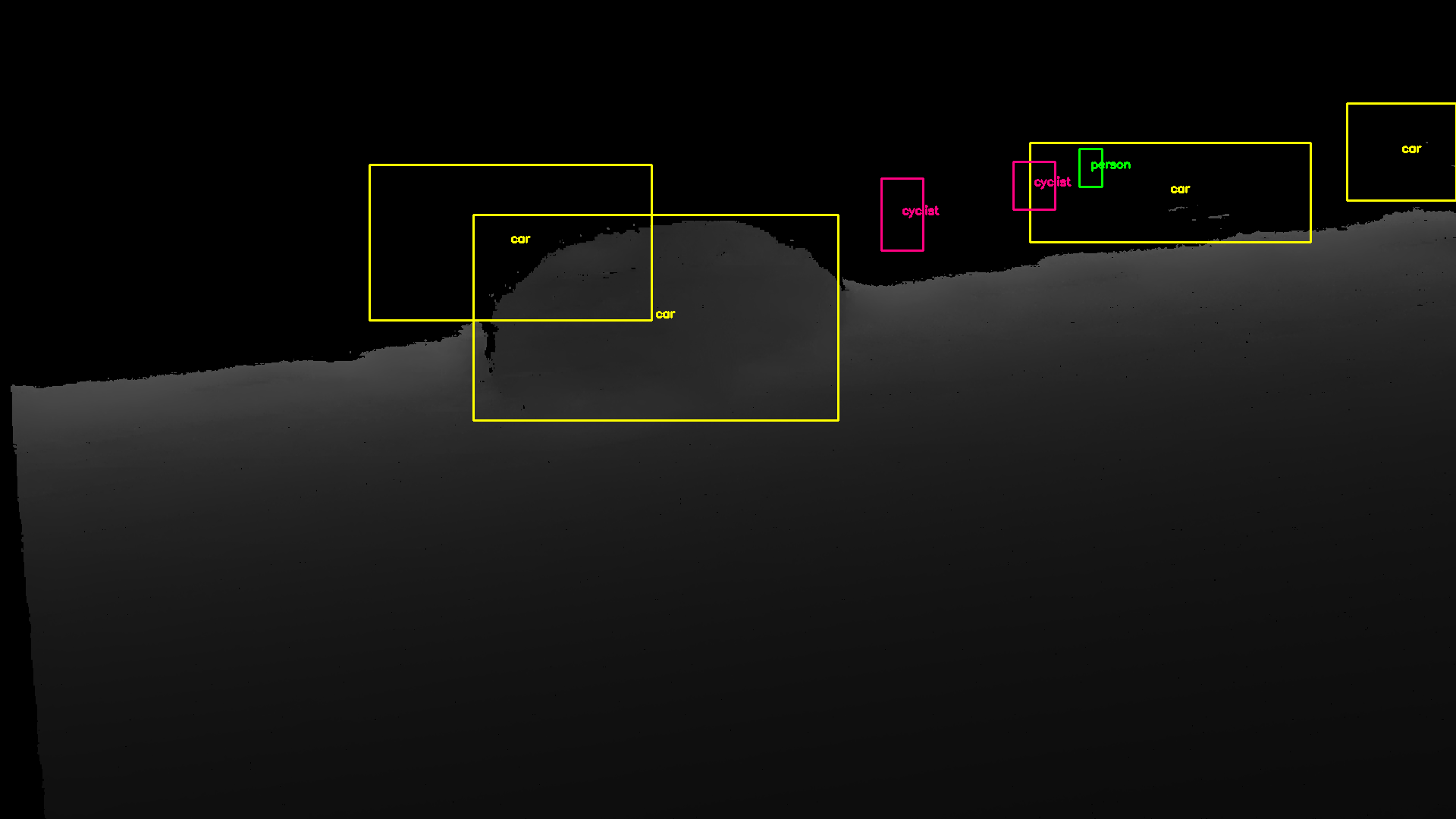

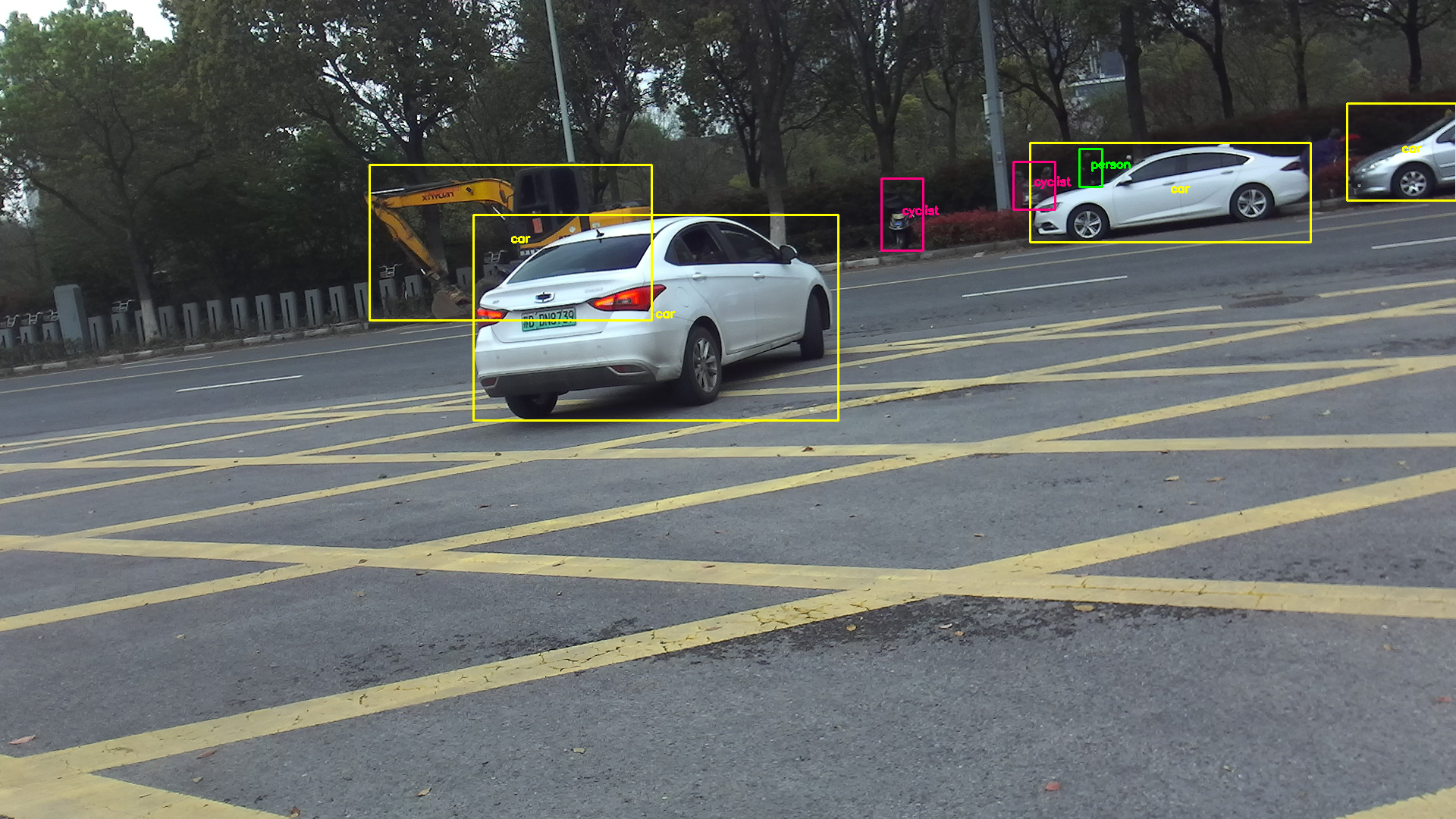

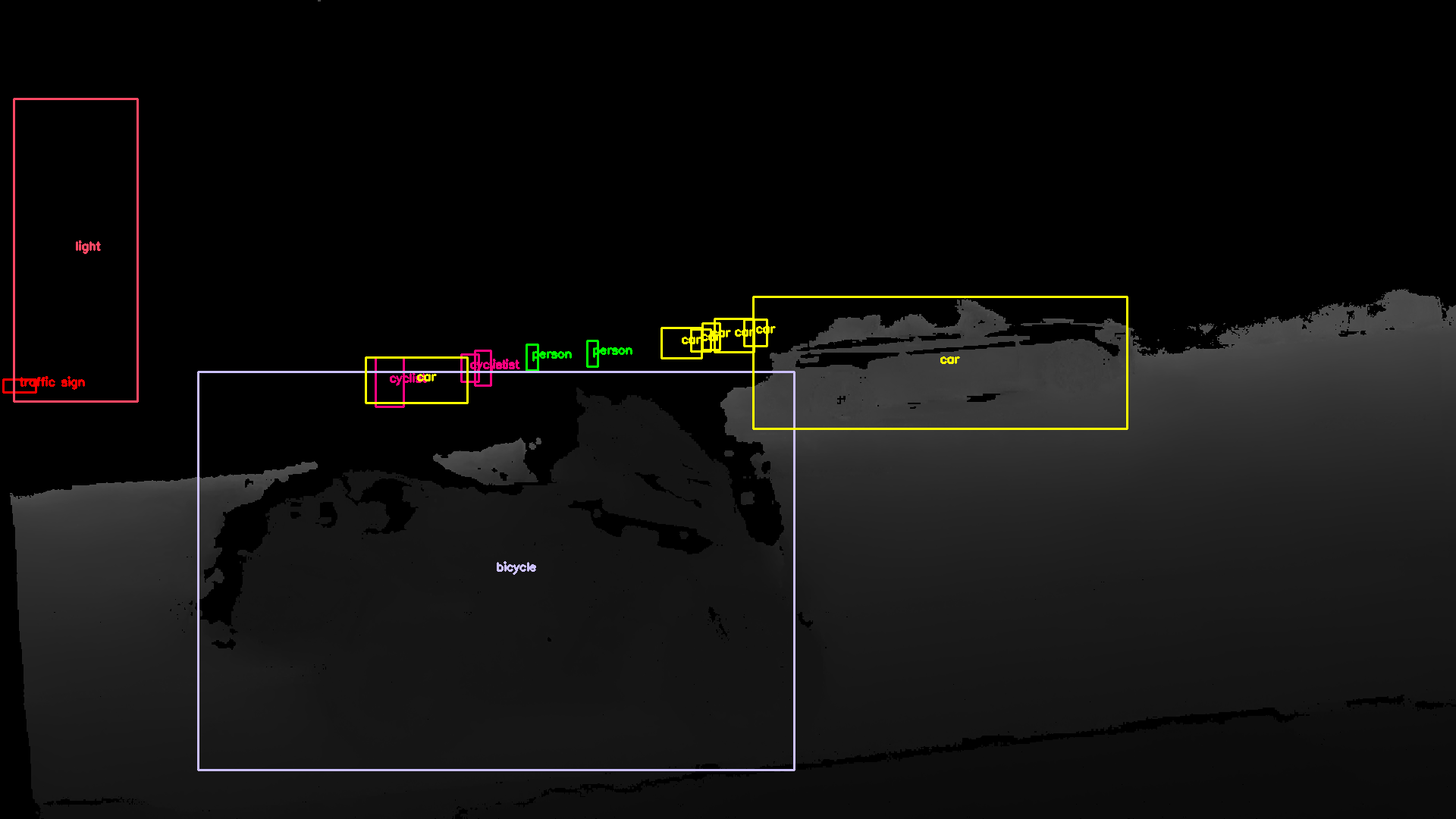

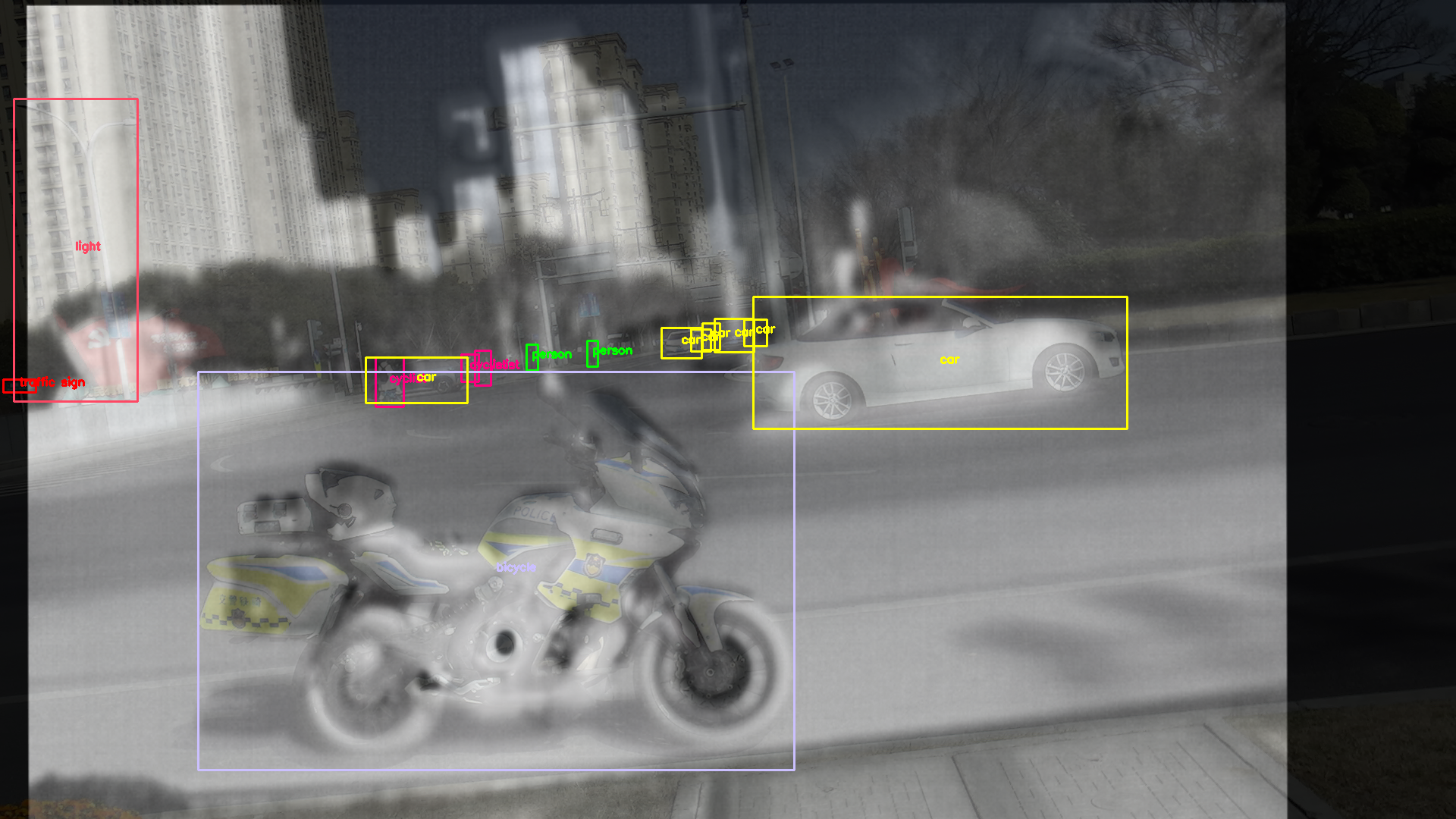

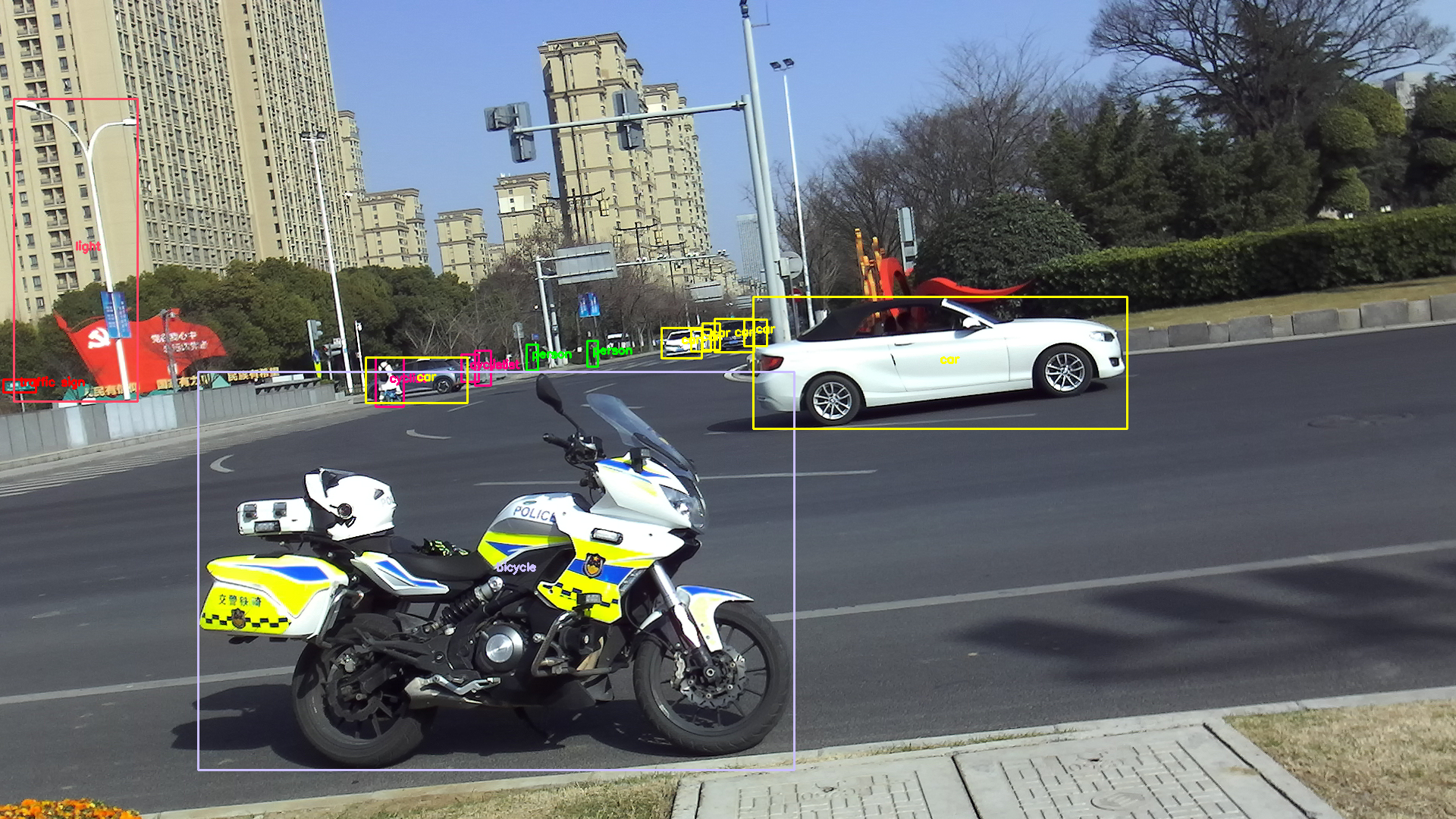

In this track, we provide a dataset named ICPR_JNU MDetection-v1, which comprises 5,000 multi-modal image sets (RGB, depth, and infrared; 4,000 for training and 1,000 for testing) across 13 classes. Details are given below. To participate in this track, please submit your request by selecting "Challenge Track 2: Multi-modal Detection" in this Online Form and filling out the other fields.

| Labels | Labels Correlation |
|---|---|

| Depth | Thermal-IR | RGB |
|---|---|---|

    ICPR_JNU MDetection-v1
    ├── /images/
    │   ├── train
    │   │   ├── color
    │   │   │   ├── train_0001.png
    │   │   │   ├── train_0002.png
    │   │   │   ├── ... ...
    │   │   │   └── train_4000.png
    │   │   ├── depth
    │   │   │   ├── train_0001.png
    │   │   │   ├── train_0002.png
    │   │   │   ├── ... ...
    │   │   │   └── train_4000.png
    │   │   └── infrared
    │   │       ├── train_0001.png
    │   │       ├── train_0002.png
    │   │       ├── ... ...
    │   │       └── train_4000.png
    │   ├── val
    │   │   └── ... ...
    │   └── test
    │       ├── color
    │       │   ├── test_0001.png
    │       │   ├── test_0002.png
    │       │   ├── ... ...
    │       │   └── test_1000.png
    │       ├── depth
    │       │   ├── test_0001.png
    │       │   ├── test_0002.png
    │       │   ├── ... ...
    │       │   └── test_1000.png
    │       └── infrared
    │           ├── test_0001.png
    │           ├── test_0002.png
    │           ├── ... ...
    │           └── test_1000.png
    └── /labels/
        ├── /train/color/
        │   ├── train_0001.txt
        │   ├── train_0002.txt
        │   ├── ... ...
        │   └── train_4000.txt
        ├── /val/color/
        │   └── ... ...
        └── /test/color/
            ├── test_0001.txt
            ├── test_0002.txt
            ├── ... ...
            └── test_1000.txt
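In the layout above, the three modalities and the label file of one sample share a file name across parallel folders. Before training, it can be worth checking that every sample is complete. The following is a minimal sketch that assumes exactly the folder names and zero-padded four-digit indices shown in the tree; `sample_paths` and `missing_files` are hypothetical helpers, not part of this repository.

```python
from pathlib import Path

# Image sub-folders as shown in the directory tree (assumed names).
MODALITIES = ("color", "depth", "infrared")


def sample_paths(root, split, index):
    """Return the expected image and label paths for one sample.

    Assumes images/<split>/<modality>/<split>_NNNN.png and
    labels/<split>/color/<split>_NNNN.txt with 4-digit zero-padded indices.
    """
    root = Path(root)
    stem = f"{split}_{index:04d}"
    paths = {m: root / "images" / split / m / f"{stem}.png" for m in MODALITIES}
    paths["label"] = root / "labels" / split / "color" / f"{stem}.txt"
    return paths


def missing_files(root, split, count):
    """List every expected file (images and label) that does not exist on disk."""
    missing = []
    for index in range(1, count + 1):
        for path in sample_paths(root, split, index).values():
            if not path.is_file():
                missing.append(path)
    return missing
```

For example, `missing_files("/path/to/ICPR_JNU MDetection-v1", "train", 4000)` should return an empty list for a complete training set; the same call with `"test"` and `1000` checks the test split, whose label files exist even though they are blank.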

This code is based on YOLOv5; follow README_yolo.md or README_yolo.zh-CN.md first to set up the environment. We have modified it to accommodate this multi-modal task, but you are also free to build your own model for it.
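If you build your own model, one simple baseline (an illustration, not necessarily what the modified code here does) is early fusion: stack the aligned modalities into a single multi-channel input. The sketch below assumes the color image is H x W x 3, depth and infrared are single-channel H x W arrays, and all three are pixel-aligned; `early_fuse` is a hypothetical name. If the infrared PNGs turn out to be stored with three channels, the output will have 7 channels instead of 5. In upstream YOLOv5, the model class in models/yolo.py takes a `ch` argument for the number of input channels, so a fused input would need that value changed; whether this repository's modified code follows the same convention is not confirmed here.

```python
import numpy as np


def early_fuse(color, depth, infrared):
    """Stack aligned RGB, depth and infrared images into one H x W x C float32 array.

    Assumptions (not taken from this repository): all inputs share height and
    width, and integer images are scaled to [0, 1] by the maximum of their dtype
    (e.g. 255 for uint8, 65535 for uint16 depth).
    """
    def to_unit(img):
        img = np.asarray(img)
        if img.ndim == 2:  # single-channel image -> add a trailing channel axis
            img = img[..., None]
        if np.issubdtype(img.dtype, np.integer):
            scale = np.iinfo(img.dtype).max
        else:
            scale = 1.0  # float input is assumed to be normalized already
        return img.astype(np.float32) / scale

    parts = [to_unit(color), to_unit(depth), to_unit(infrared)]
    if len({p.shape[:2] for p in parts}) != 1:
        raise ValueError("modalities must share the same height and width")
    # Channel order: R, G, B, depth, infrared.
    return np.concatenate(parts, axis=-1)
```

Scaling each modality by its own dtype range keeps 16-bit depth values from dominating 8-bit RGB; per-dataset mean/std normalization is a common alternative.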

We provide /data/ICPR_JNU_MMDetection_v1.yaml as the dataset configuration file for this task. Prepare the dataset and change the path in this file to point to your own copy.

- ❗Note: No validation set is provided. Split off an appropriate part of the training set yourself to validate during training.

- To build your own model, you need to redesign at least the modules in ./models/yolo.py.

- To train your own model, first adjust the hyperparameters in ./data/hyps.

- Train your own model directly using:

python train.py
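Because no validation set is provided, part of the training set has to be held out. One way to do this, sketched below, is to move a random fraction of samples from train/ into val/ for every modality and for the labels, matching the val folders in the tree. This assumes the dataloader finds a sample's depth and infrared images and its label through the same file name in parallel folders, as the tree suggests. The 10% fraction, the seed, and the helper names `split_ids` and `move_to_val` are illustrative, not values from this repository. Files are moved rather than copied, so keep a backup of the original data, and update the validation path in /data/ICPR_JNU_MMDetection_v1.yaml accordingly.

```python
import random
import shutil
from pathlib import Path

# Image sub-folders as shown in the directory tree (assumed names).
MODALITIES = ("color", "depth", "infrared")


def split_ids(ids, val_fraction=0.1, seed=0):
    """Deterministically split sample stems (e.g. 'train_0001') into (train, val)."""
    ids = sorted(ids)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    rng.shuffle(ids)
    n_val = int(round(len(ids) * val_fraction))
    return sorted(ids[n_val:]), sorted(ids[:n_val])


def move_to_val(root, val_ids):
    """Move the chosen samples from train/ to val/ for all modalities and labels."""
    root = Path(root)
    for stem in val_ids:
        for m in MODALITIES:
            dst = root / "images" / "val" / m
            dst.mkdir(parents=True, exist_ok=True)
            src = root / "images" / "train" / m / f"{stem}.png"
            shutil.move(str(src), str(dst / f"{stem}.png"))
        dst = root / "labels" / "val" / "color"
        dst.mkdir(parents=True, exist_ok=True)
        src = root / "labels" / "train" / "color" / f"{stem}.txt"
        shutil.move(str(src), str(dst / f"{stem}.txt"))
```

Typical use: collect the stems with `[p.stem for p in Path(root, "images", "train", "color").glob("*.png")]`, pass them to `split_ids`, then hand the validation stems to `move_to_val`. The moved files keep their `train_NNNN` names, which is harmless as long as the loader only relies on matching names across modalities.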

Generate the predictions file pred.zip for the test set:

python test_model.py

- ❗Note: The label files in the test set are all blank; they are provided only so that you can generate your predictions conveniently.

The results file, pred.zip, will be generated automatically, and it is the only file you need to submit to Codalab for evaluation. More details on the evaluation can be found here.
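The label files are plain text. Since this code is based on YOLOv5, they are presumably in the YOLO format: one object per line as `class x_center y_center width height`, with zero-based class IDs and coordinates normalized to [0, 1]. This is an assumption, so check a few training files before relying on it. The hypothetical helper below parses such text and treats a blank file, like those in the test set, as an image with no objects.

```python
from pathlib import Path
from typing import List, NamedTuple


class Box(NamedTuple):
    """One object in assumed YOLO format (normalized center/size)."""
    cls: int
    x_center: float
    y_center: float
    width: float
    height: float


def parse_label_text(text: str, num_classes: int = 13) -> List[Box]:
    """Parse YOLO-style label text; blank text yields an empty list."""
    boxes = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        if not fields:  # skip blank lines (test-set files are entirely blank)
            continue
        if len(fields) != 5:
            raise ValueError(f"line {line_no}: expected 5 fields, got {len(fields)}")
        cls = int(fields[0])
        if not 0 <= cls < num_classes:  # dataset has 13 classes; zero-based IDs assumed
            raise ValueError(f"line {line_no}: class id {cls} outside [0, {num_classes})")
        boxes.append(Box(cls, *map(float, fields[1:])))
    return boxes


def parse_label_file(path) -> List[Box]:
    """Read and parse one label .txt file."""
    return parse_label_text(Path(path).read_text())
```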

If you have any questions, please email us at yangxiao2326@gmail.com.