Yanyang Han, Ju Liu, Xiaoxi Liu, Xiao Jiang, Lingchen Gu, Xuesong Gao, Weiqiang Chen

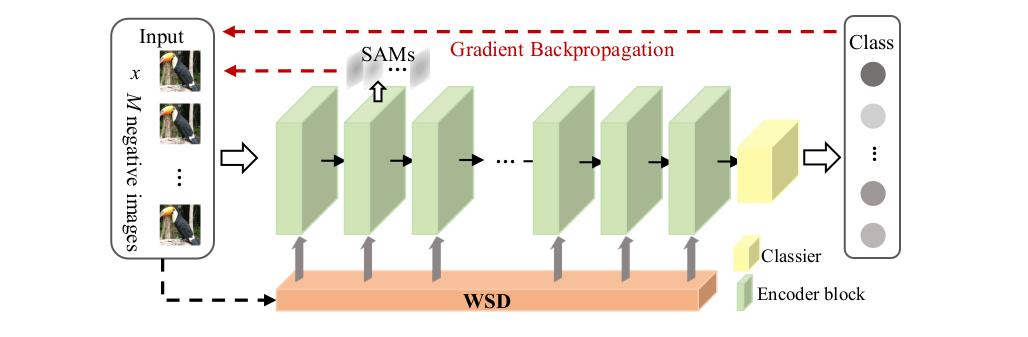

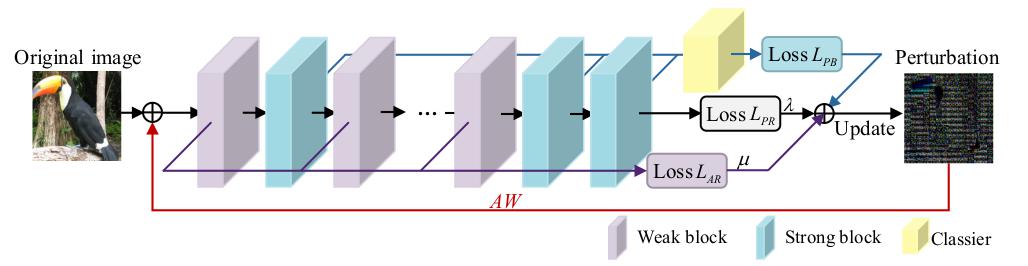

Abstract: Adversarial examples can attack multiple unknown convolutional neural networks (CNNs) due to adversarial transferability, which reveals the vulnerability of CNNs and facilitates the development of adversarial attacks. However, most existing adversarial attack methods have limited transferability on vision transformers (ViTs). In this paper, we propose a partial blocks search attack (PBSA) method that generates adversarial examples on ViTs with significantly enhanced transferability. Instead of applying the same strategy to all encoder blocks of a ViT, we divide the encoder blocks into two categories by introducing a block weight score and process each category with a distinct strategy. In addition, we optimize the generation of perturbations by regularizing the self-attention feature maps and by creating an ensemble of partial blocks. Finally, the perturbations are adjusted by an adaptive weight so that they disturb the most effective pixels of the original images. We conduct extensive experiments on the ImageNet dataset to demonstrate the validity and effectiveness of PBSA. The experimental results show that PBSA outperforms state-of-the-art attack methods on both ViTs and CNNs. Furthermore, PBSA can be flexibly combined with existing methods to significantly enhance the transferability of adversarial examples.
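Transferability is about adversarial examples crafted on a source model fooling a different, unseen target model. As a minimal sketch, assuming an untargeted setting, it is commonly reported as the attack success rate on the target: the fraction of adversarial examples that the target model misclassifies. The helper `transfer_success_rate` is hypothetical and not part of this repository, whose evaluation may differ (for example, some evaluations count only images the target classifies correctly before the attack).

```python
def transfer_success_rate(target_preds, true_labels):
    """Untargeted attack success rate on a target model (hypothetical helper).

    target_preds: labels predicted by the target model on adversarial examples
                  that were crafted on a (different) source model.
    true_labels:  ground-truth labels of the original images.
    """
    if len(target_preds) != len(true_labels):
        raise ValueError("predictions and labels must have the same length")
    if not true_labels:
        raise ValueError("need at least one example")
    # An example counts as a successful transfer if the target model is fooled.
    fooled = sum(p != y for p, y in zip(target_preds, true_labels))
    return fooled / len(true_labels)
```

For instance, `transfer_success_rate([3, 1, 7, 2], [3, 5, 7, 9])` is 0.5, since the target model is fooled on two of the four examples.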

```bash
pip install -r requirements.txt
```

We select 1000 images of different categories from the ILSVRC 2012 validation set. In our experiments, all of these images are resized to 224 × 224 × 3 before being fed into the ViTs, so that the inputs match the resolution expected by the pretrained model parameters.
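The image selection above can be sketched as follows, assuming one image per class and that the validation labels are already available as `(filename, label)` pairs. `select_one_per_class` is a hypothetical helper, not part of this repository, and taking the first image seen for each class is an assumed selection rule. Resizing to 224 × 224 would then be done with whatever image library the pipeline uses.

```python
def select_one_per_class(samples, num_classes=1000):
    """Keep the first image encountered for each class label (assumed rule).

    samples: iterable of (filename, label) pairs, e.g. parsed from the
             ILSVRC 2012 validation ground-truth labels.
    Returns a list of (filename, label) pairs in first-seen order.
    """
    chosen = {}  # label -> filename; dicts preserve insertion order
    for filename, label in samples:
        if label not in chosen:
            chosen[label] = filename
        if len(chosen) == num_classes:
            break  # one image for every class has been found
    return [(filename, label) for label, filename in chosen.items()]
```

For example, given `[("a.JPEG", 0), ("b.JPEG", 0), ("c.JPEG", 1)]` with `num_classes=2`, the helper keeps `a.JPEG` and `c.JPEG`.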


`DATA_DIR` points to the root directory containing the ImageNet validation images (the original ImageNet files). By default, we support the attack types FGSM, PGD, MI-FGSM, DIM, TI, and SGM.

```bash
python test.py \
  --test_dir "$DATA_DIR" \
  --src_model deit_tiny_patch16_224 \
  --tar_model tnt_s_patch16_224 \
  --attack_type pma \
  --eps 16 \
  --index "all" \
  --batch_size 128
```

For other model families, the pretrained models will have to be downloaded and the paths updated in the relevant files under `vit_models`.
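MI-FGSM, one of the default attack types listed above, can be illustrated with a minimal, framework-free sketch of the standard momentum iterative update: accumulate L1-normalized gradients with decay `mu`, step by `alpha` in the sign direction, and clip to an L∞ ball of radius `eps`. This is not the repository's implementation and not PBSA itself. The `grad_fn` interface, the 10 iterations, and reading `--eps 16` as 16/255 on a [0, 1] pixel scale are all assumptions.

```python
def sign(v):
    """Return 1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def mi_fgsm(x, grad_fn, eps=16 / 255, steps=10, mu=1.0):
    """Untargeted MI-FGSM on a flat list of pixel values in [0, 1].

    grad_fn(x) is a hypothetical callable returning the gradient of the
    attacked model's loss with respect to x (a list of the same length).
    """
    alpha = eps / steps   # step size so that `steps` steps can span eps
    g = [0.0] * len(x)    # momentum accumulator
    x_adv = list(x)
    for _ in range(steps):
        grad = grad_fn(x_adv)
        l1 = sum(abs(d) for d in grad) or 1.0  # L1 norm; guard against a zero gradient
        g = [mu * gi + d / l1 for gi, d in zip(g, grad)]
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Clip each pixel to the intersection of the L-infinity ball of
        # radius eps around the original x and the valid range [0, 1].
        x_adv = [min(max(xa, xo - eps, 0.0), xo + eps, 1.0)
                 for xa, xo in zip(x_adv, x)]
    return x_adv
```

With a toy linear loss whose gradient is the constant `[1.0, -2.0, 0.0]`, `mi_fgsm([0.5, 0.5, 0.5], lambda x: [1.0, -2.0, 0.0])` raises the first pixel to about 0.5 + 16/255, lowers the second by the same amount, and leaves the third unchanged. In practice, `grad_fn` would come from backpropagation through the source model.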

Code is borrowed from the DeiT repository, the TRM repository, and the TIMM library. We thank their authors for their wonderful code bases.

If you find our work useful, please consider giving this repository a star ⭐ and citing our paper:

Han, Y., Liu, J., Liu, X. et al. Enhancing adversarial transferability with partial blocks on vision transformer. Neural Comput & Applic (2022). https://doi.org/10.1007/s00521-022-07568-9