TensorFlow implementation of Wasserstein GAN

Other implementations:

DCGAN model/ops are a modified version of Taehoon Kim's implementation @carpedm20.

Download dataset:

```
python download.py celebA
```

To train:

```
python main.py --dataset celebA --is_train --is_crop
```

OR modify and use run.py:

python run.pyNote: a NumPy array of the input data is created by default. This is to avoid batch by batch IO. You can turn this option off if the available memory is too small on your system or if your dataset is too large.

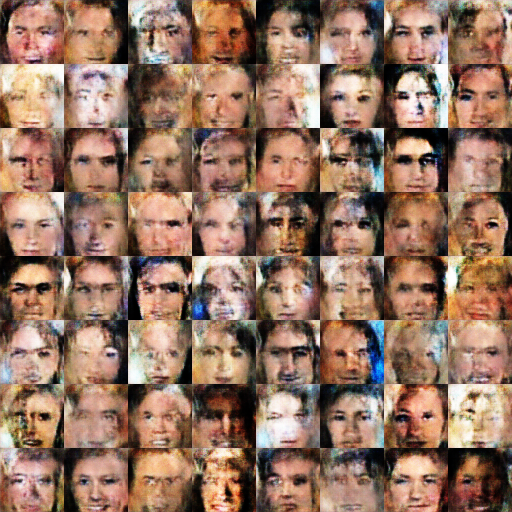

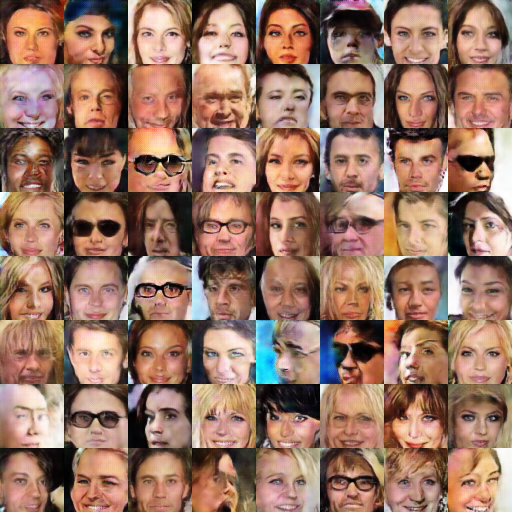

python main.py --dataset celebA --is_train --is_crop --preload_data FalseMy experiments show that training with a Wasserstein loss is much more stable than the conventional heuristic loss . However, the generated image quality is much lower. See examples below.
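For readers comparing the two objectives, here is a minimal pure-Python sketch of how the losses are commonly written. It is not taken from this repository's code: the function names are hypothetical, the "heuristic" loss is assumed to be the non-saturating sigmoid cross-entropy form, and the weight clipping constant `c=0.01` is the default from the original WGAN paper, not a value confirmed here.

```python
import math


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mean(values):
    return sum(values) / len(values)


def heuristic_losses(real_logits, fake_logits):
    # Assumed form: sigmoid cross-entropy on discriminator logits.
    # -log(sigmoid(z)) == softplus(-z) and -log(1 - sigmoid(z)) == softplus(z).
    d_loss = mean([softplus(-z) for z in real_logits]) + mean(
        [softplus(z) for z in fake_logits]
    )
    # Non-saturating generator loss: push fake logits toward "real".
    g_loss = mean([softplus(-z) for z in fake_logits])
    return d_loss, g_loss


def wasserstein_losses(real_scores, fake_scores):
    # The critic outputs unbounded scores (no sigmoid).
    # Minimizing this widens the gap between real and fake scores.
    critic_loss = mean(fake_scores) - mean(real_scores)
    g_loss = -mean(fake_scores)
    return critic_loss, g_loss


def clip_weights(weights, c=0.01):
    # Weight clipping keeps the critic roughly Lipschitz (assumed c=0.01).
    return [min(max(w, -c), c) for w in weights]
```

In the Wasserstein case the critic loss is an estimate of a distance rather than a classification error, which is one common explanation for the smoother training behavior described above.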

The architectures of the generator and discriminator (critic) are the same in both experiments. For cross-checking purposes, here are the differences:

- Batch normalization parameters: epsilon = 1e-3 and momentum = 0.99 for Wasserstein; epsilon = 1e-5 and momentum = 0.9 for heuristic.

- Optimizer: RMSProp for Wasserstein; Adam for heuristic.
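To make the batch normalization difference concrete, the sketch below shows where epsilon and momentum enter a single training step for one scalar feature. It is an illustration only: `batch_norm_step` and `SETTINGS` are hypothetical names, and momentum is assumed to be the decay of the moving averages (the usual TensorFlow convention), which is not confirmed by this repository.

```python
import math

# Values from the list above; the dictionary itself is illustrative.
SETTINGS = {
    "wasserstein": {"epsilon": 1e-3, "momentum": 0.99},  # trained with RMSProp
    "heuristic": {"epsilon": 1e-5, "momentum": 0.9},     # trained with Adam
}


def batch_norm_step(batch, moving_mean, moving_var, epsilon, momentum):
    """Normalize one batch of scalars and update the moving statistics."""
    n = len(batch)
    batch_mean = sum(batch) / n
    batch_var = sum((x - batch_mean) ** 2 for x in batch) / n
    # epsilon guards against division by zero for low-variance batches.
    normalized = [(x - batch_mean) / math.sqrt(batch_var + epsilon) for x in batch]
    # Higher momentum means the moving statistics change more slowly.
    new_mean = momentum * moving_mean + (1.0 - momentum) * batch_mean
    new_var = momentum * moving_var + (1.0 - momentum) * batch_var
    return normalized, new_mean, new_var


batch = [1.0, 3.0]
for name, params in SETTINGS.items():
    _, m, v = batch_norm_step(batch, 0.0, 1.0, **params)
    print(name, "moving mean:", m, "moving var:", v)
```

With momentum = 0.99 the moving mean moves only 1% of the way toward each batch mean per step, versus 10% with momentum = 0.9, so the statistics used at inference time lag further behind during early training.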