This project demonstrates how to extend an LLM's capability to answer questions about a given document. The project consists of two programs: one for preparation, and the other for question answering with an LLM. The preparation program reads a PDF file and generates a database (vector store). At query time, the chatbot retrieves the fragments of the input document that are relevant to the user's query from the vector store, and the LLM answers the query by referring to those fragments. This technique is called RAG (Retrieval-Augmented Generation).
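The retrieve-then-answer flow described above can be sketched in a few lines. This is a toy illustration, not the project's implementation: `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helpers, and a bag-of-words count vector stands in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved chunks in front of the question for the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}\nAnswer:"


# Illustrative document chunks (made up for this sketch).
chunks = [
    "OpenVINO runs inference on CPU and GPU devices.",
    "The vectorstore holds embeddings of document chunks.",
    "Bananas are rich in potassium.",
]
query = "What does the vectorstore hold?"
top = retrieve(query, chunks, k=1)
prompt = build_prompt(query, top)
```

In a real pipeline the prompt would be passed to the LLM; here it only shows how the retrieved fragments end up in the model's input.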

| # | file name | description |
|---|---|---|
| 1 | vectorstore_generator.py | Reads a PDF file and generates a vectorstore. You can modify this program to read other document file formats for use in this RAG chatbot demo. |
| 2 | openvino-chatbot-rag-pdf.py | LLM chatbot using OpenVINO. Answers queries by referring to a vectorstore. |
| 3 | llm-model-downloader.py | Downloads LLM models from HuggingFace and converts them into OpenVINO IR models. This program downloads the following models by default: dolly-v2-3b, neural-chat-7b-v3-1, tinyllama-1.1b-chat-v0.6, youri-7b-chat. You can download llama2-7b-chat by uncommenting some lines in the code. |
| 4 | .env | Some configurations (model name, model precision, inference device, etc.) |
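As a rough illustration of how settings in a `.env` file can be read, the sketch below parses simple `KEY=VALUE` lines with the standard library. The key names (`MODEL_NAME`, `MODEL_PRECISION`, `INFERENCE_DEVICE`) and values are hypothetical; check the project's own `.env` for the real ones.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        config[key.strip()] = value.strip().strip('"').strip("'")
    return config


# Hypothetical contents; the real key names are defined in the project's .env file.
sample = """
# model settings (illustrative names only)
MODEL_NAME=dolly-v2-3b
MODEL_PRECISION=INT4
INFERENCE_DEVICE=CPU
"""
config = parse_env(sample)
```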

- Install prerequisites

python -m venv venv

venv\Scripts\activate

python -m pip install -U pip

pip install -U setuptools wheel

pip install -r requirements.txt

- Download LLM models

This program downloads the LLM models and converts them into OpenVINO IR models. If you don't want to download many LLM models, you can comment out the models in the code to save time.
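The download-and-convert step might look roughly like the sketch below. It assumes the Optimum Intel package (`optimum.intel.OVModelForCausalLM`) is used for the export, which this README does not confirm; the Hugging Face repository IDs are inferred from the model names listed here, and `output_dir`, `convert`, and `download_all` are hypothetical helpers. Commenting out an entry in `MODELS` skips that model.

```python
# Hugging Face repository IDs below are assumptions inferred from the model names.
MODELS = [
    "databricks/dolly-v2-3b",
    "Intel/neural-chat-7b-v3-1",
    "TinyLlama/TinyLlama-1.1B-Chat-v0.6",
    "rinna/youri-7b-chat",
    # "meta-llama/Llama-2-7b-chat-hf",  # uncomment to add llama2-7b-chat
]


def output_dir(model_id: str, root: str = "models") -> str:
    """Directory for the converted model, named after the repository (hypothetical layout)."""
    return f"{root}/{model_id.split('/')[-1]}"


def convert(model_id: str, out_dir: str) -> None:
    """Download a model and export it to OpenVINO IR (assumes optimum-intel is installed)."""
    from optimum.intel import OVModelForCausalLM  # imported lazily: heavy dependency

    model = OVModelForCausalLM.from_pretrained(model_id, export=True)
    model.save_pretrained(out_dir)


def download_all() -> None:
    """Convert every model that is not commented out in MODELS."""
    for model_id in MODELS:
        convert(model_id, output_dir(model_id))
```

Nothing is downloaded until `download_all()` is called, so the list can be edited safely first.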

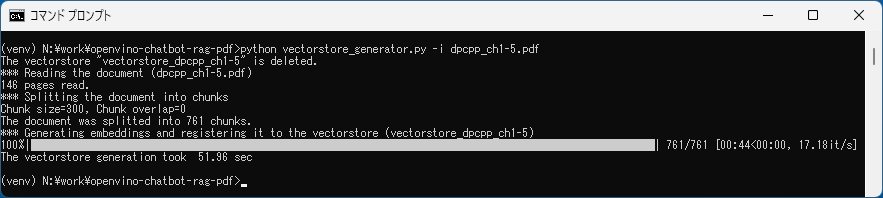

python llm-model-downloader.py

- Preparation: read a PDF file and generate a vectorstore

python vectorstore_generator.py -i input.pdf

A ./vectorstore_{pdf_basename} directory will be created, and the vectorstore data will be stored in it. E.g. ./vectorstore_input.
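The naming rule for the output directory can be expressed as a small helper. This is a sketch: `vectorstore_dir` is a hypothetical function, and it assumes that `{pdf_basename}` means the file name without its extension, as the `./vectorstore_input` example suggests.

```python
from pathlib import Path


def vectorstore_dir(pdf_path: str) -> str:
    """Build the vectorstore directory name from the PDF file name without its extension."""
    return f"./vectorstore_{Path(pdf_path).stem}"


print(vectorstore_dir("input.pdf"))
```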

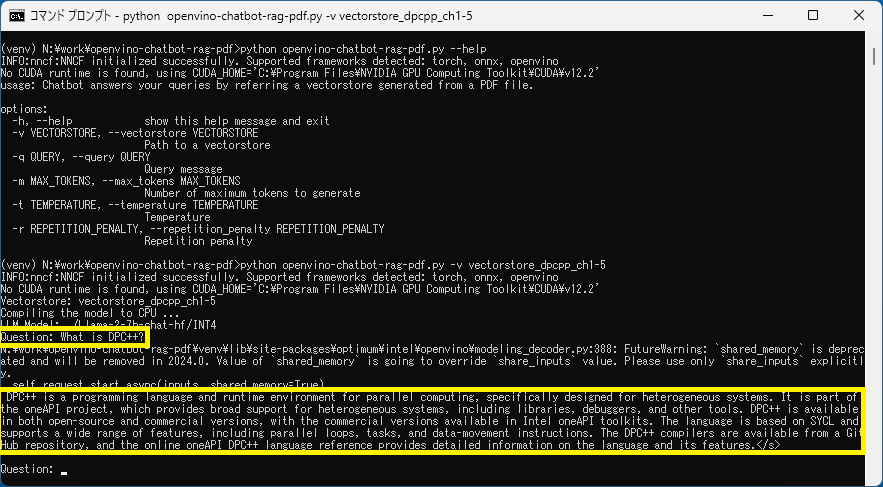

- Run LLM Chatbot

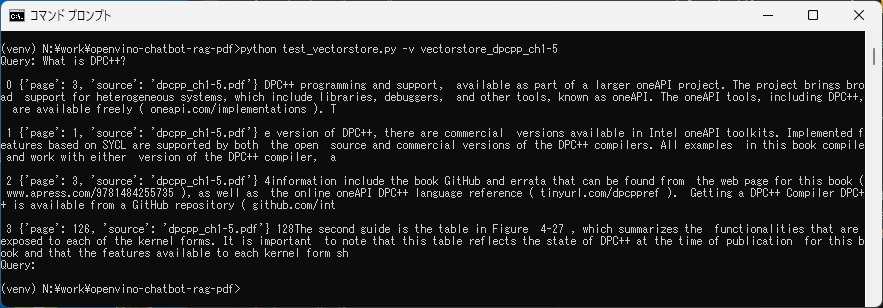

python openvino-chatbot-rag-pdf.py -v vectorstore_input

You can check which fragments of the input document are retrieved from the vectorstore for a given query.

python test_vectorstore.py -v vectorstore_hoge

- Windows 11

- OpenVINO 2023.2.0