
- Overview

- Benefits

- Application architecture

- Getting Started

- Testing the solution

- Azure Monitor queries to extract tokens usage metrics

- Additional Details

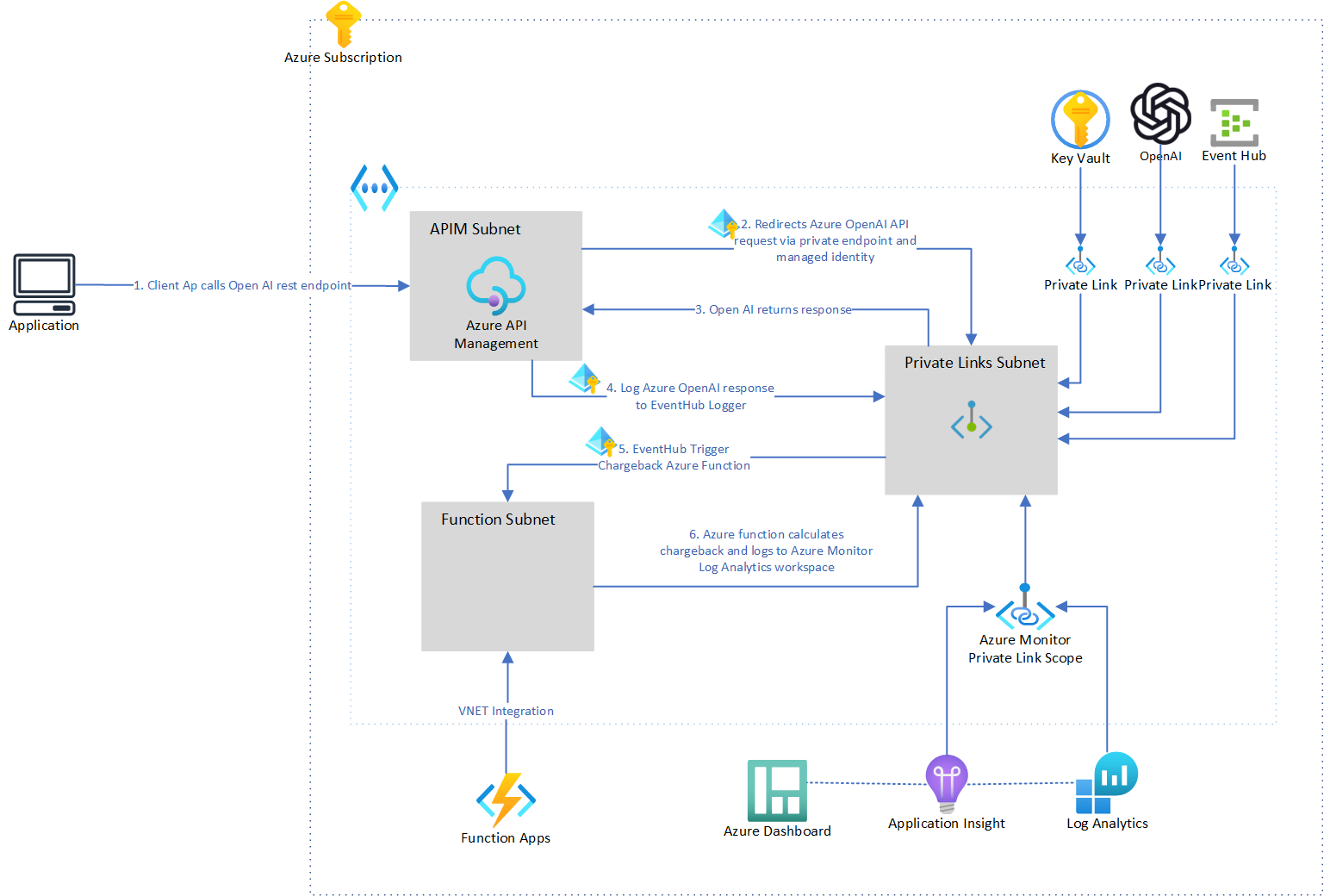

This sample demonstrates hosting an Azure OpenAI (AOAI) instance privately within the customer's Azure tenancy and publishing it through Azure API Management (APIM). APIM plays a key role in monitoring and governing all requests routed to Azure OpenAI. The customer gets full control over who can access AOAI and the ability to charge usage back to AOAI users.

The repo includes:

- Infrastructure as Code (Bicep templates) to provision Azure resources for Azure OpenAI, Azure API Management, Azure Event Hub, and Azure Function App, plus the Azure Private Link configuration.

- A function app that computes token usage across various consumers of the Azure OpenAI service. This implementation utilizes the Tokenizer package and computes token usage for both streaming and non-streaming requests to Azure OpenAI endpoints.

- Test scripts for the OpenAI completions and chat completions endpoints in Azure API Management, using PowerShell or shell scripts.

- Private: Azure OpenAI Private Endpoints keep data transmission off the public internet. By creating a private connection, they offer a secure and effective pathway for transmitting data between your infrastructure and the OpenAI service, reducing the security risks commonly associated with conventional public endpoints. Network traffic can be fully isolated to your network, and other enterprise-grade authentication and security features are built in.

- Centralised and Secure Access: By integrating Azure OpenAI with Azure API Management (APIM) through Private Endpoints, you can secure access to Azure OpenAI, oversee access control, and monitor service usage and health. Access to the Azure OpenAI service is also regulated via API keys, which authenticate callers and capture OpenAI usage.

- Out of the box Token Usage Solution: The solution offers sample code for Azure functions that compute token usage across various consumers of the Azure OpenAI service. This implementation utilizes the Tokenizer package and computes token usage for both streaming and non-streaming requests to Azure OpenAI endpoints.

- Policy as Code: Azure APIM policies configure access control, throttling, logging, and usage limits. The sample uses the APIM log-to-eventhub policy to capture OpenAI requests and responses and send them to the chargeback solution for calculation.

- End-to-end observability for applications: Azure Monitor provides access to application logs via Kusto Query Language and also enables dashboard reports, monitoring, and alerting.

- Larger Token Size: The advanced logging pattern permits capturing an event of up to 200KB, whereas the basic logging pattern accommodates a maximum size of 8,192 bytes. This makes it possible to capture prompts and responses from models that accommodate larger token sizes, such as GPT-4.

- Robust identity controls and comprehensive audit logging: Access to the Azure OpenAI Service resource is limited to Azure Active Directory identities, including service principals and managed identities. This is achieved through the implementation of an Azure API Management custom policy. The logs streaming to the Azure Event Hub capture the identity of the application initiating the request.

- Send token usage data to diverse reporting and analytical tools: Azure APIM sends usage data to an Azure Event Hub. These events can then be processed through integration with Azure Stream Analytics and routed to data stores, including Azure SQL, Azure Cosmos DB, or a Power BI dataset.

- The client application requests the `completions` or `chat completions` endpoints of Azure OpenAI Service in Azure API Management. The client app has to pass a valid subscription key in the request header. Azure APIM validates the subscription key and returns an Unauthorized error response if an invalid subscription key is passed.

- If the request is valid, Azure API Management authenticates with the OpenAI service using managed identity and forwards the request to Azure OpenAI Service via private link.

- Azure OpenAI Service responds back to Azure API Management using private link.

- Azure API Management logs the request and response data to an event hub using the `log-to-eventhub` policy in APIM. APIM authenticates with Event Hub using managed identity and sends the log data via private link.

- The `ChargebackEventHubTrigger` Azure Function is triggered when the message arrives in Event Hub from APIM. It calculates prompt and completion token counts. For streaming requests, it uses the Tiktoken library to calculate tokens. For non-streaming requests, it uses the token count data returned in the response from the OpenAI service.

- The Azure Function logs the token usage data to Application Insights. Once the data is in the Log Analytics workspace for Application Insights, it can be queried to get token counts for the client applications. View sample queries: Token Usage Queries
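The token-counting branch described in the flow above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the function app's actual code: `usage_from_log`, its parameters, and the output field layout are hypothetical, and the whitespace tokenizer is only a stand-in for the Tiktoken encoder the sample uses. The `prompt_tokens` and `completion_tokens` fields mirror the `usage` object that Azure OpenAI returns for non-streaming responses.

```python
from typing import Callable, Dict, List, Optional


def whitespace_tokenizer(text: str) -> List[str]:
    # Stand-in only: real counts come from a BPE tokenizer such as Tiktoken.
    return text.split()


def usage_from_log(
    prompt: str,
    completion: str,
    reported_usage: Optional[Dict[str, int]],
    stream: bool,
    tokenize: Callable[[str], List[str]] = whitespace_tokenizer,
) -> Dict[str, int]:
    """Return prompt, completion, and total token counts for one logged call."""
    if not stream and reported_usage is not None:
        # Non-streaming: reuse the counts reported in the service response.
        prompt_tokens = reported_usage["prompt_tokens"]
        completion_tokens = reported_usage["completion_tokens"]
    else:
        # Streaming: no usage block is assumed to be available,
        # so count tokens from the logged text instead.
        prompt_tokens = len(tokenize(prompt))
        completion_tokens = len(tokenize(completion))
    return {
        "PromptTokens": prompt_tokens,
        "CompletionTokens": completion_tokens,
        "TotalTokens": prompt_tokens + completion_tokens,
    }
```

Passing the tokenizer as a parameter keeps the branch logic separate from the encoder, so a real tokenizer can be swapped in without touching the rest of the function.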

In order to deploy and run this example, you'll need

- Azure account. If you're new to Azure, get an Azure account for free and you'll get some free Azure credits to get started.

- Azure subscription with access enabled for the Azure OpenAI service. You can request access with this form. If your access request to Azure OpenAI service doesn't match the acceptance criteria, you can use OpenAI public API instead. Learn how to switch to an OpenAI instance.

- Azure account permissions: Your Azure account must have `Microsoft.Authorization/roleAssignments/write` permissions, such as Role Based Access Control Administrator, User Access Administrator, or Owner. If you don't have subscription-level permissions, you must be granted RBAC for an existing resource group and deploy to that existing group.

- Download the Azure Developer CLI

- If you have not cloned this repo, run `azd init -t microsoft/enterprise-azureopenai`. If you have cloned this repo, just run `azd init` from the repo root directory.

Execute the following commands if you don't have any pre-existing Azure services and want to start from a fresh deployment.

- Run `azd auth login`

- If your account has multiple subscriptions, run `azd env set AZURE_SUBSCRIPTION_ID {Subscription ID}` to set the subscription you want to deploy to.

- If you would like to give a custom name to the resource group, run `azd env set AZURE_RESOURCE_GROUP {Name of resource group}`. If you don't set this, the resource group will be named `rg-enterprise-openai-${environmentName}`.

- Run `azd up`. This will provision Azure resources and deploy this sample to those resources.

- After the application has been successfully deployed you will see a URL printed to the console. Click that URL to interact with the application in your browser. It will look like the following:

Important: If you are running `azd up` for the first time, it may take up to 45 minutes for the application to be fully deployed, as it needs to wait for Azure API Management to be fully provisioned. Subsequent deployments are a lot faster. You can check the status of the deployment by running `azd status`.

If you already have existing Azure resources, you can re-use those by setting azd environment values.

- [OPTIONAL]: Run `azd env set AZURE_SUBSCRIPTION_ID {Subscription ID}` if your account has multiple subscriptions and you want to deploy to a specific subscription.

- Run `azd env set AZURE_RESOURCE_GROUP {Name of existing resource group}`

- Run `azd env set AZURE_LOCATION {Location of existing resource group}`

If you've only changed the function app code in the app folder, then you don't need to re-provision the Azure resources. You can just run:

`azd deploy`

If you've changed the infrastructure files (infra folder or azure.yaml), then you'll need to re-provision the Azure resources. You can do that by running:

`azd up`

To clean up all the resources created by this sample:

- Run `azd down`

- When asked if you are sure you want to continue, enter `y`

- When asked if you want to permanently delete the resources, enter `y`

The resource group and all the resources will be deleted.

- Update the variables in the test script files with the API Management service name `apimServiceName` and subscription key `apimSubscriptionKey`.

- To test OpenAI endpoints with stream enabled, update the value of the `stream` variable to `true` in the test script files.

Completions endpoint:

- Shell: `./test-scripts/testcompletions.sh`

- PowerShell: `./test-scripts/testcompletions.ps1`

Chat completions endpoint:

- Shell: `./test-scripts/testchatcompletions.sh`

- PowerShell: `./test-scripts/testchatcompletions.ps1`
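For readers who prefer to call the endpoint from code rather than the scripts, the request can be sketched in Python. Everything specific here is an assumption rather than something taken from the test scripts: the default APIM gateway hostname (`<service>.azure-api.net`), the URL path under which the API is published, the `api-version` value, the deployment name, and the use of APIM's default `Ocp-Apim-Subscription-Key` header. Check the scripts in `test-scripts` for the values this sample actually uses.

```python
import json
from typing import Dict, Tuple


def build_chat_request(
    apim_service_name: str,
    subscription_key: str,
    deployment: str,
    stream: bool = False,
    api_version: str = "2023-05-15",  # assumed; use the version your API exposes
) -> Tuple[str, Dict[str, str], bytes]:
    """Build the URL, headers, and JSON body for a chat completions call."""
    # Assumed path: the Azure OpenAI route published unchanged behind APIM.
    url = (
        f"https://{apim_service_name}.azure-api.net"
        f"/openai/deployments/{deployment}/chat/completions"
        f"?api-version={api_version}"
    )
    headers = {
        "Content-Type": "application/json",
        # Assumed header name: APIM's default subscription key header.
        "Ocp-Apim-Subscription-Key": subscription_key,
    }
    body = json.dumps(
        {"messages": [{"role": "user", "content": "Hello"}], "stream": stream}
    ).encode("utf-8")
    return url, headers, body
```

The returned values can be passed to any HTTP client, for example `urllib.request.Request(url, data=body, headers=headers)`. Setting `stream=True` corresponds to setting the `stream` variable to `true` in the test scripts.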

-

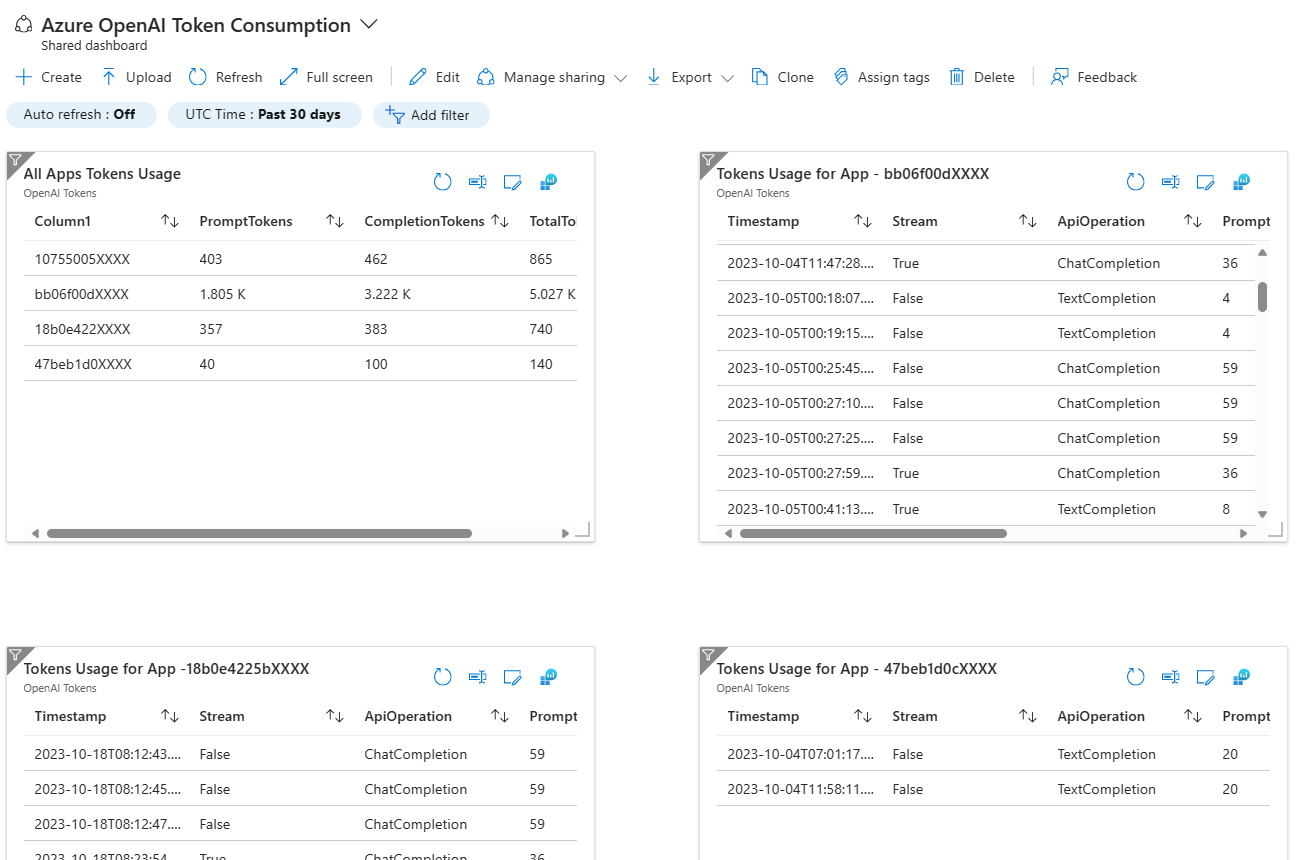

Once the Chargeback function app calculates the prompt and completion tokens per OpenAI request, it logs the information to Azure Log Analytics.

- All custom logs from the function app are logged to a table called `customEvents`.

- Example query to fetch token usage by a specific client key:

      customEvents
      | where name contains "Azure OpenAI Tokens"
      | extend tokenData = parse_json(customDimensions)
      | where tokenData.AppKey contains "your-client-key"
      | project
          Timestamp = tokenData.Timestamp,
          Stream = tokenData.Stream,
          ApiOperation = tokenData.ApiOperation,
          PromptTokens = tokenData.PromptTokens,
          CompletionTokens = tokenData.CompletionTokens,
          TotalTokens = tokenData.TotalTokens

- Example query to fetch token usage for all consumers:

      customEvents
      | where name contains "Azure OpenAI Tokens"
      | extend tokenData = parse_json(customDimensions)
      | extend
          AppKey = tokenData.AppKey,
          PromptTokens = tokenData.PromptTokens,
          CompletionTokens = tokenData.CompletionTokens,
          TotalTokens = tokenData.TotalTokens
      | summarize PromptTokens = sum(toint(PromptTokens)), CompletionTokens = sum(toint(CompletionTokens)), TotalTokens = sum(toint(TotalTokens)) by tostring(AppKey)
      | project strcat(substring(tostring(AppKey),0,8), "XXXX"), PromptTokens, CompletionTokens, TotalTokens

The queries can be visualised using Azure Dashboards.
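If the logged events are exported and post-processed outside Log Analytics, the aggregation in the all-consumers query can be mirrored in a few lines of Python. This is only a sketch: `summarize_usage` and the row layout (dictionaries keyed by `AppKey`, `PromptTokens`, `CompletionTokens`, `TotalTokens`) are hypothetical and simply follow the field names used in the queries.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping

FIELDS = ("PromptTokens", "CompletionTokens", "TotalTokens")


def summarize_usage(rows: Iterable[Mapping[str, object]]) -> List[Dict[str, object]]:
    """Sum token counts per AppKey, then mask each key for display."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: dict.fromkeys(FIELDS, 0))
    for row in rows:
        key = str(row["AppKey"])  # same role as tostring(AppKey) in the query
        for field in FIELDS:
            totals[key][field] += int(row[field])  # same role as toint(...)
    # Keep the first 8 characters of the key and append "XXXX",
    # as the final project step of the query does.
    return [{"AppKey": key[:8] + "XXXX", **counts} for key, counts in totals.items()]
```

Masking happens after grouping, so two keys that share their first eight characters still produce separate rows, as they would in the query.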

Azure OpenAI Swagger Spec is imported in Azure API Management.

Tiktoken library is used to calculate tokens when "stream" is set as "true" to stream back partial progress from GPT models.

If stream is set, partial message deltas are sent. Tokens are sent as data-only server-sent events as they become available, with the stream terminated by a `data: [DONE]` message.
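To make the streaming format concrete, here is a minimal sketch of reassembling the completion text from such a stream before counting tokens. `collect_streamed_text` is a hypothetical helper, not code from this repo; it assumes each event arrives as one `data:` line carrying a JSON chunk, with text in `choices[].delta.content` for chat completions or `choices[].text` for completions.

```python
import json
from typing import Iterable, List


def collect_streamed_text(lines: Iterable[str]) -> str:
    """Concatenate the text fragments of a server-sent event stream."""
    parts: List[str] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and any non-data lines
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # terminator: nothing after this belongs to the response
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}
            # Chat chunks carry delta.content; completions chunks carry text.
            parts.append(delta.get("content") or choice.get("text") or "")
    return "".join(parts)
```

The joined text can then be handed to the tokenizer, since streamed responses are counted from their content rather than from a usage block.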

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.