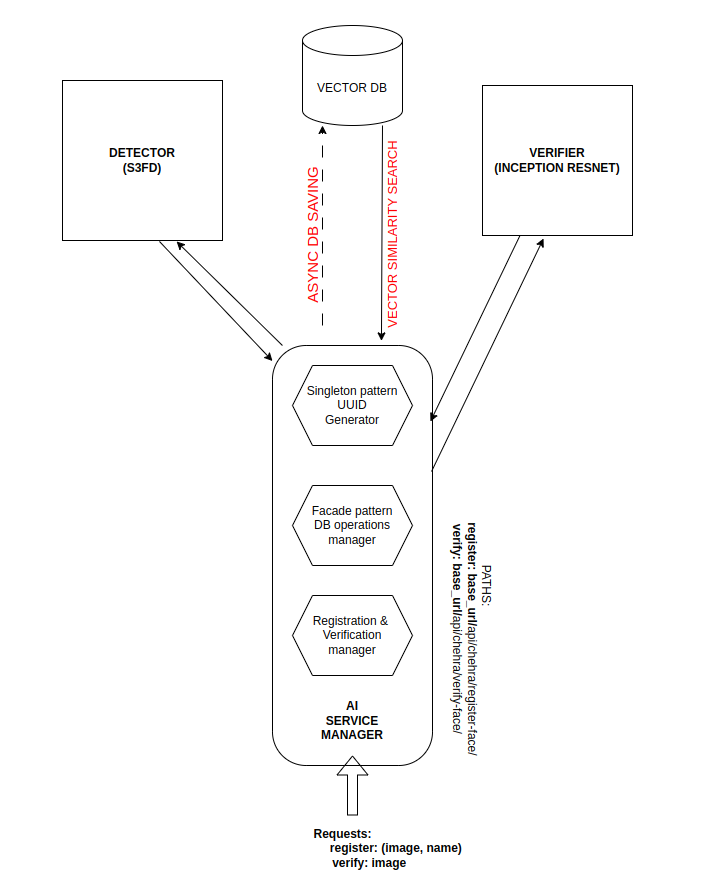

- IRYWAT is a ready-to-deploy server platform: you don't have to worry much about managing traffic and request flow. Just focus on building your AI microservice, and IRYWAT provides the communication layer to it.

- It is container based, so host your models anywhere in the world, bring their IP, and implement your business logic (or any other cool logic) with IRYWAT.

- Here is a recorded video of the APIs in Postman.

- One is the detector API, where we pass an image for registration; the other is the verifier API, where we send an image for verification.
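The platform idea above, a communication layer that forwards work to AI microservices hosted anywhere, can be sketched in a few lines of Python. This is a hypothetical illustration, not IRYWAT's actual code: the class `ServiceRegistry` and its methods are invented names, and the detector and verifier addresses are the local ones used later in this README.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ServiceRegistry:
    """Hypothetical lookup table: service name -> base URL of the AI microservice."""

    services: Dict[str, str] = field(default_factory=dict)

    def register(self, name: str, host: str, port: int) -> None:
        # The model can run on any reachable machine; only its address is stored.
        self.services[name] = f"http://{host}:{port}"

    def predict_url(self, name: str) -> str:
        # Resolve a service name to the endpoint requests would be forwarded to.
        if name not in self.services:
            raise LookupError(f"no AI service registered under {name!r}")
        return f"{self.services[name]}/predict"


registry = ServiceRegistry()
registry.register("detector", "127.0.0.1", 5001)  # used for registration
registry.register("verifier", "127.0.0.1", 4001)  # used for verification
print(registry.predict_url("detector"))  # http://127.0.0.1:5001/predict
```

Keeping only an address per service is what lets the models live on any host: moving a model means re-registering one URL.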

- These are the steps required to install the project.

- I built this project on Ubuntu and haven't tried installing it on other operating systems; on those, go with the Docker installation.

- Docker

- Python >= 3.8

This is a critical step, as the whole project depends on this DB installation:

    docker network create -d bridge network1
    docker pull ankane/pgvector
    docker run --name pgvector-db --rm -e POSTGRES_PASSWORD=test@123 -p 5432:5432 --network network1 ankane/pgvector

Your DB image is now pulled and the container is up and running with username `postgres` and password `test@123` (the `docker run` command above stays in the foreground, so use a new terminal for the next steps). Now you need to configure it.
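Because the container needs a few seconds to start, it can help to wait until Postgres actually accepts TCP connections on port 5432 before running the configuration script. The following standard-library Python sketch does that; the helper name `wait_for_port` and its default timeout are illustrative assumptions, not part of the project.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("127.0.0.1", 5432)` returns `True` as soon as the `pgvector-db` container is listening, and `False` if it never comes up within 30 seconds.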

Go to `shell_scripts/db_setup/` and run the bash script `configure_db.sh`:

    chmod +x ./configure_db.sh
    ./configure_db.sh

Congratulations! Your DB is ready. You can also connect it to pgAdmin to run custom queries.
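pgvector accepts vectors in the text form `'[x1,x2,...]'`, and its `<=>` operator returns cosine distance, so a nearest-neighbour lookup is an `ORDER BY ... LIMIT` query. The sketch below builds such a query to paste into pgAdmin. The table `faces` and the columns `name` and `embedding` are hypothetical; check `configure_db.sh` for the schema the project actually creates.

```python
from typing import Sequence


def to_pgvector_literal(embedding: Sequence[float]) -> str:
    """Format an embedding in pgvector's text input format, e.g. '[0.5,1.0]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"


def nearest_face_query(embedding: Sequence[float], limit: int = 1) -> str:
    # Hypothetical schema: faces(name text, embedding vector(N)).
    # `<=>` is pgvector's cosine-distance operator; smaller means more similar.
    # Values are forced through float()/int(), so only numbers reach the SQL text;
    # in application code, prefer passing the literal as a bound parameter.
    literal = to_pgvector_literal(embedding)
    return (
        "SELECT name, embedding <=> '" + literal + "' AS distance "
        "FROM faces ORDER BY distance LIMIT " + str(int(limit)) + ";"
    )


print(nearest_face_query([0.5, 1, 0.25]))
```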

Now, from the repository root, run one magic command to start the server:

    docker-compose up

If you want to run only this application (not the detector and verifier) in a Docker container, first use ngrok (for example `ngrok tcp 5432`) to expose port 5432, on which your Postgres vector DB is running, to the internet. Then build an image from the Dockerfile and run the container with the following commands, where `<image-name>` is, for example, `service_manager`:

    docker build -t <image-name> .
    docker run -p 5050:5050 --rm --name <container-name> -e NGROK_PORT=<ngrok-port> <image-name>
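Inside the container, the application has to reach the Postgres instance that ngrok exposes. The sketch below shows one way a settings module could turn `NGROK_PORT` into a connection URL. It is hypothetical: `NGROK_HOST` is not a variable the project defines, its default `0.tcp.ngrok.io` is an assumption (use the address ngrok prints for your tunnel), and the database name `postgres` is assumed to be the image's default. The user and password are the ones from the DB setup step.

```python
import os
from typing import Mapping, Optional
from urllib.parse import quote

DB_USER = "postgres"      # from the DB setup step
DB_PASSWORD = "test@123"  # from the DB setup step
DB_NAME = "postgres"      # assumption: the image's default database


def database_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build a Postgres URL for the pgvector DB exposed through an ngrok TCP tunnel."""
    env = os.environ if env is None else env
    port = env.get("NGROK_PORT")
    if not port:
        raise RuntimeError("NGROK_PORT is not set; pass it with `docker run -e NGROK_PORT=...`")
    # Hypothetical variable and default; the project may hard-code the host instead.
    host = env.get("NGROK_HOST", "0.tcp.ngrok.io")
    # '@' in the password must be percent-encoded inside a URL.
    password = quote(DB_PASSWORD, safe="")
    return f"postgresql://{DB_USER}:{password}@{host}:{int(port)}/{DB_NAME}"
```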

That's it! Now you can hit the detector and verifier APIs and get a feel for this powerful system.

For a local setup, clone the repo:

    git clone https://github.com/yashtiwari1906/AI-Service-Manager.git

Move into the project directory:

    cd AI-Service-Manager

Install the dependencies:

    pip install -r requirements.txt

In another terminal, go to `prequisites/detector_verifier_servers/` and run the following command to start the detector and verifier servers:

    docker-compose up

Your detector is now up at `127.0.0.1:5001/predict` and your verifier at `127.0.0.1:4001/predict`. To test each of them individually, you can use this dummy payload: https://drive.google.com/file/d/1-5jFNU1p6IkZnRuUXuf0hpqCTLyRIe8L/view?usp=sharing
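To check a model server individually from Python instead of Postman, a request can be prepared with the standard library as below. This assumes the servers accept a JSON body via POST, which is a guess; mirror the structure of the dummy payload. The helper `build_predict_request` is hypothetical.

```python
import json
import urllib.request

# Local ports of the two model servers started by docker-compose.
PORTS = {"detector": 5001, "verifier": 4001}


def build_predict_request(service: str, payload: dict, host: str = "127.0.0.1") -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST to the service's /predict endpoint."""
    url = f"http://{host}:{PORTS[service]}/predict"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


# To actually send it (requires the servers to be running):
# with urllib.request.urlopen(build_predict_request("detector", payload)) as resp:
#     print(json.load(resp))
```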

Now go back to the main terminal and export the environment variable `ENV`:

    export ENV=local

Then start the main server:

    python manage.py runserver

Now you can try it out with the Postman collection attached to this repo: under the `ai_service_manager` folder, use the registration folder to register a face and the verification folder to verify a face.

- You can find the Postman collection, with the requests and responses documented, at https://drive.google.com/file/d/15sDPYq4CAaT1pdMViJRhbQXqHPOOjOwV/view?usp=sharing

- For general reference:

- If you run the system locally, the address of this server is `127.0.0.1:8000`; otherwise it is `0.0.0.0:5050`.

Two APIs are currently exposed by this system:

- Registration:

- {ip}/api/chehra/register-face/

- Provide an image file in the request along with the name of the face you want to register.

- Verification:

- {ip}/api/chehra/verify-face/

- Here you only need to provide an image.
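Both endpoints take an uploaded image, so they are normally called with a `multipart/form-data` POST. The standard-library sketch below prepares such requests. The form-field names `image` and `name`, and the use of POST, are assumptions; check the Postman collection for the exact keys. The base address follows the rule above: `127.0.0.1:8000` for a local run, `0.0.0.0:5050` otherwise.

```python
from __future__ import annotations

import urllib.request
import uuid


def base_url(local: bool) -> str:
    # Addresses from this README: `runserver` locally, port 5050 otherwise.
    return "http://127.0.0.1:8000" if local else "http://0.0.0.0:5050"


def encode_multipart(fields: dict[str, str], files: dict[str, tuple[str, bytes, str]]) -> tuple[bytes, str]:
    """Encode text fields and (filename, content, content_type) files as multipart/form-data."""
    boundary = uuid.uuid4().hex
    chunks = []
    for key, value in fields.items():
        chunks.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{key}"\r\n\r\n{value}\r\n'.encode()
        )
    for key, (filename, content, content_type) in files.items():
        header = (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{key}"; filename="{filename}"\r\n'
            f"Content-Type: {content_type}\r\n\r\n"
        )
        chunks.append(header.encode() + content + b"\r\n")
    chunks.append(f"--{boundary}--\r\n".encode())
    return b"".join(chunks), f"multipart/form-data; boundary={boundary}"


def face_request(base: str, image: bytes, name: str | None = None) -> urllib.request.Request:
    """Prepare a registration request if `name` is given, else a verification request."""
    if name is not None:
        path, fields = "/api/chehra/register-face/", {"name": name}  # assumed field name
    else:
        path, fields = "/api/chehra/verify-face/", {}
    files = {"image": ("face.jpg", image, "image/jpeg")}  # assumed field name
    body, content_type = encode_multipart(fields, files)
    return urllib.request.Request(
        base + path, data=body, headers={"Content-Type": content_type}, method="POST"
    )
```

Send a prepared request with `urllib.request.urlopen(req)`. If the server expects different keys, only the `fields` and `files` dictionaries need to change.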

# Contributing

When contributing to this repository, please first discuss the change you wish to make via issue, email, or any other method with the owners of this repository before making a change.

- Check for dependency-related issues and use Docker containers to run the microservices used in this project.

- Update the README.md with details of changes to the interface, this includes new environment variables, exposed ports, useful file locations and container parameters.

- Increase the version numbers in any example files and the README.md to the new version that this Pull Request would represent. The versioning scheme we use is SemVer.

- You may merge the Pull Request once you have the sign-off of two other developers, or, if you do not have permission to do that, you may ask the second reviewer to merge it for you.

Choose an Issue

- Pick an issue that interests you. If you're new, look for `good-first-issue` tags.

- Read the CONTRIBUTING.md file.

- Comment on the issue and explain why you want to work on it. You can share any relevant background that shows why you can solve it.

Set Up Your Environment

- Fork our repository to your GitHub account.

- Clone your fork to your local machine with `git clone <your-fork-url>`.

- Create a new branch for your work. Use a descriptive name, like `fix-login-bug` or `add-user-profile-page`.

Commit Your Changes

- Commit your changes with a clear commit message, e.g. `git commit -m "Fix login bug by updating auth logic"`.

Submit a Pull Request

- Push your branch and changes to your fork on GitHub.

- Create a pull request, compare branches, and submit it.

- Provide a detailed description of what changes you've made and why. Link the pull request to the issue it resolves. 🔗

Review and Merge

- Our team will review your pull request and provide feedback or request changes if necessary.

- Once your pull request is approved, we will merge it into the main codebase 🥳

- You can find the Kubernetes deployment files for the project in the deployment folder.

- Install minikube first, then deploy every deployment file to it with `kubectl apply -f <file_name.yaml>`.

- Then run `minikube ip`. That gives your IP, and `31111` will be your port, which is the NodePort of `service-manager-service`. So the URL in a cluster-based environment will be `http://<minikube_ip>:31111`.

- One thing to remember: when you're establishing communication between multiple pods, make sure you call the Service's `port`, not its `targetPort` or `containerPort`.

- In a GKE cluster, you have to deploy these `NodePort` services as `LoadBalancer` services so that you get an external IP, and you call it through `port`, not `targetPort` or `nodePort`.
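The `port` / `targetPort` / `nodePort` distinction is easy to mix up, so here is a small Python sketch that derives each URL from a Service object shaped like Kubernetes' JSON: other pods call `<service-name>:<port>`, clients outside minikube call `<minikube ip>:<nodePort>`, and on GKE the external IP appears under `status.loadBalancer.ingress`. The helper names are hypothetical, and the `port`/`targetPort` value 5050 is an assumption (the port the container listens on in the `docker run` example); only `nodePort` 31111 and the name `service-manager-service` come from this README.

```python
import json
import subprocess
from typing import Optional


def minikube_ip() -> str:
    # Same as typing `minikube ip` in a shell.
    result = subprocess.run(["minikube", "ip"], check=True, capture_output=True, text=True)
    return result.stdout.strip()


def fetch_service(name: str) -> dict:
    # Same as `kubectl get service <name> -o json`.
    result = subprocess.run(
        ["kubectl", "get", "service", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def in_cluster_url(service: dict) -> str:
    """URL for other pods in the same namespace: Service name + `port` (not targetPort)."""
    port = service["spec"]["ports"][0]["port"]
    return f"http://{service['metadata']['name']}:{port}"


def node_port_url(node_ip: str, service: dict) -> str:
    """URL from outside minikube: node IP + the Service's nodePort."""
    return f"http://{node_ip}:{service['spec']['ports'][0]['nodePort']}"


def load_balancer_url(service: dict) -> Optional[str]:
    """URL on GKE: external IP + `port`, or None while the IP is still pending."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None
    address = ingress[0].get("ip") or ingress[0].get("hostname")
    return f"http://{address}:{service['spec']['ports'][0]['port']}"


# Illustrative Service object; port/targetPort 5050 are assumptions, nodePort 31111 is from the README.
service_manager = {
    "metadata": {"name": "service-manager-service"},
    "spec": {"type": "NodePort", "ports": [{"port": 5050, "targetPort": 5050, "nodePort": 31111}]},
}
```

On minikube, `node_port_url(minikube_ip(), service_manager)` gives `http://<minikube_ip>:31111`; on GKE, `load_balancer_url(fetch_service("service-manager-service"))` returns `None` until the external IP has been assigned.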

Version: 0

- Introduction of the concept

- AI Service Manager is born: a connector for all the AI microservices

- Docker Compose for instant replication of the server on a machine

- Key features

- The Singleton pattern is used to generate a UUID for each request

- The Builder pattern is used in the detector and verifier microservices

- 4-5 s latency in the first draft

As for the APIs, there are just two: one for detection and storing the identity, and another for verification.
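The key features mention a Singleton that generates the UUID attached to each request. The project's class isn't shown here, so the following is only a sketch of that pattern in Python: every instantiation returns the same object, and that object hands out a fresh `uuid4` per request. The class name `RequestIdGenerator` is hypothetical.

```python
import threading
import uuid


class RequestIdGenerator:
    """Singleton that issues a unique ID for every incoming request."""

    _instance = None
    _lock = threading.Lock()

    def __new__(cls):
        # Double-checked locking so concurrent first calls still create one instance.
        if cls._instance is None:
            with cls._lock:
                if cls._instance is None:
                    cls._instance = super().__new__(cls)
        return cls._instance

    def new_request_id(self) -> str:
        # uuid4 is random, so IDs are unique without any shared counter.
        return str(uuid.uuid4())
```

Since `uuid4` needs no shared state, the singleton's main benefit is a single place to change the ID scheme later (for example, to add a prefix or switch to time-ordered IDs).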

Quick Questions

- DB setup is currently required for the project to work.

- docker-compose.yml is enough to get this project up and running.

- But you need to create a Docker network, because the database runs as a container.

- We'll try to remove this dependency in the future.

Copyright (c) 2023 Yash

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

- I invite all fellow Machine Learning Engineers to come and build this amazing project for the community, so that people don't have to worry about a production-level server whenever they plan to launch an AI product.

- email: yashtiwari.engineer@gmail.com

- website: https://theyashtiwari.com