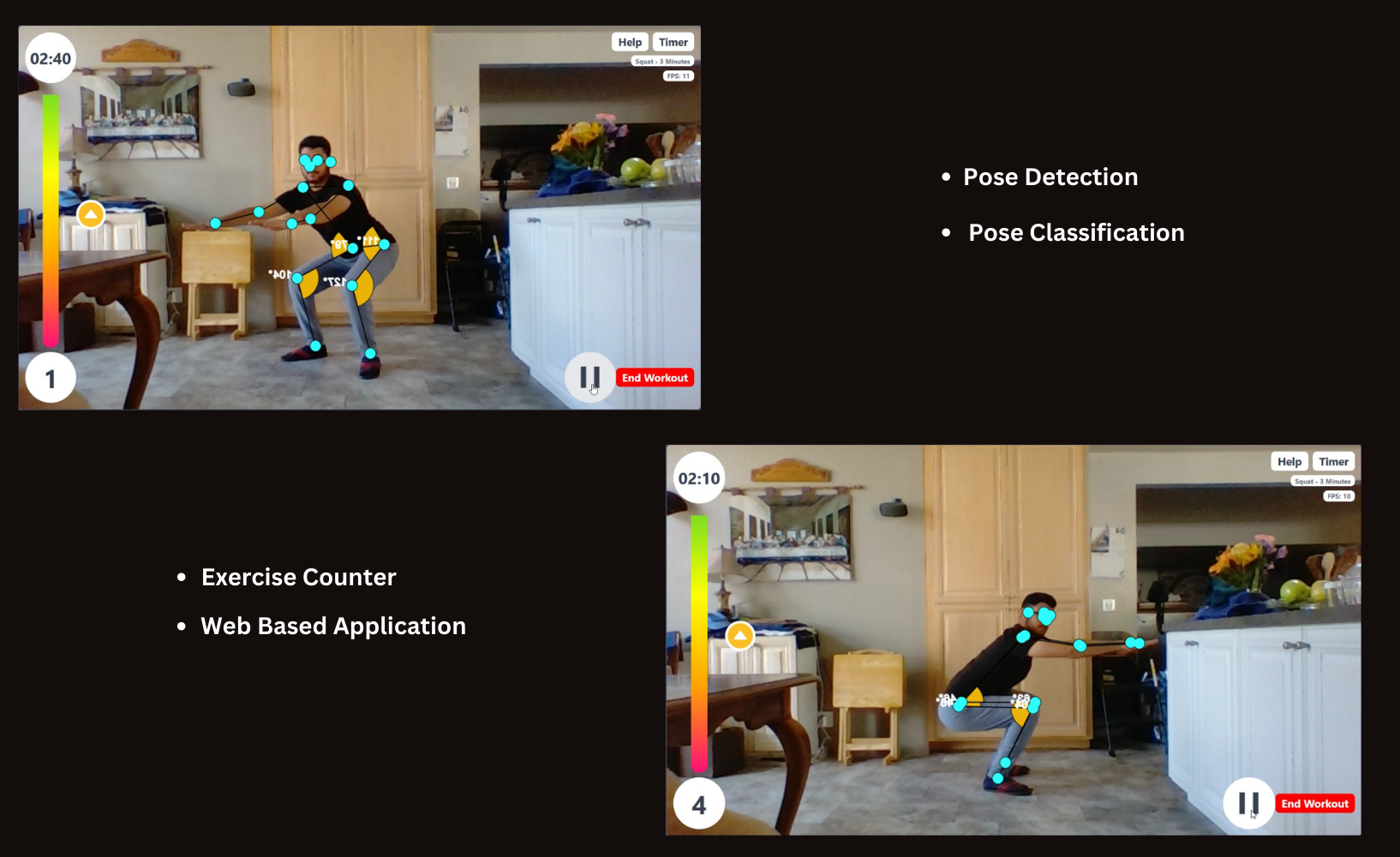

Introducing our web application, which gives you access to workouts anytime, anywhere. Exercise with confidence while the app automatically counts and tracks your repetitions using pose detection. Adopting a healthy lifestyle and fitting daily exercise into your schedule has never been simpler. Start your fitness journey today and enjoy the convenience of working out with our web application. Let's put our health first and live healthier lives by exercising regularly.

The AI Workout Assistant implements pose detection and classification entirely on the client side, so no image data is transmitted from the user's device. Once the analysis is complete, the application automatically discards any videos or photos it processed. Keeping all sensitive data on the user's device limits the risk of unauthorized access or data breaches and protects user privacy.

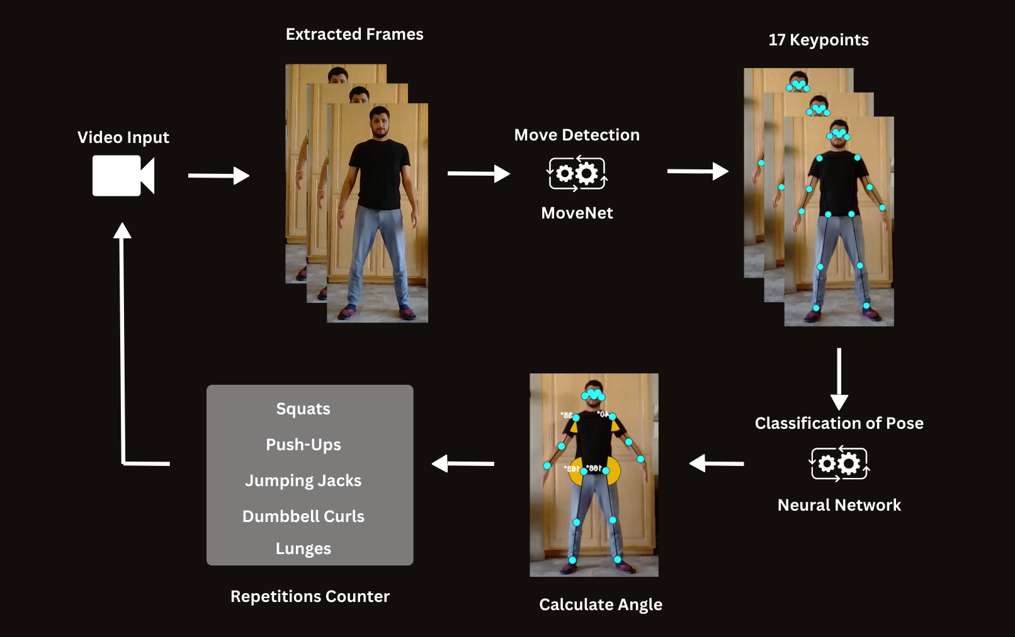

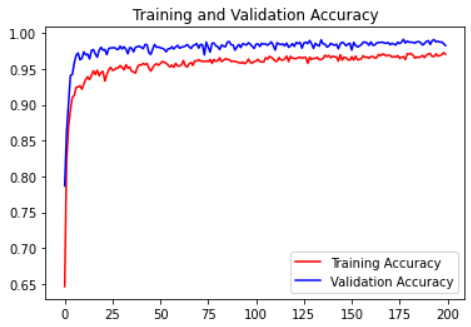

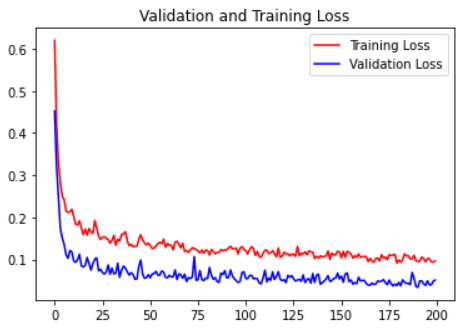

The AI Workout Assistant processes image data from uploaded videos or a webcam using a pose detector powered by the MoveNet model. The pose detector produces keypoints, which serve as input for classifying the type of workout and are essential for counting repetitions. A Dense Neural Network (DNN) model then receives these keypoints and performs the classification. All image processing, including pose detection and classification, takes place locally on the user's device, protecting the confidentiality and privacy of user data.
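To make the keypoints-to-classifier step concrete, here is a minimal sketch of what a dense network computes for one frame. It assumes, hypothetically, that each keypoint's x, y, and confidence score are flattened into a single feature vector (MoveNet's single-pose models return 17 keypoints, which would give 51 values) and that the network has one ReLU hidden layer followed by one sigmoid output. The `Keypoint`, `DenseLayer`, `toFeatureVector`, and `classify` names are illustrative and are not taken from this repository.

```typescript
// Simplified keypoint shape (assumption: x, y, and a confidence score per keypoint).
interface Keypoint {
  x: number;
  y: number;
  score: number;
}

// One fully connected layer: weights[j][i] connects input i to output unit j.
interface DenseLayer {
  weights: number[][];
  bias: number[];
}

// Flatten keypoints into [x0, y0, s0, x1, y1, s1, ...].
function toFeatureVector(keypoints: Keypoint[]): number[] {
  const features: number[] = [];
  for (const k of keypoints) {
    features.push(k.x, k.y, k.score);
  }
  return features;
}

// Apply one dense layer: activation(W · input + b) for every output unit.
function dense(layer: DenseLayer, input: number[], activation: (v: number) => number): number[] {
  return layer.weights.map((row, j) => {
    if (row.length !== input.length) {
      throw new Error(`expected ${row.length} inputs, got ${input.length}`);
    }
    const z = row.reduce((sum, w, i) => sum + w * input[i], layer.bias[j]);
    return activation(z);
  });
}

const relu = (v: number): number => Math.max(0, v);
const sigmoid = (v: number): number => 1 / (1 + Math.exp(-v));

// Hidden ReLU layer, then a single sigmoid unit giving P(exercise | keypoints).
function classify(keypoints: Keypoint[], hidden: DenseLayer, output: DenseLayer): number {
  const h = dense(hidden, toFeatureVector(keypoints), relu);
  return dense(output, h, sigmoid)[0];
}
```

In the app itself the trained weights would come from the exported tfjs model (for example loaded with `tf.loadLayersModel` and run with `predict`); the hand-written version above only shows the arithmetic each dense layer performs. A probability above a chosen threshold, such as 0.5, would be read as the positive class.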

The model uses binary classification to determine whether the user is performing a given exercise, for example, whether a pose is a push-up or not. To train it, choose any video showing a full-body push-up for the positive class, and any video of a single person dancing for the negative class. Upload these videos using the advanced settings in the assistance section; you will receive a CSV file for each upload. Once you have gathered both positive and negative data, open this and follow the instructions. The trained model will be provided in tfjs (TensorFlow.js) format.
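Before training, the positive and negative CSV files have to be combined into one labeled dataset. The sketch below assumes each non-empty CSV line holds one frame's numeric features, comma-separated without quoting, optionally preceded by a header row; the actual column layout of the exported files may differ, and `parseFeatureCsv` and `buildDataset` are hypothetical helpers.

```typescript
// One labeled training example: a row of keypoint features plus a 0/1 class label.
interface Sample {
  features: number[];
  label: 0 | 1;
}

// Parse CSV text into numeric rows. Assumes one frame per non-empty line and
// purely numeric, comma-separated cells (no quoting), optionally after a header line.
function parseFeatureCsv(csv: string, hasHeader: boolean): number[][] {
  const lines = csv.split(/\r?\n/).filter((line) => line.trim() !== "");
  const dataLines = hasHeader ? lines.slice(1) : lines;
  return dataLines.map((line, row) =>
    line.split(",").map((cell) => {
      const value = Number(cell.trim());
      if (cell.trim() === "" || !isFinite(value)) {
        throw new Error(`data row ${row + 1}: non-numeric cell "${cell}"`);
      }
      return value;
    })
  );
}

// Merge positive (label 1, e.g. push-up) and negative (label 0, e.g. dancing) CSVs
// into one dataset, checking that every row has the same number of features.
function buildDataset(positiveCsv: string, negativeCsv: string, hasHeader: boolean): Sample[] {
  const positives = parseFeatureCsv(positiveCsv, hasHeader).map(
    (features): Sample => ({ features, label: 1 })
  );
  const negatives = parseFeatureCsv(negativeCsv, hasHeader).map(
    (features): Sample => ({ features, label: 0 })
  );
  const samples = positives.concat(negatives);
  if (samples.length > 0) {
    const width = samples[0].features.length;
    for (const s of samples) {
      if (s.features.length !== width) {
        throw new Error(`inconsistent row width: ${s.features.length} vs ${width}`);
      }
    }
  }
  return samples;
}
```

Because all negatives end up after the positives, shuffle the samples before any train/validation split so that both classes appear in each part.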

- Prerequisites: you'll need Git, Node.js, and npm installed on your machine.

- Open a terminal (PowerShell or Command Prompt on Windows), then clone this repository:

```shell
git clone https://github.com/yashwanth6/295A_HPE.git
cd 295A_HPE
```

- For the backend:

```shell
cd Backend
node index.js
```

- In a second terminal (the backend keeps running in the first), go from the repository root to the Frontend directory and install the dependencies:

```shell
cd Frontend
npm install
```

- If you see an error like this:

```
npm ERR! code ERESOLVE
npm ERR! ERESOLVE could not resolve
npm ERR!
npm ERR! While resolving: @tensorflow-models/pose-detection@2.0.0
npm ERR! Found: @mediapipe/pose@0.5.1635988162
npm ERR! node_modules/@mediapipe/pose
npm ERR!   @mediapipe/pose@"^0.5.1635988162" from the root project
```

- Apply this setting, then run `npm install` again:

```shell
npm config set legacy-peer-deps true
```


- From the Frontend directory, run this command to start the app locally:

```shell
npm run start-dev
```

- Then open http://18.191.166.16:8080 to see your app.

- 3D angle calculation is not implemented yet.

- Plan: investigate lightweight models that can generate 3D keypoints, such as BlazePose, MoVNect, LHPE-nets, or other models.
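Once 3D keypoints are available, the joint angle itself is straightforward to compute. Below is a minimal sketch, assuming each keypoint is a hypothetical `Point3D` with x, y, and z in the same units; `jointAngleDegrees` returns the angle at joint b (for example the elbow) between the segments b→a and b→c, using θ = arccos(u·v / (|u||v|)).

```typescript
// Hypothetical 3D keypoint with all coordinates in the same units.
interface Point3D {
  x: number;
  y: number;
  z: number;
}

// Angle in degrees at joint b, between the vectors b->a and b->c.
function jointAngleDegrees(a: Point3D, b: Point3D, c: Point3D): number {
  const u = { x: a.x - b.x, y: a.y - b.y, z: a.z - b.z };
  const v = { x: c.x - b.x, y: c.y - b.y, z: c.z - b.z };
  const dot = u.x * v.x + u.y * v.y + u.z * v.z;
  const norms =
    Math.sqrt(u.x * u.x + u.y * u.y + u.z * u.z) *
    Math.sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
  if (norms === 0) {
    throw new Error("degenerate angle: a keypoint coincides with the joint");
  }
  // Clamp to [-1, 1] so floating-point error cannot push acos out of its domain.
  const cos = Math.min(1, Math.max(-1, dot / norms));
  return (Math.acos(cos) * 180) / Math.PI;
}
```

MoveNet's 2D keypoints could be passed with `z: 0`, but the result is then an angle in the image plane that changes with the camera viewpoint (a limb pointing toward the camera can even collapse onto the joint), which is the limitation that motivates the 3D models listed above.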

- The frame rate (FPS) drops when processing high-resolution videos.

- You can observe this by uploading videos at high and low resolutions and comparing the frames per second.
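One way to make that comparison quantitative is a rolling FPS estimate over the most recent frames. This is a minimal sketch; `FpsMeter` is a hypothetical helper, and the window size of 30 frames is an arbitrary assumption.

```typescript
// Rolling frames-per-second estimate over the most recent `windowSize` frames.
class FpsMeter {
  private readonly timestamps: number[] = [];
  private readonly windowSize: number;

  constructor(windowSize = 30) {
    if (windowSize < 2) {
      throw new Error("windowSize must be at least 2");
    }
    this.windowSize = windowSize;
  }

  // Record one processed frame at time nowMs (e.g. performance.now()) and return the estimate.
  tick(nowMs: number): number {
    this.timestamps.push(nowMs);
    if (this.timestamps.length > this.windowSize) {
      this.timestamps.shift(); // drop the oldest timestamp
    }
    return this.fps();
  }

  // Frames per second across the window: number of frame intervals / elapsed seconds.
  fps(): number {
    const n = this.timestamps.length;
    if (n < 2) return 0;
    const elapsedMs = this.timestamps[n - 1] - this.timestamps[0];
    return elapsedMs > 0 ? ((n - 1) * 1000) / elapsedMs : 0;
  }
}
```

Calling `meter.tick(performance.now())` once per processed frame (for example, right after each pose-estimation call) and displaying the value would show the difference between high- and low-resolution uploads directly; a window of recent frames smooths out single slow frames without hiding a sustained slowdown.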

- Pose Detection with TFJS

- MoveNet Documentation

- MoveNet in TFHub

- Pose Classification

- Other Models for 3D angle

- Original video used in training and testing

- Write unit tests

- Add audio feedback when an exercise is performed incorrectly

- Create a better dataset to train our model, using data augmentation techniques

- Generate activity-tracking graphs on each user's profile

- Calculate calories burned and suggest a diet plan