- Java.

- Download a stable version of Hadoop from Apache mirrors.

- A Red Hat Linux system or any other Linux distribution.

- Oracle VM VirtualBox

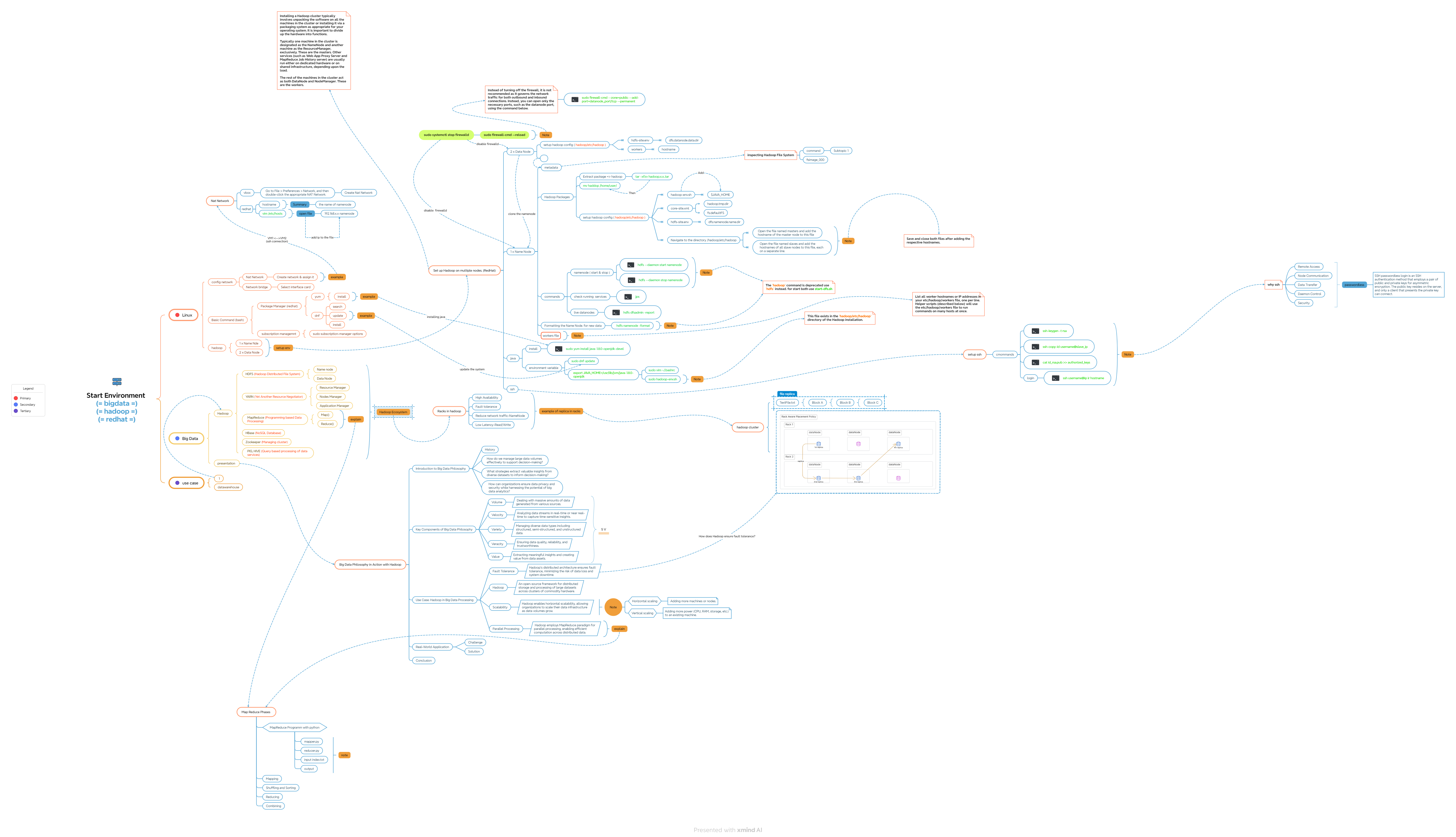

Installing a Hadoop cluster typically involves unpacking the software on all the machines in the cluster or installing it via a packaging system as appropriate for your operating system. It is important to divide up the hardware into functions.

Typically one machine in the cluster is designated as the NameNode and another machine as the ResourceManager, exclusively. These are the masters. Other services (such as Web App Proxy Server and MapReduce Job History server) are usually run either on dedicated hardware or on shared infrastructure, depending upon the load.

The rest of the machines in the cluster act as both DataNode and NodeManager. These are the workers.

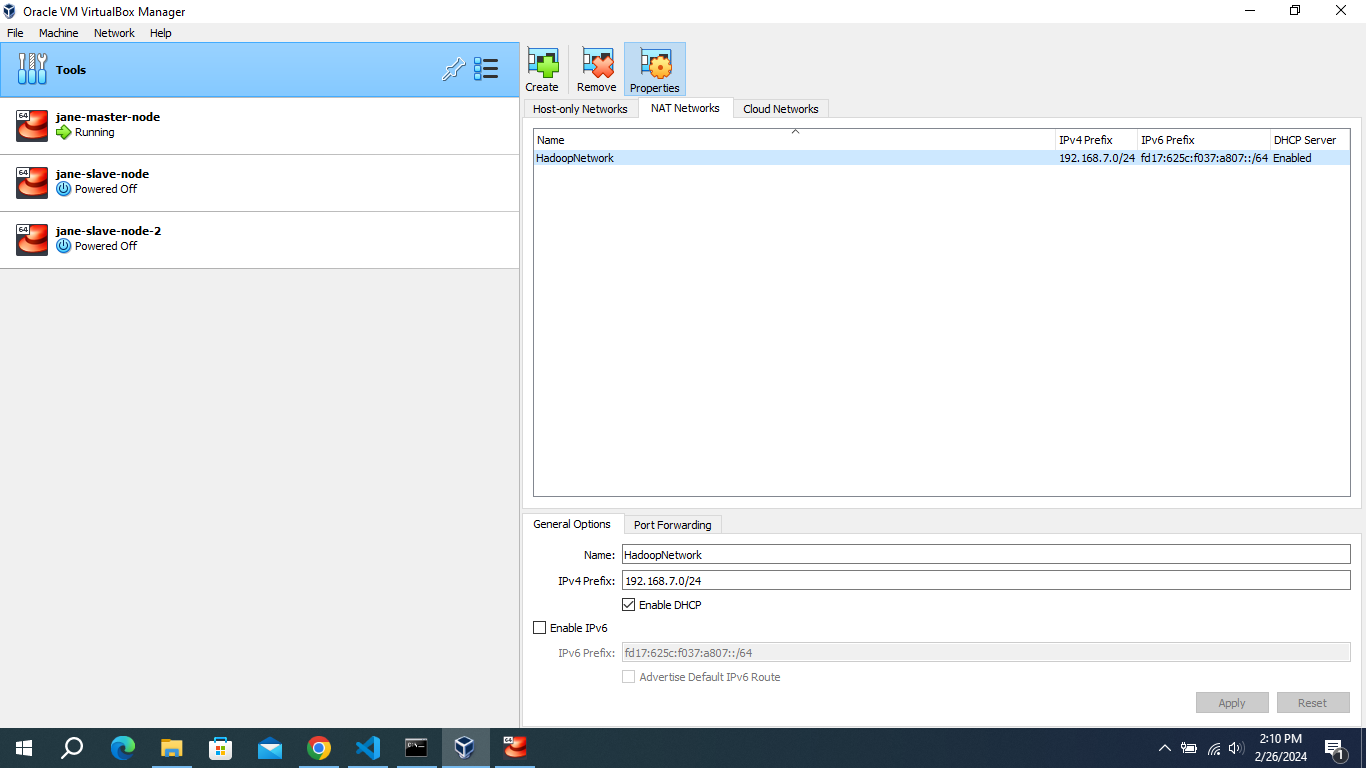

In VirtualBox, open Tools > Preferences > Network, and then double-click the appropriate NAT Network.

- Select the NAT network option in VirtualBox.

- Create a new NAT network if one doesn't exist already.

- Configure the network with the following example IP address: `192.168.7.0`.

- Enable DHCP for the network.

- Click on "Apply" to save the changes.
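Once the VMs have addresses, a quick sanity check is to confirm that each one falls inside the NAT network. The sketch below uses Python's standard `ipaddress` module with the example host addresses used later in this guide. The `/24` prefix length is an assumption (the guide only gives `192.168.7.0`), and `hosts_outside_network` is a hypothetical helper name.

```python
import ipaddress

# Assumption: the NAT network uses a /24 prefix; adjust to match your VirtualBox settings.
NETWORK = ipaddress.ip_network("192.168.7.0/24")

# Example hostnames and addresses from this guide.
HOSTS = {
    "namenode1": "192.168.7.4",
    "datanode1": "192.168.7.6",
    "datanode2": "192.168.7.7",
}


def hosts_outside_network(hosts, network):
    """Return the sorted names of hosts whose address is not inside the network."""
    return sorted(
        name
        for name, address in hosts.items()
        if ipaddress.ip_address(address) not in network
    )


outside = hosts_outside_network(HOSTS, NETWORK)
print("Hosts outside the NAT network:", outside)
```

An empty list means every VM address belongs to the configured network.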

First, make sure Vim is installed on your system. For example, on Red Hat you can list the available Vim packages with:

sudo dnf search vim-

- Use the command `ifconfig` in the terminal of each VM to obtain its IP address.

- Open the hosts file using the command below.

sudo vi /etc/hosts

- Verify that these IP addresses are correct.

- Put these IP addresses in the `hosts` file as listed below.

192.168.7.4 namenode1

192.168.7.6 datanode1

192.168.7.7 datanode2

Once you have completed these steps, proceed to the next step by configuring the hostname file. To do so, open the file located at /etc/hostname:

sudo vi /etc/hostname

Then, add the specified hostname, for example, namenode1:

namenode1

If 'localhost' or any other entry is present in the file, remove it and replace it with the specified hostname.

Hadoop’s Java configuration is driven by two types of important configuration files:

- Read-only default configuration: core-default.xml, hdfs-default.xml, yarn-default.xml and mapred-default.xml.

- Site-specific configuration: etc/hadoop/core-site.xml, etc/hadoop/hdfs-site.xml, etc/hadoop/yarn-site.xml and etc/hadoop/mapred-site.xml.
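The relationship between the two kinds of files can be pictured as an overlay: values in a site file replace the matching defaults, and everything else keeps its default. The following is a simplified sketch of that idea, not Hadoop's actual loader (which also handles things like final properties and variable substitution). The function names and the two inline XML snippets are illustrative assumptions.

```python
import xml.etree.ElementTree as ET


def parse_hadoop_xml(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style XML document."""
    root = ET.fromstring(xml_text)
    properties = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            properties[name.strip()] = (value or "").strip()
    return properties


def effective_config(defaults_xml, site_xml):
    """Overlay site-specific properties on top of the defaults."""
    merged = parse_hadoop_xml(defaults_xml)
    merged.update(parse_hadoop_xml(site_xml))
    return merged


# Illustrative stand-ins for core-default.xml and core-site.xml.
DEFAULTS = """<configuration>
  <property><name>fs.defaultFS</name><value>file:///</value></property>
  <property><name>io.file.buffer.size</name><value>4096</value></property>
</configuration>"""

SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://namenode1:9000</value></property>
</configuration>"""

config = effective_config(DEFAULTS, SITE)
for name, value in sorted(config.items()):
    print(f"{name} = {value}")
```

Here `fs.defaultFS` takes the site value while `io.file.buffer.size` keeps its default, which is why only the properties you want to change need to appear in the site files.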

To configure the Hadoop cluster you will need to configure the environment in which the Hadoop daemons execute as well as the configuration parameters for the Hadoop daemons.

sudo vi ~/.bashrc

Then add these variables to the .bashrc file.

# java

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

export PATH=$JAVA_HOME/bin:$PATH

# hadoop

export HADOOP_HOME=/home/jane/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djava.library.path=$HADOOP_HOME/lib/native"

HDFS daemons are NameNode, SecondaryNameNode, and DataNode. YARN daemons are ResourceManager, NodeManager, and WebAppProxy. If MapReduce is to be used, then the MapReduce Job History Server will also be running. For large installations, these are generally running on separate hosts.

SSH passwordless login is an SSH authentication method that employs a pair of public and private keys for asymmetric encryption. The public key resides on the server, and only a client that presents the private key can connect.

To set up SSH, you only need to run the commands below:

- Generate an RSA SSH key:

ssh-keygen -t rsa

- Append the contents of the `id_rsa.pub` file to the `authorized_keys` file (both are in `~/.ssh` by default) using:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- Use `ssh-copy-id` to copy your SSH public key to the `authorized_keys` file on the remote machine:

ssh-copy-id username@slave_ip

- To SSH into a machine with the IP address 'ip' or the hostname 'hostname' as the user 'username', use the following command:

ssh username@ip

# example: ssh 'jane@192.168.7.6'

Administrators should use the etc/hadoop/hadoop-env.sh and optionally the etc/hadoop/mapred-env.sh and etc/hadoop/yarn-env.sh scripts to do site-specific customization of the Hadoop daemons’ process environment.

At the very least, you must specify the JAVA_HOME so that it is correctly defined on each remote node.
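One way to confirm this before starting the daemons is to scan the script for its `export` lines. The sketch below is a rough check rather than a real shell parser: it only understands simple `export NAME=value` lines, and `exported_variables` and the sample text are hypothetical.

```python
def exported_variables(script_text):
    """Collect simple `export NAME=value` assignments from a shell script's text."""
    variables = {}
    for raw_line in script_text.splitlines():
        line = raw_line.strip()
        # Commented-out lines start with '#', so they are skipped here as well.
        if not line.startswith("export ") or "=" not in line:
            continue
        name, _, value = line[len("export "):].partition("=")
        variables[name.strip()] = value.strip().strip('"').strip("'")
    return variables


# Sample text standing in for etc/hadoop/hadoop-env.sh.
HADOOP_ENV = """
# export JAVA_HOME=/commented/out
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk
export HDFS_NAMENODE_USER="jane"
"""

env = exported_variables(HADOOP_ENV)
missing = [name for name in ("JAVA_HOME",) if name not in env]
print("Missing required variables:", missing)
```

Running the same check against the copy of the file on each node helps catch a worker where `JAVA_HOME` was left commented out.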

Administrators can configure individual daemons using the configuration options shown below.

For etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

# user allowed to run each daemon

export HDFS_NAMENODE_USER="jane"

export HDFS_DATANODE_USER="jane"

export YARN_RESOURCEMANAGER_USER="jane"

export HDFS_SECONDARYNAMENODE_USER="jane"

This section deals with important parameters to be specified in the given configuration files:

etc/hadoop/core-site.xml.

| Parameter | Value | Notes |
|---|---|---|
| fs.defaultFS | NameNode URI | hdfs://host:port/ |
| io.file.buffer.size | 131072 | Size of read/write buffer used in SequenceFiles. |

Now let's apply this configuration in our Hadoop core-site.xml file.

First, open the file:

sudo vi ~/hadoop/etc/hadoop/core-site.xml

Then add these configurations to the etc/hadoop/core-site.xml file.

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://namenode1:9000</value>

</property>

</configuration>

The hdfs-site.xml file in Hadoop is a core configuration file used to specify various settings related to the Hadoop Distributed File System (HDFS). It contains parameters that govern the behavior of HDFS daemons such as the NameNode, DataNode, and Secondary NameNode. The settings defined in this file are typically related to HDFS-specific configurations such as block size, replication factor, storage directories, and other properties essential for the functioning of the distributed file system.

etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/jane/hdfs/namenode</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

Additional configuration

| Parameter | Value | Notes |
|---|---|---|
| dfs.namenode.name.dir | Path on the local filesystem where the NameNode stores the namespace and transaction logs persistently. | If this is a comma-delimited list of directories, then the name table is replicated in all of the directories, for redundancy. |
| dfs.hosts / dfs.hosts.exclude | List of permitted/excluded DataNodes | If necessary, use these files to control the list of allowable DataNodes. |
| dfs.blocksize | 268435456 | HDFS block size of 256MB for large file systems. |
| dfs.namenode.handler.count | 100 | More NameNode server threads to handle RPCs from a large number of DataNodes. |
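The `dfs.blocksize` value in the table is simply 256 MiB written out in bytes. The short sketch below works through that arithmetic and estimates how many blocks a file would occupy; `block_count` is a hypothetical helper, and the replication factor of 3 is taken from the `dfs.replication` example earlier in this guide.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 256 * MIB  # 268435456 bytes, the dfs.blocksize value from the table


def block_count(file_size_bytes, block_size_bytes=BLOCK_SIZE):
    """Number of HDFS blocks needed to hold a file (the last block may be partial)."""
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return (file_size_bytes + block_size_bytes - 1) // block_size_bytes


one_gib = 1024 * MIB
replication = 3

print(BLOCK_SIZE)
print(block_count(one_gib))
print(block_count(one_gib) * replication)
```

A 1 GiB file therefore splits into four blocks, and with a replication factor of 3 the cluster stores twelve block replicas in total.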

If you need more information or want to understand these configurations better, please refer to the official Hadoop documentation.

| Parameter | Value | Notes |
|---|---|---|
| dfs.datanode.data.dir | Comma-separated list of paths on the local filesystem of a DataNode where it should store its blocks. | If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. |

- Open etc/hadoop/hdfs-site.xml using:

sudo vim ~/hadoop/etc/hadoop/hdfs-site.xml

# Note:

# ~ => /home/username

# example => /home/jane/hadoop/etc/hadoop/hdfs-site.xml

# jane => username

Then add these configurations to the etc/hadoop/hdfs-site.xml file.

<configuration>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/jane/hdfs/datanode</value>

</property>

</configuration>

sudo vi ~/hadoop/etc/hadoop/yarn-site.xml

Configurations for ResourceManager and NodeManager:

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>namenode1</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>namenode1:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>namenode1:8031</value>

</property>

</configuration>

List all worker hostnames or IP addresses in your etc/hadoop/workers file, one per line. Helper scripts (described below) will use the etc/hadoop/workers file to run commands on many hosts at once. It is not used for any of the Java-based Hadoop configuration. In order to use this functionality, ssh trusts (via either passphraseless ssh or some other means, such as Kerberos) must be established for the accounts used to run Hadoop.

sudo vi ~/hadoop/etc/hadoop/workers

The Hadoop workers file, typically named workers, is a configuration file used in a Hadoop cluster setup to specify the hostnames or IP addresses of machines that will act as worker nodes. Each line in this file represents a single worker node.

datanode1

datanode2

Once all the necessary configuration is complete, distribute the files to the HADOOP_CONF_DIR directory on all the machines. This should be the same directory on all machines.

In general, it is recommended that HDFS and YARN run as separate users. In the majority of installations, HDFS processes execute as ‘hdfs’. YARN typically uses the ‘yarn’ account.

To start a Hadoop cluster you will need to start both the HDFS and YARN cluster.

The first time you bring up HDFS, it must be formatted. Format a new distributed filesystem as hdfs:

hdfs namenode -format

All of the HDFS processes can be started with a utility script. As hdfs:

start-dfs.sh

All of the YARN processes can be started with a utility script. As yarn:

start-yarn.sh

To check if the node is running successfully, use the command below.

hdfs dfsadmin -report

If the data nodes do not appear, please check the ports. An easier solution is to disable firewalld with `sudo systemctl disable firewalld`, which I do not recommend. Instead, we can selectively allow ports to facilitate connections between nodes.

To check the ports for the datanode, go to the terminal and type

netstat -tunl | grep LISTEN

Look for the port 9866. To open this port in Red Hat, you can use the following command:

sudo firewall-cmd --zone=public --add-port=9866/tcp --permanent

This command adds port 9866 for TCP traffic in the firewall settings permanently. After executing this command, remember to reload the firewall settings for changes to take effect:

sudo firewall-cmd --reload

After adding the ports permanently, restart Hadoop by running the command below:

start-dfs.sh

MapReduce is a programming model for processing and generating large datasets in parallel across a distributed cluster of computers. A common example is the word count problem, where you want to count the frequency of each word in a large collection of documents.
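To make the model concrete, here is a minimal local sketch of word count in Python. It is not the mapper.py and reducer.py used in this guide; the function names are illustrative, and sorting the pairs stands in for the shuffle step that Hadoop performs between the map and reduce phases.

```python
from itertools import groupby


def map_words(lines):
    """Map phase: emit a (word, 1) pair for every word in the input lines."""
    for line in lines:
        for word in line.strip().lower().split():
            yield word, 1


def reduce_counts(sorted_pairs):
    """Reduce phase: sum the counts of consecutive pairs that share a word."""
    for word, group in groupby(sorted_pairs, key=lambda pair: pair[0]):
        yield word, sum(count for _, count in group)


def word_count(lines):
    """Run map, a sort that imitates the shuffle, and reduce in one process."""
    shuffled = sorted(map_words(lines))
    return dict(reduce_counts(shuffled))


sample = ["hadoop stores data", "hadoop processes data"]
for word, total in word_count(sample).items():
    print(f"{word}\t{total}")
```

On a real cluster the map and reduce functions run as separate processes on different nodes, but the flow of key-value pairs is the same as in this single-process version.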

Now let's run the code by copying the Python scripts from the MapReduce folder. Next, create a text file and name it as desired, such as 'index.txt'.

cd ~/Desktop

touch index.txt

Add any content you like to the file. Then, upload the file to HDFS using the commands below:

# create a folder

hdfs dfs -mkdir /user

# now upload the text file

hdfs dfs -put index.txt /user/index.txt

# check that the file exists in HDFS

hdfs dfs -ls /user

Then execute the MapReduce program using the command:

# make sure you replace ... with the correct path

hadoop jar ~/hadoop/share/.../lib/hadoop-streaming-v-x.jar \
-input /user/index.txt \
-output /users/output \
-mapper mapper.py \
-reducer reducer.py

This guide provides a good starting point for setting up a basic Hadoop cluster. However, it is crucial to remember that this is a fundamental setup, and additional configurations might be necessary depending on your specific needs and environment.