We release updates on a rolling basis; watch the repo or follow the author to be notified!

Contact author: Majed El Helou

🔥 News-Jan21: VIDIT, augmented with depth information, is used in the NTIRE workshop challenges, part of CVPR 2021. The competition starts on January 5th, 2021 and consists of depth-guided one-to-one and any-to-any illumination transfer.

🔥 News-May20: VIDIT is used for the relighting challenge in the AIM workshop, part of ECCV 2020. Check out the relighting competition, which begins on May 13th, 2020. It is made up of 3 tracks: one-to-one illumination transfer, any-to-any illumination transfer, and illumination estimation.

[ECCV AIM 2020 Paper] - [Supp.] - [Video]

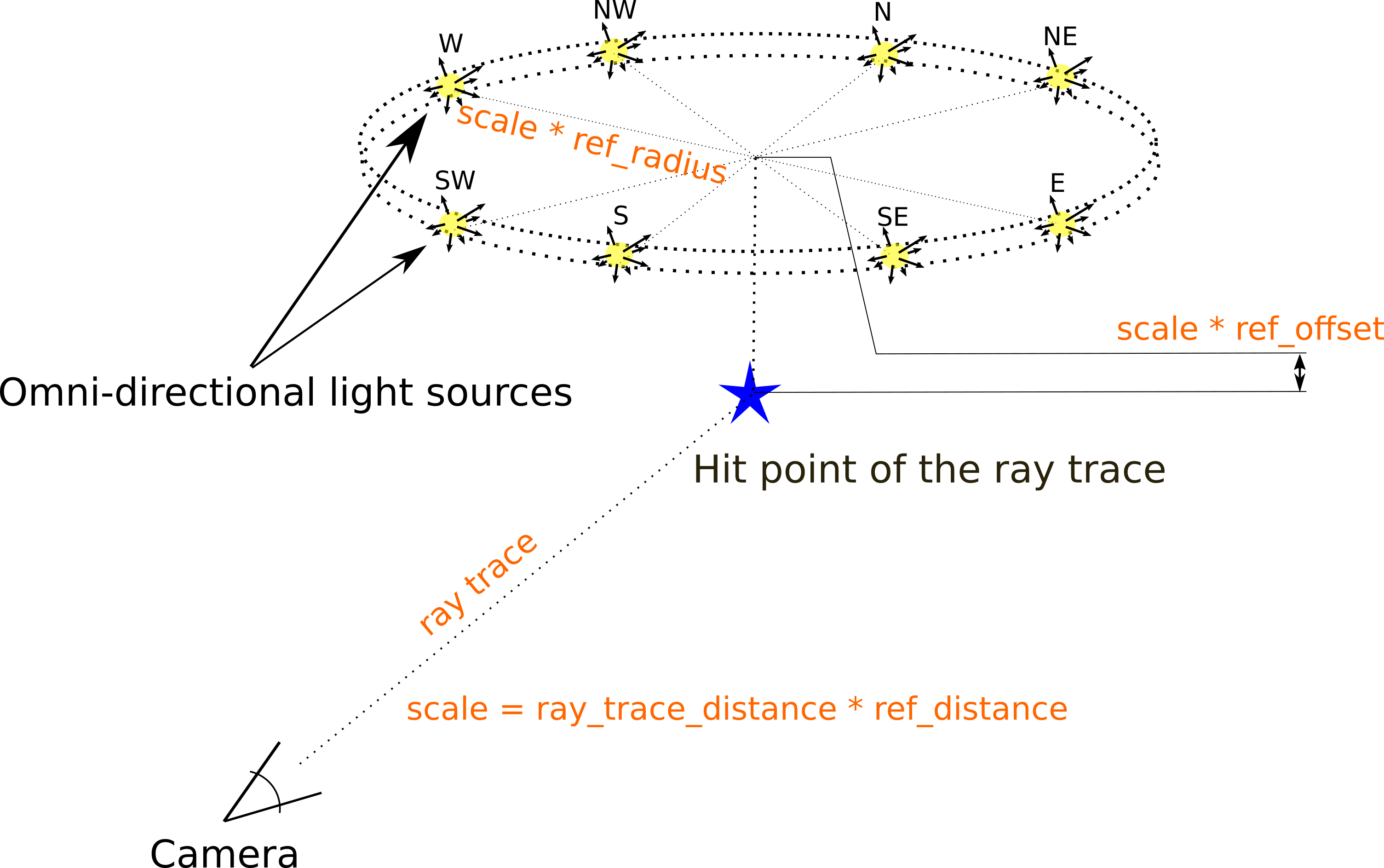

Abstract: Deep image relighting is gaining more interest lately, as it allows photo enhancement through illumination-specific retouching without human effort. Aside from aesthetic enhancement and photo montage, image relighting is valuable for domain adaptation, whether to augment datasets for training or to normalize input test data. Accurate relighting is, however, very challenging for various reasons, such as the difficulty in removing and recasting shadows and the modeling of different surfaces. We present a novel dataset, the Virtual Image Dataset for Illumination Transfer (VIDIT), in an effort to create a reference evaluation benchmark and to push forward the development of illumination manipulation methods. Virtual datasets are not only an important step towards achieving real-image performance but have also proven capable of improving training even when real datasets are possible to acquire and available. VIDIT contains 300 virtual scenes used for training, where every scene is captured 40 times in total: from 8 equally-spaced azimuthal angles, each lit with 5 different illuminants.

VIDIT includes 390 different Unreal Engine scenes, each captured with 40 illumination settings, resulting in 15,600 images. The illumination settings are all the combinations of 5 color temperatures (2500K, 3500K, 4500K, 5500K and 6500K) and 8 light directions (N, NE, E, SE, S, SW, W, NW). Original image resolution is 1024x1024.
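As a quick sanity check of the counts above, the 40 illumination settings can be enumerated as the Cartesian product of the color temperatures and light directions. This is only an illustrative sketch: the constant and variable names are assumptions, and the tuples do not reflect how the dataset files are actually named.

```python
from itertools import product

# Values taken from the dataset description; the names are illustrative only.
COLOR_TEMPERATURES_K = (2500, 3500, 4500, 5500, 6500)
LIGHT_DIRECTIONS = ("N", "NE", "E", "SE", "S", "SW", "W", "NW")
NUM_SCENES = 390

# Every (color temperature, light direction) pair is one illumination setting.
illumination_settings = list(product(COLOR_TEMPERATURES_K, LIGHT_DIRECTIONS))

# Each scene is rendered once per illumination setting.
total_images = NUM_SCENES * len(illumination_settings)

print(len(illumination_settings))  # 40
print(total_images)  # 15600
```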

Coming soon. For now, you can access the Training and Validation data through CodaLab.

Competition participants working on challenge papers can obtain the validation ground-truth sets from the links below. They are currently hosted on Google Drive, but we are working on moving all data (Train/Validation/Test_Input) to a permanent public EPFL institutional server.

Track 1: [Validation_GT]

Track 2: [Validation_GT]

Note: ground-truth test data will remain private.

Track 1 (1024x1024): [Train] - [Validation_Input] - [Validation_GT] - [Test_Input]

Track 2 (1024x1024): [Train] - [Validation_Input] - [Validation_GT] - [Test_Input]

Track 3 (512x512): [Train] - [Validation_Input] - [Validation_GT] - [Test_Input]

For competition Track 3, the images are downsampled by a factor of 2 to reduce computation:

smallsize_img = cv2.resize(origin_img, (512, 512), interpolation=cv2.INTER_CUBIC)

If you want to use VIDIT for other purposes, the training images are provided in full 1024x1024 resolution in the "Train" link of Track 2 above. They are also available for download from a Google Drive mirror: [Download]

- (arXiv) DSRN: an Efficient Deep Network for Image Relighting (S. D. Das, N. A. Shah, S. Dutta, H. Kumar): [Paper]
- (Software tool) Deep Illuminator: Data augmentation through variable illumination synthesis (G. Chogovadze): [Code]
- (NeurIPSW2020) MSR-Net: Multi-Scale Relighting Network for One-to-One Relighting (S. D. Das, N. A. Shah, S. Dutta): [Paper]
- (ECCVW2020) WDRN: A Wavelet Decomposed RelightNet for Image Relighting (D. Puthussery, Hrishikesh P.S., M. Kuriakose, Jiji C.V): [Paper]
- Encoder-decoder latent space manipulation (A. Dherse, M. Everaert, J. Gwizdala): [Paper] - [Code]
- Norm-Relighting-U-Net (M. Afifi, M. Brown): [Code]
- (ECCVW2020) Deep relighting networks for image light source manipulation (L. Wang, Z. Liu, C. Li, W. Siu, D. Lun): [Paper] - [Code]
- (ECCVW2020) An ensemble neural network for scene relighting with light classification (L. Dong, Z. Jiang, C. Li)
- (ECCVW2020) SA-AE for any-to-any relighting (Z. Hu, X. Huang, Y. Li, Q. Wang): [Paper]

@article{elhelou2020vidit,
  title = {{VIDIT}: Virtual Image Dataset for Illumination Transfer},
  author = {El Helou, Majed and Zhou, Ruofan and Barthas, Johan and S{\"u}sstrunk, Sabine},
  journal = {arXiv preprint arXiv:2005.05460},
  year = {2020}
}

@inproceedings{elhelou2020aim,
  title = {{AIM} 2020: Scene Relighting and Illumination Estimation Challenge},
  author = {El Helou, Majed and Zhou, Ruofan and S{\"u}sstrunk, Sabine and Timofte, Radu and others},
  booktitle = {Proceedings of the European Conference on Computer Vision Workshops (ECCVW)},
  year = {2020}
}