- System requirement: Ubuntu 20.04

- Tested GPUs: A100, RTX 3090

Create conda environment:

conda create -n champ python=3.10

conda activate champ

Install packages with pip:

pip install -r requirements.txt
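After installation, a quick sanity check can confirm that the active interpreter matches the Python version used in the conda command above. The following is a minimal sketch, not part of the repository: the function name `environment_report` is hypothetical, and it assumes `requirements.txt` installs PyTorch under the module name `torch`.

```python
import importlib.util
import sys


def environment_report(required_python=(3, 10)):
    """Summarize the active interpreter (hypothetical helper, not part of Champ)."""
    current = tuple(sys.version_info[:2])
    return {
        # Version of the interpreter that is running this script.
        "python": "{}.{}".format(*current),
        # True only when major.minor equals the version given to `conda create`.
        "python_ok": current == tuple(required_python),
        # Assumption: the requirements install PyTorch as the `torch` module.
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

Running the script inside the activated `champ` environment prints one line per check.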

Download the pretrained weights of the base models (stable-diffusion-v1-5, sd-vae-ft-mse, and image_encoder, as shown in the layout below).

Download our checkpoints, which consist of the denoising UNet, guidance encoders, reference UNet, and motion module.

Finally, these pretrained models should be organized as follows:

./pretrained_models/
|-- champ
|   |-- denoising_unet.pth
|   |-- guidance_encoder_depth.pth
|   |-- guidance_encoder_dwpose.pth
|   |-- guidance_encoder_normal.pth
|   |-- guidance_encoder_semantic_map.pth
|   |-- reference_unet.pth
|   `-- motion_module.pth
|-- image_encoder
|   |-- config.json
|   `-- pytorch_model.bin
|-- sd-vae-ft-mse
|   |-- config.json
|   |-- diffusion_pytorch_model.bin
|   `-- diffusion_pytorch_model.safetensors
`-- stable-diffusion-v1-5
    |-- feature_extractor
    |   `-- preprocessor_config.json
    |-- model_index.json
    |-- unet
    |   |-- config.json
    |   `-- diffusion_pytorch_model.bin
    `-- v1-inference.yaml
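Because a misplaced file usually only surfaces as a loading error at inference time, it can help to verify the layout first. The sketch below is not part of the repository: it simply mirrors the tree above, and the names `EXPECTED_FILES` and `missing_files` are hypothetical. It treats every listed file as required, which is an assumption (for example, the VAE may need only one of its two weight formats).

```python
from pathlib import Path

# Relative paths copied from the directory tree above.
EXPECTED_FILES = [
    "champ/denoising_unet.pth",
    "champ/guidance_encoder_depth.pth",
    "champ/guidance_encoder_dwpose.pth",
    "champ/guidance_encoder_normal.pth",
    "champ/guidance_encoder_semantic_map.pth",
    "champ/reference_unet.pth",
    "champ/motion_module.pth",
    "image_encoder/config.json",
    "image_encoder/pytorch_model.bin",
    "sd-vae-ft-mse/config.json",
    "sd-vae-ft-mse/diffusion_pytorch_model.bin",
    "sd-vae-ft-mse/diffusion_pytorch_model.safetensors",
    "stable-diffusion-v1-5/feature_extractor/preprocessor_config.json",
    "stable-diffusion-v1-5/model_index.json",
    "stable-diffusion-v1-5/unet/config.json",
    "stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin",
    "stable-diffusion-v1-5/v1-inference.yaml",
]


def missing_files(root="./pretrained_models"):
    """Return the expected files that are absent under root, in listed order."""
    root = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected files are present.")
```

Run it from the repository root so that the default `./pretrained_models` path resolves correctly.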

We have provided several sets of example data for inference. Please first download and place them in the example_data folder.

Here is the command for inference:

python inference.py --config configs/inference.yaml

Animation results will be saved in the results folder. You can change the reference image or the guidance motion by modifying inference.yaml.

You can also extract the driving motion from any video and then render it with Blender. We will provide instructions and scripts for this later.

Note: The default motion-01 in inference.yaml has more than 500 frames and takes about 36 GB of VRAM. If you run into VRAM issues, consider switching to other example data with fewer frames.
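To pick a shorter sequence, it may be useful to compare frame counts across the downloaded motions. The sketch below is illustrative only and rests on an assumed layout that this README does not confirm: one folder per motion, each holding either frame images directly or one subfolder of frame images per guidance type. The function names and the `example_data/motions` path are hypothetical.

```python
from pathlib import Path

# Assumption: frames are stored as ordinary image files.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def count_frames(folder):
    """Count image files directly inside folder (non-recursive)."""
    return sum(
        1
        for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def frames_per_motion(motions_root):
    """Map each motion folder name to its largest per-subfolder frame count."""
    result = {}
    for motion in sorted(Path(motions_root).iterdir()):
        if not motion.is_dir():
            continue
        subdirs = [d for d in motion.iterdir() if d.is_dir()]
        # Fall back to the motion folder itself when it has no subfolders.
        counts = [count_frames(d) for d in subdirs] or [count_frames(motion)]
        result[motion.name] = max(counts)
    return result
```

For example, `frames_per_motion("example_data/motions")` (a hypothetical path) would list each motion with its frame count, so the shortest one can be selected in inference.yaml.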

We thank the authors of MagicAnimate, Animate Anyone, and AnimateDiff for their excellent work. Our project is built upon Moore-AnimateAnyone, and we are grateful for their open-source contributions.

Visit our roadmap to preview the future of Champ.

If you find our work useful for your research, please consider citing the paper:

@misc{zhu2024champ,

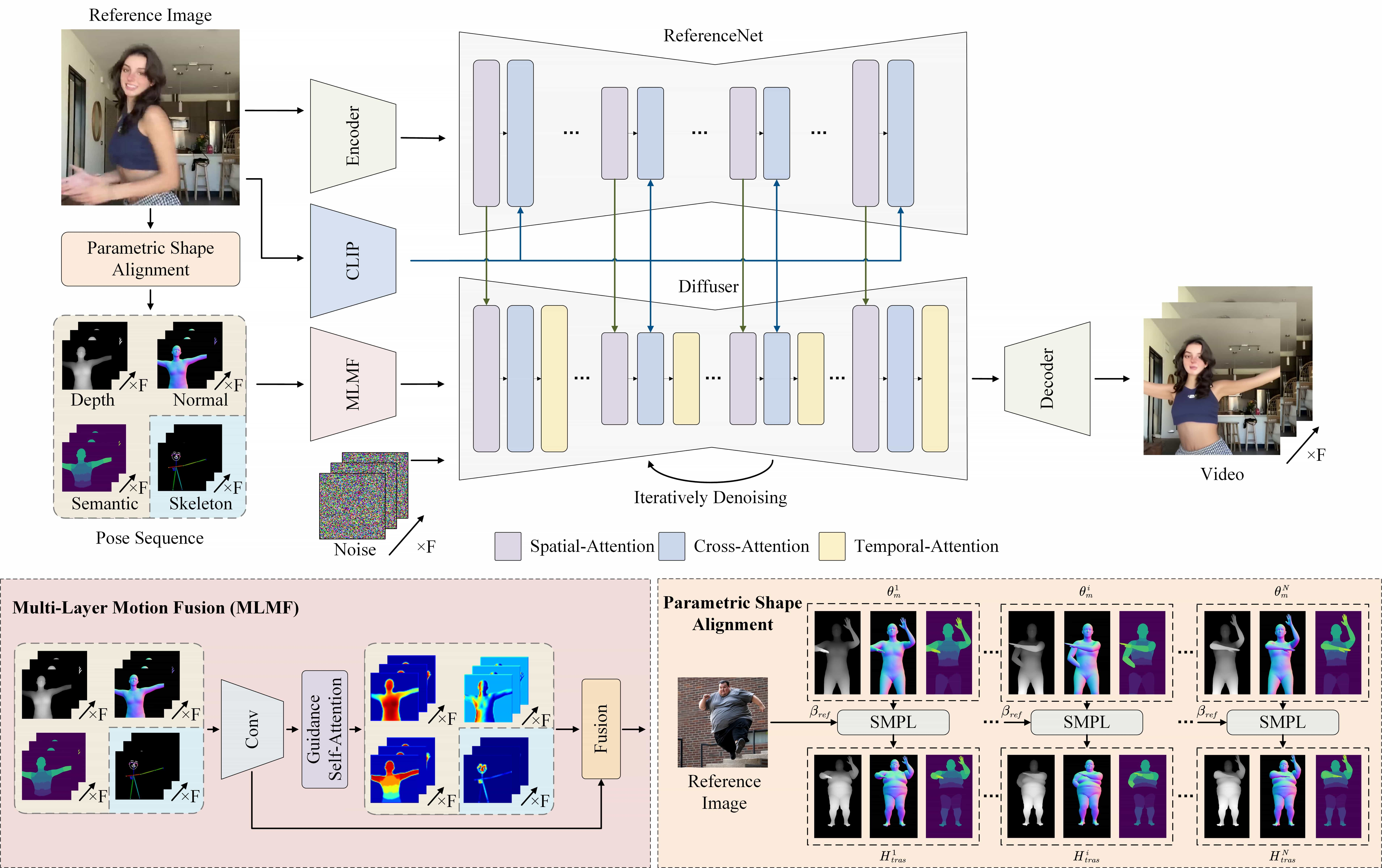

title={Champ: Controllable and Consistent Human Image Animation with 3D Parametric Guidance},

author={Shenhao Zhu and Junming Leo Chen and Zuozhuo Dai and Yinghui Xu and Xun Cao and Yao Yao and Hao Zhu and Siyu Zhu},

year={2024},

eprint={2403.14781},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Multiple research positions are open at the Generative Vision Lab, Fudan University, including:

- Research assistant

- Postdoctoral researcher

- PhD candidate

- Master's student

Interested individuals are encouraged to contact us at siyuzhu@fudan.edu.cn for further information.