Making a web_crawler

.nvmrc pins the Node.js version for this project (running nvm use in the project folder picks it up).

npm init generates the package.json file. I am going to use .gitignore to keep all the packages installed while building this out of the repository, so after cloning just run npm install: package.json lists every dependency, so it handles the rest.

npm install --save-dev jest installs Jest as a development-only dependency (it goes under devDependencies in package.json).

Create the file with touch .gitignore and add node_modules to it, as mentioned above.

npm install jsdom, used to parse the HTML of each crawled page and extract the URLs it links to.

~ Read the JSDOM documentation

Modified the scripts in package.json so that npm start runs the crawler; main.js is the entry point.

"scripts": {
  "start": "node main.js",
  "test": "echo \"Error: no test specified\" && exit 1"
},

Then changed the test script from "echo \"Error: no test specified\" && exit 1" to "jest", so that npm test runs Jest.

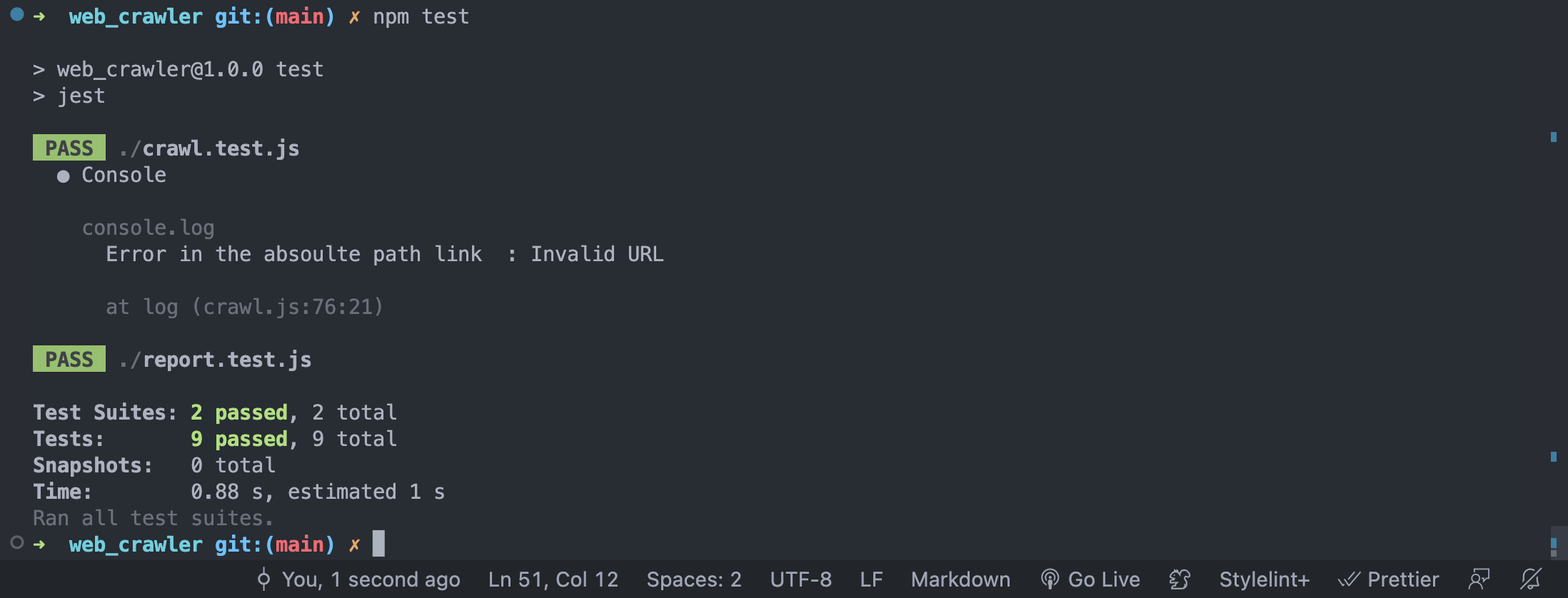

npm test

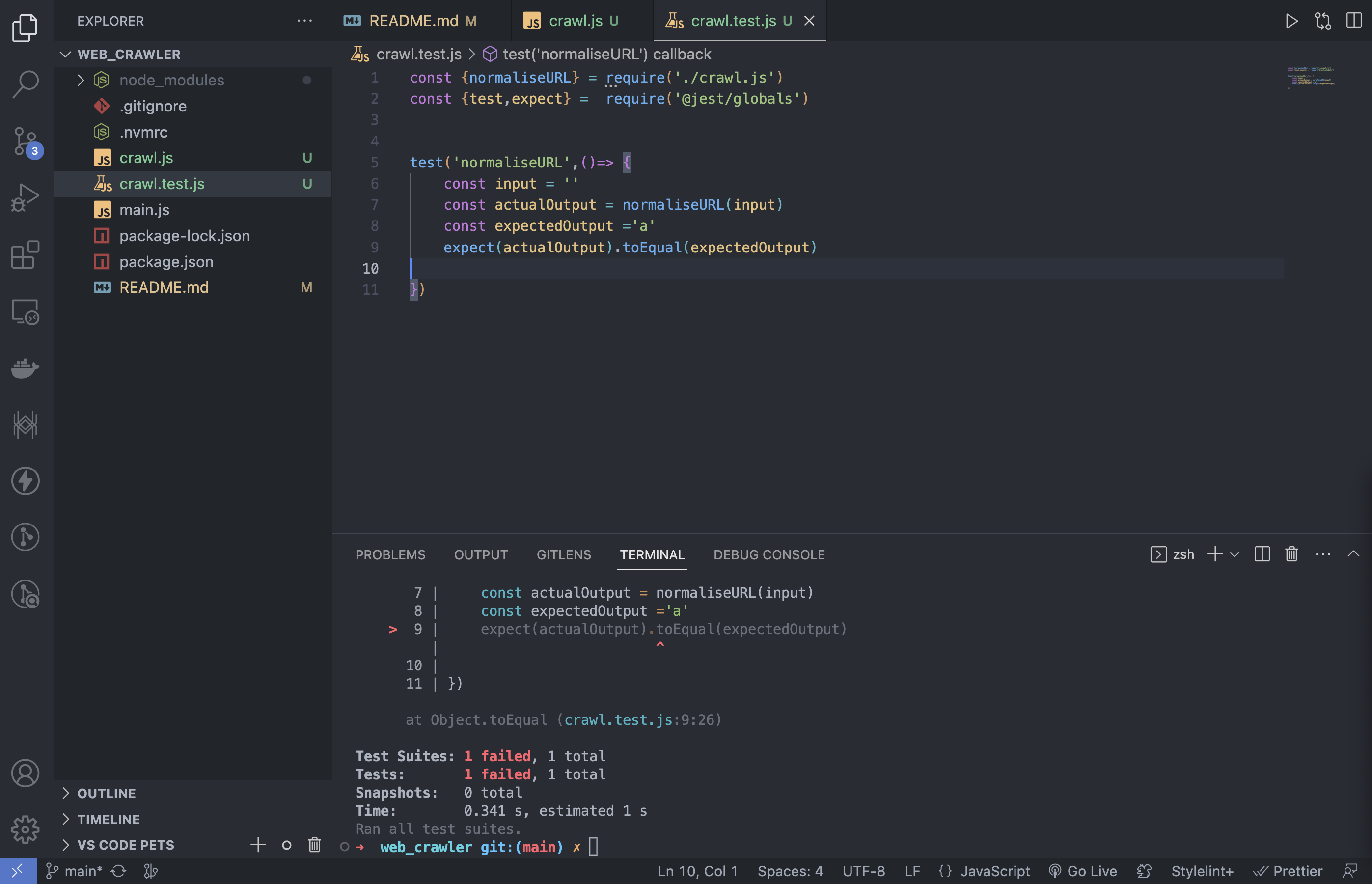

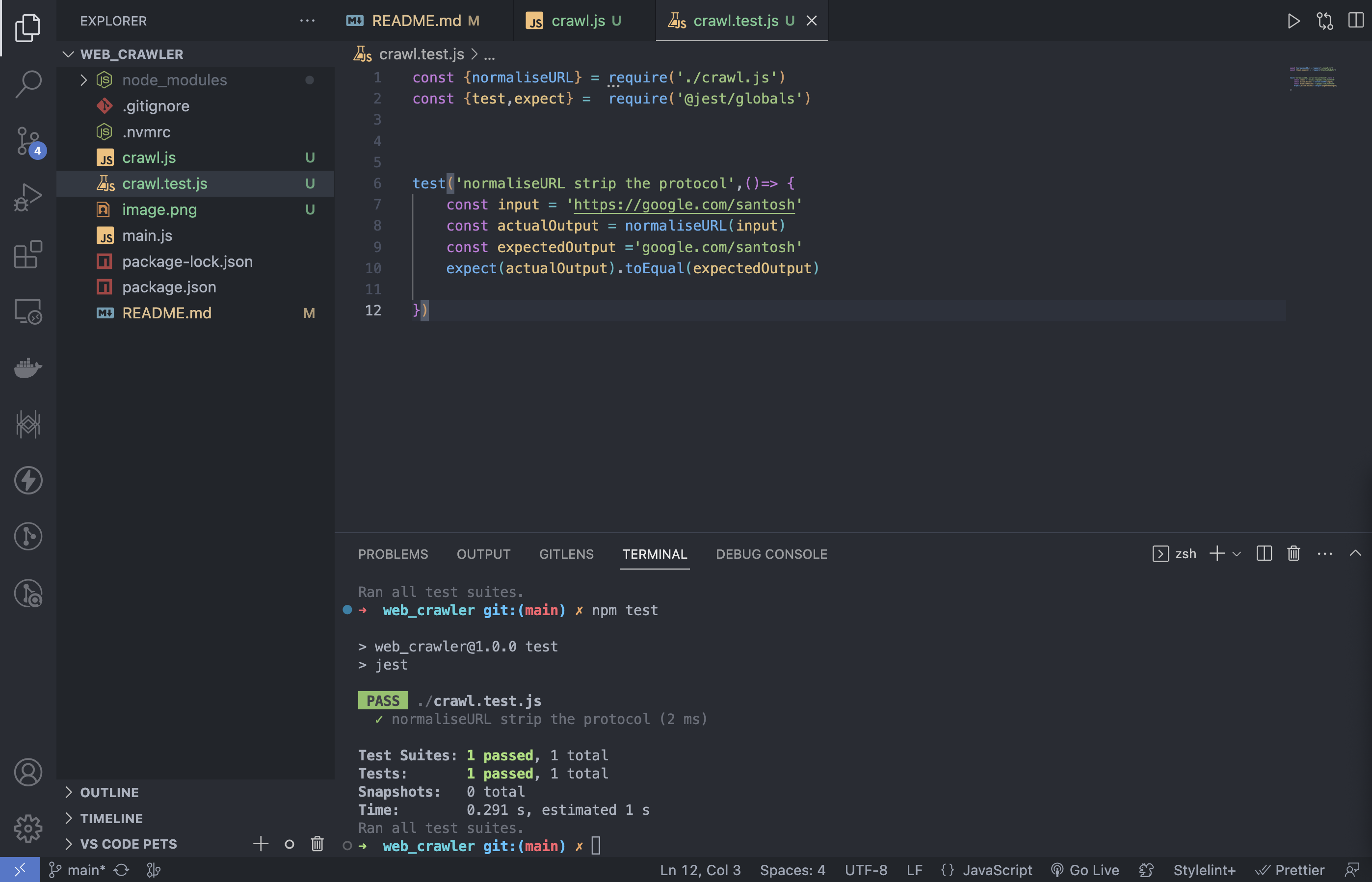

Why normalize URLs: see the Cloudflare docs on URL normalization (relevant to the crawl.js file).

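Modified crawl.js to keep only the hostname + path of each URL, stripping the protocol, so that the http:// and https:// versions of a page count as the same page.

Note on capital letters in URLs: the URL constructor used in crawl.js already lowercases the scheme and hostname, so no separate check is needed for those. The path is case-sensitive and is left as-is.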
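A minimal sketch of the normalization described above, assuming a function named normalizeURL (hypothetical; the notes do not name it). Dropping a trailing slash is also an assumption added here, so that example.com/path and example.com/path/ map to the same key.

```javascript
// Hypothetical normalizeURL: keep hostname + path, drop the protocol.
function normalizeURL(urlString) {
  // The URL constructor lowercases the scheme and hostname for us;
  // the pathname keeps its original case.
  const urlObj = new URL(urlString);
  const hostPath = `${urlObj.hostname}${urlObj.pathname}`;

  // Assumption: treat "/path/" and "/path" as the same page.
  if (hostPath.endsWith("/")) {
    return hostPath.slice(0, -1);
  }
  return hostPath;
}

module.exports = { normalizeURL };
```

With this shape, https://BLOG.boot.dev/path/ and http://blog.boot.dev/path both normalize to blog.boot.dev/path, which is what lets the crawler recognise them as one page.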

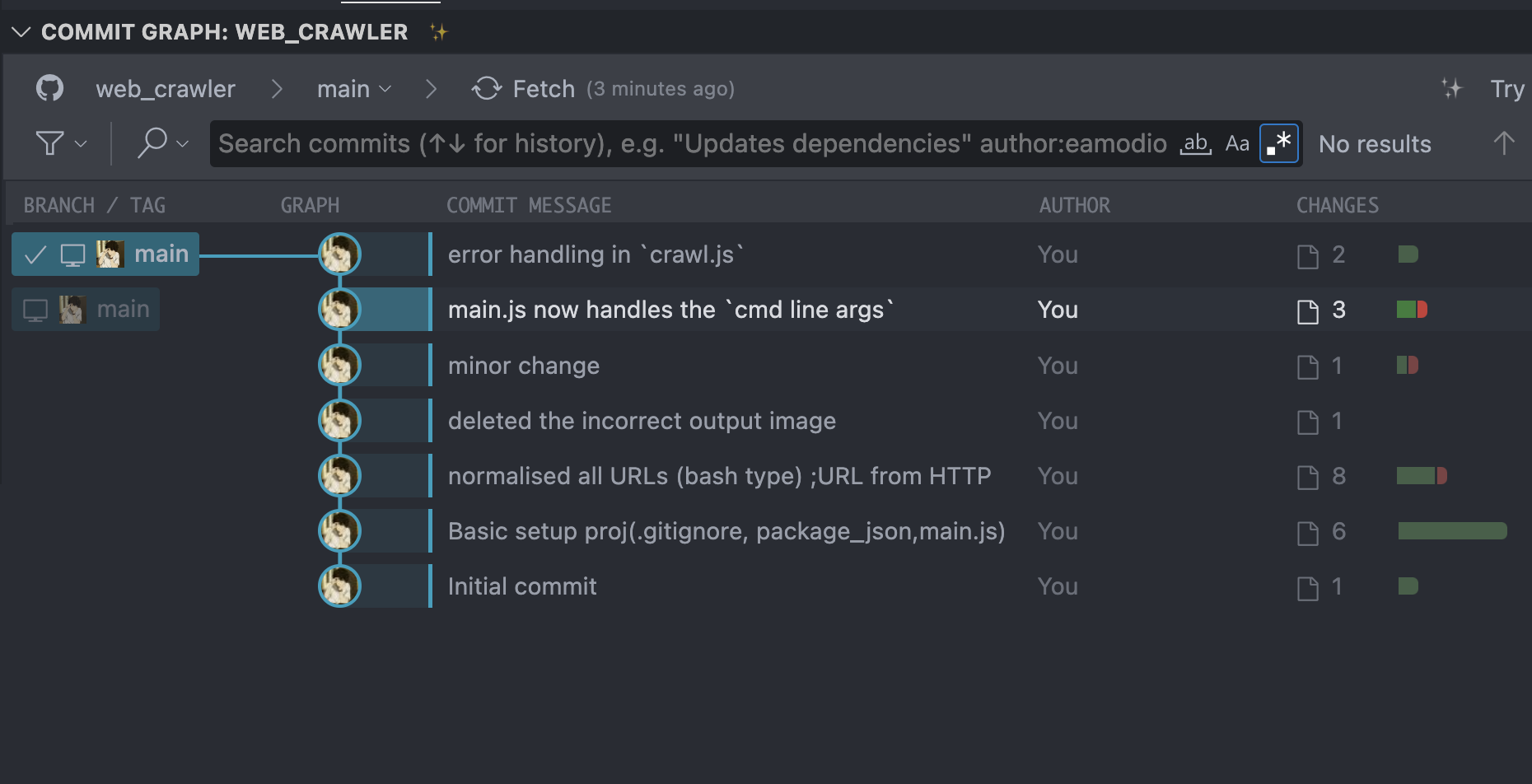

TIMELINE SO FAR

Note: all the tests are written with Jest, so run them with npm test

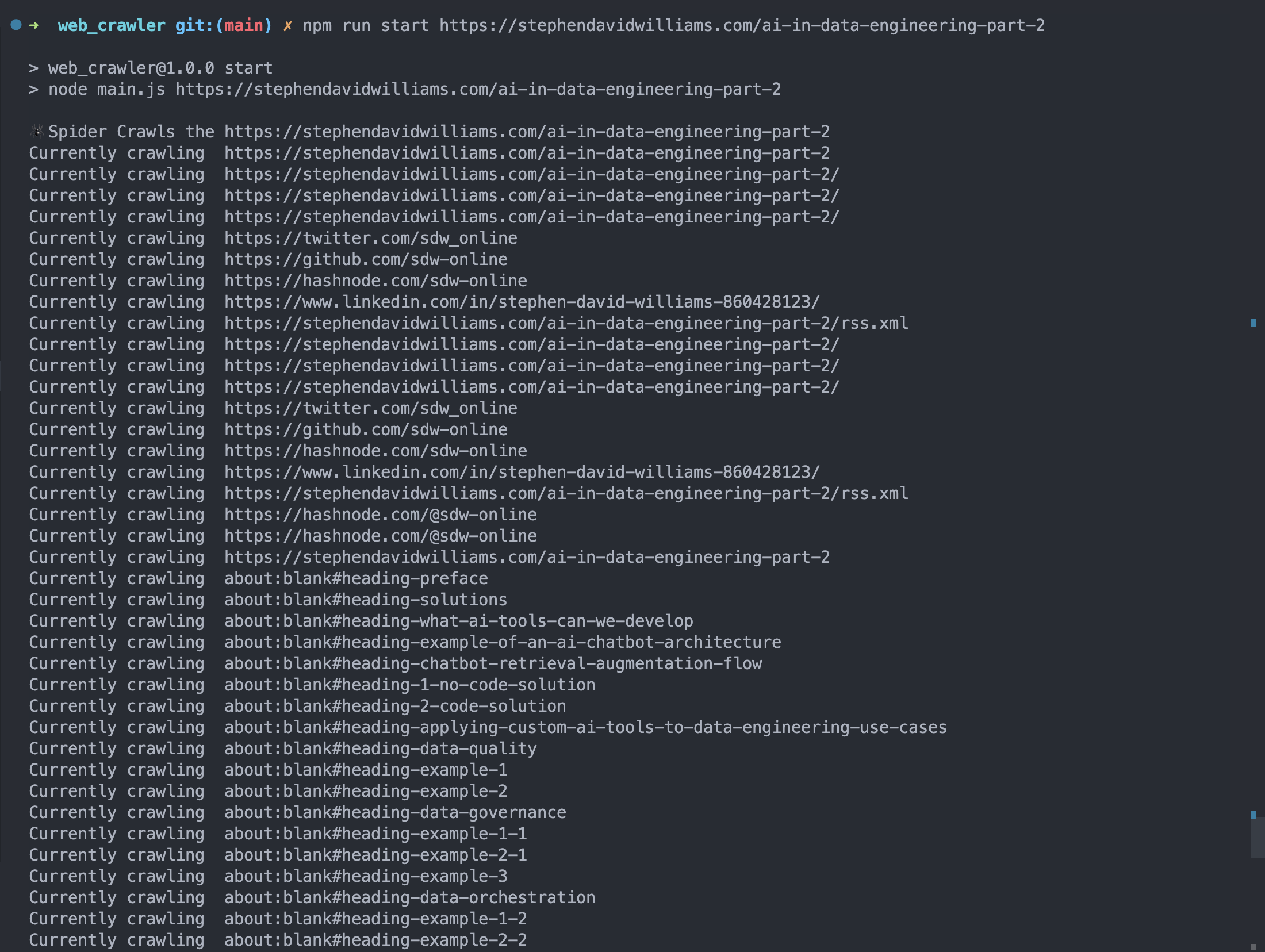

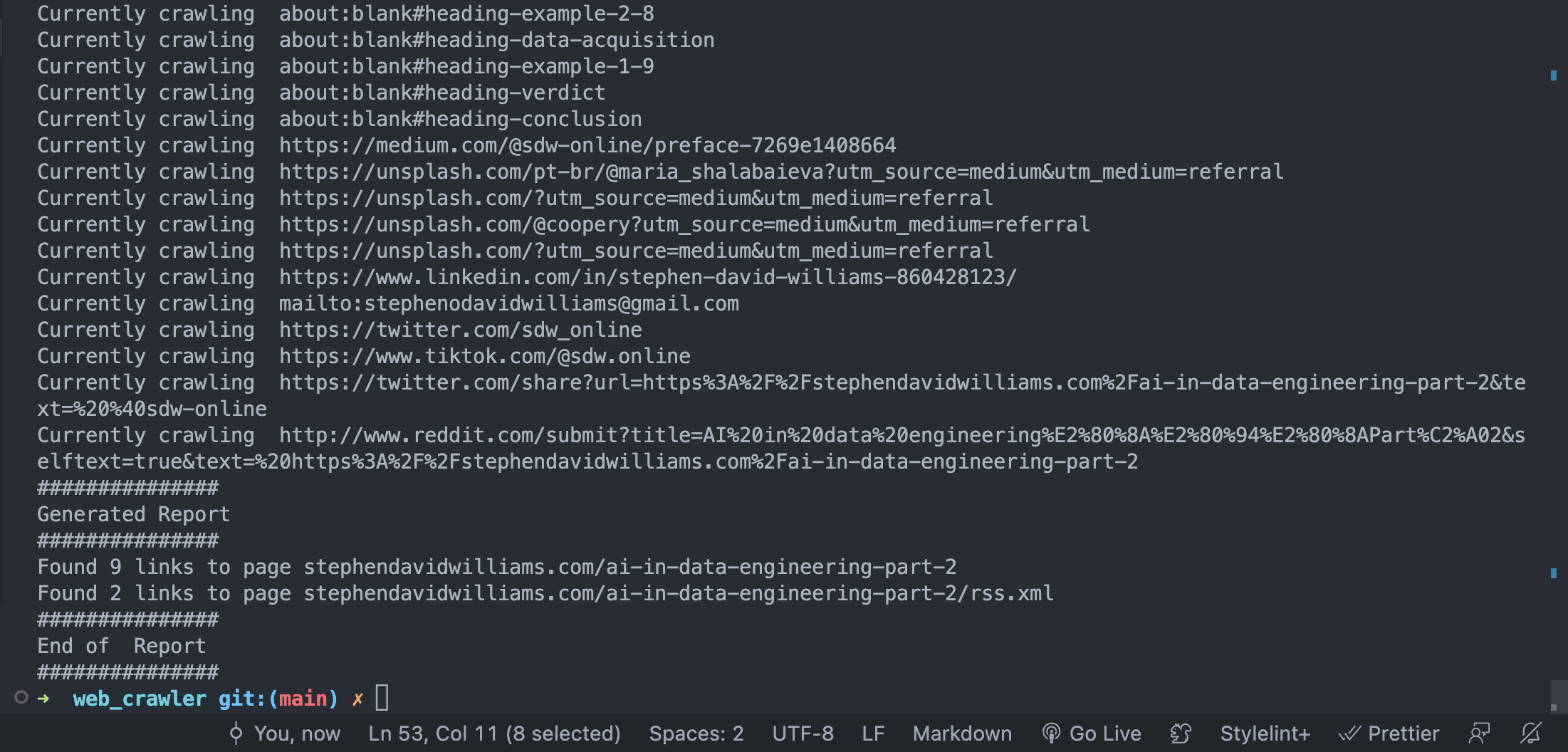

Demo

npm run start https://stephendavidwilliams.com/ai-in-data-engineering-part-2

Note: although this crawler works fine with most websites, crawling Medium makes it loop endlessly. To handle this, instead of only incrementing the count of already-visited pages, we can:

- Stop at a page whose links have all already been visited

- Stop at the exact moment a link is repeated <-- seems logically faulty

I am planning to implement these two later.
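A minimal sketch of the visited-count approach plus a hard page budget, which is a different safeguard from the two ideas above. It assumes (not verified here) that the endless loop comes from a site producing an unbounded number of distinct URLs: in that case visited counts never stop the crawl, while a page cap always does. getLinks is a hypothetical stand-in for "fetch the page and return its normalized links", so the control flow can be shown without network calls; maxPages and its default of 50 are also hypothetical.

```javascript
// Breadth-first crawl that counts how often each normalized URL is reached.
// getLinks(url) -> array of normalized URLs found on that page (hypothetical).
function crawl(getLinks, startURL, maxPages = 50) {
  const pages = new Map(); // normalized URL -> times reached
  const queue = [startURL];

  while (queue.length > 0) {
    const url = queue.shift();

    // Already visited: increment its count, never fetch it again.
    if (pages.has(url)) {
      pages.set(url, pages.get(url) + 1);
      continue;
    }

    // Hypothetical budget: stop before visiting more than maxPages pages.
    if (pages.size >= maxPages) {
      break;
    }

    pages.set(url, 1);
    queue.push(...getLinks(url));
  }
  return pages;
}

module.exports = { crawl };
```

Here a page whose links are all already visited only adds repeat counts and triggers no new fetches. Once the budget is hit, links still waiting in the queue are not counted, so the counts describe only the part of the site that was crawled.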

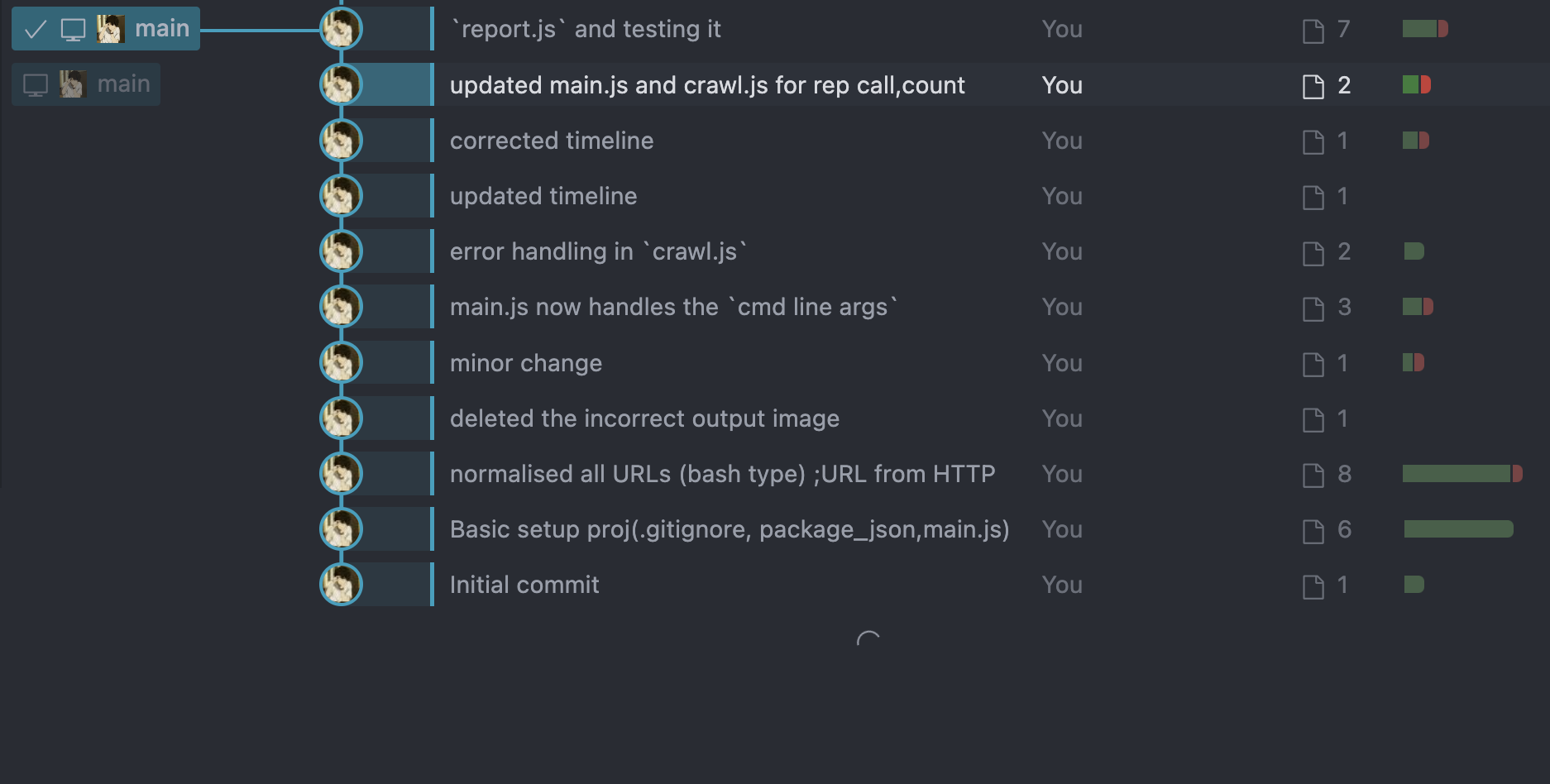

I recently started using GitLens, so here is the timeline once again.