Automated Login Scripts to Keep Accounts Active

Objective

Cloud service providers have been known to delete the contents of personal accounts or close the accounts themselves after a period of inactivity, sometimes without the end-user's knowledge. This project aims to prevent that from happening by automating logins at regular intervals.

Each login is recorded in a CSV log file. Because the log contains personally identifiable information, it is saved to a private repository.

    .
    ├── mega/
    │   ├── [2021] mega_1 log.csv
    │   ├── [2021] mega_2 log.csv
    │   ├── [2022] mega_1 log.csv
    │   └── [2022] mega_2 log.csv
    └── onedrive/
        ├── [2022] onedrive_1 log.csv
        └── [2022] onedrive_2 log.csv

There will be one log file per account per year, organised into the folder of its respective platform. Logs are rotated at the start of each year; each log file is prefixed with a [YYYY] yearstamp.
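The naming scheme can be illustrated with a short Python sketch. The helper name log_path and its parameters are hypothetical and are not taken from the project's code; only the resulting file names follow the layout shown above.

```python
from datetime import date
from pathlib import PurePosixPath
from typing import Optional


def log_path(platform: str, account: int, year: Optional[int] = None) -> PurePosixPath:
    """Return '<platform>/[YYYY] <platform>_<account> log.csv'."""
    if year is None:
        # Using the current year means a new file name is used from 1 January.
        year = date.today().year
    return PurePosixPath(platform) / f"[{year}] {platform}_{account} log.csv"


print(log_path("mega", 1, 2022))  # mega/[2022] mega_1 log.csv
```

Because the year is part of the file name, rotation needs no extra step in this sketch: the first run of a new year simply resolves to a file that does not exist yet.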

Process Workflow and Code Organisation

    flowchart LR
        A([login-platform-auto.yml]) -->|Call workflow| B([reusable-autolog.yml])
        F[[Run keep-platform-active.py]]
        F ==>|output|G[("[Year] platform_account log.csv"<br/><em>Private Repository</em>)]
        B -->|login job| F
        B -.->|schedule-maintenance job| C{{Login and Logging Successful?}}
        C ==>|Yes| D[[Run reset-schedule.py]]
        C ==>|No| E[[Run reschedule-next-run.py]]
        D -->|next run back at usual time tomorrow| A
        E -->|next run in one hour| A

Each platform's workflow is triggered by a cron schedule; the default cron times are defined in default-schedule.csv.

For greater clarity, we will be using the MEGA platform as an example to illustrate the workflow run process.

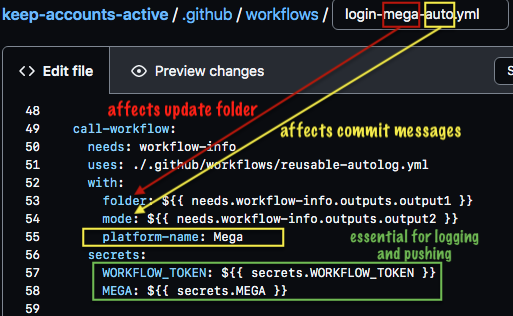

- login-mega-auto.yml is triggered by cron schedule and calls reusable-autolog.yml, passing into it the following arguments: folder: mega, mode: auto, platform-name: Mega
- reusable-autolog.yml first runs its login job. It does a sparse checkout of the private repository, cloning only the mega folder, and then runs the keep-mega-active.py script.

Script modules

keep-mega-active.py begins by importing the login credentials (from GitHub secrets or a .env file) in the form of a JSON string and parsing it into a Python dictionary. It then initialises a LoginLogger object, passing into it the necessary .env variables, URLs, and XPath/CSS page selectors.

- login_logger.py: defines the LoginLogger class, which contains methods to automate the login process using Playwright.
- logging_formatter.py: the CsvFormatter class instantiates the Formatter class (from Python's logging module) to provide appropriate formatting for both the console and the CSV log file.
- log_concat.py: after each Playwright browser run, update_logs() is called to append the new log file to the existing file in the mega folder, or simply to move the new file into the folder if no log has been created for the year yet (filename not found in folder).

This process is repeated once for each entry in the Python dictionary.
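The append-or-move behaviour described for log_concat.py can be sketched with the standard library alone. This is not the project's implementation: the signature of update_logs() shown here is hypothetical, and the sketch assumes that every log file starts with exactly one header row.

```python
import shutil
from pathlib import Path


def update_logs(new_log: Path, folder: Path) -> Path:
    """Append new_log to the same-named file in folder, or move it there."""
    target = folder / new_log.name
    if not target.exists():
        # No log for this year yet: nothing to append to, so move the file.
        folder.mkdir(parents=True, exist_ok=True)
        shutil.move(str(new_log), str(target))
        return target
    # Assumption: both files begin with one header row, which must not repeat.
    rows = new_log.read_text(encoding="utf-8").splitlines(keepends=True)[1:]
    existing_text = target.read_text(encoding="utf-8")
    with target.open("a", encoding="utf-8") as existing:
        if existing_text and not existing_text.endswith("\n"):
            existing.write("\n")  # keep the first new row on its own line
        existing.writelines(rows)
    new_log.unlink()  # the per-run file is no longer needed
    return target
```

Keying the decision on whether the target file name exists also covers the yearly rotation: the first run of a new year produces a file name that is not in the folder, so the file is moved rather than appended.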

- After keep-mega-active.py exits, the login job commits and pushes the updated mega folder to the private repository.
- reusable-autolog.yml starts the schedule-maintenance job, which selects and runs a maintenance script depending on the outcome of the login job. Maintenance scripts modify the cron value of login-mega-auto.yml, affecting how soon the workflow gets triggered again.

Maintenance scripts

- reset-schedule.py: runs upon successful completion of the login job; restores the cron schedule in login-mega-auto.yml to the default value defined in default-schedule.csv
- reschedule-next-run.py: runs upon a failed outcome of the login job; modifies the cron schedule to increment the hour value by 1 ("39 06 * * *" becomes "39 07 * * *")
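The hour increment performed on a failed run can be sketched as below. The function name bump_cron_hour is hypothetical, and two points are assumptions rather than documented behaviour: that the hour field is always a plain number, and that the value wraps from 23 back to 00.

```python
def bump_cron_hour(cron: str, hours: int = 1) -> str:
    """Return the cron expression with its hour field moved forward."""
    minute, hour, *rest = cron.split()
    # Assumption: the hour field is a plain number, not '*', a range, or a list.
    new_hour = (int(hour) + hours) % 24  # assumed wrap-around past 23
    # Zero-pad to two digits to match the "39 06 * * *" style used above.
    return " ".join([minute, f"{new_hour:02d}", *rest])


print(bump_cron_hour("39 06 * * *"))  # 39 07 * * *
```

Only the hour field changes; the minute and the day, month, and weekday fields are passed through untouched, so the retry lands at the same minute one hour later.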

How to Run using GitHub Actions

Dependencies: required Python 3 libraries to install

- python-dotenv

- pandas

- playwright (only the Firefox browser is needed)
  - run playwright install firefox in the terminal after installing playwright.

Prepare the env variables on your local machine

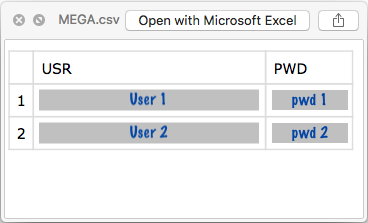

- Save login credentials in a csv file in the following format. One csv file per cloud platform. Note that column headers must be named USR and PWD.

-

Save this script

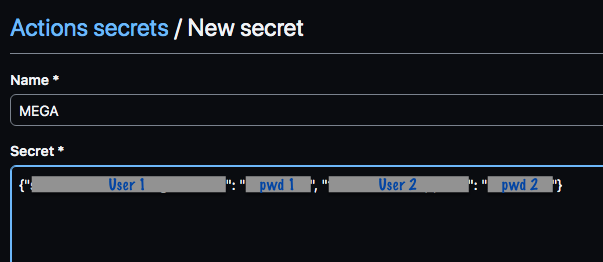

csv-to-json.pyto a new folder, and move the csv file into the folder. Run the script. It should output a text file containing a single JSON string. - Copy and paste the JSON string into a new repository secret with a name of your choice on GitHub.

-

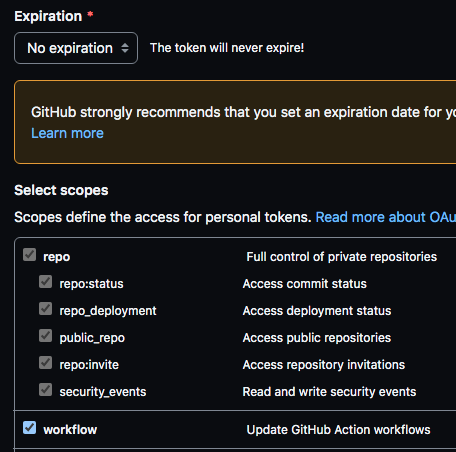

You will need a Personal Access Token with no expiration date and with permissions enabled for repo and workflow. Modify your existing token or create a new one here. Copy and paste your token's value in a new repository secret called

WORKFLOW_TOKEN.
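The conversion step can be pictured with the sketch below. It is not the csv-to-json.py script referred to above; the function name csv_to_json and, in particular, the JSON layout (account number mapped to a USR/PWD pair) are assumptions made for illustration. The only detail taken from the instructions is that the columns are named USR and PWD.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text with USR and PWD columns into one JSON string."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {"USR", "PWD"} - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing column(s): {sorted(missing)}")
    # Assumed layout: account number (as a string key) -> credentials.
    accounts = {
        str(i): {"USR": row["USR"], "PWD": row["PWD"]}
        for i, row in enumerate(reader, start=1)
    }
    return json.dumps(accounts)


sample = "USR,PWD\nalice@example.com,hunter2\n"
print(csv_to_json(sample))
```

Passing the resulting string to json.loads() gives back a Python dictionary, which matches how keep-mega-active.py is described as consuming the secret.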

Prepare your private repository on GitHub

-

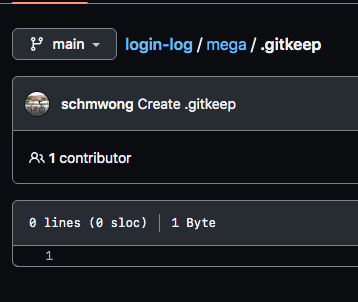

Create a new private repository called login-log (you may choose another name but it will require modifying

reusable-autolog.yml). -

In your private repository, create a new folder for each platform, and in it create an empty

.gitkeepfile (because GitHub does not allow empty folders).

Configure your workflow files

-

Ensure that the correct secrets are passed from the caller workflow. The

WORKFLOW_TOKENsecret is needed to update the private repo as well as the cron value of the caller workflow. -

Take care to name the caller workflow file appropriately; its middle name corresponds to the folder to be updated on the private repository. (Of course, you could also hard code the name yourself by directly modifying the

foldercontext in the yml file instead of relying on the Bash output.) -

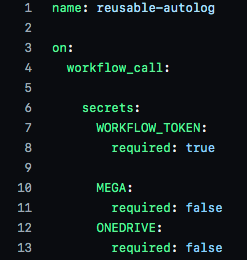

Ensure that the same secrets and inputs are passed into the reusable workflow

reusable-autolog.yml. It passes secrets from all platforms to itsenvcontext. -

Do a test run using a manual caller workflow, such as

login-mega-manual.yml. Take note of the log output in the console to ensure all steps run as expected.
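The file-naming convention for caller workflows can be checked with a small sketch. In the workflow itself the folder name comes from Bash output; the Python function below, folder_from_workflow, is a hypothetical stand-in that only illustrates which part of the file name is used, assuming names of the form login-<folder>-<mode>.yml.

```python
from pathlib import PurePosixPath


def folder_from_workflow(filename: str) -> str:
    """Return the middle part of 'login-<folder>-<mode>.yml'."""
    stem = PurePosixPath(filename).stem          # e.g. 'login-mega-auto'
    prefix, _, remainder = stem.partition("-")   # 'login', 'mega-auto'
    folder, _, mode = remainder.rpartition("-")  # 'mega', 'auto'
    if prefix != "login" or not folder or not mode:
        raise ValueError(f"unexpected workflow file name: {filename}")
    return folder


print(folder_from_workflow("login-mega-auto.yml"))  # mega
```

A file name that does not follow the pattern raises an error in this sketch, which is one way to catch a misnamed caller workflow before it updates the wrong folder.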

Disclaimer

The automated scripts rely wholly on virtual machines hosted by GitHub Actions to execute them. While every effort has been made to ensure timely execution, there have been instances of temporary disruption to GitHub services. Scheduled events are often delayed, especially during periods of high load on GitHub Actions workflow runs. The material embodied in this repository is provided to you "as-is" and without warranty of any kind, express, implied or otherwise, including without limitation, any warranty of fitness for a particular purpose. In no event shall the author be liable to you or anyone else for any direct, special, incidental, indirect or consequential damages of any kind, or any damages whatsoever, including without limitation, loss of profit, loss of use, savings or revenue, or the claims of third parties, whether or not the author has been advised of the possibility of such loss, however caused and on any theory of liability, arising out of or in connection with the possession, use or performance of this repository.