Official implementation of NeuroCLIP.

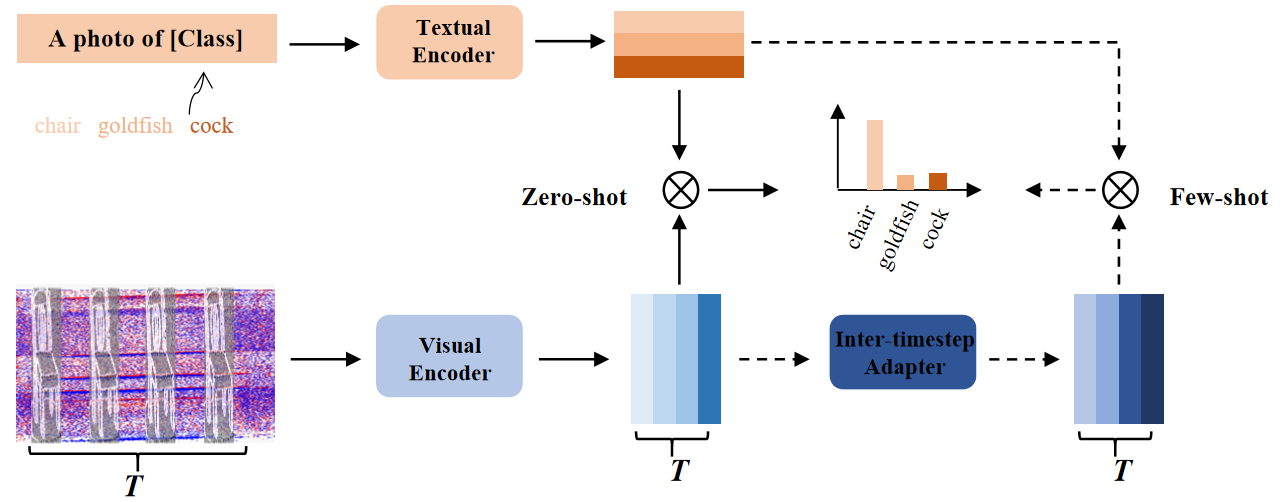

NeuroCLIP transfers CLIP's 2D pre-trained knowledge to the understanding of neuromorphic data.

Create a conda environment and install dependencies:

```bash
git clone https://github.com/yfguo91/NeuroCLIP.git
cd NeuroCLIP

conda create -n neuroclip python=3.7
conda activate neuroclip

pip install -r requirements.txt

# Install the corresponding versions of torch and torchvision
conda install pytorch torchvision cudatoolkit

# Install the modified Dassl library (no need to rebuild if the source code is changed)
cd Dassl3D/
python setup.py develop
cd ..
```

The dataset will be downloaded automatically.
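After installation, a quick check that the key packages import can save a failed first run. The snippet below is a minimal sketch and is not part of the repository: it assumes the modified Dassl3D library is importable as `dassl` (as upstream Dassl is), and the `PYTHON_BIN` variable and the `check_modules` / `cuda_available` functions are hypothetical names.

```bash
# Post-install sanity check (a sketch, not part of the NeuroCLIP repo).
# Meant to be sourced in the activated conda environment.
# Assumption: Dassl3D installs a package importable as "dassl".

PYTHON_BIN="${PYTHON_BIN:-python}"

# Return 0 if every named Python module imports; otherwise print the
# missing ones to stderr and return 1.
check_modules() {
  local missing=() mod
  for mod in "$@"; do
    if ! "$PYTHON_BIN" -c "import ${mod}" >/dev/null 2>&1; then
      missing+=("$mod")
    fi
  done
  if (( ${#missing[@]} > 0 )); then
    echo "missing Python modules: ${missing[*]}" >&2
    return 1
  fi
  return 0
}

# Print whether PyTorch can see a CUDA device ("True" or "False").
cuda_available() {
  "$PYTHON_BIN" -c "import torch; print(torch.cuda.is_available())"
}
```

For example, with the `neuroclip` environment active, source the snippet and run `check_modules torch torchvision dassl && cuda_available`.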

Edit the run settings in `scripts/zeros.sh` (e.g., the config file and output directory), then run zero-shot NeuroCLIP:

```bash
cd scripts
bash zeros.sh
```

To post-search the best view weights, add `--post-search` and adjust the search parameters in the config file. A longer search can yield better results at the cost of more running time.
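One way to make the post-search opt-in, instead of editing the command each time, is to build the argument list in a Bash array. This is a hypothetical sketch: it assumes your copy of `zeros.sh` ends in a single `python` command, and the `zero_shot_args` function and `POST_SEARCH` variable are illustrative names, not part of the repository. Only the `--post-search` flag comes from this README.

```bash
# Hypothetical helper for a script like zeros.sh.
# Usage: zero_shot_args <0|1> <existing arguments...>
# Prints the arguments one per line, appending --post-search when the
# first argument is "1".
zero_shot_args() {
  local post_search="$1"; shift
  local args=("$@")
  if [[ "$post_search" == "1" ]]; then
    args+=(--post-search)
  fi
  printf '%s\n' "${args[@]}"
}
```

Inside the script you could then read the result with `mapfile -t ARGS < <(zero_shot_args "${POST_SEARCH:-0}" <existing arguments>)` (Bash 4+) and pass `"${ARGS[@]}"` to the existing `python` command, so that `POST_SEARCH=1 bash zeros.sh` enables the search. Keeping arguments in an array avoids word-splitting problems with paths that contain spaces.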

Set the shot number and other settings in `scripts/fews.sh`, then run NeuroCLIP with the inter-timestep adapter:

```bash
cd scripts
bash fews.sh
```

`--post-search` is also optional here.
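To compare several shot numbers, a small dry-run loop can print one command per setting. This sketch is hypothetical: it assumes you have edited `fews.sh` to read `SHOTS` and `OUTPUT_DIR` from the environment (e.g., `SHOTS="${SHOTS:-16}"`); neither variable name comes from the repository, and `print_shot_sweep` is an illustrative function.

```bash
# Hypothetical dry-run sweep over shot numbers for fews.sh.
# Assumption: fews.sh reads SHOTS and OUTPUT_DIR from the environment.
# Usage: print_shot_sweep <base output dir> <shot> [<shot> ...]
# Prints one command per shot setting; nothing is executed.
print_shot_sweep() {
  local base_out="$1"; shift
  local shots
  for shots in "$@"; do
    printf 'SHOTS=%s OUTPUT_DIR=%s/%sshot bash fews.sh\n' \
      "$shots" "$base_out" "$shots"
  done
}
```

From `scripts/`, inspect the output of `print_shot_sweep output/fewshot 1 2 4 8 16` first, then pipe it to `bash` to run the settings one after another. Printing rather than executing lets you review each command and its output directory before spending GPU time.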

This repo benefits from CLIP, SimpleView, and the excellent codebases Dassl and PointCLIP. Thanks for their wonderful work.

```bibtex
@article{guo2023neuroclip,
  title={NeuroCLIP: Neuromorphic Data Understanding by CLIP and SNN},
  author={Guo, Yufei and Chen, Yuanpei},
  journal={arXiv preprint arXiv:2306.12073},
  year={2023}
}
```

If you have any questions about this project, please feel free to contact yfguo@pku.edu.cn.