Official PyTorch code for Variational Structured Attention Networks for Deep Visual Representation Learning.

Guanglei Yang, Paolo Rota, Xavier Alameda-Pineda, Dan Xu, Mingli Ding, Elisa Ricci. Accepted at IEEE Transactions on Image Processing (TIP).

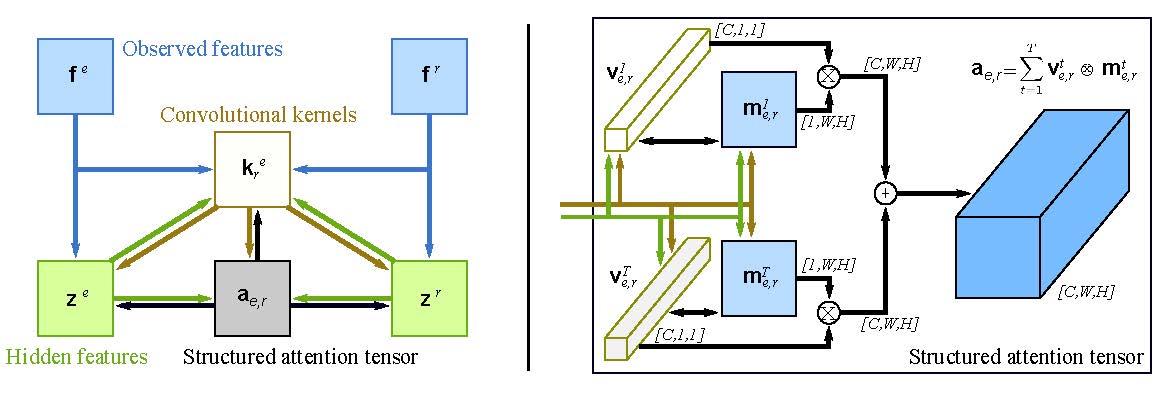

Framework:

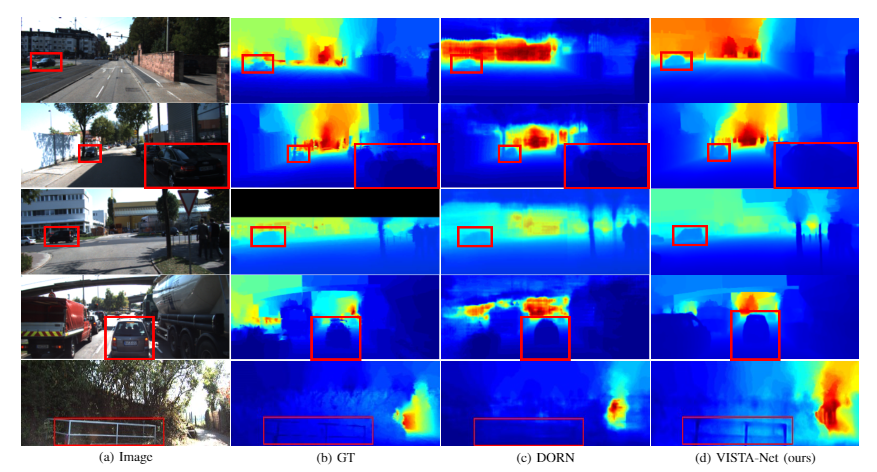

Visualization results:


git clone https://github.com/ygjwd12345/VISTA-Net.git

cd VISTA-Net/

This code requires PyTorch 1.0+ and Python 3.6. Install the dependencies by running:

pip install -r requirements.txt

You also need to install pytorch-encoding and detail-api.
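Before training, it can help to confirm that the interpreter and PyTorch versions match the requirement stated above. The following is a minimal sketch, not part of the repository: the helper names `parse_version`, `meets_minimum`, and `check_environment` are hypothetical, and it assumes that "Python 3.6" is meant as a minimum version.

```python
import sys


def parse_version(text):
    """Turn a version string such as '1.7.1+cu110' into a tuple like (1, 7, 1)."""
    parts = []
    # Drop any local build suffix ('+cu110'), then read the leading digits of each piece.
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(found, minimum):
    """Return True if version string `found` is at least `minimum`."""
    return parse_version(found) >= parse_version(minimum)


def check_environment():
    """Collect human-readable problems; an empty list means the check passed."""
    problems = []
    python_version = "{}.{}".format(*sys.version_info[:2])
    # Assumption: 3.6 is treated as a lower bound, not an exact requirement.
    if not meets_minimum(python_version, "3.6"):
        problems.append("Python 3.6 or newer is required, found " + python_version)
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch.__version__, "1.0"):
            problems.append("PyTorch 1.0+ is required, found " + torch.__version__)
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

Running the script prints nothing when both versions satisfy the requirement.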

mkdir -p pytorch/dataset/nyu_depth_v2

python utils/download_from_gdrive.py 1AysroWpfISmm-yRFGBgFTrLy6FjQwvwP pytorch/dataset/nyu_depth_v2/sync.zip

cd pytorch/dataset/nyu_depth_v2

unzip sync.zip

Test set: go to the utils directory and run:

wget http://horatio.cs.nyu.edu/mit/silberman/nyu_depth_v2/nyu_depth_v2_labeled.mat

python extract_official_train_test_set_from_mat.py nyu_depth_v2_labeled.mat splits.mat ../pytorch/dataset/nyu_depth_v2/official_splits/

cd dataset

mkdir kitti_dataset

cd kitti_dataset

### image: move kitti_archives_to_download.txt into kitti_dataset first

wget -i kitti_archives_to_download.txt

### label

wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_depth_annotated.zip

unzip data_depth_annotated.zip

cd train

mv * ../

cd ..

rm -r train

cd val

mv * ../

cd ..

rm -r val

rm data_depth_annotated.zip

The first way is to run ./scripts/prepare_pcontext.py.

The second way is to download the data directly from Google Drive (about 2.42 GB).

The third way also downloads and preprocesses the data automatically, but it takes a very long time.

Run ./scripts/prepare_pascal.py.

Run ./scripts/prepare_citys.py.
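For the KITTI label archive, the shell steps that move the contents of train and val up one level and then remove the emptied folders can also be done from Python, which may be convenient on systems without a POSIX shell. This is a sketch under that assumption; `flatten_split` is a hypothetical helper, not a function shipped with the repository. Unlike `mv *`, it also moves hidden entries and refuses to overwrite an existing target.

```python
import shutil
from pathlib import Path


def flatten_split(root, split):
    """Move every entry of root/split into root, then remove the empty root/split.

    Returns the number of entries moved (0 if the split folder does not exist).
    """
    root = Path(root)
    split_dir = root / split
    if not split_dir.is_dir():
        return 0
    moved = 0
    for entry in sorted(split_dir.iterdir()):
        target = root / entry.name
        if target.exists():
            # Stop rather than silently overwrite or merge existing data.
            raise FileExistsError("refusing to overwrite " + str(target))
        shutil.move(str(entry), str(target))
        moved += 1
    split_dir.rmdir()  # the folder is empty at this point
    return moved


if __name__ == "__main__":
    # Run from inside kitti_dataset after unzipping data_depth_annotated.zip.
    for name in ("train", "val"):
        print(name, flatten_split(".", name))
```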

Train

CUDA_VISIBLE_DEVICES=0,1,2,3 python bts_main.py arguments_train_nyu.txt

CUDA_VISIBLE_DEVICES=0,1,2,3 python bts_main.py arguments_train_eigen.txt

Test

CUDA_VISIBLE_DEVICES=1 python bts_test.py arguments_test_nyu.txt

python ../utils/eval_with_pngs.py --pred_path vis_att_bts_nyu_v2_pytorch_att/raw/ --gt_path ../../dataset/nyu_depth_v2/official_splits/test/ --dataset nyu --min_depth_eval 1e-3 --max_depth_eval 10 --eigen_crop
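The `--min_depth_eval` and `--max_depth_eval` flags in the command above bound the depth range used for evaluation. The sketch below illustrates how such bounds are commonly applied when computing standard monocular depth metrics (absolute relative error, RMSE, and the δ < 1.25 accuracy). It follows the usual convention in the depth estimation literature and is not confirmed to match what `eval_with_pngs.py` does internally; `depth_metrics` is a hypothetical name and the inputs are flat lists of depths in metres.

```python
import math


def depth_metrics(pred, gt, min_depth=1e-3, max_depth=10.0):
    """Compute abs_rel, rmse and delta1 over pixels whose ground truth is in range."""
    pairs = []
    for p, g in zip(pred, gt):
        # Keep only pixels with valid ground truth inside the evaluation range.
        if min_depth < g < max_depth:
            # Clamp the prediction to the same range before comparing.
            p = min(max(p, min_depth), max_depth)
            pairs.append((p, g))
    if not pairs:
        raise ValueError("no valid ground-truth pixels in the evaluation range")
    n = len(pairs)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # delta1: fraction of pixels whose ratio to ground truth is below 1.25.
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


if __name__ == "__main__":
    print(depth_metrics([2.0, 4.0], [2.0, 5.0]))
```

For KITTI the same sketch would be called with `max_depth=80.0`, mirroring the `--max_depth_eval 80` flag.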

CUDA_VISIBLE_DEVICES=1 python bts_test.py arguments_test_eigen.txt

python ../utils/eval_with_pngs.py --pred_path vis_att_bts_eigen_v2_pytorch_att/raw/ --gt_path ./dataset/kitti_dataset/ --dataset kitti --min_depth_eval 1e-3 --max_depth_eval 80 --do_kb_crop --garg_crop

For multi-GPU training, we recommend at least 4 GPUs with at least 12 GB of memory each, or at least 2 GPUs with at least 24 GB each.
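The recommendation above can be checked programmatically before launching a long run. The following is a rough sketch, not part of the repository: `meets_recommendation` and `visible_gpu_memory_gb` are hypothetical helpers, and memory is reported in decimal gigabytes (bytes / 1e9) on the assumption that this matches how cards are marketed as "12 GB" or "24 GB".

```python
def meets_recommendation(gpu_memory_gb):
    """Return True for >= 4 GPUs with >= 12 GB each, or >= 2 GPUs with >= 24 GB each."""
    with_12 = sum(1 for mem in gpu_memory_gb if mem >= 12)
    with_24 = sum(1 for mem in gpu_memory_gb if mem >= 24)
    return with_12 >= 4 or with_24 >= 2


def visible_gpu_memory_gb():
    """List the total memory (decimal GB) of each GPU visible to PyTorch."""
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    return [
        torch.cuda.get_device_properties(index).total_memory / 1e9
        for index in range(torch.cuda.device_count())
    ]


if __name__ == "__main__":
    memory = visible_gpu_memory_gb()
    print("GPUs (GB):", [round(mem, 1) for mem in memory])
    print("Meets recommendation:", meets_recommendation(memory))
```

Only devices exposed through `CUDA_VISIBLE_DEVICES` are counted, so the check reflects the same set of GPUs the training command would use.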

Train

CUDA_VISIBLE_DEVICES=0,1,2,3 python train.py --dataset pcontext \
--model encnet --attentiongraph --aux --se-loss \
--backbone resnet101 --checkname attentiongraph_res101_pcontext_v2

Test

python test.py --dataset pcontext \
--model encnet --attentiongraph --aux --se-loss \
--backbone resnet101 --resume ./pcontext/attentiongraph_res101_pcontext_v2/model_best.pth.tar --split val --mode testval --ms

CUDA_VISIBLE_DEVICES=0,1,2 python -m torch.distributed.launch train_surface_normal.py \
--log_folder './log/training_release/' --operation 'train_robust_acos_loss' \
--learning_rate 0.0001 --batch_size 32 --net_architecture 'd_fpn_resnext101' \
--train_dataset 'scannet_standard' --test_dataset 'scannet_standard' \
--test_dataset 'nyud' --val_dataset 'scannet_standard'

Please consider citing the following papers if the code is helpful in your research work:

@article{yang2021variational,
  title={Variational Structured Attention Networks for Deep Visual Representation Learning},
  author={Yang, Guanglei and Rota, Paolo and Alameda-Pineda, Xavier and Xu, Dan and Ding, Mingli and Ricci, Elisa},
  journal={IEEE Transactions on Image Processing (TIP)},
  year={2021}
}

@article{xu2020probabilistic,
  title={Probabilistic graph attention network with conditional kernels for pixel-wise prediction},
  author={Xu, Dan and Alameda-Pineda, Xavier and Ouyang, Wanli and Ricci, Elisa and Wang, Xiaogang and Sebe, Nicu},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},
  year={2020}
}