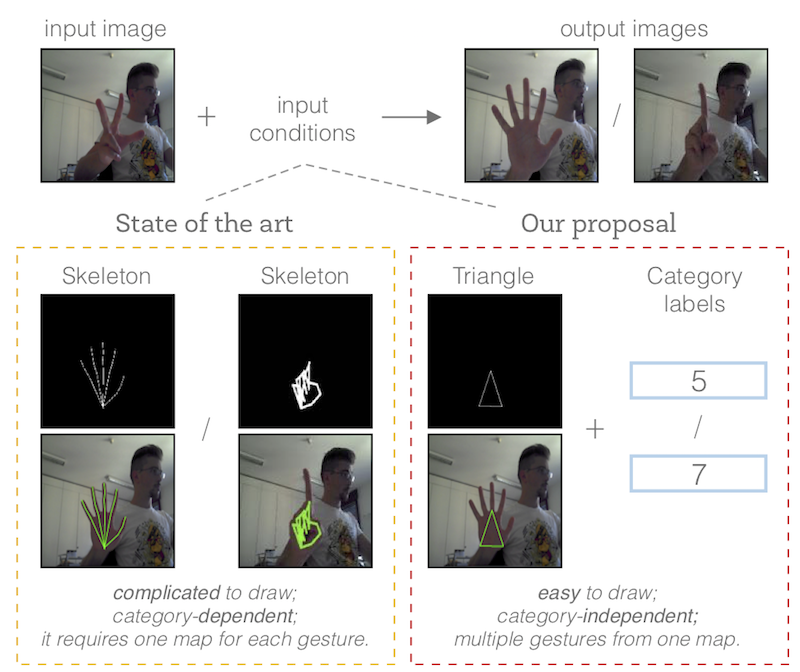

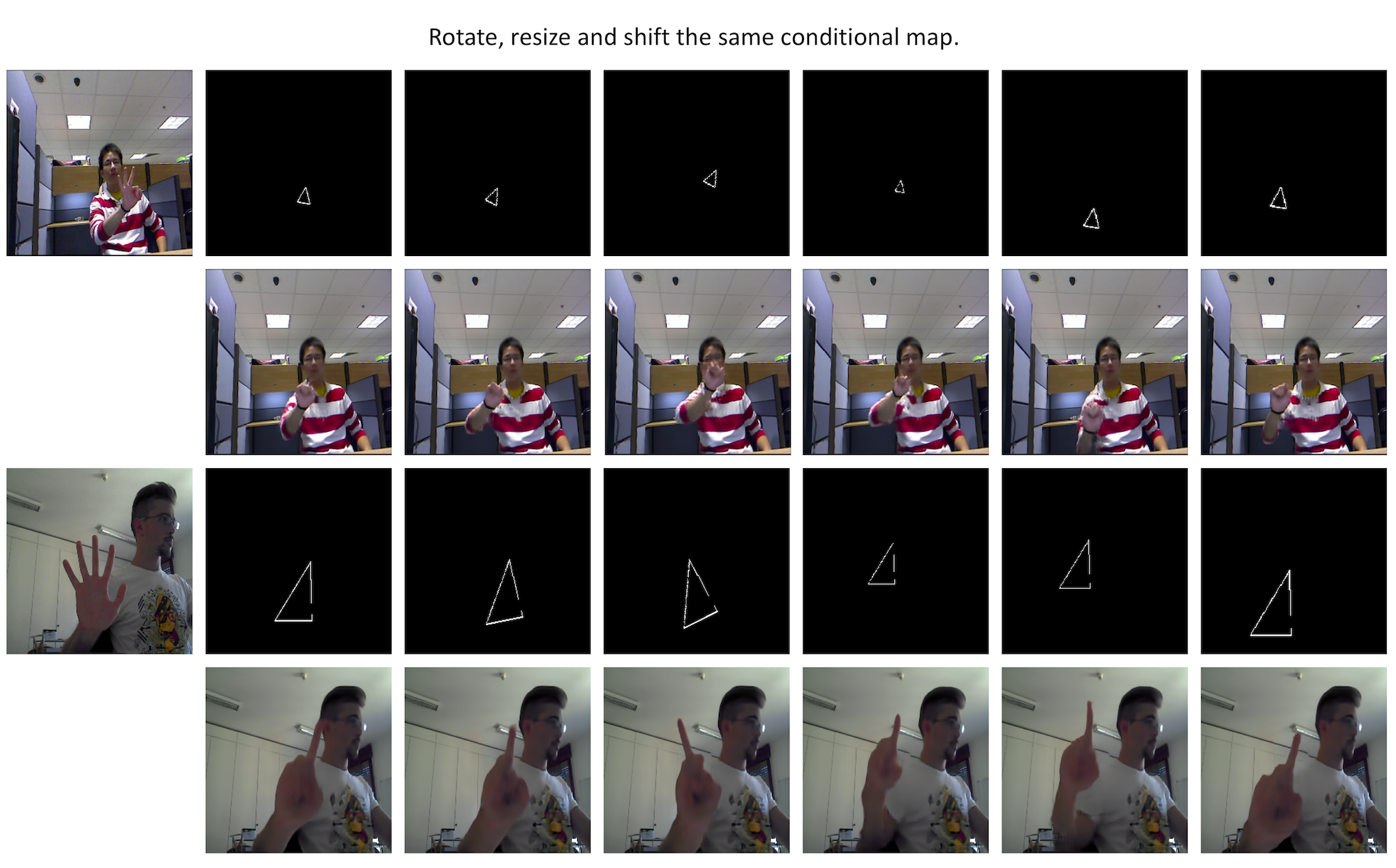

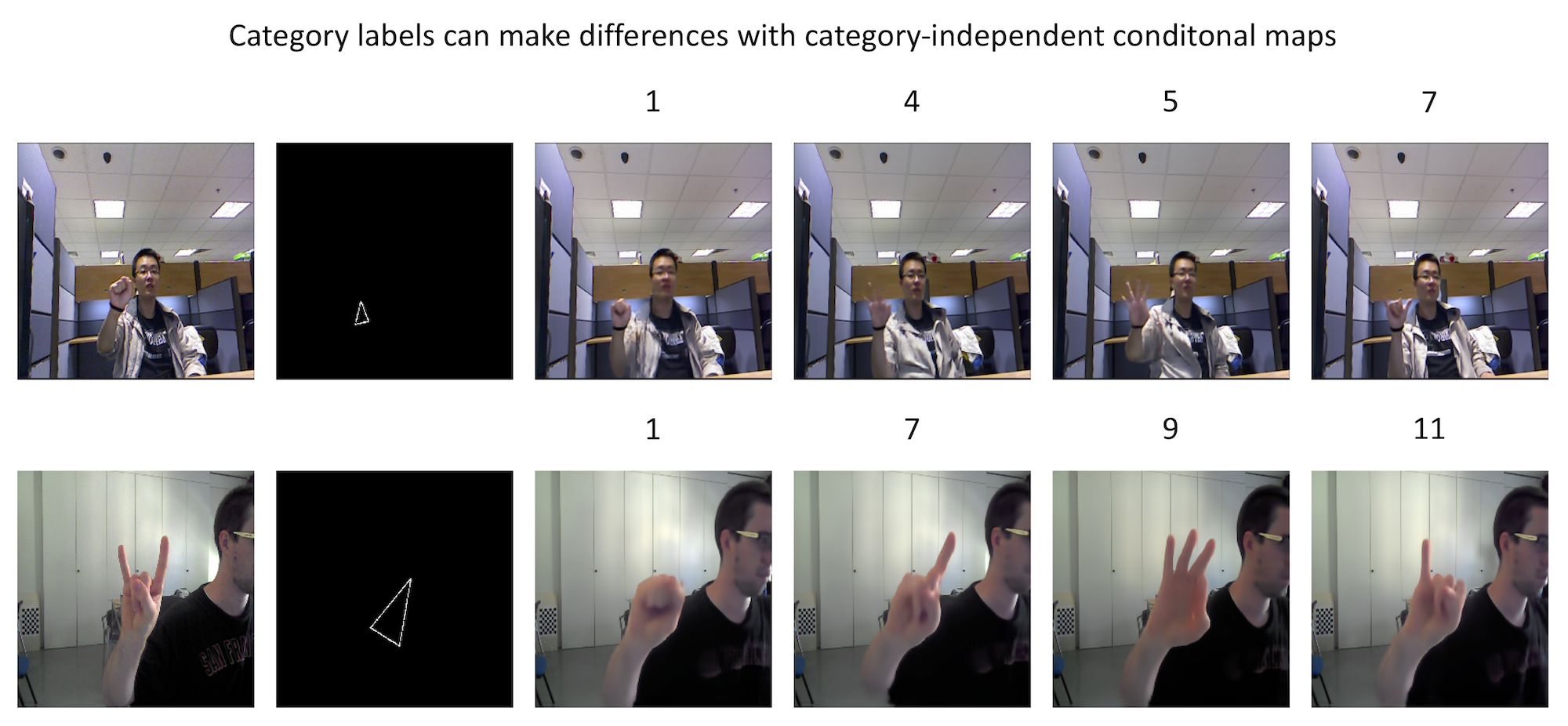

A new gesture-to-gesture translation framework, described in the paper "Gesture-to-Gesture Translation in the Wild via Category-Independent Conditional Maps", published at the ACM International Conference on Multimedia, 2019.

- Original Dataset

We provide a user-friendly setup via Conda. Create a new Conda environment with the following command:

conda env create -f environment.yml
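After creation, the environment still has to be activated under the name declared in `environment.yml`. That name is not stated here, so the sketch below reads it from the file instead of guessing it. The helper `conda_env_name` is a hypothetical name, not part of this repository, and it assumes the file contains a top-level line of the form `name: <env_name>`.

```shell
#!/bin/sh
# Hypothetical helper (not part of this repository).
# Prints the environment name declared in a Conda environment file.
# Assumes a top-level line of the form "name: <env_name>".
conda_env_name() {
    # Strip the "name:" prefix from matching lines and keep the first match.
    sed -n 's/^name:[[:space:]]*//p' "$1" | head -n 1
}

# Usage (not run here):
#   conda env create -f environment.yml
#   conda activate "$(conda_env_name environment.yml)"
```

If the `name:` value is quoted or followed by a comment, the helper returns it unchanged, so in that case it is simpler to read the name by eye.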

1. Download the datasets and copy them into ./datasets

2. Modify the scripts to train/test:

- Training

sh ./scripts/train_trianglegan_ntu.sh <gpu_id>

sh ./scripts/train_trianglegan_senz3d.sh <gpu_id>

- Testing

sh ./scripts/test_trianglegan_ntu.sh <gpu_id>

sh ./scripts/test_trianglegan_senz3d.sh <gpu_id>

3. The pretrained model is saved at ./checkpoints/{model_name}. Check here for all the available TriangleGAN models.

4. We also provide an implementation of GestureGAN (ACM MM 2018): [paper]|[code].

sh ./scripts/train_gesturegan_ntu.sh <gpu_id>

sh ./scripts/train_gesturegan_senz3d.sh <gpu_id>
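The launcher scripts listed in the steps above share the naming pattern `<mode>_<model>_<dataset>.sh`. As a minimal sketch, assuming that pattern holds, a small wrapper can build the path and refuse to start when the script is missing. The function names `script_path` and `run_stage` are hypothetical and are not part of this repository.

```shell
#!/bin/sh
# Hypothetical helpers (not part of this repository).

# Build the path of a launcher script from its three name parts.
script_path() {
    mode=$1; model=$2; dataset=$3
    printf './scripts/%s_%s_%s.sh\n' "$mode" "$model" "$dataset"
}

# Run one launcher on the given GPU; fail early if the script does not exist.
run_stage() {
    script=$(script_path "$1" "$2" "$3")
    gpu_id=$4
    if [ ! -f "$script" ]; then
        echo "missing script: $script" >&2
        return 1
    fi
    sh "$script" "$gpu_id"
}

# Usage (not run here): train, then test, TriangleGAN on NTU using GPU 0.
#   run_stage train trianglegan ntu 0 && run_stage test trianglegan ntu 0
```

Checking for the file first turns a misspelled model or dataset name into a clear error message instead of a failure from `sh` itself.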

This code is based on pytorch-CycleGAN-and-pix2pix. Thanks to the contributors of that project.

- Pose Guided Person Image Generation, NIPS 2017, [TensorFlow]

- GANimation: Anatomically-aware Facial Animation from a Single Image, ECCV 2018, [PyTorch]

- StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation, CVPR 2018, [PyTorch]

- GestureGAN for Hand Gesture-to-Gesture Translation in the Wild, ACM MM 2018, [PyTorch]

- Animating Arbitrary Objects via Deep Motion Transfer, CVPR 2019, [PyTorch]

We recommend evaluating the compared models mainly with this repo: GAN-Metrics

If you use our datasets or code, please cite our paper:

@inproceedings{liu2019gesture,

title={Gesture-to-gesture translation in the wild via category-independent conditional maps},

author={Liu, Yahui and De Nadai, Marco and Zen, Gloria and Sebe, Nicu and Lepri, Bruno},

booktitle={Proceedings of the 27th ACM International Conference on Multimedia},

pages={1916--1924},

year={2019}

}

If you have any questions, please feel free to contact me (yahui.liu AT unitn.it).