This is the original PyTorch implementation of FPPformer from the following paper: [Take an Irregular Route: Enhance the Decoder of Time-Series Forecasting Transformer] (accepted by IEEE IoT).

FPPformer comes in two versions. The first, the default, uses a channel-independent multivariate forecasting formulation. The second, requested by one of the reviewers, adds cross-variable modules. The two versions are selected with the argument '--Cross' in main.py.

Starting from this work, we provide the response letters written during the revision. If you have any questions, you may first consult the response letters for possible answers.

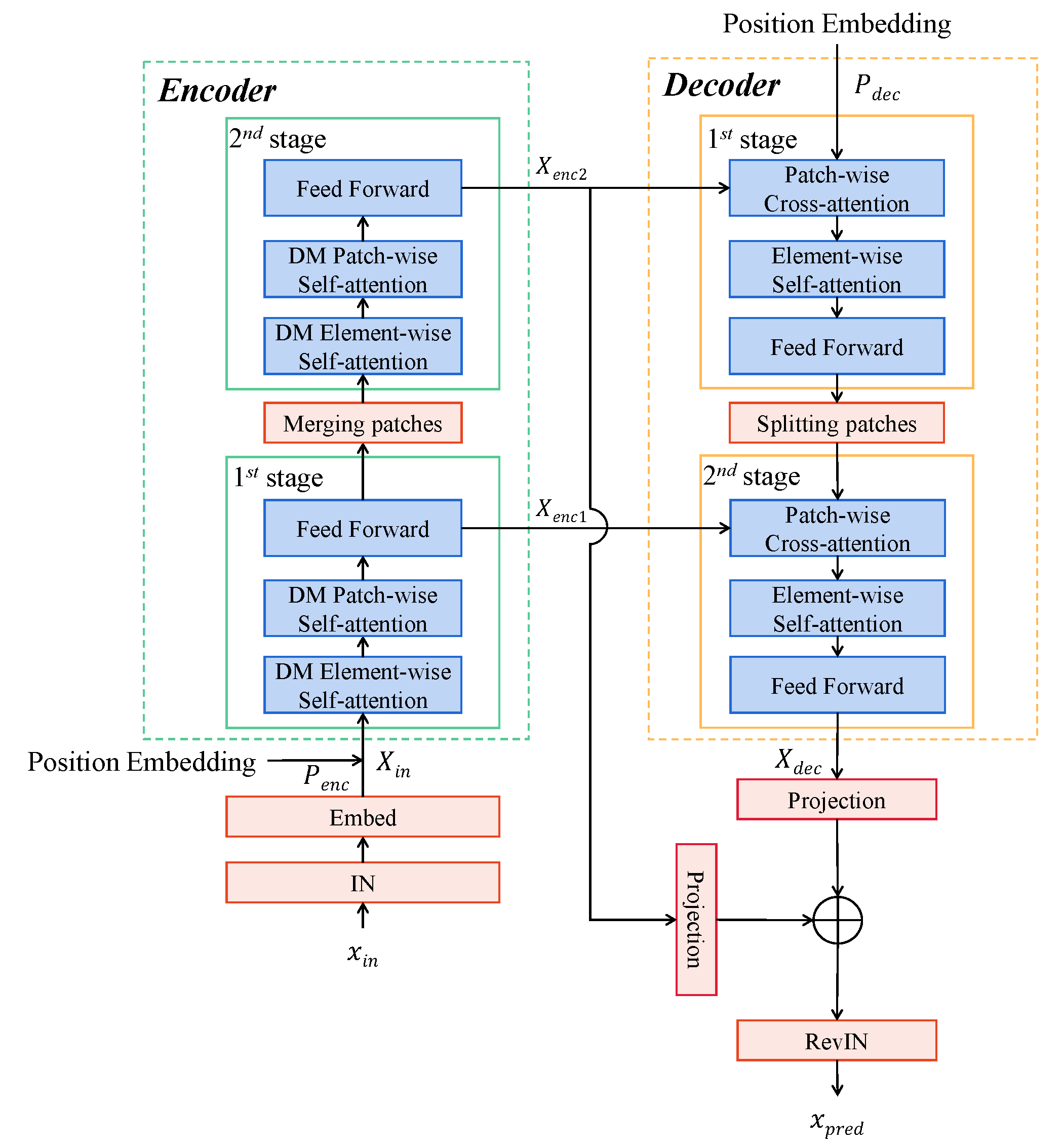

The overview of our proposed FPPformer is illustrated in Figure 1 and its major enhancement on vanilla TSFT concentrates on addressing the preceding two problems of decoder.

Figure 1. An overview of FPPformer’s hierarchical architecture with two-stage encoder and two-stage decoder. Different from the vanilla one, the encoder owns bottom-up structure while the decoder owns top-down structure. Note that the direction of the propagation flow in decoder is opposite to the vanilla one to highlight the top-down structure. 'DM' in the stages of encoder means 'Diagonal-Masked'.

- Python 3.8.8

- matplotlib == 3.3.4

- numpy == 1.20.1

- pandas == 1.2.4

- scipy == 1.9.0

- scikit_learn == 0.24.1

- torch == 1.11.0

Dependencies can be installed using the following command:

pip install -r requirements.txt

The ETT, ECL, Traffic and weather datasets, the Solar dataset and the M4 dataset were each acquired from the download links listed with their folder trees below.

After acquiring the raw data of all datasets, please place each of them in its corresponding folder under ./FPPformer/data.

From the link below (its folder tree is shown after this paragraph), we copy the folders ./ETT-data (ETT), ./electricity (ECL), ./traffic (Traffic) and ./weather (weather) into the folder ./data and rename them to ./ETT, ./ECL, ./Traffic and ./weather respectively. We then rename the data files of ECL and Traffic from electricity.csv and traffic.csv to ECL.csv and Traffic.csv, and rename their last variable from OT back to its original name: MT_321 for ECL and Sensor_861 for Traffic.

The folder tree in https://drive.google.com/drive/folders/1ZOYpTUa82_jCcxIdTmyr0LXQfvaM9vIy?usp=sharing:

|-autoformer

| |-ETT-data

| | |-ETTh1.csv

| | |-ETTh2.csv

| | |-ETTm1.csv

| | |-ETTm2.csv

| |

| |-electricity

| | |-electricity.csv

| |

| |-traffic

| | |-traffic.csv

| |

| |-weather

| | |-weather.csv
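The copy-and-rename steps described above can be scripted. The following is a minimal sketch using only the Python standard library, not part of the repository: the function names `arrange` and `rename_last_column` and the two path arguments are hypothetical, and it assumes the last header field of electricity.csv and traffic.csv is literally `OT`.

```python
import csv
import shutil
from pathlib import Path

# Downloaded folder -> (target folder under ./data, {old file name: new file name}).
FOLDER_MAP = {
    "ETT-data": ("ETT", {}),
    "electricity": ("ECL", {"electricity.csv": "ECL.csv"}),
    "traffic": ("Traffic", {"traffic.csv": "Traffic.csv"}),
    "weather": ("weather", {}),
}
# Original name of the last variable, which the downloaded files label "OT".
LAST_COLUMN = {"ECL.csv": "MT_321", "Traffic.csv": "Sensor_861"}


def rename_last_column(csv_path: Path, new_name: str) -> None:
    """Rewrite csv_path so that a trailing 'OT' header field becomes new_name."""
    tmp_path = csv_path.with_suffix(".tmp")
    with csv_path.open(newline="") as src, tmp_path.open("w", newline="") as dst:
        reader = csv.reader(src)
        writer = csv.writer(dst, lineterminator="\n")
        header = next(reader, None)
        if header:
            if header[-1] == "OT":
                header[-1] = new_name
            writer.writerow(header)
        writer.writerows(reader)  # data rows are copied unchanged
    tmp_path.replace(csv_path)


def arrange(download_root: Path, data_root: Path) -> None:
    """Copy the downloaded folders into data_root under the new names."""
    for src_name, (dst_name, file_renames) in FOLDER_MAP.items():
        src_dir = download_root / src_name
        if not src_dir.is_dir():
            continue  # skip datasets that were not downloaded
        dst_dir = data_root / dst_name
        shutil.copytree(src_dir, dst_dir, dirs_exist_ok=True)  # Python >= 3.8
        for old, new in file_renames.items():
            if (dst_dir / old).exists():
                (dst_dir / old).replace(dst_dir / new)
            if (dst_dir / new).exists():
                rename_last_column(dst_dir / new, LAST_COLUMN[new])
```

For example, `arrange(Path("./autoformer"), Path("./FPPformer/data"))` would process a download unpacked into a local `./autoformer` folder; both paths are placeholders to adapt.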

For Solar, we copy the folder ./financial from the link below (its folder tree is shown after this paragraph) into the folder ./data and rename it to ./Solar.

The folder tree in https://drive.google.com/drive/folders/1Gv1MXjLo5bLGep4bsqDyaNMI2oQC9GH2?usp=sharing:

|-dataset

| |-financial

| | |-solar_AL.txt

As for the M4 dataset, we place the folders ./Dataset and ./Point Forecasts of M4 (the folder tree in the link is shown below) into the folder ./data/M4. Moreover, we extract the archive ./Point Forecasts/submission-Naive2.rar in place, which yields ./Point Forecasts/submission-Naive2.csv.

The folder tree in https://drive.google.com/drive/folders/1Gv1MXjLo5bLGep4bsqDyaNMI2oQC9GH2?usp=sharing:

|-M4-methods

| |-Dataset

| | |-Test

| | | |-Daily-test.csv

| | | |-Hourly-test.csv

| | | |-Monthly-test.csv

| | | |-Quarterly-test.csv

| | | |-Weekly-test.csv

| | | |-Yearly-test.csv

| | |-Train

| | | |-Daily-train.csv

| | | |-Hourly-train.csv

| | | |-Monthly-train.csv

| | | |-Quarterly-train.csv

| | | |-Weekly-train.csv

| | | |-Yearly-train.csv

| | |-M4-info.csv

| |-Point Forecasts

| | |-submission-Naive2.rar

You will then obtain the following folder tree:

|-data

| |-ECL

| | |-ECL.csv

| |

| |-ETT

| | |-ETTh1.csv

| | |-ETTh2.csv

| | |-ETTm1.csv

| | |-ETTm2.csv

| |

| |-M4

| | |-Dataset

| | | |-Test

| | | | |-Daily-test.csv

| | | | |-Hourly-test.csv

| | | | |-Monthly-test.csv

| | | | |-Quarterly-test.csv

| | | | |-Weekly-test.csv

| | | | |-Yearly-test.csv

| | | |-Train

| | | | |-Daily-train.csv

| | | | |-Hourly-train.csv

| | | | |-Monthly-train.csv

| | | | |-Quarterly-train.csv

| | | | |-Weekly-train.csv

| | | | |-Yearly-train.csv

| | | |-M4-info.csv

| | |-Point Forecasts

| | | |-submission-Naive2.csv

| |

| |-Solar

| | |-solar_AL.txt

| |

| |-Traffic

| | |-Traffic.csv

| |

| |-weather

| | |-weather.csv
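Before launching experiments, it can help to confirm that the data folder matches the tree above. The sketch below is one possible check, not part of the repository; the helper name `missing_files` is hypothetical, and the file list is transcribed from the tree above.

```python
from pathlib import Path

M4_FREQUENCIES = ["Daily", "Hourly", "Monthly", "Quarterly", "Weekly", "Yearly"]

# Relative paths expected under ./data, transcribed from the folder tree.
EXPECTED_FILES = (
    ["ECL/ECL.csv"]
    + [f"ETT/ETT{name}.csv" for name in ("h1", "h2", "m1", "m2")]
    + [f"M4/Dataset/Test/{freq}-test.csv" for freq in M4_FREQUENCIES]
    + [f"M4/Dataset/Train/{freq}-train.csv" for freq in M4_FREQUENCIES]
    + [
        "M4/Dataset/M4-info.csv",
        "M4/Point Forecasts/submission-Naive2.csv",
        "Solar/solar_AL.txt",
        "Traffic/Traffic.csv",
        "weather/weather.csv",
    ]
)


def missing_files(data_root):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    for rel in missing_files("./data"):
        print("missing:", rel)
```

An empty result from `missing_files("./data")` means every file listed in the tree is in place.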

We select six typical deep time series forecasting models, i.e., Triformer, Crossformer, Scaleformer, PatchTST, FiLM and TSMixer, as baselines in the multivariate/univariate forecasting experiments. Their source code is available at the following links:

| Baseline | Source Code |
|---|---|
| Triformer | https://github.com/razvanc92/triformer |
| Crossformer | https://github.com/Thinklab-SJTU/Crossformer |
| Scaleformer | https://github.com/BorealisAI/scaleformer |
| PatchTST | https://github.com/yuqinie98/PatchTST |
| FiLM | https://github.com/tianzhou2011/FiLM |
| TSMixer | https://github.com/google-research/google-research/tree/master/tsmixer |

Moreover, the default experiment settings/parameters of the six aforementioned baselines are given below:

| Baseline | Setting/parameter name | Description | Default mechanism/value |
|---|---|---|---|
| Triformer | num_nodes | The number of nodes | 4 |
| | patch_sizes | The patch size | 4 |
| | d_model | The number of hidden dimensions | 32 |
| | mem_dim | The dimension of memory vector | 5 |
| | e_layers | The number of encoder layers | 2 |
| | d_layers | The number of decoder layers | 1 |
| Crossformer | seg_len | Segment length (L_seg) | 6 |
| | d_model | The number of hidden dimensions | 64 |
| | d_ff | Dimension of fcn | 128 |
| | n_heads | The number of heads in multi-head attention mechanism | 2 |
| | e_layers | The number of encoder layers | 2 |
| Scaleformer | Basic model | The basic model | FEDformer-f |
| | scales | Scales in multi-scale | [16, 8, 4, 2, 1] |
| | scale_factor | Scale factor for upsample | 2 |
| | mode_select | The mode selection method | random |
| | modes | The number of modes | 2 |
| | L | Ignore level | 3 |
| PatchTST | patch_len | Patch length | 16 |
| | stride | The stride length | 8 |
| | n_head | The number of heads in multi-head attention mechanism | 4 |
| | d_model | The hidden feature dimension | 16 |
| | d_ff | Dimension of fcn | 128 |
| FiLM | d_model | The number of hidden dimensions | 512 |
| | d_ff | Dimension of fcn | 2048 |
| | n_heads | The number of heads in multi-head attention mechanism | 8 |
| | e_layers | The number of encoder layers | 2 |
| | d_layers | The number of decoder layers | 1 |
| | modes1 | The number of Fourier modes to multiply | 32 |
| TSMixer | n_block | The number of blocks in the deep architecture | 2 |
| | d_model | The hidden feature dimension | 64 |

Commands for training and testing FPPformer on all datasets are in ./scripts/Main.sh.

For more parameter information, please refer to main.py.

We provide a complete command for training and testing FPPformer:

For multivariate forecasting:

python -u main.py --data <data> --features <features> --input_len <input_len> --pred_len <pred_len> --encoder_layer <encoder_layer> --patch_size <patch_size> --d_model <d_model> --Cross <Cross> --learning_rate <learning_rate> --dropout <dropout> --batch_size <batch_size> --train_epochs <train_epochs> --patience <patience> --itr <itr> --train

For univariate forecasting:

python -u main_M4.py --data <data> --freq <freq> --input_len <input_len> --pred_len <pred_len> --encoder_layer <encoder_layer> --patch_size <patch_size> --d_model <d_model> --learning_rate <learning_rate> --dropout <dropout> --batch_size <batch_size> --train_epochs <train_epochs> --patience <patience> --itr <itr> --train

Here we provide a more detailed and complete command description for training and testing the model:

| Parameter name | Description of parameter |
|---|---|
| data | The dataset name |
| root_path | The root path of the data file |
| data_path | The data file name |
| features | The forecasting task. This can be set to M or S (M: multivariate forecasting, S: univariate forecasting) |
| target | Target feature in S task |
| freq | Sampling frequency for M4 sub-datasets |
| checkpoints | Location of model checkpoints |
| input_len | Input sequence length |
| pred_len | Prediction sequence length |
| enc_in | Input size |
| dec_out | Output size |
| d_model | Dimension of model |
| representation | Representation dims in the end of the intra-reconstruction phase |
| dropout | Dropout |
| encoder_layer | The number of encoder layers |
| patch_size | The size of each patch |
| Cross | Whether to use cross-variable attention |
| itr | Number of times each experiment is repeated |
| train_epochs | Train epochs of the second stage |
| batch_size | The batch size of training input data in the second stage |
| patience | Early stopping patience |
| learning_rate | Optimizer learning rate |
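When sweeping over several settings, the command templates above can be assembled programmatically. The sketch below is illustrative only: `build_command` is a hypothetical helper, and every value in `example_params` is a placeholder rather than a recommended or default setting (see ./scripts/Main.sh for the settings actually used). `--Cross` is left out because the values it accepts are defined in main.py.

```python
import shlex


def build_command(script, params, train=True):
    """Build an argument list of the form: python -u <script> --name value ... [--train]."""
    cmd = ["python", "-u", script]
    for name, value in params.items():
        cmd += [f"--{name}", str(value)]
    if train:
        cmd.append("--train")
    return cmd


# Placeholder values for illustration only; they are assumptions, not defaults.
example_params = {
    "data": "ETTh1",
    "features": "M",
    "input_len": 96,
    "pred_len": 96,
    "encoder_layer": 3,
    "patch_size": 6,
    "d_model": 32,
    "learning_rate": 0.0001,
    "dropout": 0.1,
    "batch_size": 16,
    "train_epochs": 20,
    "patience": 1,
    "itr": 1,
}

if __name__ == "__main__":
    cmd = build_command("main.py", example_params)
    print(shlex.join(cmd))  # shell-quoted command line, ready to paste
    # To launch it from Python instead:
    # import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list rather than a single string avoids shell-quoting problems if a value, such as a path, contains spaces.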

The experiment parameters for each dataset are listed in the Main.sh file in the directory ./scripts/. You can use these parameters to run the experiments, and you can also adjust them to obtain better MSE and MAE results or to draw better prediction figures. We provide the commands for obtaining the results of FPPformer-Cross in the file ./scripts/Cross.sh, those of FPPformer with longer input sequence lengths in the file ./scripts/LongInput.sh, and those of FPPformer with different numbers of encoder layers in the file ./scripts/ParaSen.sh.
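For reference, the two metrics named above are the standard mean squared error and mean absolute error. The following is a minimal pure-Python sketch of their textbook definitions; the function names are hypothetical, and the repository's own metric code may differ in detail, for example in whether the data are normalized before scoring.

```python
def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error between two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Because MSE squares each error, it penalizes large deviations more heavily than MAE, which is why the two are usually reported together.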

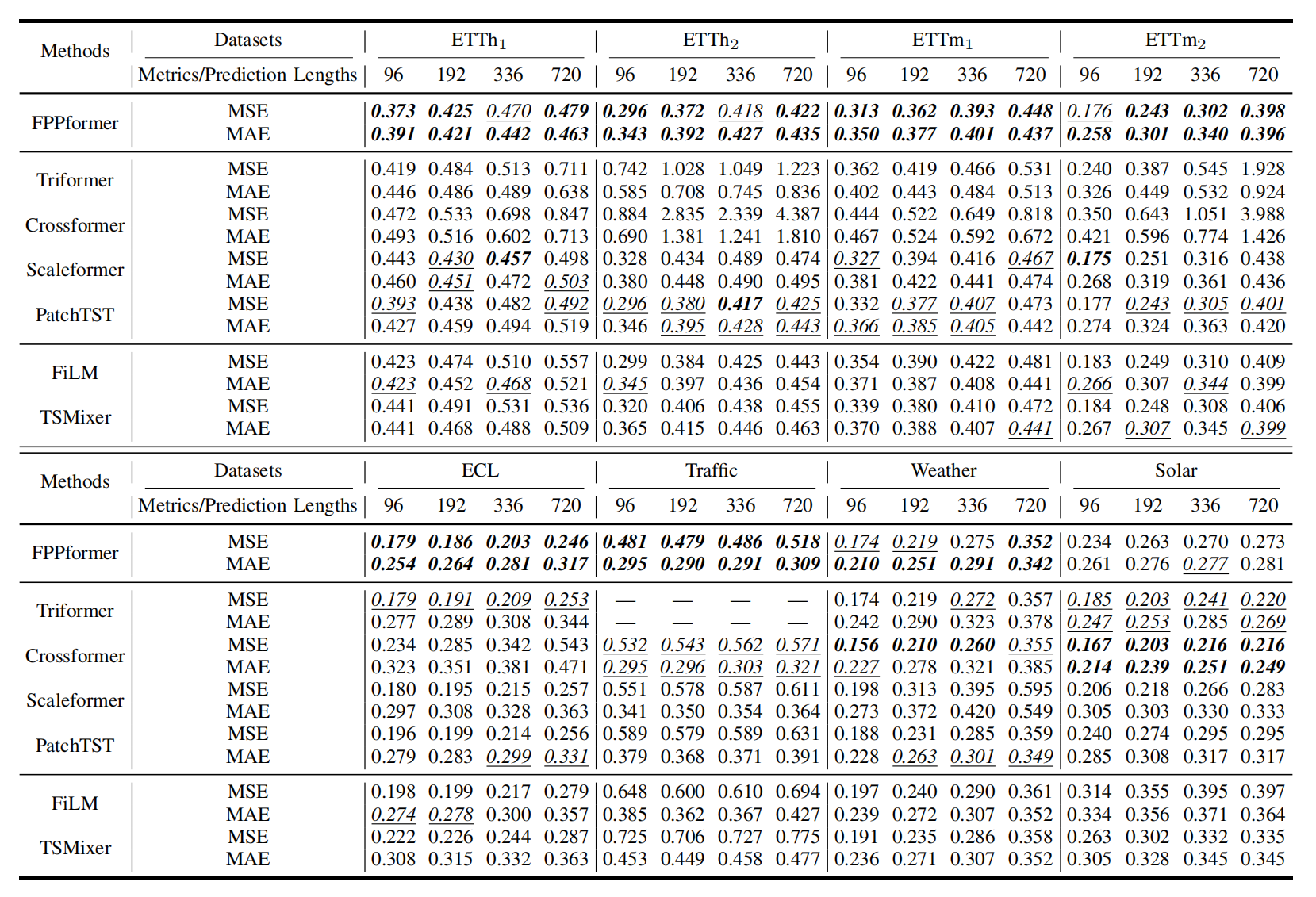

Figure 2. Multivariate forecasting results

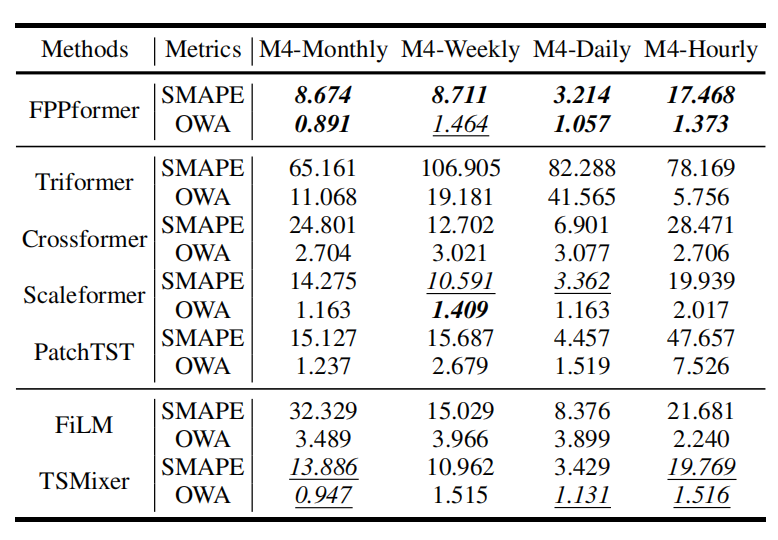

Figure 3. Univariate forecasting results

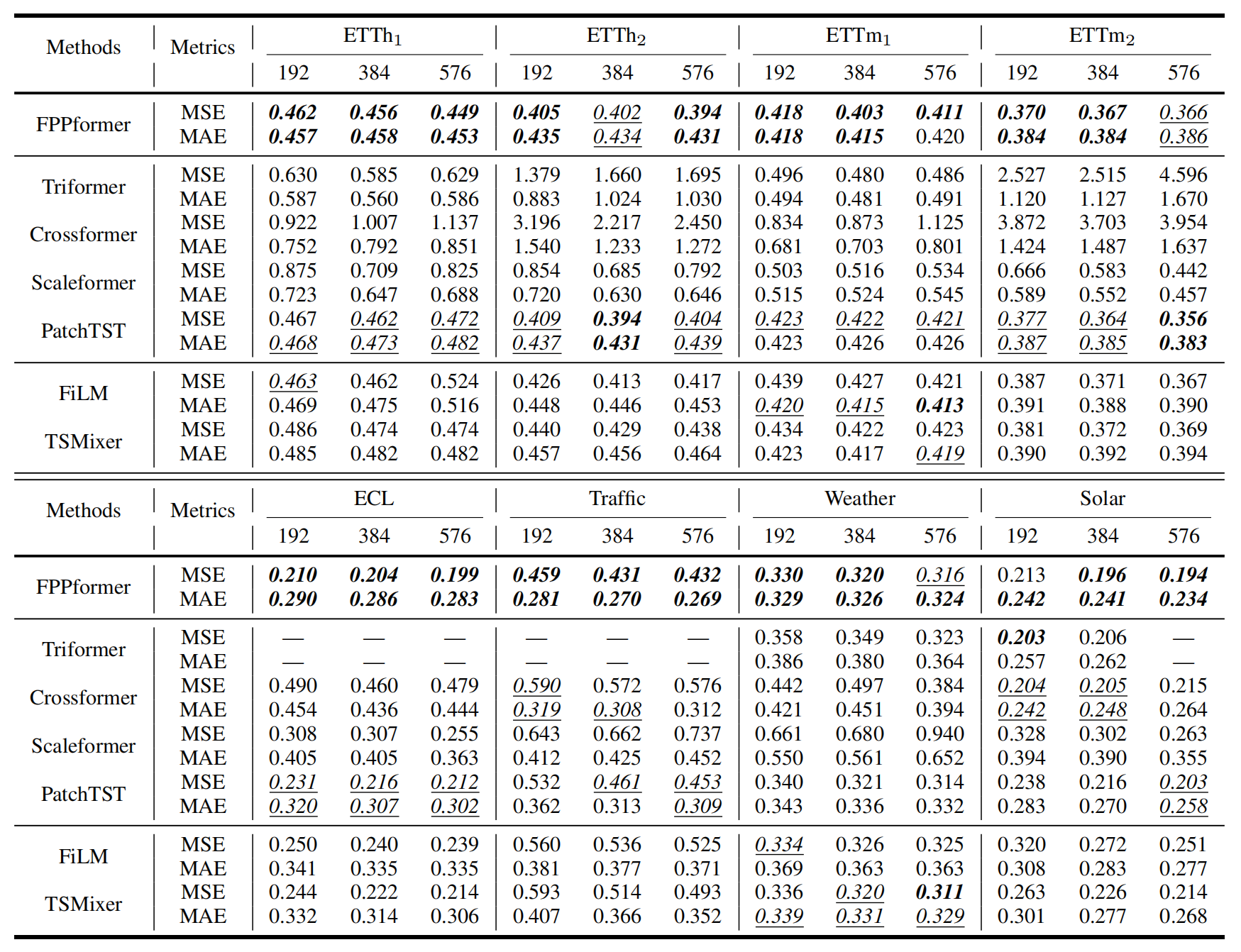

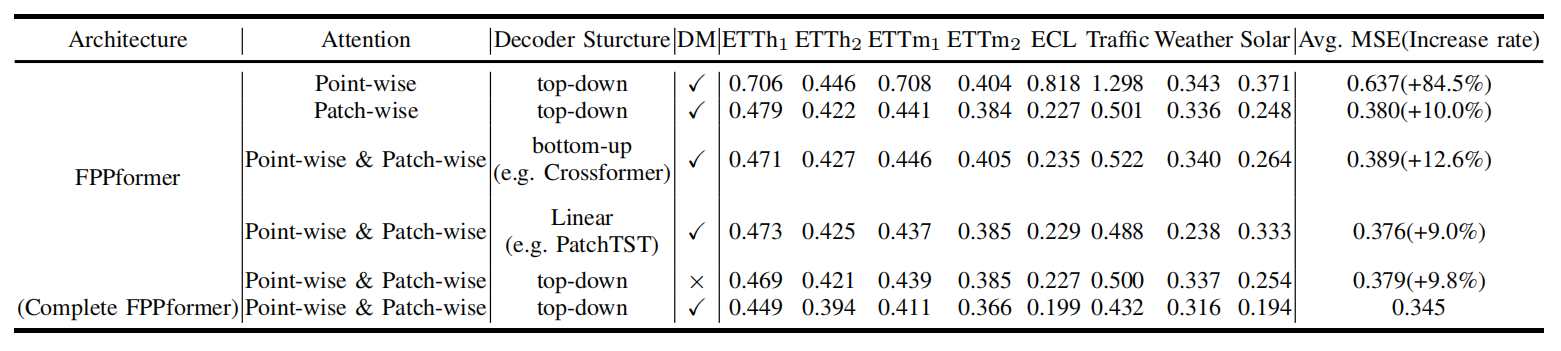

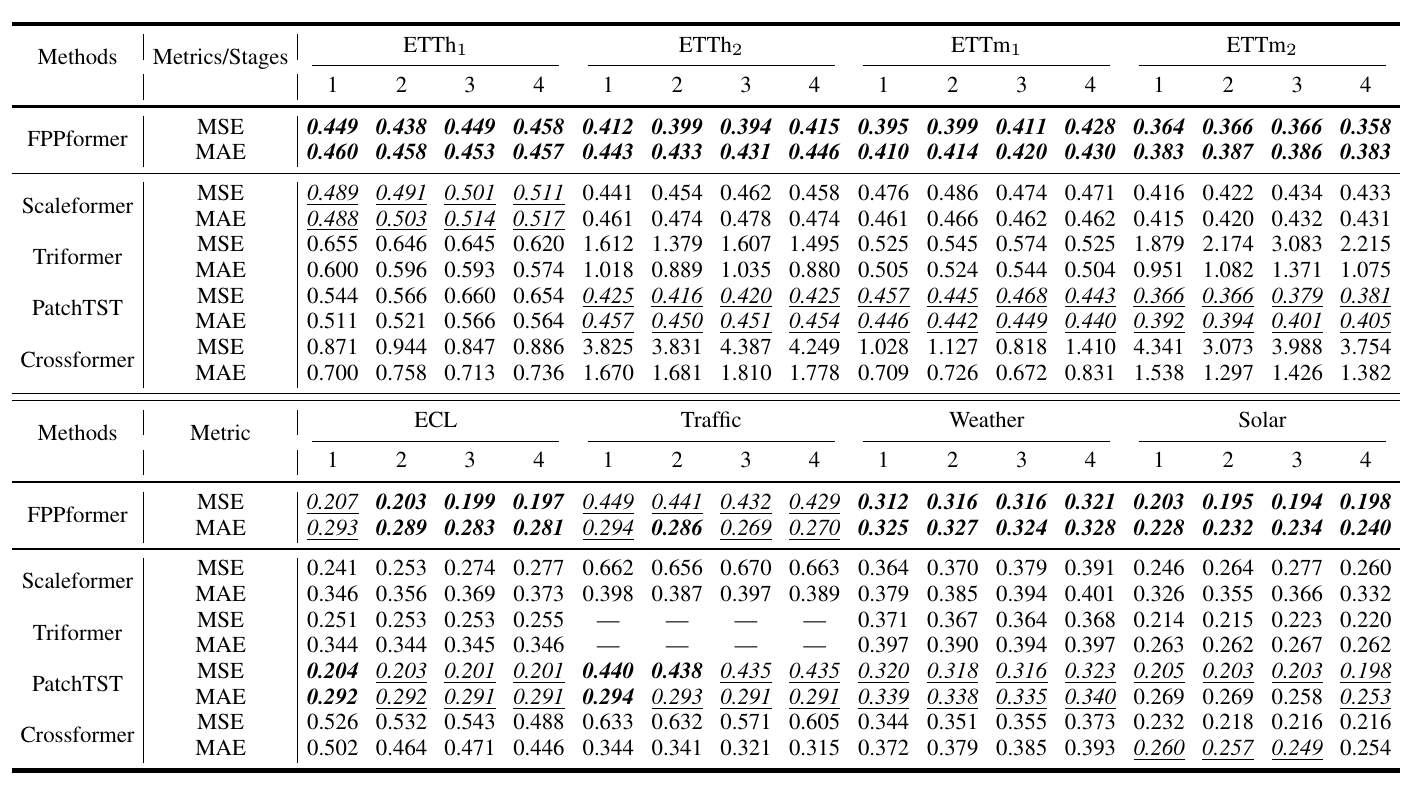

Moreover, we present the full results of multivariate forecasting results with long input sequence lengths in Figure 4, that of ablation study in Figure 5 and that of parameter sensitivity in Figure 6.

Figure 4. Multivariate forecasting results with long input lengths

Figure 5. Ablation results with the prediction length of 720

Figure 6. Results of parameter sensitivity on stage numbers

If you have any questions, feel free to contact Li Shen by email (shenli@buaa.edu.cn) or through GitHub issues. Pull requests are very welcome!