This is the official repository of Lost and Found: Overcoming Detector Failures in Online Multi-Object Tracking.

We propose BUSCA (Building Unmatched trajectorieS Capitalizing on Attention), a compact plug-and-play module that can be integrated into any online tracking-by-detection (TbD) tracker to enhance it. BUSCA is fully online and requires no retraining.

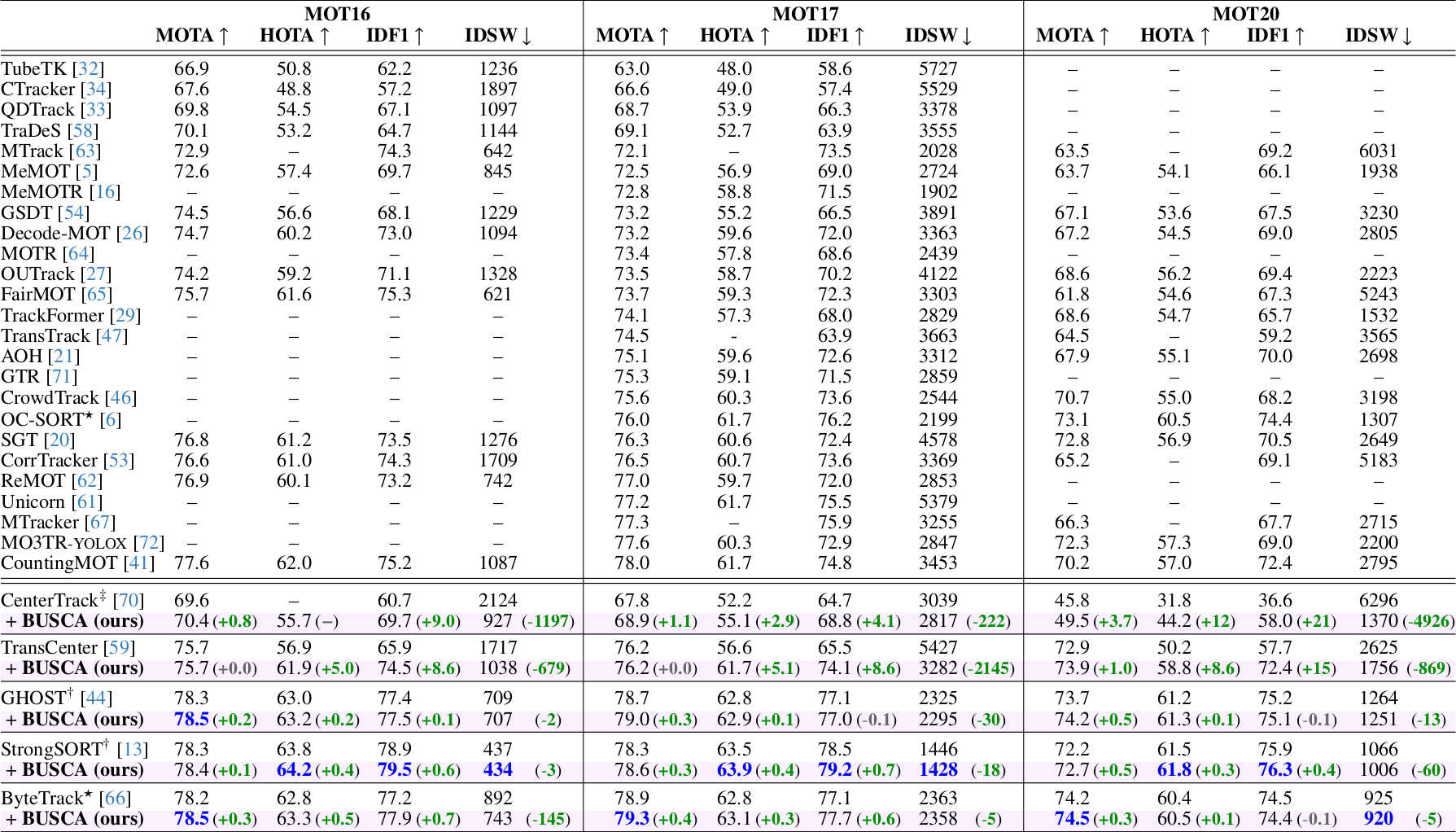

Multi-object tracking (MOT) endeavors to precisely estimate the positions and identities of multiple objects over time. The prevailing approach, tracking-by-detection (TbD), first detects objects and then links detections, resulting in a simple yet effective method. However, contemporary detectors may occasionally miss some objects in certain frames, causing trackers to cease tracking prematurely. To tackle this issue, we propose BUSCA, meaning 'to search', a versatile framework compatible with any online TbD system, enhancing its ability to persistently track those objects missed by the detector, primarily due to occlusions. Remarkably, this is accomplished without modifying past tracking results or accessing future frames, i.e., in a fully online manner. BUSCA generates proposals based on neighboring tracks, motion, and learned tokens. Utilizing a decision Transformer that integrates multimodal visual and spatiotemporal information, it addresses the object-proposal association as a multi-choice question-answering task. BUSCA is trained independently of the underlying tracker, solely on synthetic data, without requiring fine-tuning. Through BUSCA, we showcase consistent performance enhancements across five different trackers and establish a new state-of-the-art baseline across three different benchmarks.

You can download the BUSCA repository with the following commands:

git clone https://github.com/lorenzovaquero/BUSCA.git

cd BUSCA

The official code of BUSCA supports five different multi-object trackers (StrongSORT, ByteTrack, GHOST, TransCenter, and CenterTrack), but its design allows it to be integrated into any online tracking-by-detection tracker. You can choose which of them to download with the following commands:

git submodule update --init trackers/StrongSORT/ # https://github.com/dyhBUPT/StrongSORT

git submodule update --init trackers/ByteTrack/ # https://github.com/ifzhang/ByteTrack

git submodule update --init trackers/GHOST/ # https://github.com/dvl-tum/GHOST

git submodule update --init trackers/TransCenter/ # https://gitlab.inria.fr/robotlearn/TransCenter_official

git submodule update --init trackers/CenterTrack/ # https://github.com/xingyizhou/CenterTrack

BUSCA's dependencies are listed in requirements.txt. You can install them using either A) a Docker container or B) pip directly.

- A) Docker. The Dockerfile we provide contains all the dependencies for BUSCA and the five supported baseline trackers. To build and run the container, use the scripts build.sh and run_docker.sh:

  ./build.sh # Creates the image named "busca"

  ./run_docker.sh # Instantiates a container named "busca_container"

  Keep in mind that building the Docker image requires GPU access during the build (specifically, for TransCenter's MSDA and DCN). This can be achieved by using nvidia-container-runtime. You can find a short guide here and some troubleshooting tips here. If none of this works, you can still build the image and later install the TransCenter dependencies manually at runtime, inside the container itself (you will be prompted with a warning when you start the container).

- B) pip. The dependencies of BUSCA can be installed on the host machine with the following command (using a virtual environment such as venv or conda is recommended):

  pip3 install -r requirements.txt -f https://download.pytorch.org/whl/cu115 # You may select your specific version of CUDA

  Keep in mind that, if you follow the pip approach, you will need to manually install the dependencies for the trackers you want to use.

The trainable components of BUSCA are the appearance feature extractor and the Decision Transformer. If you want to use our pretrained weights, you can find them at the following Google Drive link.

Download them and place model_busca.pth in the folder models/BUSCA/motsynth/ and model_feats.pth in the folder models/feature_extractor/market1501/ (you may need to create these folders at the root of the repository). This can be done with the following commands:

mkdir -p models/BUSCA/motsynth

gdown 15LB6SPHtDc-4_fLQtRIzU1YWOTF6vNf -O models/BUSCA/motsynth/model_busca.pth # model_busca.pth

mkdir -p models/feature_extractor/market1501

gdown 1ZNU0yNkhMTlLRSOC0PR82SwK1ic9OJ8Y -O models/feature_extractor/market1501/model_feats.pth # model_feats.pth

You can download the MOT17 and MOT20 datasets from the official MOTChallenge website.

Each tracker expects a slightly different folder structure and data preprocessing (please check their respective repositories), but most of our scripts expect the data to be organized as follows (you can edit the path to the datasets folder in run_docker.sh):

/beegfs/datasets
├── MOT17
│   ├── test
│   └── train
└── MOT20
    ├── test
    └── train
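Before running any experiment, it may help to confirm that the pretrained weights and the datasets are where the scripts expect them. The following is a minimal sketch that is not part of the BUSCA repository: check_busca_setup is a hypothetical helper name, and it assumes the default paths given in this README (weights under models/ at the repository root, datasets under /beegfs/datasets with train and test splits for MOT17 and MOT20). Since each tracker may expect additional files, passing this check does not guarantee that a given tracker is fully set up.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helper; it is NOT shipped with the BUSCA repository.
# The default paths are the ones described in this README.
check_busca_setup() {
  local repo_root="${1:-.}"                 # root of the BUSCA checkout
  local data_root="${2:-/beegfs/datasets}"  # datasets folder (configurable in run_docker.sh)
  local status=0 w ds split

  # Pretrained weights, relative to the repository root.
  local weights=(
    "models/BUSCA/motsynth/model_busca.pth"
    "models/feature_extractor/market1501/model_feats.pth"
  )
  for w in "${weights[@]}"; do
    if [ -f "$repo_root/$w" ]; then
      echo "OK      $w"
    else
      echo "MISSING $w"
      status=1
    fi
  done

  # Expected dataset layout: <data_root>/{MOT17,MOT20}/{train,test}
  for ds in MOT17 MOT20; do
    for split in train test; do
      if [ -d "$data_root/$ds/$split" ]; then
        echo "OK      $ds/$split"
      else
        echo "MISSING $ds/$split"
        status=1
      fi
    done
  done

  # Non-zero exit status if anything is missing.
  return "$status"
}
```

Run it from the repository root, e.g. check_busca_setup . /beegfs/datasets; it prints one line per expected path and returns a non-zero exit status if anything is missing.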

To use BUSCA, you have to 1) download the base tracker, 2) set up the tracker and install its requirements, 3) apply BUSCA to it, and 4) run the experiment. You can choose your favorite tracker; in this example we will use StrongSORT.

You can download StrongSORT from its official repository using our git submodule.

git submodule update --init trackers/StrongSORT/ # https://github.com/dyhBUPT/StrongSORT

Each tracker has its own setup process and requirements. Please check their official repositories for more detailed steps.

In the case of StrongSORT, you first need to install its requirements (this step is not necessary if you are using the Docker image we provide).

Second, download its prepared data from Google Drive or Baidu Wangpan (code "sort"). You can do so with the following commands:

mkdir -p trackers/StrongSORT/Dataspace

gdown 1I1Sk6a1i377UhXykqN9jZmbvJLc6izbz -O trackers/StrongSORT/Dataspace/MOT17_ECC_val.json # MOT17_ECC_val.json

gdown 1zzzUROXYXt8NjxO1WUcwSzqD-nn7rPNr -O trackers/StrongSORT/Dataspace/MOT17_val_YOLOX+BoT --folder # MOT17_val_YOLOX+BoT

To apply BUSCA to a tracker, simply copy the files found in the adapters folder into the tracker's folder, overwriting the existing ones. For our example, you can do this for StrongSORT as follows:

cp -TRv adapters/StrongSORT trackers/StrongSORT

You can run the experiments for the different trackers using the scripts found in the scripts folder:

./scripts/run_strongsort.sh --dataset MOT17 --testset val

The results will be stored in the exp folder. Once computed, the tracking metrics for StrongSORT+BUSCA on MOT17-val should be as follows:

| Model | MOTA↑ | HOTA↑ | IDF1↑ | IDs↓ |
|---|---|---|---|---|
| StrongSORT | 76.174 | 69.289 | 81.864 | 234 |
| StrongSORT+BUSCA | 76.795 | 69.392 | 82.272 | 219 |
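To put the table in perspective, the per-metric differences between the two rows can be computed directly. The snippet below is an illustrative sketch (metric_deltas is a hypothetical helper name, not part of the repository); it only does arithmetic on the values copied from the table, with the columns in the same order as the header.

```shell
#!/usr/bin/env bash
# Hypothetical helper: per-metric difference (second row minus first row).
# Column order (MOTA HOTA IDF1 IDs) follows the table header.
metric_deltas() {
  local baseline="$1" with_busca="$2"
  awk -v base="$baseline" -v busca="$with_busca" 'BEGIN {
    n = split("MOTA HOTA IDF1 IDs", names, " ")
    split(base, b, " ")
    split(busca, c, " ")
    for (i = 1; i <= n; i++) {
      # IDs is a count, so print it as an integer; the other metrics are scores.
      if (names[i] == "IDs") printf "%s %+d\n", names[i], c[i] - b[i]
      else printf "%s %+.3f\n", names[i], c[i] - b[i]
    }
  }'
}

# Values copied from the StrongSORT and StrongSORT+BUSCA rows above.
metric_deltas "76.174 69.289 81.864 234" "76.795 69.392 82.272 219"
```

For these rows it gives +0.621 MOTA, +0.103 HOTA, +0.408 IDF1, and 15 fewer identity switches (IDs, where lower is better).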

You can measure the MOTA/HOTA/IDF1 metrics of the different trackers using the official code of TrackEval.

Coming soon! 🔜

- You can apply BUSCA to other trackers! We would be very happy if you could report your new results!

If you find BUSCA useful, please star the project and consider citing us as:

@inproceedings{Vaquero2024BUSCA,
  author    = {Lorenzo Vaquero and
               Yihong Xu and
               Xavier Alameda-Pineda and
               V{\'{\i}}ctor M. Brea and
               Manuel Mucientes},
  title     = {Lost and Found: Overcoming Detector Failures in Online Multi-Object Tracking},
  booktitle = {European Conf. Comput. Vis. ({ECCV})},
  pages     = {1--19},
  year      = {2024}
}