Yixin Chen · Sai Kumar Dwivedi · Michael J. Black · Dimitrios Tzionas

- [2023/09/11] Instance-level contact annotations are released for HOT. Check out the DATA.md page for more details.

- [2023/04/19] The HOT dataset and model checkpoints are released on the project website!

The code is developed under the following configurations.

- Hardware: >=4 GPUs for training, >=1 GPU for testing (set `[--gpus GPUS]` accordingly).
- Dependencies: pytorch, numpy, scipy, opencv, yacs, tqdm, etc.

```bash
pip3 install -r requirements.txt
```

The code base has been tested for training with the following PyTorch version, which supports CUDA compute capability sm_80:

```bash
pip3 install torch==1.11.0+cu113 torchvision==0.12.0+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html
```

- Data: download the HOT dataset from the project website and unzip it to `/path/to/dataset`. Set `DATASET.root_dataset` to `/path/to/dataset` in the config files in `./config/*.yaml`.
- The split files for HOT-Annotated (`'hot'`), HOT-Generated (`'prox'`), and the Full-Set (`'all'`) are located in `./data`. See DATA.md for more details.
- The training, validation, and testing splits used in an experiment are set in the config file via `DATASET.list_train`, `DATASET.list_val`, and `DATASET.list_test`.
- The instance-level contact annotations are released. They are more flexible to use and come with a processing code snippet. See DATA.md.
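The split-file format is documented in DATA.md rather than here. Assuming the files in `./data` follow the `.odgt` convention inherited from semantic-segmentation-pytorch (one JSON object per line), a split referenced by `DATASET.list_train` could be read with a sketch like the one below. The helper name `load_odgt` and the `fpath_img` field are illustrative assumptions, not confirmed by this README.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict, List


def load_odgt(path: str) -> List[Dict[str, Any]]:
    """Read a JSON-lines (.odgt) split file into a list of records.

    Hypothetical helper: assumes one JSON object per non-empty line,
    as in semantic-segmentation-pytorch.
    """
    records: List[Dict[str, Any]] = []
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                records.append(json.loads(line))
    return records


if __name__ == "__main__":
    # Demo on a throwaway file; "fpath_img" is an assumed field name.
    with tempfile.TemporaryDirectory() as tmp:
        split = Path(tmp) / "example.odgt"
        split.write_text(
            '{"fpath_img": "images/a.jpg"}\n\n{"fpath_img": "images/b.jpg"}\n',
            encoding="utf-8",
        )
        records = load_odgt(str(split))
        print(len(records), records[0]["fpath_img"])  # 2 images/a.jpg
```

Reading line by line keeps memory flat for large splits and tolerates blank lines at the end of the file.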

```bash
python train.py --gpus 0,1,2,3 --cfg config/hot-resnet50dilated-c1.yaml
```

To choose which GPUs to use, pass either a range (`--gpus 0-7`) or a comma-separated list (`--gpus 0,2,4,6`).
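The two `--gpus` forms can be expanded into device ids as in the illustrative sketch below. The function `parse_gpus` is hypothetical and is not the repository's own parser, which may accept other formats.

```python
from typing import List


def parse_gpus(spec: str) -> List[int]:
    """Expand a --gpus value such as "0-7" or "0,2,4,6" into GPU ids.

    Illustrative sketch: ranges are inclusive, duplicates are dropped,
    and the original order is kept.
    """
    gpus: List[int] = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas such as "0,1,"
        if "-" in part:
            start_str, end_str = part.split("-", 1)
            start, end = int(start_str), int(end_str)
            if start > end:
                raise ValueError(f"invalid GPU range: {part!r}")
            ids = list(range(start, end + 1))
        else:
            ids = [int(part)]
        for gpu in ids:
            if gpu not in gpus:
                gpus.append(gpu)
    return gpus


print(parse_gpus("0-7"))      # [0, 1, 2, 3, 4, 5, 6, 7]
print(parse_gpus("0,2,4,6"))  # [0, 2, 4, 6]
```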

`config/hot-resnet50dilated-c1.yaml` contains the default settings for 4-GPU training. Performance and training time vary with the settings; for example, we can reach slightly better performance by training with a larger `imgMaxSize` and a smaller `segm_downsampling_rate`.
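As a rough illustration of why these two settings trade accuracy for cost: in the upstream semantic-segmentation-pytorch training loader, each image is rescaled so that its short side matches a size sampled from the config without its long side exceeding `imgMaxSize`, padded to a multiple of a padding constant, and the label map is kept at `1/segm_downsampling_rate` of that resolution. The simplified sketch below assumes HOT keeps this behavior; the function names, the short side of 600, and the padding constant of 8 are illustrative assumptions.

```python
from typing import Tuple


def round_up(x: int, p: int) -> int:
    """Round x up to the nearest multiple of p."""
    return ((x - 1) // p + 1) * p


def train_sizes(
    width: int,
    height: int,
    short_size: int = 600,     # assumed target short side
    img_max_size: int = 1000,  # cf. imgMaxSize in the config
    padding: int = 8,          # assumed padding constant
    downsample: int = 8,       # cf. segm_downsampling_rate in the config
) -> Tuple[int, int, int, int]:
    """Return (img_w, img_h, label_w, label_h) for one training image.

    Simplified sketch modeled on semantic-segmentation-pytorch's loader.
    """
    scale = min(short_size / min(width, height), img_max_size / max(width, height))
    img_w = round_up(int(width * scale), padding)
    img_h = round_up(int(height * scale), padding)
    label_w = round_up(img_w, downsample) // downsample
    label_h = round_up(img_h, downsample) // downsample
    return img_w, img_h, label_w, label_h


# A 640x480 image: a larger imgMaxSize or a smaller downsampling rate
# yields a finer contact label map, at the cost of memory and time.
print(train_sizes(640, 480, img_max_size=700))                 # (704, 528, 88, 66)
print(train_sizes(640, 480, img_max_size=1000))                # (800, 600, 100, 75)
print(train_sizes(640, 480, img_max_size=1000, downsample=4))  # (800, 600, 200, 150)
```

Halving the downsampling rate quadruples the number of supervised label pixels, which is consistent with the note above that finer settings help slightly but cost more.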

The validation phase is currently not part of training; see this issue for more details.

Evaluate a trained model at a given epoch, e.g., epoch 14. Specify the dataset to evaluate in `DATASET.list_val` in the config file.

```bash
python3 eval_metric.py --cfg config/hot-resnet50dilated-c1.yaml --epoch 14
```
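Evaluating with `--epoch 14` requires the weights saved at that epoch. Assuming HOT keeps the upstream semantic-segmentation-pytorch naming (`encoder_epoch_<N>.pth` and `decoder_epoch_<N>.pth` inside the checkpoint directory, e.g. `./ckpt/hot-c1`), a quick pre-flight check could look like this. The helper names and the file pattern are assumptions, not confirmed by this README.

```python
from pathlib import Path
from typing import List


def checkpoint_files(ckpt_dir: str, epoch: int) -> List[Path]:
    """Return the expected encoder/decoder weight paths (assumed naming)."""
    root = Path(ckpt_dir)
    return [root / f"{part}_epoch_{epoch}.pth" for part in ("encoder", "decoder")]


def missing_checkpoints(ckpt_dir: str, epoch: int) -> List[Path]:
    """List expected checkpoint files that do not exist on disk."""
    return [p for p in checkpoint_files(ckpt_dir, epoch) if not p.is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints("./ckpt/hot-c1", 14)
    if missing:
        print("Missing:", ", ".join(str(p) for p in missing))
    else:
        print("All checkpoint files for epoch 14 are present.")
```

If the released checkpoints use a different naming scheme, only the f-string in `checkpoint_files` needs to change.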

To see the evaluation metrics, run:

```bash
python3 show_loss.py --cfg config/hot-resnet50dilated-c1.yaml
```

- Checkpoint: we release our model checkpoints on the project website. Download the checkpoints and put them in `./ckpt/hot-c1`.
- Put images in a folder, e.g., `./demo/test_images`, and generate the odgt file with `python3 demo/gen_test_odgt.py`.
- Run inference on the images and save the visualization results with:

```bash
python3 eval_inference.py --cfg config/hot-resnet50dilated-c1.yaml --epoch 14
```
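`demo/gen_test_odgt.py` is the supported way to build the demo image list. To illustrate what such a list typically contains, the sketch below writes one JSON object per image, assuming the upstream `.odgt` convention with an `fpath_img` key. The function `write_test_odgt` is hypothetical, and the real script may use different keys or extra fields such as image width and height.

```python
import json
import tempfile
from pathlib import Path
from typing import List

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def write_test_odgt(image_dir: str, out_path: str) -> List[str]:
    """Write one JSON line per image in image_dir and return the listed paths.

    Hypothetical stand-in for demo/gen_test_odgt.py, assuming an
    "fpath_img" key as in semantic-segmentation-pytorch.
    """
    # Collect image paths first, so the output file is never listed itself.
    paths = sorted(
        str(p) for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    with open(out_path, "w", encoding="utf-8") as f:
        for path in paths:
            f.write(json.dumps({"fpath_img": path}) + "\n")
    return paths


if __name__ == "__main__":
    # Self-contained demo on a throwaway folder with empty placeholder files.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("b.png", "a.jpg", "notes.txt"):
            (Path(tmp) / name).touch()
        listed = write_test_odgt(tmp, str(Path(tmp) / "test.odgt"))
        print([Path(p).name for p in listed])  # ['a.jpg', 'b.png']
```

Sorting the paths keeps the output order deterministic, which makes visualization results easier to match back to their inputs.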

@inproceedings{chen2023hot,

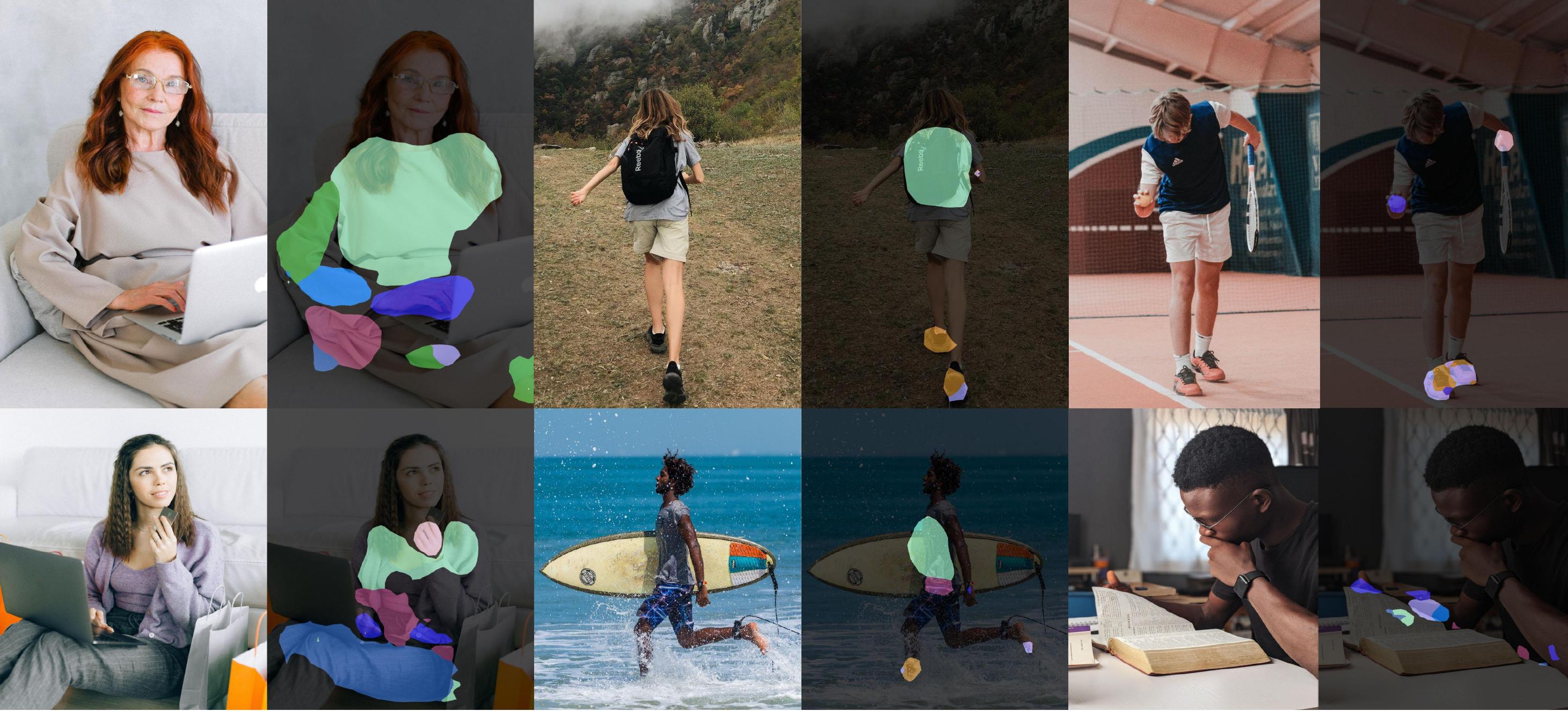

title = {Detecting Human-Object Contact in Images},

author = {Chen, Yixin and Dwivedi, Sai Kumar and Black, Michael J. and Tzionas, Dimitrios},

booktitle = {{Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}},

month = {June},

year = {2023}

}We thank:

- Chun-Hao Paul Huang for his valuable help with the RICH dataset and BSTRO detector's training code.

- Lea Müller, Mohamed Hassan, Muhammed Kocabas, Shashank Tripathi, and Hongwei Yi for insightful discussions.

- Benjamin Pellkofer for website design, IT, and web support.

- Nicole Overbaugh and Johanna Werminghausen for administrative help.

This code base is built upon semantic-segmentation-pytorch, and we thank its authors for their hard work.

This work was supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.

This code and model are available for non-commercial scientific research purposes as defined in the LICENSE file. By downloading and using the code and model, you agree to the terms in the LICENSE.

For technical questions, please contact ethanchen@g.ucla.edu

For commercial licensing, please contact ps-licensing@tue.mpg.de