Deep Learning Library

- ymd_util
  - C++20 compiler for ymd_util/MNIST.hh
    - std::endian
- C++17 compiler
  - structured bindings
  - fold expressions

See hello_MNIST.cc

- Include “DL_core.hh” (and other headers)

- Create Network by “>>” operator

auto DL = ymd::InputLayer<double>{5} >> ymd::ReLU{10} >> ymd::SoftMax_CrossEntropy{5};

- Back propagate by “<<” operator

DL << std::make_tuple(data,label)

- Update

DL.Update(eps,L1,L2)

- Splitting train and test data with ymd::split_train_test

- You can take the return values by structured binding

auto [train_X,train_y,test_X,test_y] = ymd::split_train_test(X,y,0.7);

- An adaptive gradient manager with a suitable shape can be made by NeuralNet::MakeAdaptive

- Shuffling the data set and mini-batch operation can be achieved with range adaptors

for(auto&& Batch: ymd::zip(train_data,train_label)
                  | ymd::adaptor::shuffle_view{}
                  | ymd::adaptor::sliding_window{batch_size,batch_size}){
  for(auto&& DataLabel: Batch){ DL << DataLabel; }
  DL.Update(adam,L1,L2);
}

- Multiple back propagations without an Update sum up the gradients.

- Weight Initialization

- He initialization (before ReLU)

- Glorot initialization (before Sigmoid, Identity_SquareError, and SoftMax_CrossEntropy)

- Only Adam is implemented as Adaptive Gradient Update

- No GPU acceleration
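The two weight initialization schemes listed above can be sketched as follows. This is a minimal illustration using the textbook definitions with a normal distribution (standard deviation sqrt(2/fan_in) for He, sqrt(2/(fan_in+fan_out)) for Glorot). The function names he_stddev, glorot_stddev, and init_weights are hypothetical and are not part of this library; whether the library uses exactly these variants is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// He initialization: standard deviation sqrt(2 / fan_in), commonly used before ReLU.
inline double he_stddev(std::size_t fan_in){
  assert(fan_in > 0);
  return std::sqrt(2.0 / static_cast<double>(fan_in));
}

// Glorot (Xavier) initialization: standard deviation sqrt(2 / (fan_in + fan_out)).
inline double glorot_stddev(std::size_t fan_in, std::size_t fan_out){
  assert(fan_in + fan_out > 0);
  return std::sqrt(2.0 / static_cast<double>(fan_in + fan_out));
}

// Fill a fan_in x fan_out weight matrix (stored row-major in a flat vector)
// with samples from a normal distribution N(0, stddev^2).
inline std::vector<double> init_weights(std::size_t fan_in, std::size_t fan_out,
                                        double stddev, std::mt19937& rng){
  std::normal_distribution<double> dist{0.0, stddev};
  std::vector<double> w(fan_in * fan_out);
  for(auto& x : w){ x = dist(rng); }
  return w;
}
```

For a 5-input, 10-unit ReLU layer one would call init_weights(5, 10, he_stddev(5), rng) with a seeded std::mt19937.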

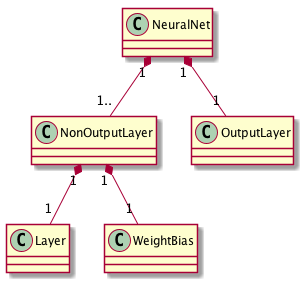

The NeuralNet class has NonOutputLayer and OutputLayer members. These layer classes are stored in a std::tuple<...> and managed by static polymorphism.

The NonOutputLayer class has a Layer class and a WeightBias class. Since the back propagation of weights and biases requires the activated values as well as the gradient propagated from the next layer, these two classes are managed together as the NonOutputLayer class.

To make the API as simple and recognizable as possible, we use operator overloading.

auto DL = ymd::InputLayer<double>{5} >> ymd::ReLU{10} >> ymd::SoftMax_CrossEntropy{5};

To avoid typing the value type (double above) multiple times, the helper classes that define layers (e.g. ymd::ReLU) do not carry the value type. Instead, the overloaded operator >> extracts it from the already created NeuralNet class or from the initial ymd::InputLayer.
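The idea of carrying the value type along the >> chain can be sketched with a few stand-in types. Everything below (InputLayer, ReLU, ReLULayer, Net, and their members) is a simplified, hypothetical model written for this illustration; it is not the code in DL_core.hh.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <utility>

// Hypothetical stand-ins, not the library's real classes.
template<typename T>
struct InputLayer { using value_type = T; std::size_t size; };

// Layer "recipe": carries only the number of units, not the value type.
struct ReLU { std::size_t size; };

// Concrete layer, created once the value type T is known.
template<typename T>
struct ReLULayer { std::size_t in, out; };

template<typename T, typename... Layers>
struct Net {
  using value_type = T;
  std::size_t out_size;          // size of the last layer
  std::tuple<Layers...> layers;  // heterogeneous layer storage
};

// First link of the chain: T is taken from the input layer.
template<typename T>
auto operator>>(InputLayer<T> in, ReLU r){
  assert(r.size > 0);
  return Net<T, ReLULayer<T>>{r.size, std::make_tuple(ReLULayer<T>{in.size, r.size})};
}

// Later links: T is taken from the network built so far.
template<typename T, typename... Layers>
auto operator>>(Net<T, Layers...> net, ReLU r){
  assert(r.size > 0);
  auto layers = std::tuple_cat(std::move(net.layers),
                               std::make_tuple(ReLULayer<T>{net.out_size, r.size}));
  return Net<T, Layers..., ReLULayer<T>>{r.size, std::move(layers)};
}
```

With these definitions, InputLayer<double>{5} >> ReLU{10} >> ReLU{3} yields a Net<double, ReLULayer<double>, ReLULayer<double>> although double was written only once.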

The operator << looks like a backward operation, so I decided to use it for back propagation. Since back propagation requires both data and label, they are passed as a std::tuple<Data,Label>.
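As a self-contained illustration of this interface, the sketch below models a one-parameter network whose operator << accumulates gradients until Update is called. TinyModel and its members are hypothetical and only mimic the behavior described in this README (several back propagations without an Update sum up the gradients); the real implementation lives in DL_core.hh.

```cpp
#include <cassert>
#include <tuple>

// Hypothetical one-parameter model y = w * x with loss 0.5 * (w*x - y)^2.
struct TinyModel {
  double w = 0.0;     // the single weight
  double grad = 0.0;  // gradient summed over all pending samples

  // "Back propagation": add the gradient of the loss w.r.t. w for one sample.
  TinyModel& operator<<(const std::tuple<double,double>& data_label){
    const auto [x, y] = data_label;  // structured binding (C++17)
    grad += (w * x - y) * x;
    return *this;
  }

  // Apply the summed gradient with learning rate eps, then reset it.
  void Update(double eps){
    assert(eps > 0.0);
    w -= eps * grad;
    grad = 0.0;
  }
};
```

Calling m << std::make_tuple(1.0,2.0) and m << std::make_tuple(2.0,4.0) before a single m.Update(eps) therefore behaves like a mini-batch of two samples.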

DL << std::make_tuple(data,label);

The std::make_tuple can be removed by using ymd::zip for data iteration. (See hello_polynomial.cc)

for(auto&& DataLabel: ymd::zip(data,label)){
  DL << DataLabel;
}

In C++, a function can return only a single value. Traditionally, we pass an argument by reference and update its value. Since C++11, however, std::tuple can wrap multiple values of different types, so std::make_tuple can simulate multiple return values. The returned value can be received with std::tie, which makes a tuple of references to its arguments.

auto F(double a,int b){ return std::make_tuple(a,b); }

int main(){
  double a;
  int b;
  std::tie(a,b) = F(0.5,2);
}

Unfortunately, this requires declaring the variables beforehand. Moreover, we cannot deduce their types with auto.

Structured binding (from C++17) solves this inconvenience.

auto [a,b] = F(0.5,2);

In usual dynamic polymorphism, pointers to classes derived from an interface class are managed in a unified way. This is achieved with virtual functions: in typical implementations each instance holds a pointer to its class's vtable, which records the function addresses and is consulted every time a virtual function is called. Because the call target is resolved at run time, compilers usually cannot inline such calls or optimize across them.
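For comparison, the dynamic approach described above looks roughly like the following. LayerBase, Scale, Relu, and run_dynamic are hypothetical names chosen for this sketch and do not appear in this library.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Interface class: every call to forward() goes through the vtable.
struct LayerBase {
  virtual ~LayerBase() = default;
  virtual double forward(double x) const = 0;
};

struct Scale : LayerBase {
  double k;
  explicit Scale(double k_) : k{k_} {}
  double forward(double x) const override { return k * x; }
};

struct Relu : LayerBase {
  double forward(double x) const override { return x > 0.0 ? x : 0.0; }
};

// Layers of different derived types are handled uniformly through base pointers.
inline double run_dynamic(const std::vector<std::unique_ptr<LayerBase>>& layers, double x){
  for(const auto& l : layers){
    assert(l != nullptr);
    x = l->forward(x);  // target resolved at run time
  }
  return x;
}
```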

By using std::tuple (from C++11), parameter packs (from C++11), generic lambdas (from C++14), fold expressions (from C++17), and so on, different classes that have members with the same names can be treated as in dynamic polymorphism, but resolved at compile time.
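A minimal sketch of this static approach, assuming unrelated layer types that merely share the member names forward and param_count. StaticNet, make_net, Scale, and Relu are hypothetical names for this illustration; the library's own code differs.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>

// Unrelated types: no common base class, only the same member names.
struct Scale {
  double k;
  double forward(double x) const { return k * x; }
  std::size_t param_count() const { return 1; }
};

struct Relu {
  double forward(double x) const { return x > 0.0 ? x : 0.0; }
  std::size_t param_count() const { return 0; }
};

template<typename... Layers>
struct StaticNet {
  std::tuple<Layers...> layers;

  // Apply every layer in order; the comma fold evaluates left to right.
  double forward(double x) const {
    std::apply([&x](const auto&... l){ ((x = l.forward(x)), ...); }, layers);
    return x;
  }

  // Sum a value over all layers with a binary left fold.
  std::size_t param_count() const {
    return std::apply([](const auto&... l){
      return (std::size_t{0} + ... + l.param_count());
    }, layers);
  }
};

// Helper that deduces the layer types (aggregates have no CTAD in C++17).
template<typename... Layers>
auto make_net(Layers... layers){
  return StaticNet<Layers...>{std::make_tuple(layers...)};
}
```

Every call target is known at compile time here, so the compiler is free to inline the whole chain.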

Since the values in a std::tuple have different types, we cannot access them with the subscript operator [].

Here we achieve this with std::apply (from C++17), fold expressions (from C++17), and generic lambdas (from C++14). The following are some examples from DL_core.hh.

Feed forward with operator >>.

friend auto operator>>(layer_type input,NeuralNet<Layers...>& nn){
  return std::apply([&](auto&&...l){ return (input >> ... >> l); },nn.layers);
}

Back propagate with operator <<.

auto operator<<(layer_type real){
  std::apply([&](auto&&...l){ (l << ... << real); },layers);
}

Call the update member function and sum up the return values.

void Update(value_type eps,value_type L1,value_type L2){
  std::apply([=](auto&...l){ (... + l.update(eps,L1,L2)); },layers);
}

std::apply passes the values in a std::tuple to a function as its arguments. Even though the types and the number of the values are unknown, a generic lambda with a variadic parameter ([](auto...v){ }) can take all the values, packed in the parameter pack. The packed parameters are unpacked with the ... operator as follows:

- Comma-separated expansion in function, constructor, and template arguments

f(v...) -> f(v1,v2,v3,...)

- Sequential binary operation (fold expression)

(... + v) -> (((v1 + v2) + v3) + ...)
(v + ...) -> (... + (v3 + (v4 + v5)))
(init + ... + v) -> ((((init + v1) + v2) + v3) + ...)
(v + ... + init) -> (... + (v3 + (v4 + (v5 + init))))
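The difference between the four fold forms only becomes visible with a non-associative operator. The sketch below uses subtraction instead of addition for that reason; the function names are made up for this illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Comma-separated expansion: v... becomes v1,v2,v3 inside the braces.
template<typename... V>
constexpr auto to_array(V... v){ return std::array<int, sizeof...(V)>{v...}; }

// Unary left fold: ((v1 - v2) - v3). Ill-formed for an empty pack.
template<typename... V>
constexpr auto unary_left(V... v){ return (... - v); }

// Unary right fold: (v1 - (v2 - v3)). Ill-formed for an empty pack.
template<typename... V>
constexpr auto unary_right(V... v){ return (v - ...); }

// Binary left fold: (((init - v1) - v2) - v3).
template<typename I, typename... V>
constexpr auto binary_left(I init, V... v){ return (init - ... - v); }

// Binary right fold: (v1 - (v2 - (v3 - init))).
template<typename I, typename... V>
constexpr auto binary_right(I init, V... v){ return (v - ... - init); }

static_assert(unary_left(1, 2, 3) == -4);       // (1 - 2) - 3
static_assert(unary_right(1, 2, 3) == 2);       // 1 - (2 - 3)
static_assert(binary_left(10, 1, 2, 3) == 4);   // ((10 - 1) - 2) - 3
static_assert(binary_right(10, 1, 2, 3) == -8); // 1 - (2 - (3 - 10))
```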

C# generics must be valid for every possible type argument, whereas C++ templates do not have to be. In C++, when template argument substitution fails, the template is simply removed from the overload set without an error (SFINAE: Substitution Failure Is Not An Error).

The type_traits header (from C++11) has many useful class templates such as std::enable_if, std::common_type, std::is_same and so on. (The XXX_t alias templates (from C++14) and the XXX_v variable templates (from C++17) are shorthands for XXX::type and XXX::value, respectively.)
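A few compile-time checks make the relation between the traits and their _t / _v shorthands concrete. add_common is a hypothetical helper written only for this sketch.

```cpp
#include <cassert>
#include <type_traits>

// XXX_t is an alias for XXX::type.
static_assert(std::is_same<std::common_type_t<int, double>,
                           std::common_type<int, double>::type>::value);

// XXX_v is a variable template for XXX::value.
static_assert(std::is_same_v<int, int> == std::is_same<int, int>::value);

// std::common_type picks the type both arguments convert to.
static_assert(std::is_same_v<std::common_type_t<int, double>, double>);

// std::enable_if<true, T>::type is T.
static_assert(std::is_same_v<std::enable_if_t<true, int>, int>);

// Hypothetical helper: add two values in their common type.
template<typename A, typename B>
constexpr auto add_common(A a, B b) -> std::common_type_t<A, B> {
  return a + b;
}
```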

Here is an example from DL_core.hh.

template<typename Adaptive,
         std::enable_if_t<!std::is_same_v<std::remove_reference_t<Adaptive>,
                                          value_type>,std::nullptr_t> = nullptr>
auto Update(Adaptive& a,value_type L1,value_type L2){
  return ymd::zip_for_each([=](auto&& l,auto&& a_){ return l.update(a_,L1,L2); },
                           layers,a);
}

void Update(value_type eps,value_type L1,value_type L2){
  std::apply([=](auto&...l){ (... + l.update(eps,L1,L2)); },layers);
}

For backward compatibility, the original argument value_type eps must still be accepted after adaptive update was implemented.

When you call DL.Update(0.01,0.0,0.0), the upper candidate template fails to instantiate because its second template parameter becomes std::enable_if_t<false,std::nullptr_t>, which does not exist: std::enable_if<false,T> has no member type (only std::enable_if<true,T> defines one).
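The same overload pattern can be tried in isolation. The sketch below reduces the network to a single weight; Net, Adam, Path, and the member rate are hypothetical stand-ins, and only the enable_if construction mirrors the excerpt above.

```cpp
#include <cassert>
#include <cstddef>
#include <type_traits>

// Hypothetical stand-in for an adaptive gradient manager.
struct Adam { double rate = 0.001; };

enum class Path { plain, adaptive };

struct Net {
  using value_type = double;
  value_type w = 1.0;

  // Adaptive overload: removed from the overload set (SFINAE)
  // whenever Adaptive is deduced as value_type itself.
  template<typename Adaptive,
           std::enable_if_t<!std::is_same_v<std::remove_reference_t<Adaptive>,
                                            value_type>,
                            std::nullptr_t> = nullptr>
  Path Update(Adaptive& a){
    w -= a.rate;
    return Path::adaptive;
  }

  // Original overload taking a plain learning rate.
  Path Update(value_type eps){
    w -= eps;
    return Path::plain;
  }
};
```

Passing a double selects the plain overload, while passing an Adam object selects the adaptive one.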

The second template argument of std::enable_if becomes the member type std::enable_if::type. We set it to std::nullptr_t, the type of nullptr, which prevents users from setting the template parameter unintentionally. (We assume that users very rarely pass nullptr as a template argument.)

There is a similar idiom named the enabler idiom:

extern void* enabler;

template<typename T,
         typename std::enable_if<std::is_integral<T>::value>::type*& = enabler>
void f(T t){ }

- Mac OS X 10.13.6

- g++ (MacPorts gcc8 8.2.0_0) 8.2.0

- g++ -O3 -march=native -Wa,-q -fdiagnostics-color=auto -std=c++2a

In terms of class architecture, convolutional networks and batch normalization can be achieved by replacing the WeightBias class, which manages the affine transformation and its back propagation.

In terms of implementation, there are some design choices:

- Make the NonOutputLayer class a template of the weight and bias type (affine, convolutional, and batch normalization)
  - Pros
    - Reduces implementation outside weight and bias.
  - Cons
    - How can the template parameter be specified in the simple API?
- Make a new class, something like NonOutputConvolutionalLayer
  - Pros
    - (Maybe) does not need complicated TMP.
  - Cons
    - Needs to implement all the functions in order to be compatible with the NonOutputLayer class.
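The first design choice could look roughly like the sketch below. It is only one conceivable shape, not a plan taken from this library: Affine, Conv1D, and this simplified NonOutputLayer are hypothetical, and the default template argument is merely one possible way to keep the common case short.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical weight policy: fully connected (affine) transformation.
template<typename T>
struct Affine {
  std::vector<std::vector<T>> w;  // w[j][i]: input i -> output j
  std::vector<T> b;               // one bias per output
  std::vector<T> forward(const std::vector<T>& x) const {
    assert(w.size() == b.size());
    std::vector<T> y(b);
    for(std::size_t j = 0; j < w.size(); ++j){
      assert(w[j].size() == x.size());
      for(std::size_t i = 0; i < x.size(); ++i){ y[j] += w[j][i] * x[i]; }
    }
    return y;
  }
};

// Hypothetical weight policy: 1D "valid" convolution with a shared bias.
template<typename T>
struct Conv1D {
  std::vector<T> kernel;
  T b;
  std::vector<T> forward(const std::vector<T>& x) const {
    assert(!kernel.empty() && kernel.size() <= x.size());
    std::vector<T> y(x.size() - kernel.size() + 1, b);
    for(std::size_t j = 0; j < y.size(); ++j){
      for(std::size_t k = 0; k < kernel.size(); ++k){ y[j] += kernel[k] * x[j + k]; }
    }
    return y;
  }
};

// The layer is a template of the weight type; Affine is the default.
template<typename T, template<typename> class Weight = Affine>
struct NonOutputLayer {
  Weight<T> weight;
  std::vector<T> forward(const std::vector<T>& x) const {
    auto y = weight.forward(x);
    for(auto& v : y){ if(v < T{0}){ v = T{0}; } }  // ReLU activation
    return y;
  }
};
```

Only the weight policy changes between NonOutputLayer<double> and NonOutputLayer<double, Conv1D>; the activation code is written once.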

Algorithms for serializing the parameters and identifying the network shape are necessary.

C++17 standardized parallel algorithms in the standard library; however, at the time of writing, neither gcc nor clang had implemented them yet. (VC++ had.)

Vectorized operation (SIMD) and parallel operation (multi-thread) can be easily implemented as follows:

std::for_each(std::execution::par_unseq,v.begin(),v.end(),[](auto& x){ x *= 2; });

The first argument is an execution policy that allows parallel and/or vectorized operation. Many functions in <algorithm>, <numeric>, and <memory> have overloads that take an execution policy.