- The notebook was originally developed on Kaggle: https://www.kaggle.com/code/yogendrayatnalkar/finetuning-segment-anything

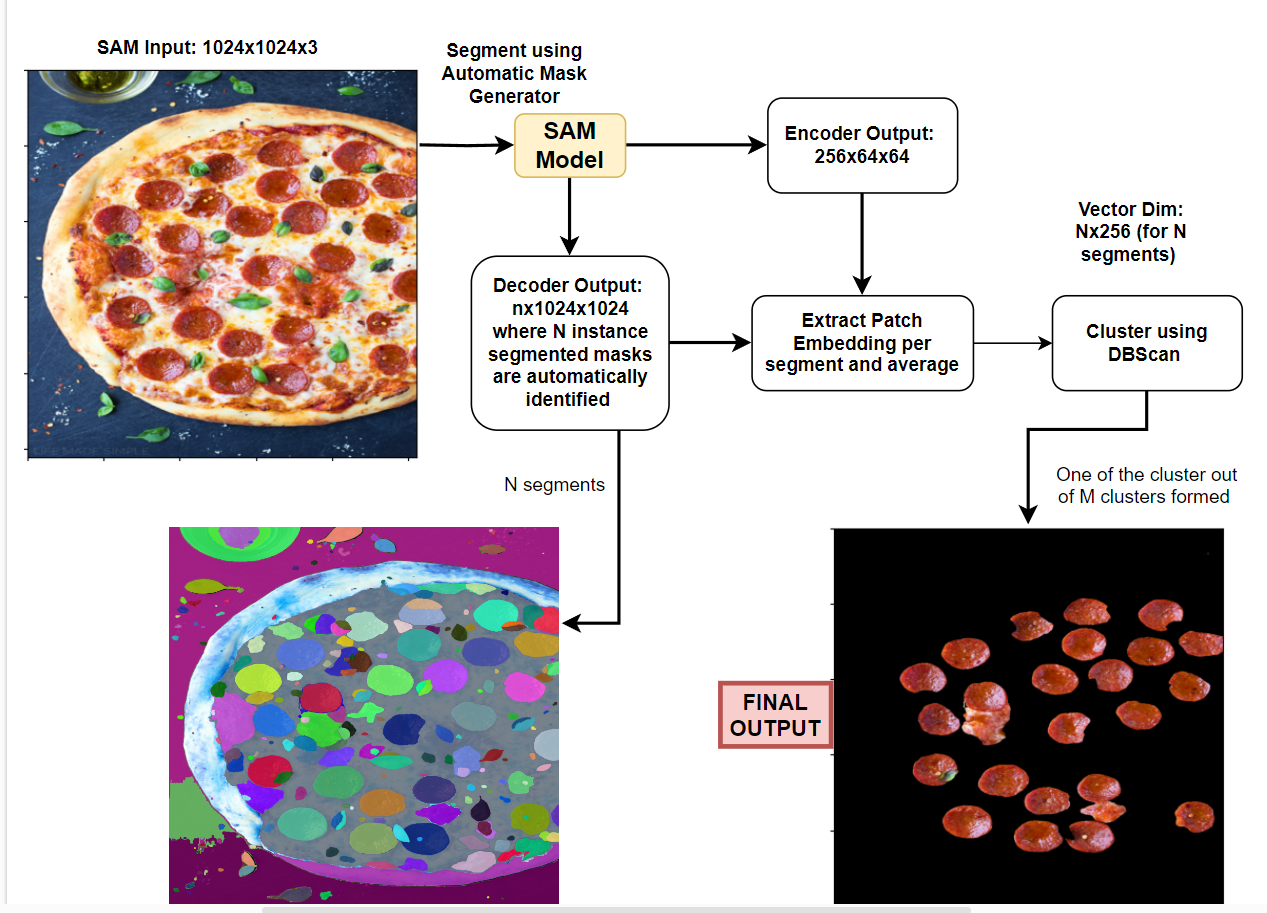

Task: Semantically segment the objects in an image automatically with Meta AI's Segment Anything Model (SAM), without prompts or training.

1. Automatic Mask Generation (AMG)

Use the Segment Anything Model (SAM) from Meta AI's SAM repository to run automatic mask generation over the entire image. This produces class-agnostic instance masks that identify and isolate the individual objects in the image.
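A minimal sketch of this step, assuming the `segment_anything` package from Meta AI's repository is installed and a checkpoint has been downloaded (the checkpoint path and the `vit_h` model type below are placeholders). `SamAutomaticMaskGenerator.generate` returns a list of dicts, one per mask, with the boolean mask stored under `"segmentation"`; `stack_masks` is a hypothetical helper that collects those masks for the later steps.

```python
import numpy as np


def generate_masks(image_rgb, checkpoint_path, model_type="vit_h"):
    """Run SAM automatic mask generation on an HxWx3 uint8 RGB image."""
    # Imported here so the rest of the sketch runs without SAM installed.
    from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    mask_generator = SamAutomaticMaskGenerator(sam)
    # One dict per mask: "segmentation" (bool HxW), "area", "bbox", "predicted_iou", ...
    return mask_generator.generate(image_rgb)


def stack_masks(records):
    """Hypothetical helper: stack the AMG masks into an (N, H, W) boolean array."""
    return np.stack([np.asarray(r["segmentation"], dtype=bool) for r in records])


# Example (placeholder checkpoint path):
# masks = stack_masks(generate_masks(image_rgb, "sam_vit_h_4b8939.pth"))
```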

2. Patch-Embedding Extraction & Single Representation per Instance-Segment

- For each generated instance segment, extract the embeddings of the patches it covers. A patch embedding is the encoder's compact vector representation of the visual features in one image patch.

- Each instance segmentation mask must have exactly one embedding (a single representation), so that every clustered data point maps back to exactly one mask. This one-to-one mapping is what allows the semantic class of a cluster to be assigned accurately to individual objects.

- To achieve this, extract all the patch embeddings from the SAM image encoder's features and average them per segment.
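The per-segment averaging can be sketched in NumPy, assuming the encoder features have already been rearranged into a 64x64x256 grid and each segment has already been mapped to a 64x64 boolean patch mask (`average_patch_embeddings` is a hypothetical name). Multiplying the flattened masks by the flattened features sums each segment's member embeddings in a single matrix product; dividing by each segment's patch count turns those sums into means.

```python
import numpy as np


def average_patch_embeddings(features, patch_masks):
    """Hypothetical helper: one averaged embedding per segment.

    features:    (64, 64, 256) array, one 256-d vector per 16x16 patch
    patch_masks: (N, 64, 64) boolean array, True where a patch belongs to segment i
    returns:     (N, 256) array, one mean vector per segment
    """
    h, w, c = features.shape
    flat_feats = features.reshape(h * w, c)               # (64*64, 256) for SAM's grid
    flat_masks = patch_masks.reshape(len(patch_masks), h * w).astype(features.dtype)
    counts = flat_masks.sum(axis=1, keepdims=True)        # number of patches per segment
    # Row i of the product is the sum of segment i's patch embeddings;
    # dividing by the count gives the mean (count clamped to 1 to avoid 0/0).
    return (flat_masks @ flat_feats) / np.maximum(counts, 1)
```

Segments that cover no patch come out as all-zero vectors here because the count is clamped to 1, so such segments should be filtered out before clustering.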

3. Clustering for Semantic Segmentation

Treating each segment as a distinct data point, apply clustering algorithms to group similar segments together. Each resulting cluster represents a semantic segmentation class, encompassing objects with shared visual characteristics.
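A sketch of the clustering step with scikit-learn's `DBSCAN`, assuming the segment embeddings are stacked into an N x 256 array. Cosine distance and the `eps`/`min_samples` defaults are illustrative choices, not values taken from the original notebook, and would need tuning.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def cluster_segments(segment_embeddings, eps=0.1, min_samples=2):
    """Cluster an (N, 256) array of segment embeddings with DBSCAN.

    Returns one integer label per segment; -1 marks segments DBSCAN
    considers noise (not close enough to any dense group).
    """
    # Cosine distance compares embedding directions; eps and min_samples
    # are illustrative defaults (assumptions) that need tuning per dataset.
    model = DBSCAN(eps=eps, min_samples=min_samples, metric="cosine")
    return model.fit_predict(np.asarray(segment_embeddings, dtype=float))
```

Each distinct non-negative label then plays the role of one semantic class, so `len(set(labels) - {-1})` gives the number of classes found.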

- Extract all patch embeddings from the SAM image encoder.

- The SAM image encoder takes a 1024x1024-pixel input (images are resized so that the longest side is 1024 pixels and then padded).

- The default patch size is 16x16 pixels.

- Therefore, the input can be viewed as 64x64x16x16: a 64x64 grid of patches, each 16x16 pixels (with 3 color channels per pixel).

- The SAM image-encoder output is 256x64x64 (channels first).

- After transposing the channel axis to the last position, the output becomes 64x64x256.

Observe the input-output transformation:

64x64x16x16 --> 64x64x256

This means that each 16x16 patch is represented by a 256-dimensional embedding vector.
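The shape bookkeeping can be checked with NumPy on dummy arrays. In the `segment_anything` package, `SamPredictor.get_image_embedding()` returns the encoder output as a 1x256x64x64 tensor once `set_image` has been called; random data stands in for it here, so no model is needed.

```python
import numpy as np

IMG_SIZE = 1024              # SAM's input resolution
PATCH = 16                   # ViT patch size
GRID = IMG_SIZE // PATCH     # 1024 / 16 = 64 patches per side

# Input view: split a 1024x1024x3 image into a 64x64 grid of 16x16x3 patches.
image = np.zeros((IMG_SIZE, IMG_SIZE, 3), dtype=np.uint8)
patches = image.reshape(GRID, PATCH, GRID, PATCH, 3).transpose(0, 2, 1, 3, 4)
print(patches.shape)         # (64, 64, 16, 16, 3)

# Encoder output: channels first (256, 64, 64); move the channel axis last.
encoder_out = np.random.rand(256, GRID, GRID).astype(np.float32)
features = encoder_out.transpose(1, 2, 0)
print(features.shape)        # (64, 64, 256)

# Patch (row, col) covers pixels [16*row : 16*row + 16, 16*col : 16*col + 16]
# and is described by the 256-d vector features[row, col].
```

With the real PyTorch tensor, the same rearrangement would be `embedding.squeeze(0).permute(1, 2, 0)`.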

- For each segment, find the corresponding patches and average their embedding vectors.

- For example, if Meta SAM's Automatic Mask Generator (AMG) identifies 30 segments, iterate through each segment and:

  - Identify the patches it covers and gather their embeddings. For instance, if segment1 covers 3 patches, its patch embeddings form a 3x256 matrix; if segment2 covers 10 patches, its patch embeddings form a 10x256 matrix.

  - Average the patch embeddings of that segment to obtain a single embedding vector. As a result, segment1's embedding vector is 1x256, segment2's embedding vector is 1x256, and so on.

- This yields one 1x256 embedding vector per segment. For 30 segments, the representation is 30x256.

- Cluster the segment embedding vectors with a clustering algorithm such as DBSCAN. DBSCAN suits this task because it does not require the number of clusters (semantic classes) to be known in advance, and it labels outlier segments as noise.

- Each resulting cluster represents a distinct semantic class.
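A sketch of the patch lookup and the final relabeling, assuming 1024x1024 boolean pixel masks (masks for non-square images would first need the same resize-and-pad that SAM applies to the image). The rule that a patch belongs to a segment when at least half of its pixels are covered is an assumption, as are both helper names; `labels` stands for per-segment cluster labels such as those DBSCAN returns, with `-1` for noise.

```python
import numpy as np

PATCH = 16  # SAM ViT patch size in pixels


def masks_to_patch_masks(pixel_masks, min_coverage=0.5):
    """Hypothetical helper: map (N, H, W) pixel masks to (N, H/16, W/16) patch masks.

    A patch is assigned to a segment when at least `min_coverage` of its pixels
    lie inside the mask (an assumed rule). Segments too small to reach that
    threshold anywhere fall back to every patch they touch.
    """
    n, h, w = pixel_masks.shape
    blocks = pixel_masks.reshape(n, h // PATCH, PATCH, w // PATCH, PATCH)
    coverage = blocks.mean(axis=(2, 4))          # fraction of each patch covered
    patch_masks = coverage >= min_coverage
    for i in range(n):
        if not patch_masks[i].any():
            patch_masks[i] = coverage[i] > 0
    return patch_masks


def paint_semantic_map(pixel_masks, labels):
    """Hypothetical helper: write each segment's cluster label into an (H, W) map.

    Pixels outside every mask stay -1, as do pixels of segments labeled -1
    (DBSCAN noise). Where masks overlap, later masks overwrite earlier ones.
    """
    semantic = np.full(pixel_masks.shape[1:], -1, dtype=int)
    for mask, label in zip(pixel_masks, labels):
        semantic[mask] = label
    return semantic
```

Because later masks overwrite earlier ones, sorting the masks by descending area before painting keeps small objects visible on top of the large segments that contain them.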